The Principles of Planning and Implementing Microservices

See the trees for the forest. Get practical and actionable insights into what you need to know to plan and implement microservices effectively.


Planning a microservices-based application: where should you start? This architecture has many aspects; how do you break down such a decoupled architecture? I hope that after reading this article, the picture will be clearer.

The Gist

- Why?
  - The reasoning for choosing microservices.
- What to consider?
  - The planning considerations before moving to microservices (versioning, service discovery and registry, transactions, and resourcing).
- How?
  - Implementation practices (resilience, communication patterns).
- Who?
  - Understanding the data flow (logging, monitoring, and alerting).

A viable microservices solution depends on the technical platform and its supporting tools. This article, however, focuses on the technical foundations and principles without diving into the underlying tools and products that carry out the solution itself (Docker, Kubernetes or another container orchestration tool, API gateway, authentication, etc.).

While writing this article, I noticed that each of the parts deserves a dedicated article; nevertheless, I chose to group them all into one article to see the forest, not only the trees.

1. The Principles Behind Microservices

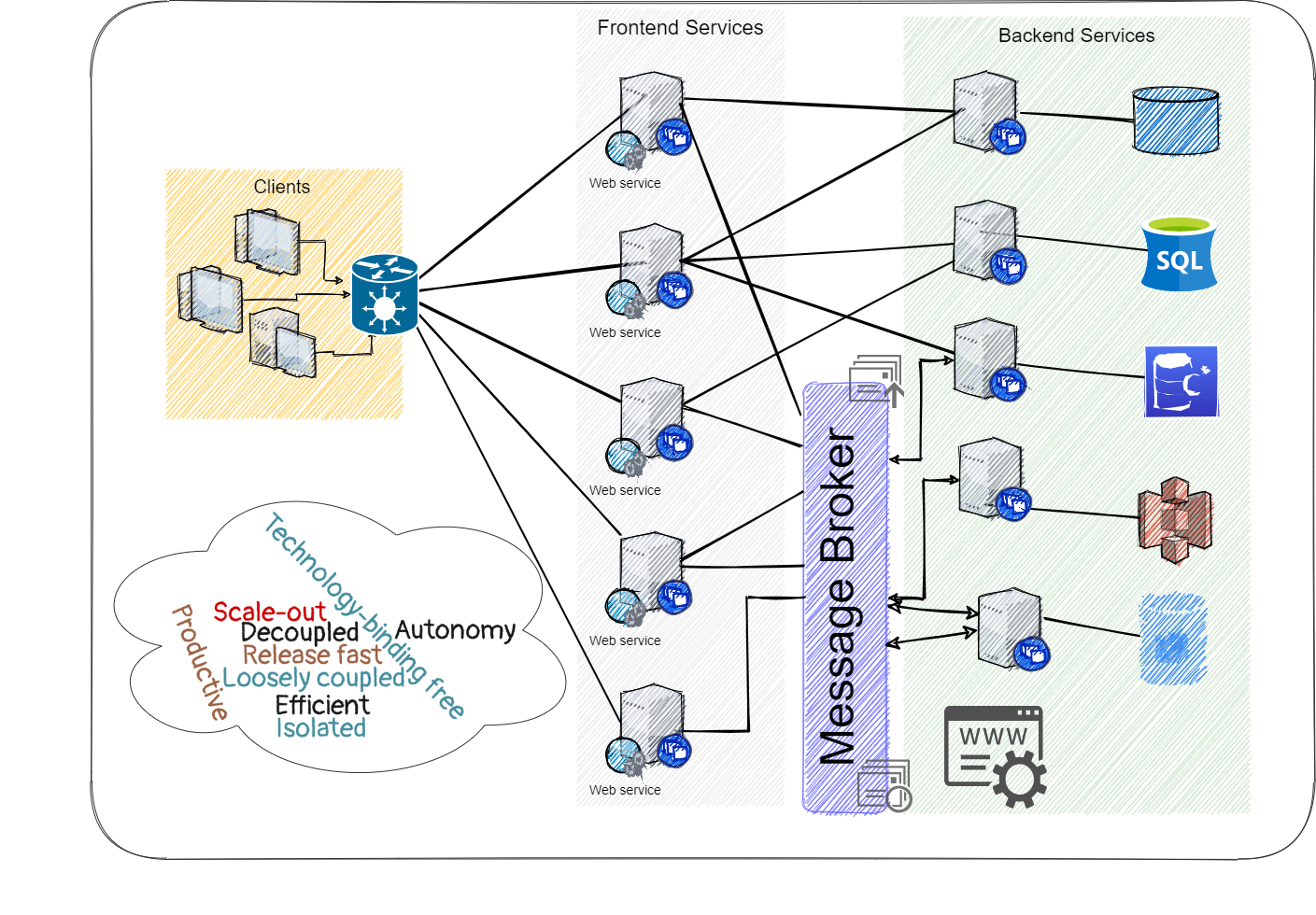

A single application is composed of many small services; each service runs in its own process and communicates with other services over the network through some form of inter-process communication, discussed later in Part 3.

This decoupling allows more flexibility than a monolithic application, since each service can be developed, deployed, and maintained independently of the other services that compose the application.

Microservices have three main characteristics:

Domain-Driven Design (DDD)

Each service is a separate component that does only one thing and can be upgraded independently of the other services. The service is built to handle a specific domain and declares its boundaries accordingly; these boundaries are exposed as a contract, which is typically implemented as an API. The system can be decomposed into domains based on the data domain (model), business functionality, or the need for atomic and consistent data transactions.

DDD concepts apply not only to the technology but also to the development team: each microservice can be implemented, tested, and deployed by a different team.

Loose Coupling, High Cohesion

Changing one microservice does not impact the others, so a change in the solution's behavior can be made in one place while leaving the other parts intact. Nevertheless, the services communicate extensively with each other, and the system operates as one cohesive application.

Continuous Integration, Continuous Delivery

CI/CD facilitates testing in a microservices architecture. By making extensive use of automation, we gain fast feedback on the quality of the solution throughout the whole SDLC (software development lifecycle), which allows releasing mature and stable versions more frequently.

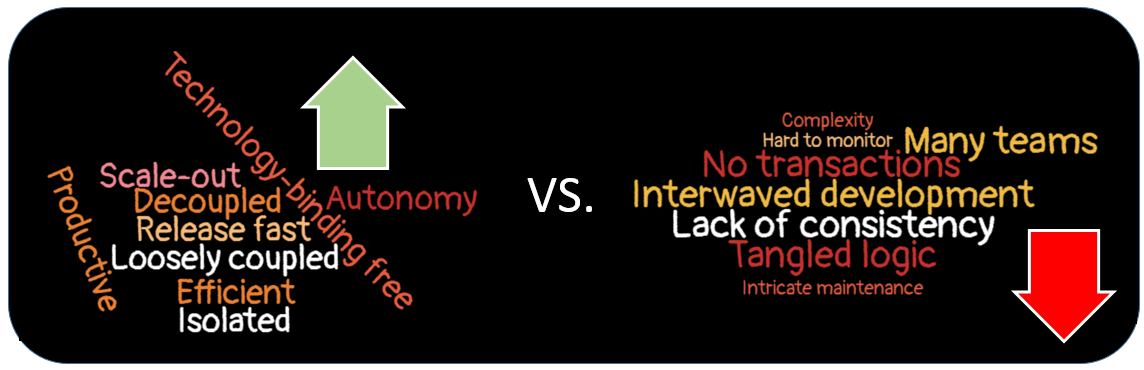

Why Microservices?

The main objective of moving to a microservices solution is to develop fast, test easily, and release more frequently; in short, to shorten features' time-to-market while reducing risk. In a monolith, a change is not isolated from the rest of the application layers, so every change may have a huge impact on the system's stability. As a result, SDLC agility suffers: releasing a new feature requires more testing time and more cautious releases, and the overall time-to-market grows longer.

To summarize, the advantages of a microservices solution are:

- It is easy to develop a small-scale service that focuses on one main use case; its logic is easier to understand and maintain.

- Dividing the work between teams is simpler, since each service can be developed entirely by a different team.

- Each service starts up faster than a monolithic application.

- Scaling out and in is effective and controlled. Adding service instances when the load rises is simple, and similarly, when the load is low, the number of running instances can be reduced. This makes utilization of the underlying hardware more efficient.

- Troubleshooting can be isolated to a single service once the source of the problem is known, and resolving it is then a targeted, surgical fix.

- Technology binding is limited to the service level. Each service can be developed in a different technology from the others, which allows matching the best technology to the use case and bypassing the constraint of using the same programming language as the rest of the system. It also allows adopting newer technologies: if the system is written in an outdated version of Java, a new service can be written in a newer version of Java or even in another language, such as Scala.

Microservices — Not Only Benefits

When you have a hammer, everything looks like a nail. This sentence is relevant for a microservice-based solution too. Along with the advantages, there are some drawbacks:

- Understanding and developing a distributed system as a whole is complex. Designing and implementing inter-service communication can involve various protocols and products. This complexity is embedded in each phase of the SDLC, from design through maintenance and monitoring.

- Since each service manages one main use case or domain, an operation that must be atomic may span several services (unless the microservices are designed around data-consistency boundaries), and implementing its rollback across several services is more complicated. The solution should adapt to and embrace the eventual consistency concept (described in detail later).

- Due to the granularity of services, a single operation might require many requests, which can be inefficient and increase latency. Although there are ways to reduce the number of calls the client sends (see the section about GraphQL), developers might drift and inadvertently implement logic with more requests than needed.

After reviewing these drawbacks, the next step is to see how to mitigate them as much as possible during the planning and implementation stages.

2. Considerations for Building a Microservices-based Application

After reviewing the incentives to build a microservices solution, the next step is to discuss the considerations during the design and planning stages, which lay the foundations for the implementation.

To deploy a service in a microservices solution, we need to know which service to deploy, its version, the target environment, how to publish it, and how it communicates with other services.

Let’s review the considerations in the planning stage:

Service Templates — Standardization

Since each service is decoupled from the rest, development teams can easily drift apart and build each service differently. This has its toll: a lack of standardization costs more in testing, deploying, and maintaining the system. The benefits of using standards are:

- Creating services faster; the scaffold is already there.

- Familiarization with services is easier.

- Enforcing best-practices as part of the development phase.

Standardization should cover the API the service exposes, its response structure, and its error handling. One way to enforce such guidelines is a service template utility, which can be tailored to the development practices you define. For example:

- Dropwizard (Java), Governator (Java)

- Cookiecutter (Python)

- Jersey (REST API)

For more service template tools, please refer to reference [1].
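To make "standardized response structure and error handling" concrete, here is a minimal sketch of the kind of response envelope a service template might bake into every service. The field names (`status`, `data`, `error`, `service_version`) and the helpers `ok` and `fail` are hypothetical choices for illustration, not part of any of the tools listed above.

```python
import json
from dataclasses import asdict, dataclass
from typing import Any, Optional


@dataclass
class ErrorInfo:
    code: str     # machine-readable error code, e.g. "ORDER_NOT_FOUND" (hypothetical)
    message: str  # human-readable description


@dataclass
class ApiResponse:
    """Hypothetical envelope shared by every service generated from the template."""
    status: int                        # mirrors the HTTP status code
    data: Optional[Any] = None         # payload on success
    error: Optional[ErrorInfo] = None  # populated only on failure
    service_version: str = "0.0.0"     # version of the responding service

    def to_json(self) -> str:
        # asdict() also converts the nested ErrorInfo dataclass into a dict
        return json.dumps(asdict(self))


def ok(data: Any, version: str) -> ApiResponse:
    return ApiResponse(status=200, data=data, service_version=version)


def fail(status: int, code: str, message: str, version: str) -> ApiResponse:
    return ApiResponse(status=status, error=ErrorInfo(code, message), service_version=version)
```

Because every service returns the same shape, consumers can share a single parser and a single error-handling path, which is the kind of saving the standardization argument points at.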

Versioning

Each service should have a version that is updated in every release. Versioning makes it possible to identify a service and deploy a specific version of it. It also lets the service's consumers know when the service has changed, which helps avoid breaking the existing contract and the communication between them.

Different versions of the same service can coexist. With that, the old version's migration to the new version can be gradual without having too much impact on the whole application.
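As a sketch of how coexisting versions can be used during a gradual migration, the snippet below routes each consumer to the newest deployed version it is compatible with. It assumes semantic versioning (MAJOR.MINOR.PATCH, where only a major bump breaks the contract); the article does not prescribe a versioning scheme, and the instance addresses are hypothetical.

```python
from typing import Dict, Optional, Tuple

Version = Tuple[int, int, int]


def parse_version(text: str) -> Version:
    """Parse 'MAJOR.MINOR.PATCH' into a tuple of ints."""
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch


def is_compatible(required: str, offered: str) -> bool:
    """Assuming semantic versioning: same major version, and not older than required."""
    req, off = parse_version(required), parse_version(offered)
    return req[0] == off[0] and off >= req


def pick_instance(required: str, deployed: Dict[str, str]) -> Optional[str]:
    """Return the address of the newest compatible deployed version, or None.

    `deployed` maps a version string to a (hypothetical) instance address.
    """
    candidates = [v for v in deployed if is_compatible(required, v)]
    if not candidates:
        return None
    return deployed[max(candidates, key=parse_version)]
```

With `{"1.2.0": ..., "1.4.1": ..., "2.0.0": ...}` deployed side by side, a consumer built against 1.3.0 keeps talking to 1.4.1, while consumers that have migrated to the 2.x contract reach 2.0.0; once no consumer needs 1.x, those instances can be retired.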

Documentation

In a microservices environment, many small services communicate constantly, so it is easy to lose track of what a service does or how to use its API.

Documentation helps here, but keeping it valid and up to date is a tedious and time-consuming task, so it naturally tends to fall to the bottom of a developer's task list. Therefore, automation is preferable to manual documentation (readme files, notes, procedures). Various tools codify and automate documentation so that it stays current while the code continues to change; Swagger UI and API Blueprint, for example, can generate a web UI for your microservices' APIs, which eases orientation. Once again, standardization is advantageous: Swagger implements the OpenAPI Specification, which is an industry standard.

Service Discovery

After discussing some code development aspects, let's discuss what to plan for when adding a new service to the system.

When a new service is deployed, it and its counterpart services must be able to find each other to establish communication. Service discovery allows services to locate and communicate with each other, and it also makes it easier to monitor each service individually.

Service discovery is built on a service registry solution, which maintains the network locations of all service instances. Service discovery can be implemented in one of two ways: static and dynamic.

Static Service Discovery

In a DNS solution, an IP address is associated with a name, so a service has a unique address through which clients can find it. DNS is a well-known standard, which is an advantage. However, if the environment is dynamic and services' addresses change frequently, DNS may not be the best choice, since updating DNS entries can be painful, and how quickly clients stop using an old IP address depends on the record's TTL value.

Furthermore, clients may cache DNS resolutions in memory, which leads to stale state. Placing load balancers as a buffer between the service and its consumers is one way to bypass this problem, but it may complicate the overall solution if there is no actual need to maintain load balancers.

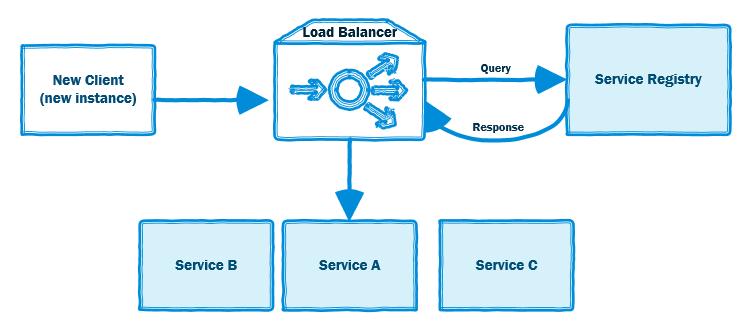

Dynamic Service Discovery

The traditional approach of reading services' addresses from a configuration file (local or somewhere on the network) is not flexible enough, since changes happen frequently. Instances are assigned network locations dynamically, so maintaining a central configuration is inefficient and hardly practical. To solve this problem, there are two dynamic service discovery approaches: client-side discovery and server-side discovery. First, we describe how services are discovered, and then how they are registered into the system.

Client-side Discovery

In this dynamic discovery approach, the client queries the service registry for instances of other services. After obtaining this information, the client is responsible for choosing among the returned network locations and deciding how to send its requests, either through load balancers or directly.

This approach has several advantages:

- The service registry and the service instances are the only actors. The client retrieves the information from the service registry and can then communicate directly with the other services.

- The client decides which route to the other services is the most efficient, for example, which instance or load balancer to use.

- A request requires only a few network hops.

However, the disadvantages of the client-side discovery approach are:

- The clients are coupled to the service registry; without it, new clients may be blind to the existence of other services.

- The client-side routing logic has to be implemented in every service. This becomes costly when services use different tech stacks, since the same logic must be reimplemented in each technology.
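The client-side discovery flow can be sketched in a few lines. In this illustration, `ServiceRegistry` is an in-memory stand-in for a real registry (such as Consul or Eureka), and the client balances load itself with a simple round-robin choice. Both classes and the addresses are hypothetical; this is exactly the routing logic that, as noted above, every client would have to reimplement in its own tech stack.

```python
from typing import Dict, List


class ServiceRegistry:
    """In-memory stand-in for a real service registry (hypothetical)."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = {}

    def register(self, service: str, address: str) -> None:
        self._instances.setdefault(service, []).append(address)

    def lookup(self, service: str) -> List[str]:
        return list(self._instances.get(service, []))


class DiscoveryClient:
    """Client-side discovery: query the registry, then pick an instance locally."""

    def __init__(self, registry: ServiceRegistry) -> None:
        self._registry = registry
        self._counters: Dict[str, int] = {}

    def resolve(self, service: str) -> str:
        instances = self._registry.lookup(service)
        if not instances:
            raise LookupError(f"no instances registered for {service!r}")
        # Round-robin: the client, not a load balancer, spreads the requests.
        count = self._counters.get(service, 0)
        self._counters[service] = count + 1
        return instances[count % len(instances)]
```

Round-robin is only one possible policy; because the decision lives in the client, it could equally prefer the instance with the lowest observed latency.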

Server-side Discovery

The second dynamic discovery approach is server-side discovery. In this approach, the client is simpler, as it communicates only with a load balancer. The load balancer interacts with the service registry and routes the client's request to an available service instance. This approach is used by Kubernetes and AWS ELB (Elastic Load Balancer).

The main advantage of this approach is that it relieves the client from figuring out the network, so it can focus on communicating with other services without handling the “how.” The drawback is the need for an additional component (the load balancer) that communicates with the service registry; however, some platforms already provide this functionality built in.

For further reading about service discovery, please refer to reference [2].

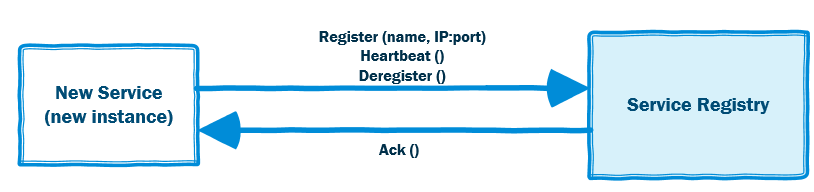

Service Registries

We discussed how a service discovers the other services on the network and communicates with them. This section focuses on how services are registered into the system. In essence, the service registry is a database containing the network locations of all service instances. Although this information can be cached on the client side, the registry must be up to date, consistent, and highly available so that services can communicate as soon as they start.

There are two patterns of service registration:

- Self-Registration

As the name implies, the new instance itself is responsible for registering and deregistering. As part of its start-up process, the service sends a message to the service registry: “hi, I’m here.” While the service is alive, it sends heartbeat messages to notify the registry that it is still functioning, so that its entry does not expire. Finally, before the service terminates, it sends a goodbye message.

Similar to client-side discovery, the main advantage of this approach is its simplicity: the implementation lies solely in the service itself. However, this is also a disadvantage, since the service instances are coupled to the service registry. If there are several tech stacks, each one must implement the registration, keep-alive, and deregistration logic.

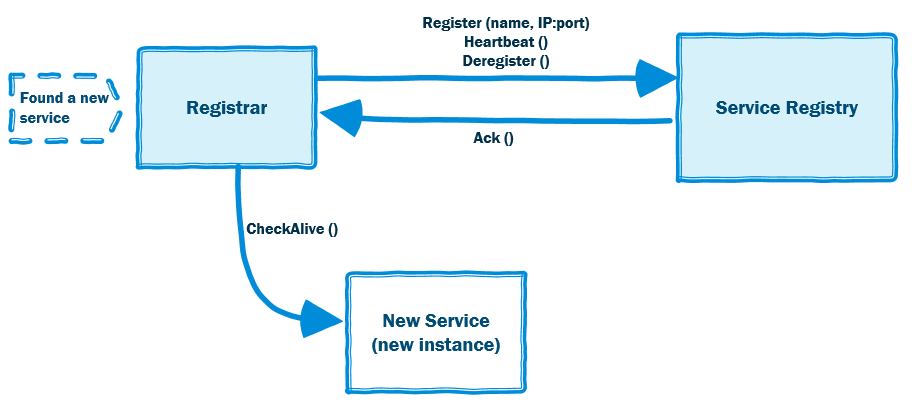
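The self-registration lifecycle ("hi, I'm here", heartbeats, goodbye) can be sketched as below. `TtlRegistry` is a hypothetical in-memory registry that drops instances whose last heartbeat is older than a TTL; the clock is injectable so the expiry behavior can be demonstrated without waiting. Real registries expose their own APIs for these steps, so treat every name here as illustrative.

```python
import time
from typing import Callable, Dict, List, Tuple


class TtlRegistry:
    """Hypothetical registry: an instance is listed only while its heartbeats stay fresh."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._last_seen: Dict[Tuple[str, str], float] = {}

    def register(self, service: str, address: str) -> None:
        self._last_seen[(service, address)] = self._clock()

    def heartbeat(self, service: str, address: str) -> None:
        self.register(service, address)  # refreshes the timestamp

    def deregister(self, service: str, address: str) -> None:
        self._last_seen.pop((service, address), None)

    def lookup(self, service: str) -> List[str]:
        now = self._clock()
        return sorted(addr for (svc, addr), seen in self._last_seen.items()
                      if svc == service and now - seen <= self._ttl)


class SelfRegisteringService:
    """The service itself drives its registration lifecycle."""

    def __init__(self, name: str, address: str, registry: TtlRegistry) -> None:
        self.name, self.address, self._registry = name, address, registry

    def start(self) -> None:
        self._registry.register(self.name, self.address)    # "hi, I'm here"

    def tick(self) -> None:
        # Call periodically, well within the TTL (e.g. from a background timer).
        self._registry.heartbeat(self.name, self.address)

    def stop(self) -> None:
        self._registry.deregister(self.name, self.address)  # goodbye message
```

If the process crashes without calling `stop()`, its entry simply ages out once heartbeats stop, which is why the heartbeat step matters.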

- Third-Party Registration

In this approach, a dedicated service (the registrar) handles registration by tracking changes, either by subscribing to events or by polling the environment. When the registrar finds a new instance, it saves it into the service registry. Only the registrar interacts with the service registry; the new microservice does not actively participate in the registration process. The registrar verifies that instances are alive using health-check messages.

The benefits of this approach are offloading registration management from the microservice and decoupling it from the registry service. Since the registrar manages registration, the service no longer needs to implement a proactive keep-alive. As with the server-side discovery pattern, the drawback arises when the service registry is not part of the microservices orchestration tool, since it is then another component to deploy and maintain; for example, you can use Apache ZooKeeper as your service registry. Alternatively, some orchestration platforms, such as Kubernetes, include built-in service registry features.

For further reading about service registries, please refer to reference [3].
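For contrast with self-registration, here is a sketch of a third-party registrar. It polls the environment for running instances, health-checks each one, and reconciles the registry; the microservice itself does nothing. The two hooks, `list_running` and `is_healthy`, are hypothetical placeholders for whatever the platform offers (an orchestrator API, an HTTP health endpoint, and so on).

```python
from typing import Callable, Iterable, Set, Tuple

Instance = Tuple[str, str]  # (service name, address)


class Registrar:
    """Third-party registration: the registrar keeps the registry in sync."""

    def __init__(self, list_running: Callable[[], Iterable[Instance]],
                 is_healthy: Callable[[Instance], bool]) -> None:
        self._list_running = list_running  # hypothetical hook: what is deployed now
        self._is_healthy = is_healthy      # hypothetical hook: health-check one instance
        self.registry: Set[Instance] = set()

    def sync(self) -> Tuple[Set[Instance], Set[Instance]]:
        """Run one polling cycle and return (registered, deregistered) instances."""
        desired = {inst for inst in self._list_running() if self._is_healthy(inst)}
        added = desired - self.registry
        removed = self.registry - desired
        self.registry = desired
        return added, removed
```

A real registrar would call `sync()` on a timer or in response to platform events; in either case the keep-alive responsibility moves out of the service and into the registrar's health checks.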

Database Transaction Approach: ACID vs. BASE

Another important aspect of designing a microservices solution is how and where to store data. In a microservices architecture, databases should be private to their service; otherwise, the services become coupled through a common resource.

Although a shared database pattern is simple and easy to start with, it is not recommended, since it breaks the autonomy of services: the implementation of one service depends on an external component. Moreover, the services become coupled to a specific database technology, and the same Data Access Layer (DAL) must be maintained in more than one service.

Instead of sharing a database between services, the data can be split among the relevant microservices based on their domains; this approach is more complex than the monolithic solution. In a monolithic application, transactions are ACID compliant: Atomic, Consistent, Isolated, and Durable. A transaction either fully succeeds or has no effect, preserves the defined constraints, is isolated from the intermediate state of concurrent transactions, and, once committed, its data persists.

In the microservices realm, a single local ACID transaction cannot span the databases of several services. One alternative is to implement a distributed transaction mechanism, but it is hard to develop and maintain. Thus, although it seems an obvious solution, it is better not to choose a distributed transaction pattern as the first option; there is another way.

The alternative is to adhere to the BASE approach: Basically Available, Soft state, and Eventually consistent. This means the data remains available at a basic level while updates propagate to all datastores. This model does not guarantee immediate, isolated transactions, but it aims to achieve consistency eventually. One pattern commonly used with this approach is CQRS (Command Query Responsibility Segregation); please refer to reference [4].

In any case, analyzing the required transaction mode is paramount to designing a suitable transaction model. If an operation must be ACID, a single microservice should be designed to encapsulate it and implement ACID transactions locally. However, not all transactions must be atomic or isolated; some can settle for the BASE approach, which gives flexibility in defining domains, decoupling operations, and enjoying the benefits of microservices.
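The following is a minimal sketch of CQRS with eventual consistency. The command side validates commands and appends events; the query side maintains its own read model and catches up on those events later. The `Deposited` event and both classes are hypothetical. In a real system, `catch_up` would run asynchronously (for example, fed by a message broker) rather than being called by hand.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Deposited:
    account_id: str
    amount: int  # minor units (cents) to avoid floating-point rounding


class CommandSide:
    """Write model: validates commands and records events; never serves queries."""

    def __init__(self) -> None:
        self.event_log: List[Deposited] = []

    def deposit(self, account_id: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.event_log.append(Deposited(account_id, amount))


class QuerySide:
    """Read model: a projection of the events that may lag behind the write side."""

    def __init__(self) -> None:
        self.balances: Dict[str, int] = {}
        self._position = 0  # index of the next event not yet applied

    def catch_up(self, event_log: List[Deposited]) -> None:
        for event in event_log[self._position:]:
            self.balances[event.account_id] = self.balances.get(event.account_id, 0) + event.amount
        self._position = len(event_log)  # makes repeated catch-ups idempotent

    def balance(self, account_id: str) -> int:
        return self.balances.get(account_id, 0)
```

Between a `deposit` and the next `catch_up`, a query returns the old balance. That window is the soft state of BASE; once the projection catches up, both sides agree.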

Allocating Resources

The last planning aspect covered in this article is allocating adequate resources for the solution. The flexibility of scaling out has its toll when planning the underlying hardware of a microservices-based application: we need to gauge the hardware required to meet a varying demand.

Therefore, the planner should estimate each microservice's growth scale and its upper bound; service consumption cannot grow endlessly. Examining business needs should be the starting point for extrapolating the future use of each service. These needs define the metrics that determine whether the service will be scaled out; they can include, for example, the number of incoming requests, expected outgoing calls, call frequency, and the impact on other services.

The next step is to set a “price” tag for the expected growth in CPU, memory, network utilization, and other metrics that impact the essential hardware to sustain the services’ growth.
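Turning those metrics into a price tag is mostly arithmetic. The sketch below sizes one service for its forecast peak as instances = ⌈peak RPS / (RPS per instance × target utilization)⌉, then multiplies out CPU, memory, and cost. All of its inputs are assumptions you would supply: the forecast comes from the business needs, the per-instance throughput from a load test, and the 70% utilization target and unit prices are hypothetical defaults.

```python
import math
from dataclasses import dataclass


@dataclass
class ServiceProfile:
    name: str
    peak_rps: float                   # forecast peak requests per second
    rps_per_instance: float           # measured throughput of one instance
    vcpu_per_instance: float
    memory_gib_per_instance: float
    monthly_cost_per_instance: float  # hypothetical unit price


def estimate(profile: ServiceProfile, target_utilization: float = 0.7,
             max_instances: int = 50) -> dict:
    """Size a service for its peak while keeping headroom below target_utilization."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    needed = math.ceil(profile.peak_rps / (profile.rps_per_instance * target_utilization))
    if needed > max_instances:
        # The planned upper bound is part of the plan: exceeding it is a decision, not a default.
        raise ValueError(f"{profile.name}: {needed} instances exceeds the upper bound of {max_instances}")
    return {
        "instances": needed,
        "vcpu": needed * profile.vcpu_per_instance,
        "memory_gib": needed * profile.memory_gib_per_instance,
        "monthly_cost": needed * profile.monthly_cost_per_instance,
    }
```

For example, a forecast peak of 1,200 requests per second at 200 per instance and 70% utilization gives ⌈1200 / 140⌉ = 9 instances; summing such estimates across services yields the hardware and budget figures discussed below.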

You might think this step is unnecessary if your system runs in the cloud, since resources are (theoretically) endless, but that is a misconception. Firstly, even cloud resources have limited compute and storage. Secondly, growth, especially unplanned growth, has a financial cost that impacts the project's budget.

Once the application is in production, coping with an underestimated resource allocation may affect business aspects (SLA, budget). Thus, it is essential to address this aspect before rushing to implement the solution.

3. Microservices Implementation

After reviewing the aspects to consider during the planning phase, this section focuses on the principles for implementing and maintaining the microservices application.

Planning for Resilience

A microservices solution is composed of many isolated but communicating parts, so the nature of its problems differs from the monolith world. Failures happen in every system, all the more so in a distributed, interconnected system; therefore, how to handle them should be planned at the design stage.

The existing reliability and fault-tolerance mechanisms used in SOA (service-oriented architecture) can also be used in a microservices architecture. Some of these patterns are:

- Timeouts

- This is an effective way to abort calls that take too long or fail without returning to the caller promptly. It is better to set the timeout on the requestor side (the client) than rely on the server’s or the network’s timeout; each client should protect itself from being hung by an external party. When a timeout happens, it needs to be logged as it might be caused by a network problem or some other issue besides the service itself.

- Retries

- Failing to receive a valid response can be due to an error or a timeout. The retry mechanism allows sending the same request more than once. It is important to limit the number of retries; otherwise, they can turn into an endless cycle that generates unnecessary load on the network. Moreover, you can increase the interval between retries (for example, the first retry after 100 milliseconds, the second after 250 milliseconds, and the third after 1 second), depending on the use case of the request.

- Handshaking

- The idea behind this pattern is to ensure the service on the other side is available before executing the actual request. If the service is not available, the requestor can choose to wait, notify back to the caller, or find another alternative to fulfill the business logic. This approach offloads the system from unproductive communication.

- Throttling

- Throttling ensures the server can serve all requestors. It protects the service itself from being overloaded and keeps clients from sending futile requests. It can also reduce latency by signaling clients that there is no point in retrying.

- Throttling is an alternative to autoscaling: rather than catering to increased demand, it caps the load at the level defined by the service level agreement (SLA). When a service reaches its maximum throughput, the system detects it and automatically raises an alert rather than scaling out.

- The throttling configuration can be based on various aspects; here are some suggestions. First, the number of requests per given period (milliseconds, seconds, minutes) from the same client for the same or similar information. Second, the service can disregard requests from clients it deems nonessential at a given time, prioritizing other services.

- Lastly, to consume fewer resources while still meeting demand, the service can continue serving requests at a reduced quality; for example, streaming video at a lower resolution rather than stopping the stream entirely.

- Another benefit of throttling is enhanced security: it limits the impact of a potential DDoS attack, prevents data harvesting, and blocks abusive requests.

- Bulkheads

- This pattern ensures that faults in one part of the system do not take the other parts down. In a microservices architecture, where each service is isolated, the impact of a service outage is naturally contained. However, when the boundaries between microservices are not well defined, or microservices share a repository (not recommended), the bulkhead pattern is not adhered to. Besides partitioning the services, consumers can be partitioned too, for example, by isolating critical consumers from standard ones. Using bulkheads also makes it easier to monitor each partition's performance and SLA (for more information, please refer to reference [5]).

- Circuit breakers

- An electrical circuit breaker cuts the electricity connection when a certain threshold is breached. Similarly, a service can be flagged as down or unavailable after clients repeatedly fail to interact with it. Clients then avoid sending more requests to the “blown” service, sparing the system futile calls. After a cool-down period, clients can resume sending requests to the faulty service to check whether it has recovered. If the requests are handled successfully, the circuit breaker is reset.

Using these safety measures allows handling errors more gracefully. In some of the patterns above, a client fails to receive a valid response from the server. How should these failed requests be handled? In synchronous calls, the solution can be logging the error and propagating it to the caller. In asynchronous communication, requests can be queued for later retries. For more information about resiliency patterns, please refer to reference [6].
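The circuit breaker behavior described above (trip after repeated failures, short-circuit while open, allow a trial call after the cool-down, reset on success) can be sketched as a small state machine. The threshold, cool-down, and class names are illustrative assumptions, and the clock is injectable so the cool-down can be demonstrated without sleeping. Libraries such as Resilience4j implement the same idea with many more options.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the breaker is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, cooldown_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.cooldown_seconds = cooldown_seconds
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.cooldown_seconds:
            return "half-open"  # cool-down elapsed: let a trial request through
        return "open"

    def call(self, func: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("service flagged as unavailable; call skipped")
        try:
            result = func()
        except Exception:
            self._record_failure()
            raise
        self._reset()  # success closes the circuit again
        return result

    def _record_failure(self) -> None:
        self._failures += 1
        # A failed trial call, or too many failures, (re)opens the circuit.
        if self.state == "half-open" or self._failures >= self.failure_threshold:
            self._opened_at = self._clock()

    def _reset(self) -> None:
        self._failures = 0
        self._opened_at = None
```

While the circuit is open, callers fail fast with `CircuitOpenError` instead of waiting on a service that is known to be down.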
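The timeout and retry guidance from the list above can be combined into one helper. This is a sketch under stated assumptions: the delays reproduce the 100 ms / 250 ms / 1 s example, only the exception types in `retry_on` are retried, and after the last retry the error propagates to the caller, matching the "log and propagate" advice for synchronous calls. The function name and defaults are hypothetical.

```python
import logging
import time
from typing import Callable, Sequence, Tuple, Type, TypeVar

T = TypeVar("T")
log = logging.getLogger("retry")


def call_with_retries(func: Callable[[], T],
                      delays: Sequence[float] = (0.1, 0.25, 1.0),
                      retry_on: Tuple[Type[BaseException], ...] = (TimeoutError, ConnectionError),
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call func; on a retryable error, wait delays[i] and try again.

    The number of retries is capped at len(delays), so the loop cannot run forever.
    """
    for attempt, delay in enumerate(delays, start=1):
        try:
            return func()
        except retry_on as exc:
            # Log every failed attempt: repeated timeouts may point at the network, not the service.
            log.warning("attempt %d failed (%s); retrying in %.2fs", attempt, exc, delay)
            sleep(delay)
    return func()  # final attempt: any error now propagates to the caller
```

Errors that are not in `retry_on` (for example, a validation error) propagate immediately, because retrying them would only add load. To keep the timeout on the requestor side, the wrapped call should carry its own limit; for example, a lambda around `urllib.request.urlopen(url, timeout=2.0)` combined with `retry_on=(OSError,)`, since the timeout and connection errors raised by `urllib` are `OSError` subclasses.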

Communication and Coordination Between Services

Service-to-service communication can be divided into two types: synchronous and asynchronous. Synchronous communication is easy to implement but impractical for long-running tasks, since the client blocks while waiting.

On the other hand, asynchronous communication frees the client from waiting for a response after calling another service, but handling the response is more complicated. Low-latency systems, intermittently connected systems, and long-running tasks can all benefit from this approach.

How do services coordinate? There are two collaboration patterns:

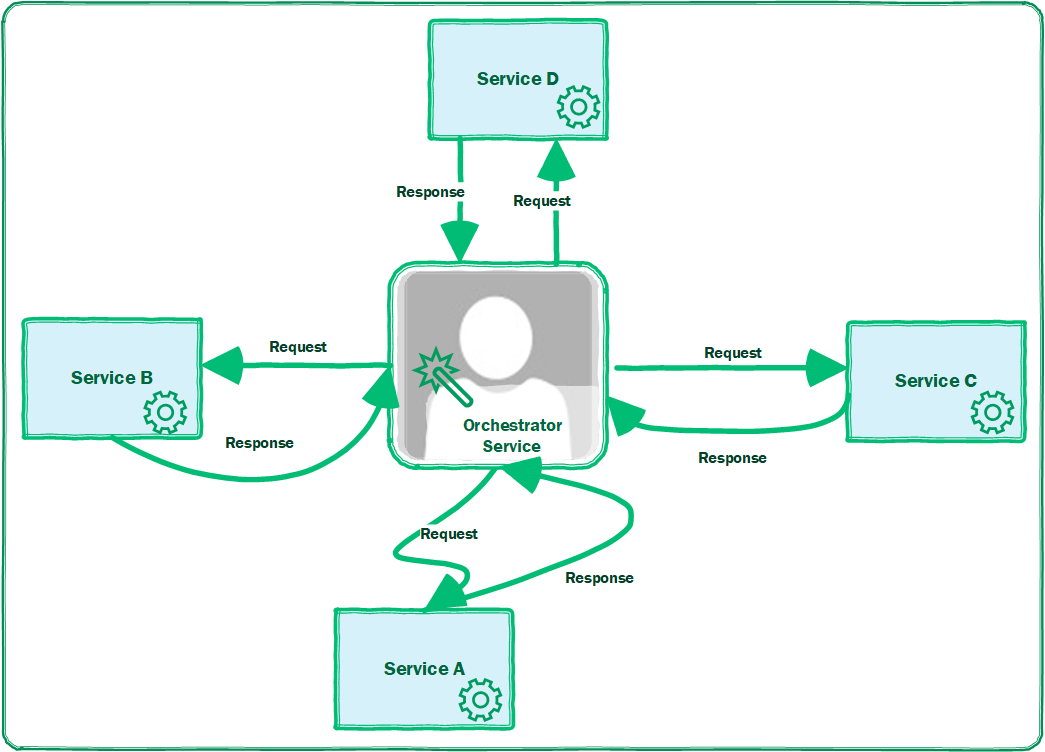

- Orchestration

- Like an orchestra's conductor, a central brain manages the business processes, similar to a command-and-control concept. This brain knows every stage of each service and can track it. Messages can flow directly through the orchestrator or through a message broker that sits between the orchestrator and the services. Communication is based on the request-response concept; therefore, this approach is more associated with synchronous communication.

- Decision-making rests with the orchestrator; each service executes its business logic and returns the output without making any decisions about the next step or the overall flow. The next pattern, choreography, takes a different approach.

![orchestration of workflow]()

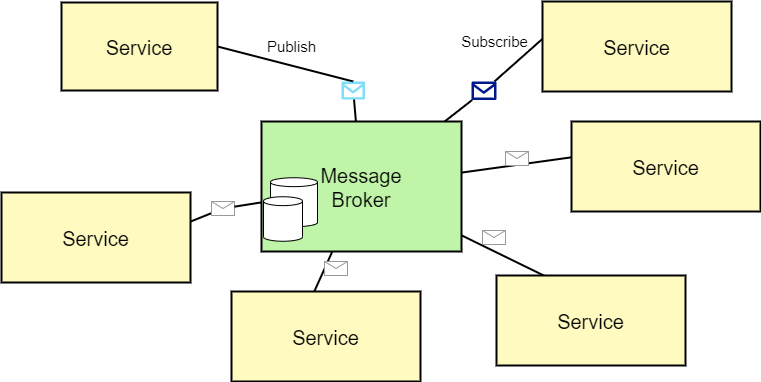

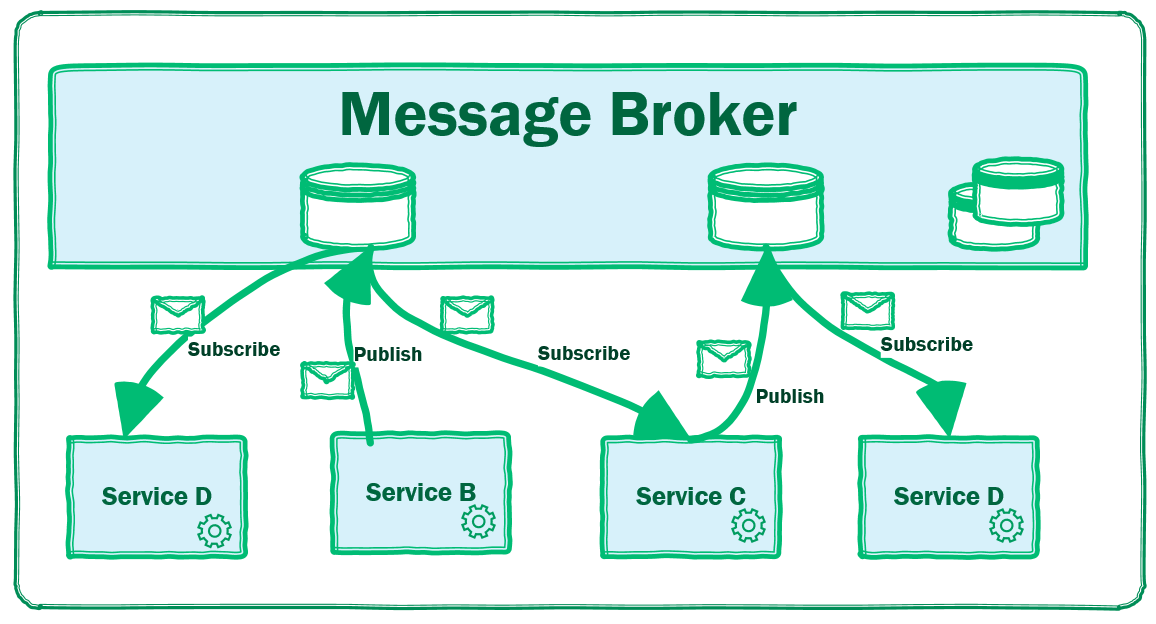

- Choreography

- Unlike orchestration, the choreography pattern decentralizes control: each service works independently and decides on its next step without being instructed by a central controller. Events are broadcast, and each service decides whether the information is relevant to its business logic; the “brain” is embedded in each service.

- The flow is based on sharing events, which creates a loosely coupled communication, mainly based on the event-driven concept. Therefore, this approach is more natural for asynchronous communication.

![message broker]()

To make it more tangible, on the AWS platform, orchestration can be implemented with the Step Functions service, whereas EventBridge or SNS can be the underlying solution for a choreography-based application.

Both patterns are legitimate; preferring one over the other depends on the business use cases and needs. Nevertheless, the choreography approach is more difficult to monitor, since there is no central controller and no mediator to intervene and prevent cascading failures. For the same reason, implementing timeouts and understanding the overall logic are more complicated (for a deeper look at each approach, please refer to reference [7]).

Lastly, the choice between orchestration and choreography does not have to be binary. Your system may be decomposable further into subcomponents, each of which may lend itself more towards orchestration or choreography individually.
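The difference between the two collaboration patterns shows up clearly when the same order flow is written both ways. In this sketch, the three step functions stand in for hypothetical services. `orchestrate` is the central brain that calls each step in turn, while in the choreographed version each service only reacts to an event and emits the next one. `EventBus` is a synchronous in-memory stand-in for a message broker; a real broker would deliver the events asynchronously.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Order = Dict[str, object]


# Hypothetical services in an order flow; each returns the order with one more step done.
def reserve_stock(order: Order) -> Order:
    return {**order, "stock": "reserved"}


def charge_payment(order: Order) -> Order:
    return {**order, "payment": "charged"}


def ship(order: Order) -> Order:
    return {**order, "shipment": "scheduled"}


# Orchestration: a central coordinator owns the flow and decides every next step.
def orchestrate(order: Order) -> Order:
    for step in (reserve_stock, charge_payment, ship):
        order = step(order)
    return order


# Choreography: services subscribe to events and decide for themselves what to do next.
class EventBus:
    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Order], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[Order], None]) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Order) -> None:
        for handler in self._subscribers[event_type]:
            handler(payload)


def wire_choreography(bus: EventBus, shipped: List[Order]) -> None:
    bus.subscribe("OrderPlaced", lambda o: bus.publish("StockReserved", reserve_stock(o)))
    bus.subscribe("StockReserved", lambda o: bus.publish("PaymentCharged", charge_payment(o)))
    bus.subscribe("PaymentCharged", lambda o: shipped.append(ship(o)))
```

Both versions produce the same result, but in the choreographed one, no single place describes the whole flow. That is why it is more loosely coupled and also harder to monitor, as noted above.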

Now, let's look at the ways to implement the communications between services.

Synchronous Communication

The request/response communication is more suitable for n-tier applications, in which a requestor should wait for a response.

Let’s cover three basic synchronous communication technologies: RPC, REST, and GraphQL.

1. RPC (Remote Procedure Call)

This is an inter-process communication where a client makes a local method call that executes on a remote server. The classic example is SOAP. The client calls a local stub, the call is transferred over the network, and the logic runs on a remote service.

RPC is easy to use, since invoking a remote procedure looks like calling a local method. On the other hand, its drawbacks are the tight coupling between the client and the server and a strict API.

Recently, with the emergence of gRPC and HTTP/2, this approach has flourished again.

2. REST — Representational State Transfer

REST is an architectural style for querying and updating data, mostly in JSON or XML format. REST is protocol-agnostic but mostly associated with HTTP. At its core, REST over HTTP uses verbs such as GET, POST, PUT, and PATCH to retrieve or update resources.

REST makes it possible to decouple the data representation from its actual structure, which allows keeping the same API even when the underlying logic or model changes.

REST follows the HATEOAS principle (Hypermedia As The Engine Of Application State): responses include links to related resources. This enhances the API's discoverability, since the client can use these links in subsequent requests.

The abstraction that REST provides is a huge advantage, but there are also some disadvantages. The additional links that HATEOAS generates can lead to many requests to the server, and even a simple GET request can return many unnecessary details.

Another drawback arises when REST responses are misused: returning more information than needed, or serializing the underlying objects exactly as they are represented in the data layer instead of abstracting the data model. Lastly, unlike RPC, REST does not make it easy to generate client stubs, so the client has to build such a stub if needed.

```http
HTTP/1.1 200 OK
Content-Type: application/vnd.acme.account+json
Content-Length: …

{
  "account": {
    "account_number": 12345,
    "balance": {
      "currency": "usd",
      "value": 100.00
    },
    "links": {
      "deposit": "/accounts/12345/deposit",
      "withdraw": "/accounts/12345/withdraw",
      "transfer": "/accounts/12345/transfer",
      "close": "/accounts/12345/close"
    }
  }
}
```
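On the client side, HATEOAS means reading the next URL from the response instead of building it. The sketch below parses the account response shown above and looks up a link by its relation name. The helper names are hypothetical, and the assumption that a server may omit a relation when an action is not currently allowed (say, `withdraw` on an empty account) is a common HATEOAS convention rather than something this example API is known to do.

```python
import json
from typing import Dict

ACCOUNT_RESPONSE = """{"account": {"account_number": 12345,
  "balance": {"currency": "usd", "value": 100.00},
  "links": {"deposit": "/accounts/12345/deposit",
            "withdraw": "/accounts/12345/withdraw",
            "transfer": "/accounts/12345/transfer",
            "close": "/accounts/12345/close"}}}"""


def available_actions(body: str) -> Dict[str, str]:
    """Return the relation -> URL links the server advertises for this account."""
    return json.loads(body)["account"].get("links", {})


def link_for(body: str, relation: str) -> str:
    """Follow the server-provided link rather than hard-coding the URL on the client."""
    links = available_actions(body)
    if relation not in links:
        raise KeyError(f"server does not currently allow {relation!r}")
    return links[relation]
```

Because the client only depends on relation names such as `deposit`, the server can change its URL layout without breaking the client.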

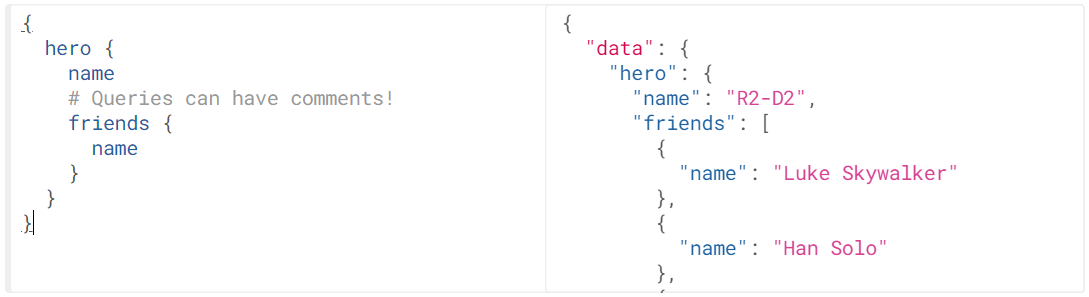

3. GraphQL

GraphQL is a relatively new technology that can be considered an evolution of REST, though it also includes some RPC concepts. GraphQL allows structured access to resources but aims to make the interactions more efficient. Unlike REST, which is based on well-defined HTTP verbs, GraphQL provides a query language for accessing resources and fields. The query language supports CRUD operations as well.

The client defines the fields and hierarchies it queries from the server, so the client must know exactly what data it needs. In return, the client can receive more information with fewer requests. Retrieving the same information through REST may require several GET requests whose results are then combined on the client side. GraphQL is therefore more efficient, since it saves network requests.

Another advantage is that GraphQL is language-agnostic.

Like RPC, GraphQL exposes a schema of the server's objects, which is very useful for orientation. The client defines what to query (hierarchy, fields), while server-side resolvers define how and where the data is fetched.

GraphQL also supports subscriptions, a publish/subscribe model through which the client receives notifications when data changes.
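The core idea of client-defined field selection can be illustrated without a GraphQL server. The toy function below takes a nested selection (loosely mirroring a query such as `{ user { name orders { id total } } }`) and returns only the requested fields in a single response. It is not a GraphQL implementation (no schema, parser, or resolvers), and the `USER` data is hypothetical.

```python
from typing import Any, Dict, Optional

# A selection maps each field to None (a scalar) or to a nested selection.
Selection = Dict[str, Optional["Selection"]]

# Hypothetical server-side data that a REST API might spread over /users/1 and /users/1/orders.
USER = {
    "name": "Dana",
    "email": "dana@example.com",
    "orders": [
        {"id": 1, "total": 30, "items": ["a"]},
        {"id": 2, "total": 12, "items": []},
    ],
}


def select(data: Any, selection: Selection) -> Any:
    """Return only the requested fields, recursing into nested objects and lists."""
    if isinstance(data, list):
        return [select(item, selection) for item in data]
    result = {}
    for field_name, sub in selection.items():
        value = data[field_name]
        result[field_name] = value if sub is None else select(value, sub)
    return result
```

Calling `select(USER, {"name": None, "orders": {"id": None, "total": None}})` returns the user's name and a trimmed order list in one round trip, leaving out `email` and `items`, which the client never asked for.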

Asynchronous Communication

Events-based collaboration is based on asynchronous communication. It requires the server to emit events and clients (consumers) to be notified of the events and fetch them.

This mechanism is achieved by using a message broker, which manages a publish/subscribe solution. It also keeps track of what message was seen to avoid multiple consumers for the same message. Using message broker adheres to the concept of smart endpoint, dumb pipes. The client handles the business logic, while the broker handles the communication and transportation.

On the other hand, a message broker adds complexity to all SDLC phases, from development to monitoring the solution in production. Since communication is asynchronous, the overall solution should include advanced monitoring, correlation IDs, and tracing to tackle failures and problems (described in the Monitoring chapter, Part 4). Nevertheless, this approach fully decouples the components and allows scaling almost seamlessly.

Examples of message brokers are RabbitMQ and Kafka; on the AWS platform, SNS or SQS can fill this role.

Asynchronous Communication via Message Broker

Service-to-Service Authentication

Although services may communicate within the same network, it is highly advisable to secure the communication. Besides encrypting the channel (HTTPS, TLS, IPsec), we need to make sure that requests are made by authorized entities. Authentication means the system identifies the client that initiated a request and approves it. Authorization, that is, ensuring the entity is allowed to execute the request or receive the data, is covered only partially in this article.

Authentication is important not only for securing the system but also for business-domain aspects; for example, choosing the adequate SLA for each consumer.

Authentication can be implemented in several ways. Let’s review three of them:

The first solution to consider is certificates: each microservice holds a unique certificate that identifies it. Although this approach is easy to understand, it is hard to manage, since certificates are scattered all over the system and the certificate information must be distributed across the services. This problem is magnified when certificates need to be rotated. Therefore, this approach may be relevant for specific services, but not as a comprehensive authentication solution.

The second solution is a single sign-on (SSO) service, such as OpenID Connect. This solution routes all traffic through the SSO service at the gateway. It centralizes access control, but on the other hand, each service must provide its own credentials to authenticate via the OpenID Connect module, which can be tedious. For further reading about OpenID Connect, please refer to reference [8].

The last solution for authentication is API Keys. The underlying principle of this solution is passing a secret token alongside each request. This token allows the receiver to identify the requestor. In most implementations, there is a centralized gateway to identify the service based on its token.
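A gateway-side API key check might look like the following sketch. The `X-API-Key` header name, the in-memory registry, and the service name are assumptions for illustration. Storing only SHA-256 digests of the issued keys means a leaked registry does not reveal usable keys.

```python
import hashlib
import secrets
from typing import Dict, Optional

# Hypothetical gateway registry: SHA-256 digest of each issued key -> service name.
REGISTRY: Dict[str, str] = {}
API_KEY_HEADER = "X-API-Key"  # assumed header name


def _digest(key: str) -> str:
    return hashlib.sha256(key.encode()).hexdigest()


def issue_key(service: str) -> str:
    """Create a random key for a service; only its digest is kept by the gateway."""
    key = secrets.token_urlsafe(32)
    REGISTRY[_digest(key)] = service
    return key


def identify(headers: Dict[str, str]) -> Optional[str]:
    """Return the calling service for the presented key, or None if it is unknown."""
    presented = headers.get(API_KEY_HEADER)
    if not presented:
        return None
    # Look up the digest, so raw keys are never stored or compared directly.
    return REGISTRY.get(_digest(presented))


key = issue_key("orders-service")
print(identify({API_KEY_HEADER: key}))      # orders-service
print(identify({API_KEY_HEADER: "bogus"}))  # None
```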

There are several standards for token-based authentication, such as API Keys, OAuth, or JWT. Besides authentication, tokens can be used for authorization too. Using tokens allows checking the service’s privileges and its permissions. This is outside of this article's scope; for further reading, please refer to reference [9].
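To show what a JWT actually carries, the sketch below signs and verifies an HS256 token using only the Python standard library: a Base64url-encoded header and claims set, followed by an HMAC-SHA256 signature over both. It is meant to show the structure, not to replace a maintained JWT library, and it checks only the algorithm, the signature, and the `exp` claim. The shared secret and the `scope` claim (a possible hook for the authorization checks mentioned above) are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time


def b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def b64url_decode(text: str) -> bytes:
    # Restore the padding that JWTs strip off.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    header = b64url(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{b64url(signature)}"


def verify_hs256(token: str, secret: bytes) -> dict:
    """Return the claims if the signature and `exp` are valid; raise ValueError otherwise."""
    header, payload, signature = token.split(".")
    if json.loads(b64url_decode(header)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, b64url_decode(signature)):  # constant time
        raise ValueError("bad signature")
    claims = json.loads(b64url_decode(payload))
    if claims.get("exp", float("inf")) < time.time():
        raise ValueError("token expired")
    return claims


secret = b"change-me"  # hypothetical shared secret
token = sign_hs256({"sub": "orders-service", "scope": "payments:read",
                    "exp": int(time.time()) + 300}, secret)
print(verify_hs256(token, secret)["sub"])  # orders-service
```

With HS256, every verifier needs the shared secret; asymmetric algorithms such as RS256 let services verify tokens with a public key instead.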

4. Tracking the Chain of Events: Logging, Monitoring, and Alerting

Although this is the last part of this article, it should be on our minds from the very beginning. Understanding the complete flow of the data is arduous, not to mention understanding where errors stem from. To overcome this intricacy, we need to reconstruct the chain of calls so we can reproduce a problem and then remediate it.

Using a logging system, combined with monitoring and alerting solutions, is essential to understand the chain of events and to identify problems and rectify them.

Logging

In the monolithic world, logging is quite straightforward, since logs are mostly sequential and can be read in order. In a microservices architecture, however, there are multiple logs, so going through them and understanding the data flow can be complex. Therefore, it is paramount to have a tool that aggregates logs, stores them, and allows querying them. Such a tool is called a log aggregator.

There are some leading solutions in the market. For example, ELK (Elasticsearch, Logstash, Kibana): Elasticsearch for storing logs, Logstash for collecting logs, and Kibana provides a query interface for accessing logs.

Another visualization tool is Grafana, an open-source platform used for metrics, data visualization, monitoring, and analysis. It can use Elasticsearch or Graphite, among others, as its data source.

Finally, the last tool to present is Prometheus, an all-in-one monitoring system with a rich, multidimensional data model and a concise yet powerful query language (PromQL). Grafana can also provide the visualization layer on top of Prometheus.

Distributed Tracing

It is important to standardize the log message format in any log aggregation solution and define all log levels (debug, information, warning, errors, fatal).
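A standardized format is easiest to enforce when each service emits structured logs. The sketch below uses Python's standard `logging` module with a custom formatter that writes one JSON object per line; the field names and the `payments` service are assumptions. Python's level names are DEBUG, INFO, WARNING, ERROR, and CRITICAL, where CRITICAL plays the role of "fatal".

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per log line with a fixed, shared set of fields."""

    def __init__(self, service: str) -> None:
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "service": self.service,
            # Set per call via `extra=`; None when the caller did not provide one.
            "correlation_id": getattr(record, "correlation_id", None),
            "message": record.getMessage(),
        })


handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter(service="payments"))
logger = logging.getLogger("payments")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("payment accepted", extra={"correlation_id": "c-42"})
```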

Since logs are scattered across services with no inherent order, one way to unravel the sequence is to use correlation IDs: a unique identifier is attached to each message when it is created. This identifier allows tracking the message as it passes through, or is processed by, each service.
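Propagating the identifier can be sketched as two small helpers: one that reuses the incoming ID (or creates one if this service starts the flow), and one that attaches it to every outgoing call or published message. The `X-Correlation-ID` header name is an assumption; tracing tools typically use standardized headers instead, such as the W3C Trace Context `traceparent` header.

```python
import contextvars
import uuid
from typing import Dict, Optional

CORRELATION_HEADER = "X-Correlation-ID"  # assumed header name

# The ID of the flow currently being handled, scoped to the current thread or asyncio task.
_correlation_id = contextvars.ContextVar("correlation_id", default="")


def on_incoming(headers: Dict[str, str]) -> str:
    """Reuse the caller's correlation ID, or start a new flow with a fresh one."""
    cid = headers.get(CORRELATION_HEADER) or str(uuid.uuid4())
    _correlation_id.set(cid)
    return cid


def outgoing_headers(extra: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Headers for a downstream call or a published message, carrying the same ID."""
    headers = dict(extra or {})
    headers[CORRELATION_HEADER] = _correlation_id.get()
    return headers


first = on_incoming({})  # entry point: no ID yet, so a new UUID is generated
print(outgoing_headers({"Accept": "application/json"}))
```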

The log aggregator finds all the entries in which the message identifier appears and reconstructs the complete message flow.
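Reconstructing a flow then amounts to filtering and ordering the aggregated entries. In this sketch, the entries, timestamps, and service names are made-up sample data, already parsed from JSON.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical entries as a log aggregator might return them.
entries = [
    {"ts": "2021-03-01T10:00:02", "service": "billing", "correlation_id": "c-7", "message": "invoice created"},
    {"ts": "2021-03-01T10:00:00", "service": "gateway", "correlation_id": "c-7", "message": "order received"},
    {"ts": "2021-03-01T10:00:01", "service": "orders", "correlation_id": "c-9", "message": "order received"},
    {"ts": "2021-03-01T10:00:01", "service": "orders", "correlation_id": "c-7", "message": "order validated"},
]


def message_flows(logs: List[dict]) -> Dict[str, List[dict]]:
    """Group entries by correlation ID and order each group by timestamp."""
    flows: Dict[str, List[dict]] = defaultdict(list)
    for entry in logs:
        flows[entry["correlation_id"]].append(entry)
    # ISO-8601 timestamps in the same format sort correctly as strings.
    return {cid: sorted(group, key=lambda e: e["ts"]) for cid, group in flows.items()}


for step in message_flows(entries)["c-7"]:
    print(step["ts"], step["service"], step["message"])
```

Ordering by timestamp assumes reasonably synchronized clocks across services; tracing systems such as Zipkin and Jaeger additionally record which span called which, so the call tree does not depend on clocks alone.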

Besides tracking an individual message throughout its lifetime, distributed tracing can trace the requests between services, which is useful for gaining insight into the system’s behavior, for example, to detect latency, bottlenecks, or overload.

Examples of open-source distributed tracing solutions that use correlation IDs are Zipkin and Jaeger.

Audit Logging

Another approach is audit logging: each client records the messages it handles in a database. The approach is straightforward, which is an advantage; however, the auditing code is embedded in the business logic, which makes that logic more complicated.
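A minimal sketch of audit logging, using an in-memory SQLite database as a stand-in for the shared audit store; the table layout and the `handle_order` handler are hypothetical. The audit write sits inside the handler itself, which is exactly the coupling between auditing and business logic described above.

```python
import sqlite3
from datetime import datetime, timezone

conn = sqlite3.connect(":memory:")  # stand-in for the shared audit database
conn.execute("CREATE TABLE audit (service TEXT, message_id TEXT, action TEXT, at TEXT)")


def handle_order(message: dict) -> None:
    # ... business logic would run here ...
    # The audit record is written by the handler itself.
    conn.execute(
        "INSERT INTO audit VALUES (?, ?, ?, ?)",
        ("orders", message["id"], "handled", datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()


handle_order({"id": "m-1"})
handle_order({"id": "m-2"})
print(conn.execute(
    "SELECT message_id FROM audit WHERE service = 'orders' ORDER BY message_id"
).fetchall())  # [('m-1',), ('m-2',)]
```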

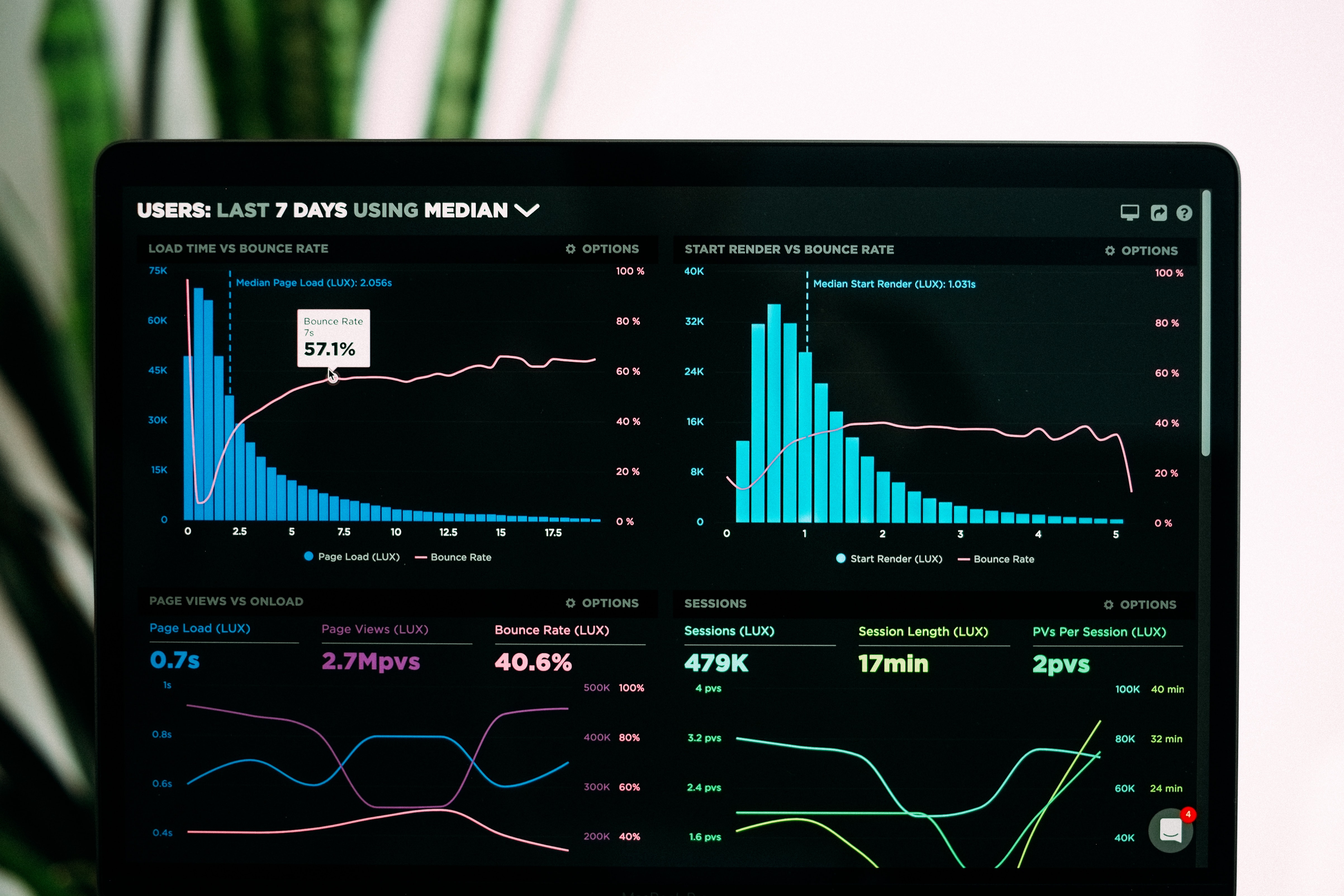

Monitoring

As you may already know, monitoring a microservices environment is much more complex than monitoring a monolithic application, which consists of one main service, or of several services with predictable communication between them.

The main reasons for this complexity are:

Firstly, the focus on real-time monitoring is greater, since microservices solutions are mainly implemented to cope with large-scale systems. Thus, monitoring the state and health of each service and of the system as a whole is crucial. A service’s failure might be hidden or seem negligible, yet it can greatly impact the system’s functionality.

Secondly, running many separate services in parallel, either managed by an orchestrator or working independently, can lead to unforeseen behavior. Not only is monitoring in production complicated, but simulating all the possible scenarios in a testing lab is not trivial either. One way to mitigate this problem is to replay messages in a testing environment, but physical or regulatory limitations sometimes do not allow it. So, in some cases, there is no option but to fully test the comprehensive monitoring solution only in production.

Now that we understand the complexity of monitoring a microservices-based solution, let’s see what should be monitored.

A natural starting point is monitoring each individual service based on predefined metrics, such as its state, the number of messages processed in a time frame, and other business-logic-related metrics. These metrics can reveal whether a service is working normally or suffering from problems or latency. Measuring performance against statistical baselines can be beneficial too. For example, if we know the throughput between 1 pm and 2 pm every day is 20K messages, a significant deviation from that figure may indicate a problem.
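The 20K-messages example can be turned into a simple statistical check: compare the current hour's count against the mean and standard deviation of the same hour on previous days. The sample history and the three-standard-deviation threshold are assumptions to tune per system.

```python
import statistics
from typing import Sequence


def deviates(history: Sequence[float], observed: float, max_sigma: float = 3.0) -> bool:
    """True if `observed` is more than `max_sigma` standard deviations from the mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation; needs >= 2 points
    return abs(observed - mean) > max_sigma * stdev


# Hypothetical message counts between 1 pm and 2 pm on the last five days.
history = [19_800, 20_100, 20_500, 19_600, 20_000]
print(deviates(history, 20_600))  # False: within normal variation
print(deviates(history, 12_000))  # True: a sharp drop worth investigating
```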

The next step is to monitor the big picture, the system as a whole, using aggregated metrics. Tools like Graphite or Prometheus can gauge the response time of a single service, a group of services, or the system itself. The data is presented in dashboards, which reveal problems and anomalies. The art lies in defining metrics that accurately reflect the system’s health; monitoring CPU, RAM, network utilization, latency, and other obvious metrics is not enough to understand whether the logic under the hood works as expected.
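For aggregated metrics to be comparable across services, each service exposes them in a common format. The sketch below hand-renders a counter in Prometheus's text exposition format so the format is visible; in practice the official client library would produce this output. The metric name, help text, and values are hypothetical, and label values are assumed to contain no quotes, backslashes, or newlines (which would need escaping).

```python
from typing import Dict


def render_counter(name: str, help_text: str, samples: Dict[str, float]) -> str:
    """Render per-service samples of one counter in the Prometheus text format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for service, value in sorted(samples.items()):
        lines.append(f'{name}{{service="{service}"}} {value}')
    return "\n".join(lines) + "\n"


print(render_counter(
    "messages_processed_total",
    "Messages processed by each service.",
    {"orders": 1027, "billing": 998},
))
```

A system-wide view can then be computed in PromQL, for example `sum(rate(messages_processed_total[5m]))` for the overall processing rate, or `sum by (service) (rate(messages_processed_total[5m]))` for a per-service breakdown.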

One way to do that is synthetic monitoring. The idea is to generate artificial events and insert them into the processing flow. The monitoring tools collect the results and trigger alerts in case of errors or problems.
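A synthetic check can be sketched as a probe that injects a clearly marked artificial event and verifies that it comes out of the flow correctly and on time. The `pipeline` function stands in for the real processing flow, and the field names and two-second budget are assumptions. A real flow would usually be asynchronous, so the check would wait for the result on an output topic rather than call a function directly.

```python
import time
import uuid
from typing import Callable, Tuple


def pipeline(event: dict) -> dict:
    """Stand-in for the real processing flow (hypothetical)."""
    return {**event, "status": "processed"}


def synthetic_check(process: Callable[[dict], dict], timeout_s: float = 2.0) -> Tuple[bool, str]:
    """Inject a marked synthetic event and verify it comes out correctly and in time."""
    # The `synthetic` flag lets downstream services exclude probes from business results.
    probe = {"id": f"synthetic-{uuid.uuid4()}", "synthetic": True, "amount": 0}
    started = time.monotonic()
    try:
        result = process(probe)
    except Exception as exc:
        return False, f"pipeline raised {exc!r}"
    elapsed = time.monotonic() - started
    if result.get("id") != probe["id"] or result.get("status") != "processed":
        return False, "unexpected result"
    if elapsed > timeout_s:
        return False, f"too slow: {elapsed:.2f}s"
    return True, "ok"


ok, detail = synthetic_check(pipeline)
if not ok:
    print("ALERT:", detail)  # in practice, hand this to the alerting system
```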

Photo by Luke Chesser on Unsplash

Alerting

Monitoring is essential, but if problems are not exposed externally, it is like a tree falling in a forest with no one there to hear it. Therefore, any system should emit alerts once a certain threshold has been reached or when a critical scenario requires external intervention.

Alerts can be raised at the system level or at the individual microservice level. An alert should be clear and actionable. It is easy to fall into the trap of raising alerts for too many errors; the alerting mechanism is then abused and becomes irrelevant.

Beware of alert fatigue, as it leads to overlooking critical alerts. Receiving many alerts shouldn’t be the norm; if it is, the alerts are probably mistuned, or the system’s configuration should be altered. Therefore, a best practice is to review the alerts periodically.
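One common way to keep alerts actionable is to fire only when a condition persists, rather than on every spike, and to notify once per incident. The sketch below captures that idea, similar in spirit to the `for` duration in Prometheus alerting rules; the threshold, the number of consecutive checks, and the alert name are assumptions.

```python
from dataclasses import dataclass


@dataclass
class ThresholdAlert:
    """Fire only after the metric stays above `threshold` for `for_checks`
    consecutive evaluations, so a single spike does not page anyone."""
    name: str
    threshold: float
    for_checks: int = 3
    _breaches: int = 0
    firing: bool = False

    def evaluate(self, value: float) -> bool:
        """Update state with a new sample; return True only when the alert starts firing."""
        if value > self.threshold:
            self._breaches += 1
        else:
            self._breaches = 0
            self.firing = False  # condition cleared: the alert is resolved
        if self._breaches >= self.for_checks and not self.firing:
            self.firing = True
            return True  # notify once, not on every evaluation
        return False


alert = ThresholdAlert("orders-error-rate", threshold=0.05)
for rate in [0.02, 0.09, 0.03, 0.07, 0.08, 0.11, 0.12, 0.01]:
    if alert.evaluate(rate):
        print(f"ALERT {alert.name}: error rate {rate:.2f} above {alert.threshold}")
```

Here the isolated spike to 0.09 is ignored, and only one notification is sent for the sustained breach that starts at 0.07.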

To handle alerts effectively, there should be a procedure or guide that describes the nature of each alert and how to mitigate or rectify it. In addition, there should be an incident response plan for critical alerts. Handling the flaw is not enough; a post-mortem investigation should follow, to sustain a learning process and build a knowledge base. Remember, every incident is an opportunity to improve.

The Human Factor and the Cultural Aspects

Finally, besides the technical platform to host and maintain the microservices solution, we must address the human factor. Melvin Conway’s words summarise this concept:

“Any organization that designs a system, defined more broadly here than just information systems, will inevitably produce a design whose structure is a copy of the organization’s communication structure.”

Simply put, service ownership is crucial to keep the microservices solution efficient by reducing communication efforts and the cost of changes.

This ownership ensures that a team is fully responsible for all aspects of a service throughout the SDLC, from requirements gathering to release and production support.

Such autonomy reduces dependencies on other teams, decreases friction with other parties, and allows the team to work in an isolated environment.

Photo by Annie Spratt on Unsplash

Wrapping Up

That was a long ride; thank you for making it till the end!

I tried to be comprehensive and cover the various topics that affect implementing a microservices-based solution. There is much more to cover, and you can continue with the references below.

The transition to microservices will only increase as systems continue to grow. Keep these concepts in mind, or adopt some of them, when you plan your next system or upgrade.

Keep on building!

— Lior

References:

- [1] Service templates: https://www.slant.co/options/1237/alternatives

- [2] Service discovery: https://www.nginx.com/blog/service-discovery-in-a-microservices-architecture/

- [3] Service registry: https://microservices.io/patterns/service-registry.html

- [4] CQRS: https://docs.microsoft.com/en-us/azure/architecture/patterns/cqrs

- [5] Bulkhead pattern: https://docs.microsoft.com/en-us/azure/architecture/patterns/bulkhead

- [6] Resiliency patterns: https://docs.microsoft.com/en-us/azure/architecture/patterns/category/resiliency

- [7] Orchestration or Choreography: https://robertleggett.blog/2019/03/17/when-should-you-choose-choreography-vs-orchestration-for-your-microservices-architecture/

- [8] OpenID Connect: https://openid.net/connect/

- [9] JWT introduction: https://jwt.io/introduction/

- General — Designing a microservices-based solution: https://docs.microsoft.com/en-us/dotnet/architecture/microservices/multi-container-microservice-net-applications/microservice-application-design

Opinions expressed by DZone contributors are their own.
