AWS Serverless Lambda Resiliency: Part 1



In this series of articles, we address patterns for the resilience of cloud-native serverless systems.

This article introduces cloud-native and serverless resiliency and covers patterns for several scenarios; Part 2 covers additional scenario patterns.

Resiliency

Resiliency is the ability of a system to handle unexpected situations or failures:

- Without the user noticing it (best case),

- With slight degradation of service (next best case),

- By failing fast (worst case).

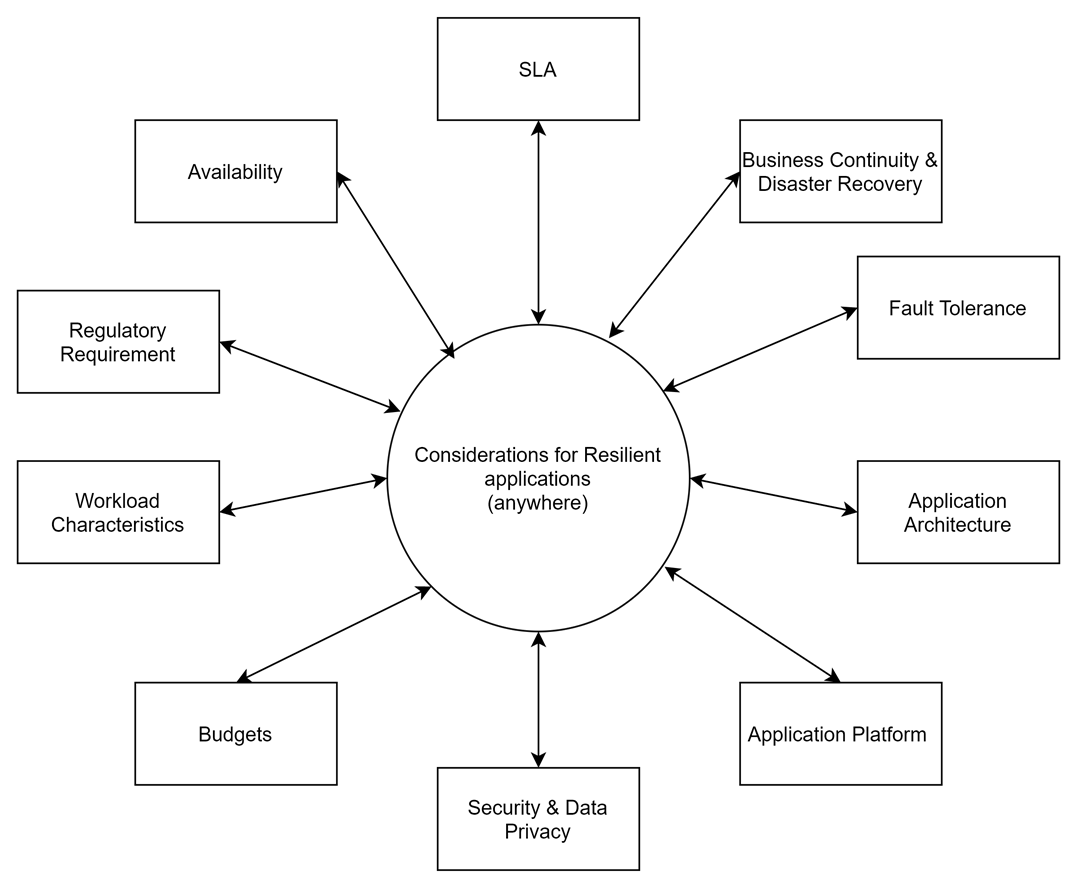

When thinking of resilient cloud-native applications, multiple aspects of the system being built need to be considered.

Resiliency considerations get complex even for the most basic scenarios and require careful systems thinking and architecture decisions. Take the most straightforward scenario of synchronous invocation between two components.

- Your call from service A may never reach service B.

- You could experience latency that is higher than you (service A) can tolerate.

- Service B is unable to respond and the call times out.

- Service B may perform the request but never respond.

- What if these two services are participating in a distributed transaction?

- What if you have a message that needs to be further handed off?

Cloud-Native Resiliency

Cloud-native technologies, through containers and serverless capabilities, make it easier to develop resilient applications, but each handles resiliency in its own way. Today, resiliency can be implemented and managed through framework capabilities or platform capabilities. Polly and Resilience4j are good examples of addressing resiliency at the application level. In contrast, Istio is a good example of addressing resiliency at the platform level (Istio, of course, can do much more than help with resiliency). Each of these capabilities helps implement multiple patterns, such as:

- Timeouts

- Circuit Breakers

- Exponential Backoff Retries

- Fallback

- Rate Limiting

- Bulkheads
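To make a few of these patterns concrete, here is a minimal Python sketch (not the API of Polly or Resilience4j) that combines exponential backoff retries with a fallback. It uses "full jitter", picking a random delay up to a capped exponential ceiling so that many clients retrying at the same time do not hit the dependency in lockstep. The function name, parameters, and defaults are illustrative assumptions.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    call: Callable[[], T],
    fallback: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Try `call` up to `max_attempts` times, then return `fallback()`."""
    rng = rng or random.Random()
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception:
            if attempt == max_attempts - 1:
                break  # retries exhausted: fall back instead of raising
            # Full jitter: wait a random time in [0, min(max_delay, base * 2^attempt)].
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(rng.uniform(0, ceiling))
    return fallback()


# Usage: a dependency that fails twice and then succeeds.
failures = iter([ConnectionError("reset"), ConnectionError("reset")])


def flaky() -> str:
    err = next(failures, None)
    if err is not None:
        raise err
    return "ok"


print(retry_with_backoff(flaky, lambda: "fallback", sleep=lambda _: None))  # ok
```

Injecting `sleep` and `rng` keeps the sketch testable; in production code the defaults would be used.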

Resiliency motivations are often categorized into three common needs:

- Protecting self: the ability of the client to protect itself when its backend network dependencies are not responding appropriately. Examples of such approaches are timeouts, retries, and bulkheads.

- Protecting the backend: the ability to protect the backend (provider) when it is not responding appropriately, by not overwhelming it with further requests. Circuit breakers are the typical pattern here.

- Protecting the user experience: the ability to degrade gracefully, so that the impact of service degradation is not felt, or is felt only minimally. This can be achieved through intelligent fallbacks for timeouts, retries, and circuit breakers.
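The first and third needs often meet in one place: a client-side timeout that stops waiting on a slow dependency and returns a fallback. A minimal Python sketch follows; the function name and fallback values are hypothetical. Where the HTTP client you use accepts a timeout directly (for example, `urllib.request.urlopen(url, timeout=...)`), that is usually preferable, because this sketch only stops the caller from waiting and does not cancel the slow work.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout
from typing import Callable, TypeVar

T = TypeVar("T")

# Created once per process (in Lambda: once per execution environment).
_POOL = ThreadPoolExecutor(max_workers=4)


def call_with_timeout(call: Callable[[], T], fallback: Callable[[], T], timeout_s: float) -> T:
    """Wait at most `timeout_s` seconds for `call`; otherwise return `fallback()`."""
    future = _POOL.submit(call)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        # Protecting self: stop waiting on a slow dependency.
        # The worker thread keeps running `call` until it finishes on its own.
        return fallback()
    except Exception:
        # Protecting the user experience: degrade instead of surfacing the error.
        return fallback()


def slow_dependency() -> str:
    time.sleep(0.5)
    return "fresh result"


print(call_with_timeout(slow_dependency, lambda: "cached result", timeout_s=0.1))  # cached result
```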

While the container-based cloud-native world is mature in leveraging resiliency capabilities (frameworks and platforms), serverless applications still have some unanswered questions, due to how serverless is fundamentally implemented: a pay-as-you-go model with on-demand initialization and invocation. The rest of this article focuses on those aspects.

Serverless Resiliency

As teams build complex cloud-native products and services using serverless technologies like Lambda, establishing standard approaches to ensure resilience in a distributed ecosystem becomes a key consideration.

Making serverless services resilient is critical because Lambda functions are charged based on their configured memory (which also determines the CPU allocation) and the duration of each invocation. Without appropriate resiliency, our Lambda functions could run longer than required, could overwhelm backend services that are unavailable or degraded, and could leave the clients that invoke them without an immediate fallback response.
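The cost argument can be made concrete with a little arithmetic. The sketch below computes only the GB-seconds of compute an invocation consumes (configured memory in GB multiplied by the billed duration, which Lambda rounds up to the nearest millisecond); it leaves out per-request charges and prices, which vary by region and architecture. The 512 MB function and its durations are hypothetical.

```python
import math


def billed_gb_seconds(memory_mb: int, duration_ms: float) -> float:
    """Compute consumed by one invocation, in GB-seconds."""
    billed_ms = math.ceil(duration_ms)  # duration is billed in 1 ms increments
    return (memory_mb / 1024) * (billed_ms / 1000)


# Hypothetical: a call to a degraded backend either hangs until a 10 s
# timeout, or the function fails fast (or falls back) after 200 ms.
hanging = billed_gb_seconds(512, 10_000)
fail_fast = billed_gb_seconds(512, 200)
print(hanging, fail_fast)  # 5.0 0.1
```

At the same request volume, the hanging variant consumes 50 times more compute, before even counting the extra load it keeps on the struggling backend.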

We will explore ways to make consuming serverless components good clients that do not overwhelm providers facing issues such as service degradation.

AWS Lambda functions can be invoked synchronously or asynchronously, and how they can be made resilient differs between the two invocation types.

The approaches change based on whether the solution is deployed in a single region vs. multiple regions. There is also a dependency on where the provider service is deployed, e.g., on AWS (same or another region) or outside AWS.

We will also identify ways to ensure that warm-start invocations do not carry forward issues that occurred during cold-start initialization.
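Code at module level in a Lambda function runs once per execution environment (on a cold start), and its results are reused by warm invocations, so a half-failed initialization can be carried forward indefinitely. One way to avoid this, sketched below in Python under the assumption that initialization can be retried safely, is to initialize lazily and never cache a failed or invalidated result. `LazyResource`, the factory, and the endpoint are hypothetical names.

```python
import threading
from typing import Callable, Generic, Optional, TypeVar

T = TypeVar("T")


class LazyResource(Generic[T]):
    """Create a resource once per execution environment, but never keep a failure."""

    def __init__(self, factory: Callable[[], T]) -> None:
        self._factory = factory
        self._value: Optional[T] = None
        self._lock = threading.Lock()

    def get(self) -> T:
        with self._lock:
            if self._value is None:
                # If the factory raises, _value stays None, so the next warm
                # invocation retries initialization instead of reusing a broken state.
                self._value = self._factory()
            return self._value

    def invalidate(self) -> None:
        """Forget the cached value, e.g. after it proved unusable at call time."""
        with self._lock:
            self._value = None


# Module level: reused across warm invocations of the same environment.
# The factory stands in for building an SDK client or loading configuration.
settings = LazyResource(lambda: {"endpoint": "https://backend.example.com"})


def handler(event, context):
    try:
        endpoint = settings.get()["endpoint"]
    except Exception:
        settings.invalidate()  # do not let a bad value survive into later invocations
        raise
    return {"statusCode": 200, "body": endpoint}
```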

The overall objective is to reduce Lambda functions' execution time and memory consumption by optimizing them before deployment.

We will separately look at Lambda services serving synchronous and asynchronous requests.

Examples of AWS services that invoke Lambda synchronously:

- Amazon API Gateway

- Amazon Cognito

- Amazon Alexa

- Amazon Lex

- Amazon CloudFront

Examples of AWS services that invoke Lambda asynchronously:

- Amazon Simple Storage Service (Amazon S3)

- Amazon Simple Notification Service (Amazon SNS)

- Amazon Simple Email Service (Amazon SES)

- AWS CloudFormation

- Amazon CloudWatch Logs

- Amazon CloudWatch Events

- AWS CodeCommit

- AWS Config

This series will look at several options to address these scenarios.

Pattern 1: Lambda Synchronous Invocation and Circuit State Validated by Invoking Lambda

In this option, let's consider Lambda functions serving synchronous requests through API Gateway. Here, the Lambda function checks the circuit state of the external service before invoking it. While the circuit is open, a fallback Lambda is invoked instead. While the external service is recovering (half-open), we send a fraction of the requests to the external service and the remainder to the fallback Lambda.

Let's walk through how this option works.

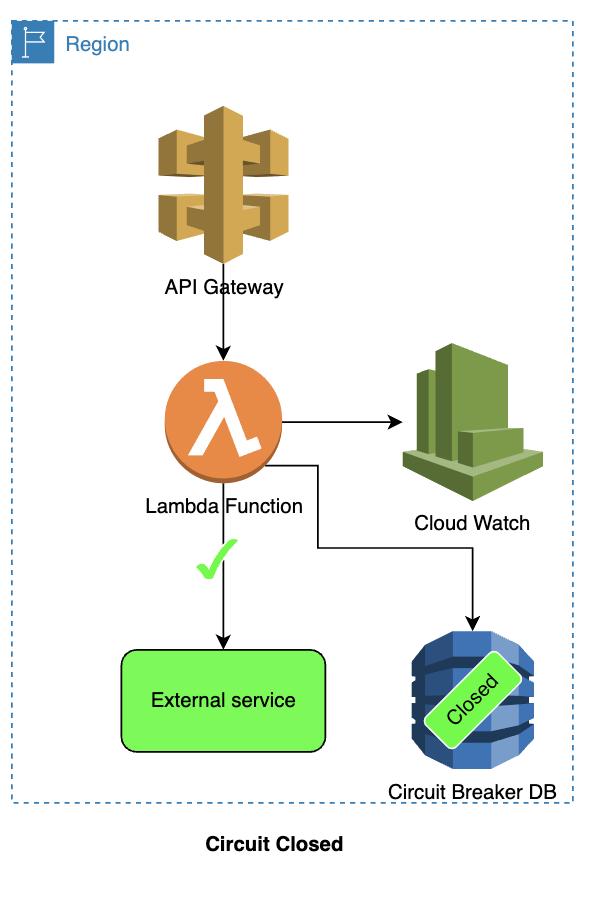

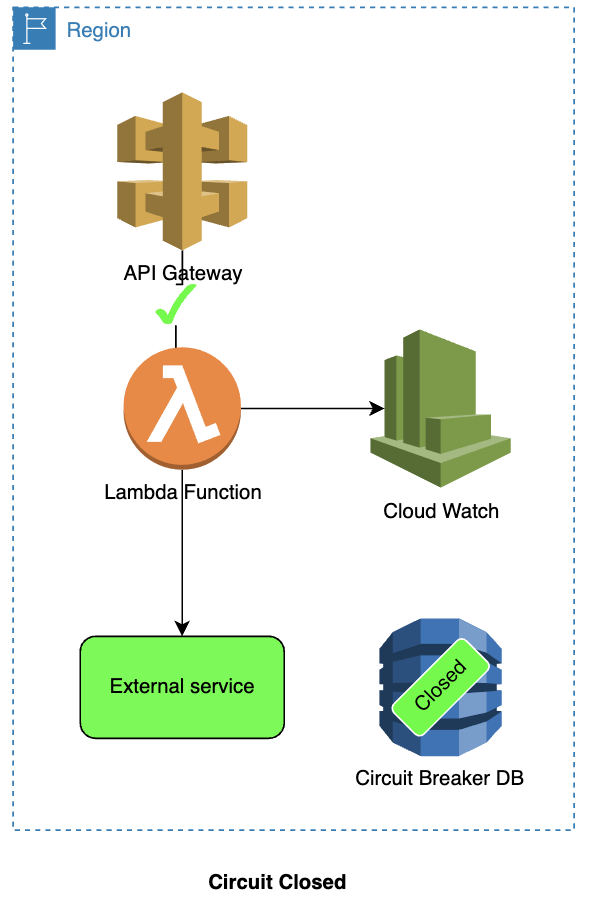

Circuit is closed:

- The API Gateway calls the Lambda function.

- Lambda function checks the status of the circuit (which is closed) from DynamoDB.

- Lambda function calls the external service.

- The calls to the external service are observed using CloudWatch (based on errors and error rates).
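One way to give CloudWatch the error data it needs without adding latency to each request is the CloudWatch Embedded Metric Format (EMF): the Lambda function prints a structured JSON log line, and CloudWatch Logs extracts metrics from it. A minimal Python sketch follows, in which the namespace, dimension, and metric names are hypothetical; a CloudWatch alarm on the `Errors` metric (or on an error rate computed with metric math) could then drive the circuit transitions in this pattern.

```python
import json
import time


def emf_line(service: str, success: bool, latency_ms: float) -> str:
    """Build one EMF log line recording the outcome of a call to `service`."""
    return json.dumps({
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # epoch milliseconds
            "CloudWatchMetrics": [{
                "Namespace": "ExternalServiceCircuit",
                "Dimensions": [["Service"]],
                "Metrics": [
                    {"Name": "Errors", "Unit": "Count"},
                    {"Name": "Latency", "Unit": "Milliseconds"},
                ],
            }],
        },
        # Dimension and metric values sit at the top level of the document.
        "Service": service,
        "Errors": 0 if success else 1,
        "Latency": latency_ms,
    })


# Inside the handler, after calling the external service:
print(emf_line("payments-api", success=False, latency_ms=1234.5))
```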

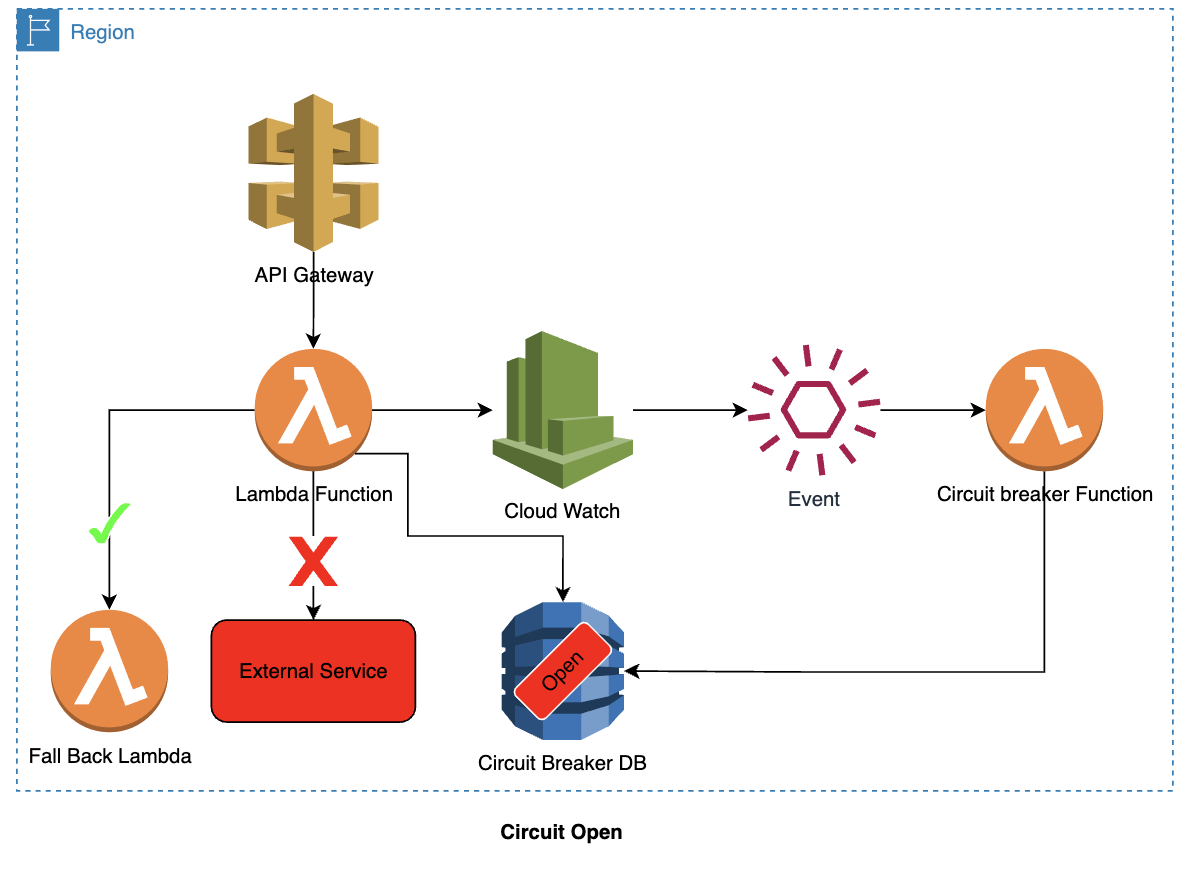

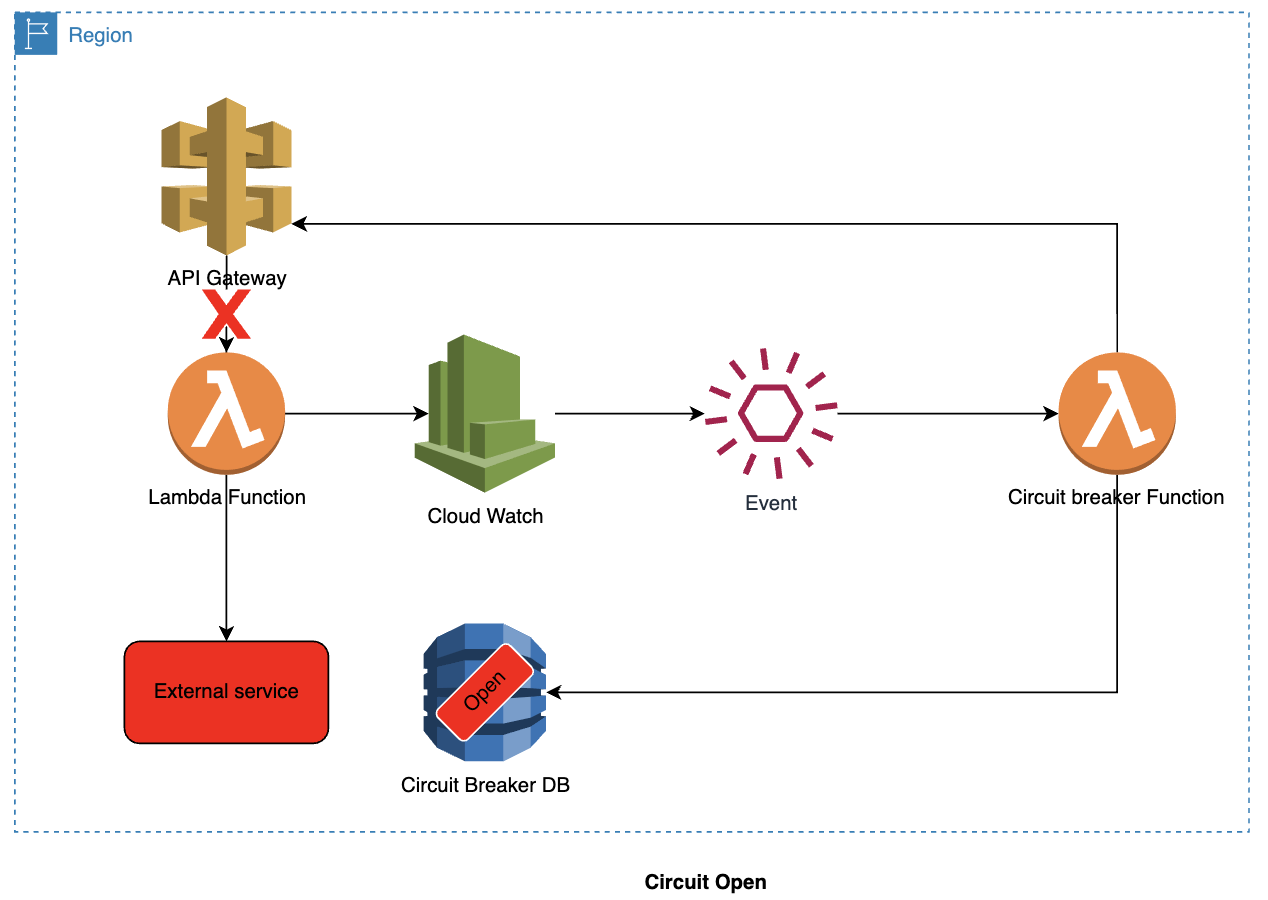

Circuit switches to open:

- Now, let's say there are issues with the external service.

- A new request comes in through the API Gateway, which then calls the Lambda function.

- Lambda function checks the status of the circuit (which is still closed) in DynamoDB.

- Lambda function calls the external service.

- The calls to the external service are observed using CloudWatch.

- CloudWatch identifies the issues (failure/error/error code) and raises an alarm/event.

- The event source configuration in CloudWatch triggers the Circuit Breaker Lambda.

- The Circuit Breaker Lambda creates an item in DynamoDB that represents the open circuit. We set the duration of the open state using the item's Time to Live (TTL).

- Circuit is now switched to an open state.
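The item written by the Circuit Breaker Lambda can be small. The Python sketch below assumes a hypothetical table keyed on `ServiceName`, with TTL enabled on a Number attribute `ExpiresAt` (DynamoDB TTL expects a timestamp in epoch seconds); `table` is expected to be a boto3 DynamoDB `Table` resource. Note that DynamoDB removes expired items with a background process, typically within a few days of expiry rather than at the exact second, so the TTL sets a minimum open duration and the stream-driven transitions that follow may lag behind it.

```python
import time
from typing import Any, Dict, Optional


def open_circuit_item(service: str, open_seconds: int, now: Optional[float] = None) -> Dict[str, Any]:
    """Item representing an open circuit that expires after `open_seconds`."""
    now = time.time() if now is None else now
    return {
        "ServiceName": service,                # partition key (hypothetical schema)
        "CircuitStatus": "OPEN",
        "ExpiresAt": int(now) + open_seconds,  # TTL attribute: epoch seconds, Number type
    }


def trip_circuit(table: Any, service: str, open_seconds: int = 60) -> Dict[str, Any]:
    """Called from the Circuit Breaker Lambda when the CloudWatch alarm fires.

    `table` is e.g. boto3.resource("dynamodb").Table("CircuitState").
    put_item overwrites any existing item for the service (such as a half-open one).
    """
    item = open_circuit_item(service, open_seconds)
    table.put_item(Item=item)
    return item
```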

Circuit is open:

- A new request comes in through the API Gateway, which then calls the Lambda function.

- Lambda function checks the status of the circuit (which is open) from DynamoDB.

- As the circuit is open, the Lambda function invokes the fallback service.
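The check performed by the invoking Lambda function can be sketched in Python as below, assuming a hypothetical table layout (`ServiceName` key, `CircuitStatus` attribute) and treating a missing item as a closed circuit, as in the walkthrough. The fallback could be a local function or another Lambda function invoked synchronously, for example with `boto3.client("lambda").invoke(FunctionName=..., InvocationType="RequestResponse", Payload=...)`; the `call_external` and `call_fallback` callables are placeholders for either.

```python
from typing import Any, Callable, TypeVar

T = TypeVar("T")


def circuit_state(table: Any, service: str) -> str:
    """Return "CLOSED", "OPEN", or "HALF_OPEN" for `service`.

    `table` is a boto3 DynamoDB Table resource; a strongly consistent read
    returns the latest state written by the Circuit Breaker Lambda.
    """
    response = table.get_item(Key={"ServiceName": service}, ConsistentRead=True)
    item = response.get("Item")
    return "CLOSED" if item is None else str(item["CircuitStatus"])


def route(
    state: str,
    call_external: Callable[[], T],
    call_fallback: Callable[[], T],
    half_open_permit: Callable[[], bool] = lambda: False,
) -> T:
    """Send the request to the external service or to the fallback."""
    if state == "CLOSED":
        return call_external()
    if state == "HALF_OPEN" and half_open_permit():
        return call_external()  # one of the limited trial calls
    return call_fallback()  # OPEN, or HALF_OPEN with no trial calls left
```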

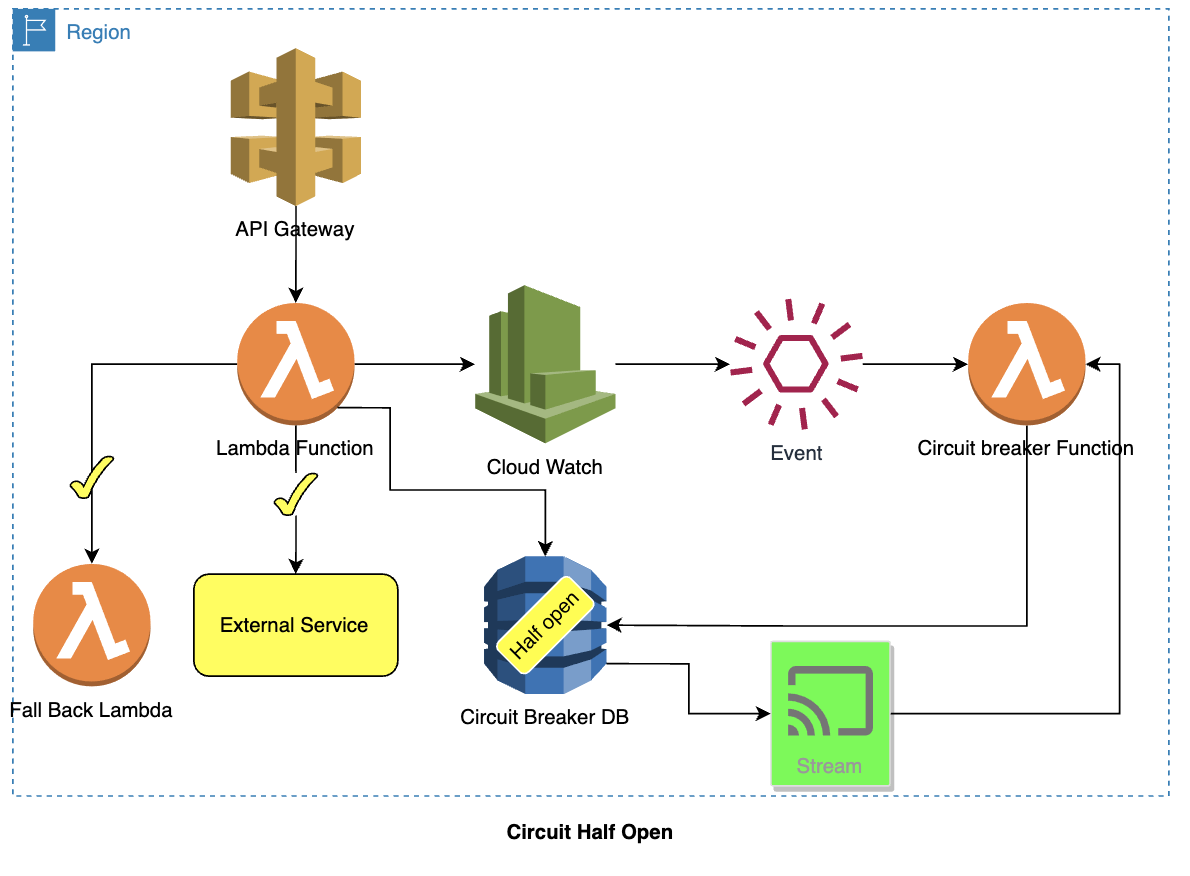

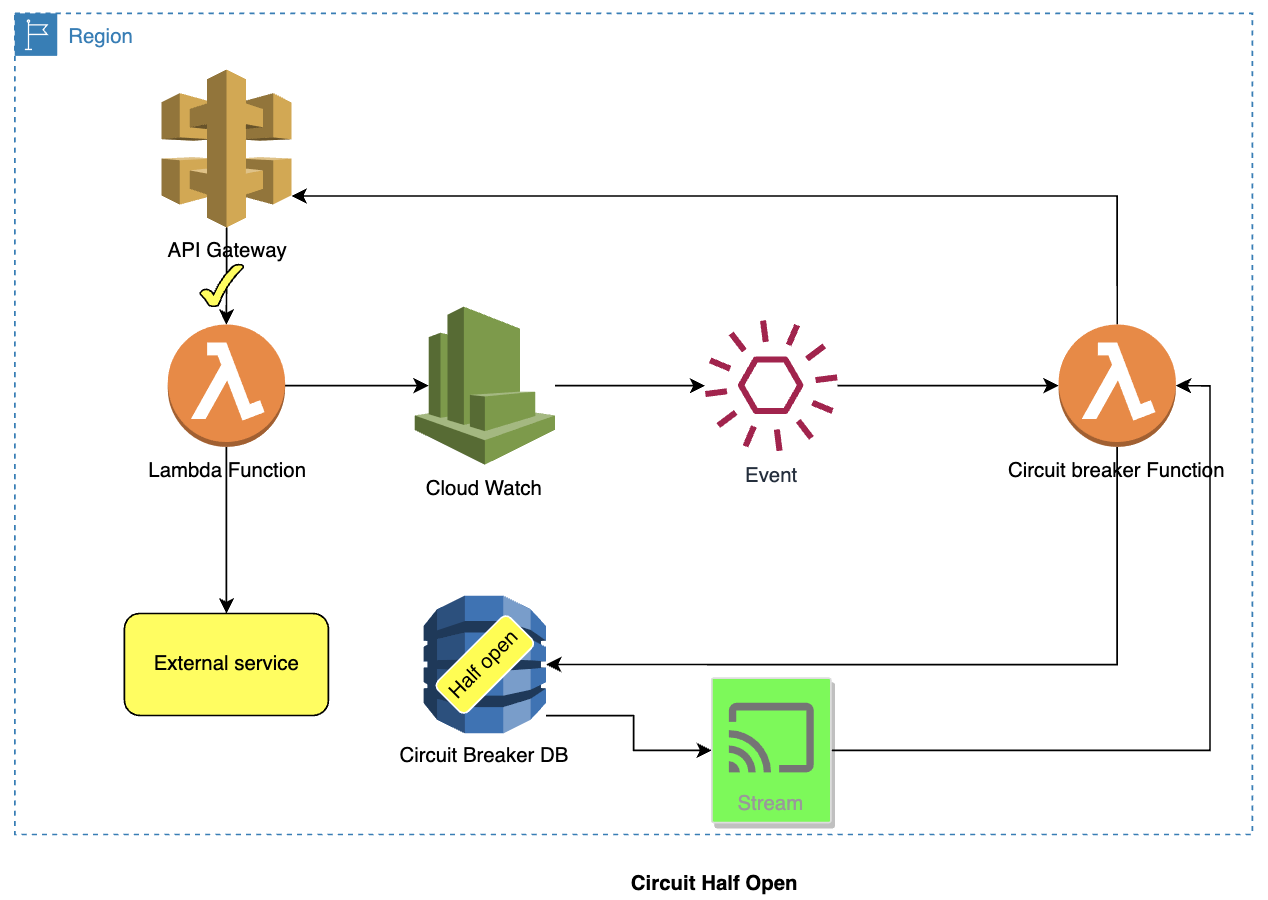

Circuit switches to half-open:

- The TTL of the item (representing the open state) in DynamoDB expires.

- The TTL expiry will result in the DynamoDB stream triggering the Circuit Breaker Lambda.

- The Lambda function creates an item in DynamoDB that represents the half-open circuit and holds an invocation limit on the external service for that period. We set the duration of the half-open state using the item's Time to Live.

- Circuit is now switched to a half-open state.
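When DynamoDB deletes an item because its TTL expired, the stream record is a `REMOVE` event whose `userIdentity` has `type` "Service" and `principalId` "dynamodb.amazonaws.com", which lets the Circuit Breaker Lambda ignore deletions made by anything else. The Python sketch below assumes the stream is configured with old images (`OLD_IMAGE` or `NEW_AND_OLD_IMAGES`) and a hypothetical schema (`ServiceName`, `CircuitStatus`, `InvocationLimit`, `ExpiresAt`). It handles both the open-to-half-open transition and the later half-open-to-closed transition, where closing simply means writing no new item. The limit, duration, and table name are hypothetical.

```python
import time
from typing import Any, Dict, List

HALF_OPEN_SECONDS = 120          # hypothetical half-open window
HALF_OPEN_INVOCATION_LIMIT = 10  # hypothetical number of trial calls


def is_ttl_delete(record: Dict[str, Any]) -> bool:
    """True for stream records produced by DynamoDB's TTL process."""
    identity = record.get("userIdentity") or {}
    return (
        record.get("eventName") == "REMOVE"
        and identity.get("type") == "Service"
        and identity.get("principalId") == "dynamodb.amazonaws.com"
    )


def next_items(records: List[Dict[str, Any]], now: float) -> List[Dict[str, Any]]:
    """Items the Circuit Breaker Lambda should write in response to TTL expiries."""
    items = []
    for record in records:
        if not is_ttl_delete(record):
            continue
        old = record["dynamodb"]["OldImage"]  # stream images use typed values: {"S": ...}
        if old["CircuitStatus"]["S"] == "OPEN":
            items.append({
                "ServiceName": old["ServiceName"]["S"],
                "CircuitStatus": "HALF_OPEN",
                "InvocationLimit": HALF_OPEN_INVOCATION_LIMIT,
                "ExpiresAt": int(now) + HALF_OPEN_SECONDS,
            })
        # An expired HALF_OPEN item needs no new item: no item means CLOSED.
    return items


def handler(event: Dict[str, Any], context: Any) -> None:
    import boto3  # bundled with the Lambda Python runtime

    table = boto3.resource("dynamodb").Table("CircuitState")  # hypothetical table name
    for item in next_items(event["Records"], time.time()):
        table.put_item(Item=item)
```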

Circuit is half-open:

- A new request comes in through the API Gateway, which then calls the Lambda function.

- Lambda function checks the status of the circuit (which is half-open) from DynamoDB.

- Because the circuit is half-open, the Lambda function checks whether the invocation limit has been reached. If it has, the Lambda function calls the fallback service. If it has not, the Lambda function decrements the invocation limit and then calls the external service.

- If CloudWatch identifies any issues (failure/error/error code), it raises an alarm/event, which invokes the Circuit Breaker Lambda to re-open the circuit.
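Checking and decrementing the invocation limit needs to be atomic; otherwise, concurrent invocations could all see a remaining budget and together exceed it. A DynamoDB conditional update does both in one request. The Python sketch below assumes hypothetical attribute names (`ServiceName`, `CircuitStatus`, `InvocationLimit`); `table` is a boto3 DynamoDB `Table` resource, and the stand-in `ClientError` class exists only so the sketch runs where botocore is not installed.

```python
from typing import Any

try:
    from botocore.exceptions import ClientError
except ImportError:  # stand-in so the sketch runs without botocore installed
    class ClientError(Exception):  # type: ignore[no-redef]
        def __init__(self, error_response: dict, operation_name: str) -> None:
            super().__init__(f"{operation_name}: {error_response}")
            self.response = error_response


def try_acquire_trial_call(table: Any, service: str) -> bool:
    """Atomically spend one half-open trial call; False means "use the fallback".

    The condition also fails when the item no longer exists or is no longer
    HALF_OPEN; the caller then uses the fallback for that request, erring on
    the side of caution.
    """
    try:
        table.update_item(
            Key={"ServiceName": service},
            UpdateExpression="SET InvocationLimit = InvocationLimit - :one",
            ConditionExpression="CircuitStatus = :half_open AND InvocationLimit > :zero",
            ExpressionAttributeValues={":one": 1, ":zero": 0, ":half_open": "HALF_OPEN"},
        )
        return True
    except ClientError as err:
        if err.response.get("Error", {}).get("Code") == "ConditionalCheckFailedException":
            return False
        raise  # throttling, permissions, etc. are real errors
```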

Circuit switches to closed:

- Let's say the external service handled the requests as per the service objective.

- The TTL of the item (representing the half-open state) in DynamoDB expires.

- The TTL expiry will result in the DynamoDB stream triggering the Circuit Breaker Lambda.

- The Circuit Breaker Lambda can now either increase the invocation limit or switch the circuit to closed. Here, let's consider a design in which the circuit is closed.

- Circuit is now switched to a closed state.

- The invoking Lambda function resumes the normal behavior expected when the circuit is closed.

If issues are identified repeatedly, different approaches can be used to set the duration of the open/half-open state. The duration can be based on exponential backoff, random jitter, or both.
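A minimal Python sketch of such a duration calculation follows, assuming the Circuit Breaker Lambda tracks how many times in a row the circuit has tripped (for example, in a hypothetical counter attribute on the item). It doubles the duration per consecutive trip up to a cap and applies "equal jitter": half of the duration is fixed and the other half random, so the circuit stays open for a guaranteed minimum while breakers for many services do not all expire in lockstep. The base and cap values are assumptions.

```python
import random
from typing import Optional


def open_duration_seconds(
    consecutive_trips: int,
    base_seconds: int = 30,
    max_seconds: int = 900,
    rng: Optional[random.Random] = None,
) -> int:
    """TTL for the open state: capped exponential backoff with equal jitter."""
    rng = rng or random.Random()
    backoff = min(max_seconds, base_seconds * 2 ** max(0, consecutive_trips - 1))
    half = backoff // 2
    return half + rng.randint(0, backoff - half)  # in [backoff // 2, backoff]


ttl_seconds = open_duration_seconds(consecutive_trips=3)  # somewhere in [60, 120]
```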

When may this approach be applicable?

- This approach works for synchronous services.

- It is acceptable from a business standpoint to function with reduced functionality.

- There is a fallback service, which can be an alternative implementation with full or reduced functionality.

- The Lambda function is aware of the circuit breaker and checks the state before invoking the external service.

Pattern 2: Lambda Synchronous Invocation and Circuit State Validated by API Gateway and Leveraging Gateway Throttling Limit

In this option, let's consider Lambda functions serving synchronous requests through API Gateway. Here, API Gateway throttles invocations of the Lambda function based on the status of the external service.

Circuit is closed:

- The API Gateway calls the Lambda function.

- Lambda function calls the external service.

- The calls to the external service are observed using CloudWatch (based on errors and error rates).

Circuit switches to open:

- Now, let's say there are issues with the external service.

- A new request comes in through the API Gateway, which then calls the Lambda function.

- Lambda function calls the external service.

- The calls to the external service are observed using CloudWatch.

- CloudWatch identifies the issues (failure/error/error code) and raises an alarm/event.

- The event source configuration in CloudWatch triggers the Circuit Breaker Lambda.

- The Circuit Breaker Lambda creates an item in DynamoDB that represents the open circuit; the duration of the open state is set using the item's Time to Live (TTL). The Lambda then uses the AWS SDK to set the API Gateway throttling limit to zero.

- Circuit is now switched to an open state.
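The throttling update can be sketched in Python with boto3. This assumes a REST API, where `update_stage` accepts RFC 6902-style patch operations and the path `/*/*/throttling/rateLimit` (and `.../burstLimit`) applies to every method in the stage; HTTP APIs use the `apigatewayv2` client instead, whose `update_stage` takes route settings such as `ThrottlingRateLimit` and `ThrottlingBurstLimit`. The same helper can serve the open (zero), half-open (partial), and closed (original) states. The API ID, stage name, and limits are placeholders, and it is worth verifying in a test stage how your API behaves with a zero limit.

```python
from typing import Any, Dict, List


def throttle_patch(rate_limit: float, burst_limit: int) -> List[Dict[str, str]]:
    """Patch operations setting stage-wide method throttling (all resources, all methods)."""
    return [
        {"op": "replace", "path": "/*/*/throttling/rateLimit", "value": str(rate_limit)},
        {"op": "replace", "path": "/*/*/throttling/burstLimit", "value": str(burst_limit)},
    ]


def set_stage_throttling(client: Any, rest_api_id: str, stage_name: str,
                         rate_limit: float, burst_limit: int) -> None:
    """`client` is boto3.client("apigateway"); patch values must be strings."""
    client.update_stage(
        restApiId=rest_api_id,
        stageName=stage_name,
        patchOperations=throttle_patch(rate_limit, burst_limit),
    )


# Hypothetical (rate, burst) limits for each circuit state of one stage:
OPEN = (0, 0)        # reject every request
HALF_OPEN = (5, 5)   # let a trickle of trial requests through
CLOSED = (100, 200)  # the stage's original limits
# set_stage_throttling(boto3.client("apigateway"), "a1b2c3d4e5", "prod", *OPEN)
```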

Circuit is open:

- A new request comes in through the API Gateway.

- As the throttling limit is set to zero, API Gateway does not call the Lambda function. Instead, it returns a throttling error (HTTP 429 Too Many Requests) to the invoking client.

Circuit switches to half-open:

- The TTL of the item (representing the open state) in DynamoDB expires.

- The TTL expiry will result in the DynamoDB stream triggering the Circuit Breaker Lambda.

- The Lambda function creates an item in DynamoDB that represents the half-open circuit and sets a partial throttling limit on API Gateway for that period. The duration is set using the item's Time to Live.

- Circuit is now switched to a half-open state.

Circuit is half-open:

- A new request comes in through the API Gateway.

- If the throttling limit is reached, API Gateway does not call the Lambda function and returns an error to the invoking client. If the throttling limit is not reached, API Gateway calls the Lambda function.

- If CloudWatch identifies any issues (failure/error/error code), it raises an alarm/event, which invokes the Circuit Breaker Lambda to re-open the circuit.
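API Gateway documents that it throttles requests using the token bucket algorithm, with the burst limit roughly corresponding to the bucket size and the rate limit to how fast it refills. The simplified, single-process Python model below (not API Gateway's actual implementation) shows why a small partial limit lets only a trickle of trial requests through in the half-open state, and why a limit of zero rejects everything. The limits in the usage example are hypothetical.

```python
class TokenBucket:
    """Refills `rate` tokens per second up to `burst`; each request spends one token."""

    def __init__(self, rate: float, burst: int) -> None:
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # start full
        self.last = 0.0             # time of the previous check, in seconds

    def allow(self, now: float) -> bool:
        # Refill for the elapsed time, never beyond the bucket size.
        self.tokens = min(float(self.burst), self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # API Gateway would answer 429 Too Many Requests here


# Half-open: 2 requests/second with a burst of 2.
half_open = TokenBucket(rate=2.0, burst=2)
print([half_open.allow(now=t) for t in (0.0, 0.0, 0.0, 0.5, 0.5)])
# [True, True, False, True, False]

# Open: a zero limit never admits a request.
print(TokenBucket(rate=0.0, burst=0).allow(now=10.0))  # False
```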

Circuit switches to closed:

- Let's say the external service handled the requests as per the service objective.

- The TTL of the item (representing the half-open state) in DynamoDB expires.

- The TTL expiry will result in the DynamoDB stream triggering the Circuit Breaker Lambda.

- The Circuit Breaker Lambda can now either increase the API Gateway throttling limit or switch the circuit to closed by restoring the throttling limit to its original value. Here, let's consider a design in which the circuit is closed. The throttling limit is updated using the API Gateway SDK.

- Circuit is now switched to a closed state.

- The Lambda function resumes the normal behavior expected when the circuit is closed.

When may this approach be applicable?

- This approach works for synchronous services.

- When the circuit is open, the Lambda function is not invoked, so the cost of Lambda invocations is avoided.

- It is acceptable from a business standpoint to operate without a fallback, relying only on the functionality provided by the Lambda function and the external service.

- It is acceptable to throw throttling errors to the consuming client.

- The Lambda function is not aware of the circuit breaker.


Opinions expressed by DZone contributors are their own.