A data quality strategy must begin with a clearly defined business need or impact goal. Like any other strategic undertaking, it is grounded in two key premises:

- Awareness: Poor data quality poses a direct barrier to achieving business objectives.

- Company-wide: Data quality is not just a technical challenge but also an organization-wide concern with far-reaching implications for decision making, compliance, operational efficiency, and customer satisfaction.

By acknowledging these foundational truths, organizations can establish a focused framework that ensures data quality efforts are both impactful and sustainable. An effective data quality strategy requires a structured approach. The following steps outline a framework to define and implement a strategy for ensuring high-quality data.

Step One: Obtaining Business Leader Support

The first step in obtaining support for a data quality strategy is to articulate a clear and quantifiable business case. For example, a company is experiencing low conversion rates in their sales pipeline due to poor data quality.

- Problems to solve:

- Multiple duplicate entries for the same company (often with slight variations in name)

- Missing firmographic data (e.g., industry sector, revenue, employee count)

- Business goal/success metrics:

- Increase revenue by 10%. By reducing the time wasted on poor-quality leads, sales teams can focus on more qualified prospects and close deals faster.

- Reduce duplicate records by 60% (uniqueness). A tangible indicator of improved data hygiene that ensures consistent, accurate company information in the CRM system.

By presenting a clear problem (poor conversion), a measurable goal (increase revenue, reduce duplicates), and outlining how improved data quality can directly address these challenges, you build a strong business case that resonates with leadership and secures the support necessary to drive a successful data quality initiative.

Step Two: Perform a Data Quality Audit

Before defining a data quality strategy, it is crucial to understand the current state of data health. A data quality audit helps assess the accuracy, completeness, consistency, and reliability of the data within an organization. This step provides insights into critical gaps and areas requiring immediate improvement.

The key objectives of the audit are to:

- Identify data issues and assess their impact on business goals

- Prioritize areas for improvement based on risk and business criticality

- Establish a baseline for measuring and tracking future data quality improvements

This audit serves as the foundation for the next steps in the data quality strategy — a clear understanding of current data health and key problem areas to ensure that efforts are focused on the most critical areas.

Identify data sources:

- Determine where the data resides (e.g., databases, data warehouses, cloud storage, external systems via APIs)

- Identify the data entry points (e.g., applications, data pipelines, APIs)

- Identify data owners who are responsible for maintaining and updating the data

- Define the business purpose of each dataset and the stakeholders (e.g., operational, reporting, customer engagement, compliance)

Assess data freshness:

- Determine whether the data is available when needed for decision making or operations

- Measure data latency: Is the data updated in real time, hourly, daily, or less frequently?

Analyze data structure and format:

- Identify the most relevant data for key business processes

- Define the expected format and structure for each critical dataset (e.g., required fields, data types, validation rules)

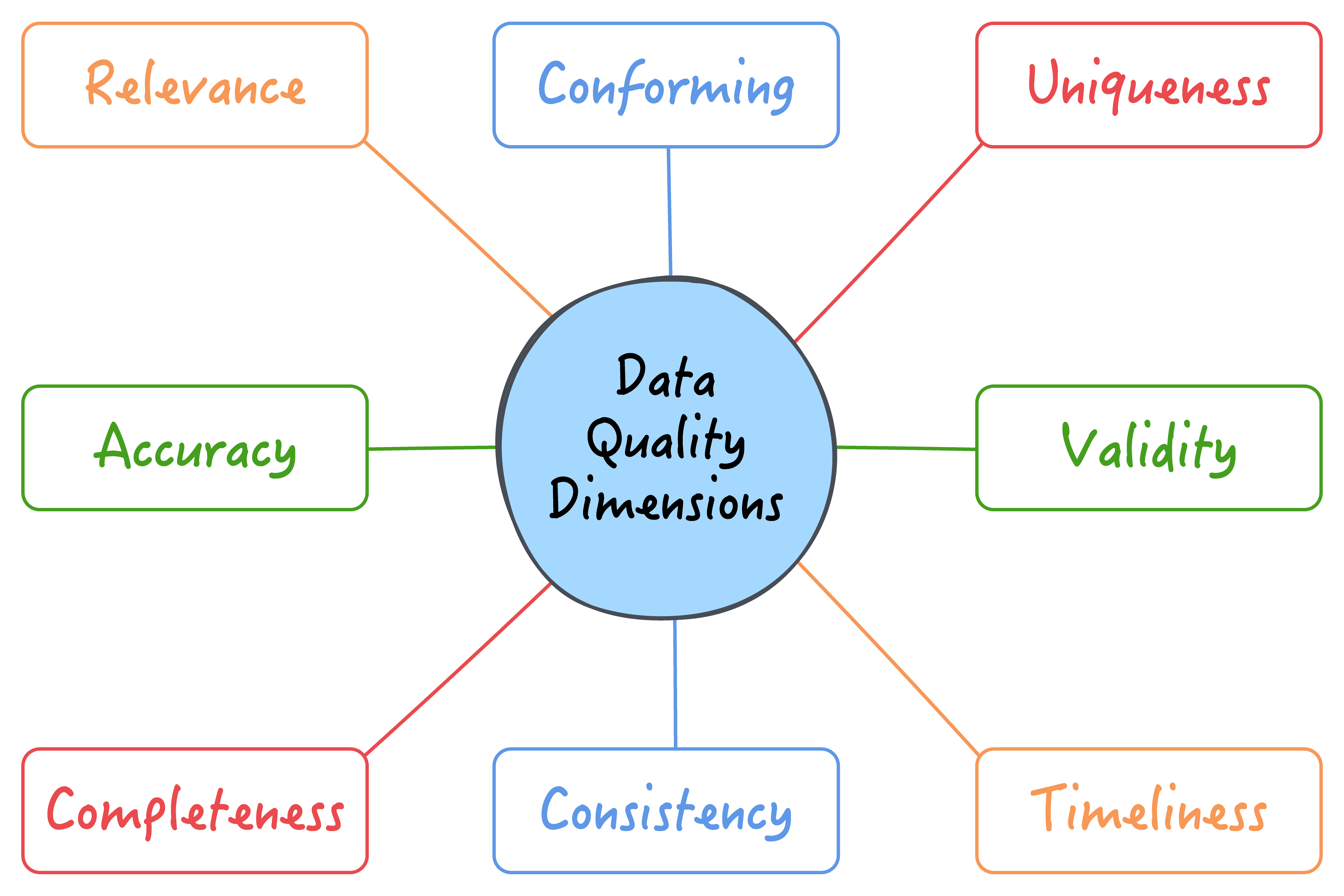

Define data quality measurement criteria:

- Accuracy – Are data values correct? (e.g., email validation, postal address verification)

- Completeness – Are required fields populated? (e.g., missing customer phone numbers)

- Consistency – Is data uniform across sources? (e.g., a customer's name matches across systems)

- Uniqueness – Are there duplicate records? (e.g., multiple entries for the same customer)

- Timeliness – Is data up to date? (e.g., last modified timestamps)

- Integrity – Are there broken relationships or missing links in relational databases?

Measure data impact and criticality scoring:

- Define a criticality scoring system that evaluates the severity of data issues based on business impact

- Consider the business case priority (e.g., if the goal is revenue growth, but a security issue arises, security may take precedence)

- Assess the percentage of data affected by quality issues to understand the scale of the problem

Determine the scope of the audit:

- Are we auditing all data sources or focusing on a subset (e.g., customer data, financial records)?

- Should we focus on specific customer segments, products, or departments?

- Will the audit cover historical data, real-time data, or both?

Use data sampling for large datasets:

- For large-scale audits, statistical sampling methods are used to analyze representative subsets

- Ensure samples are diverse and unbiased, covering different customer segments, geographies, and data sources

Benchmark against industry standards: Compare data quality metrics with industry benchmarks to assess performance relative to competitors.

Step Three: Identify Your Data Leakage Points

Next, we focus on understanding why data inconsistencies occur. By identifying the root causes of data leakage points, organizations can take targeted actions to prevent data degradation and improve overall data quality.

Some of the most common data leakage points include:

Table 1: Data leakage points

| Leakage Point |

Examples |

|

Customer-facing channels |

- Website forms – customers may input incorrect, incomplete, or inconsistent data due to lack of validation

- Call centers – agents may enter incorrect customer details or fail to update information properly

- Live chat and support interactions – data may be missing or improperly logged

|

|

Internal business processes |

- Manual data entry by employees – errors occur when data is keyed in without proper validation

- Data integration issues – problems arise when migrating or synchronizing data between systems (e.g., CRM, ERP, marketing tools)

- Duplicate data entry – same customer or transaction details being entered multiple times across different platforms

|

|

Third-party and external data sources |

- Partner or supplier data – mismatched, incomplete, or incorrect information from external sources

- Market research and lead lists – purchased datasets often contain duplicates, outdated records, or missing details

- Social media and external APIs – inconsistent or unreliable data sources feeding into internal systems

|

Data quality issues can arise from multiple sources. The most common causes include:

Table 2: Data quality issues

| ISSUE |

Examples |

|

Manual input errors |

- Typos, incomplete forms, and human mistakes during data entry

- Inconsistent formatting or missing critical details

|

|

Outdated or stale data |

- Customer contact details (email, phone, address) are not regularly updated

- Business records that become obsolete due to changes in company status

|

|

Data silos and fragmentation |

- Different departments using separate, non-integrated systems, leading to data inconsistency

- Inability to synchronize customer information across CRM, ERP, and marketing automation tools

|

|

Lack of standardization |

- No predefined naming conventions or data formatting rules (e.g., phone numbers stored in different formats across systems)

- Different teams using inconsistent taxonomies for products, customers, or transactions

|

|

Insufficient data validation and governance |

- Weak or missing validation checks when capturing data (e.g., missing required fields, incorrect email syntax)

- No real-time verification mechanisms to detect errors at the point of entry

|

|

Issues with third-party data sources |

- External data providers supplying inaccurate, duplicated, or outdated records.

- Incomplete or mismatched data during third-party data integrations

|

Organizations can take proactive measures to prevent future data quality issues by identifying where and why data inconsistencies occur. Addressing data leakage points early in the process helps maintain accurate, reliable, and high-quality data, supporting better decision making and business efficiency.

Step Four: Define a Data Quality Strategy

After identifying data inconsistencies and leakage points, it is essential to establish a structured plan for managing, monitoring, and improving data quality. A well-defined data quality strategy should incorporate techniques for data validation, cleansing, standardization, matching, and continuous monitoring to ensure data integrity across all business processes.

Data Parsing and Standardization

Ensure that data conforms to predefined standards and is formatted consistently across all systems. A clear example is customer contact data (e.g., telephone numbers, email addresses, postal addresses); we must ensure that they conform to predefined formats for effective communication.

Example Use Case: Phone Number Standardization and Verification in a CRM Tool

Sales representatives cannot contact potential customers because their phone numbers are incorrectly formatted in the CRM system. Some numbers lack country codes, and others have inconsistent spaces or special characters, making them unusable for auto-dialers or instant messaging campaigns.

Solution:

- Implement a phone number parser to analyze and correct formatting inconsistencies

- Standardize phone numbers according to E.164 international standards, ensuring compatibility with dialers

- Example transformation:

- Input: +1-8000123456, (800)012-3456, 8000123456

- Parsed output: +1 (800) 012-3456 (standardized and ready for use)

- Verify that the mobile or landline numbers are active and able to receive calls

- Automate this process upon data entry or import to ensure uniformity across all customer records

Generalized Data Cleansing

Ensure that data is accurate, standardized, and error-free by removing errors and inconsistencies to meet data quality requirements. Some of the common mistakes that occur with the information stored in CRM systems are as follows:

- Misspelled or incorrectly formatted customer names, making outreach impersonal

- Inconsistent email case formatting, causing email automation errors

- Abbreviated or misspelled company names, creating duplicate accounts and confusion

- Inconsistent use of punctuation in addresses, leading to incorrect mailings

- Unstandardized phone numbers, preventing automated dialers from functioning properly

Example Use Case: Company Name Standardization and Duplicate Removal in a CRM Tool

Inconsistent company name abbreviations lead to duplicate records. Sales reps unknowingly contact the same company multiple times, creating a poor customer experience.

- IBM Corporation vs. International Business Machines (same company, different formats)

- Microsoft.Corp vs. Microsoft Corp (punctuation inconsistency)

- Go0gle vs. Google (typo due to OCR error)

Solution:

- Create a standardized company name list or maintain commonly abbreviated company names by using external databases (e.g., LinkedIn, official company registries). When a new company name is entered into the CRM tool, a script checks against the reference list and replaces the long form with the standard abbreviation.

- Input: "International Business Machines Corporation"

- Lookup: Exists in reference list as "IBM"

- Output: "IBM" (standardized)

- Apply abbreviation standardization (e.g., always use "IBM" instead of "International Business Machines")

- Remove unnecessary punctuation:

- Microsoft.Corp → Microsoft Corp

- Use fuzzy matching to detect close matches based on similarity score and merge duplicate company records

Table 3: Matching process

| Company Name (Record 1) |

Company Name (Record 2) |

Match Score |

Action |

|

IBM |

International Business Machines |

98% |

Merge to "IBM" |

|

Microsoft Corp |

Microsoft.Corp |

90% |

Merge to "Corp" |

|

Coca-Cola Co. |

Cola |

70% |

Needs manual review |

Data Validation and Verification

Data validation ensures that newly entered or imported data adheres to predefined rules before it is stored. This prevents incorrect, incomplete, or illogical data from entering the CRM tool, reducing the likelihood of errors in sales forecasting, customer communication, and reporting.

Table 4: Validation rules

| Validation Type |

Description |

Example |

|

Format validation |

Ensures data follows a predefined structure |

"Email" must have @domain.com format (e.g., jane.doe@email.com) |

|

Type validation |

Ensures data is entered in the correct format (e.g., numeric, text) |

Phone number should only contain numbers (e.g., +1 800-555-1234) |

|

Mandatory field validation |

Ensures critical fields are not left empty |

A new lead record must contain "First Name," "Last Name," "Email," and "Company" |

|

Range validation |

Ensures numeric values fall within an expected range |

"Lead Score" must be between 0 and 100 |

|

Cross-field validation |

Ensures data fields are logically consistent |

If "Country" = "USA," then "State" should be a valid U.S. state |

|

Uniqueness validation |

Ensures duplicate records are not created |

A lead's email should not already exist in the CRM |

|

Timeliness validation |

Ensures data is updated regularly and is not outdated |

The "Last Contacted Date" should not be more than six months old |

While validation ensures data meets internal rules, data verification ensures data matches real-world sources. This process helps remove fake, outdated, or incorrect information from the CRM system.

Table 5: Verification rules

| Validation Type |

Description |

Example |

|

Address verification |

Checks if an address is valid and deliverable |

"1234 Street Name Road, Atlanta" is validated against the USPS postal database |

|

Phone number verification |

Ensures phone numbers are live and callable |

Phone numbers are checked against telecom databases to confirm validity |

|

Email verification |

Ensures email addresses exist and can receive messages |

Use an email verification service |

|

Duplicate record matching |

Ensures the same lead or customer is not entered multiple times |

If "Jane Doe - jane.doe@gmail.com" exists, a duplicate is flagged before entry |

To automate and enhance data validation, companies can use AI-driven rules for monitoring and cleansing data at scale.

Table 6: AI data validation and verification

| Rule Type |

Description |

Example |

|

Uniqueness rules |

AI detects duplicate lead entries across multiple data sources |

A sales rep enters "Mike Johnson - mike@xyz.com," but AI detects a similar record and flags it for review |

|

Validity rules and drift detection |

AI monitors fields for inconsistencies over time |

If "Industry" had 10 categories last month but now has 50, AI alerts the team about data drift |

|

Timeliness rules |

AI flags outdated records and automatically updates missing fields |

A lead hasn't been contacted in six months, so AI suggests an outreach |

|

Accuracy monitoring |

AI automatically verifies numeric values in lead scoring, revenue, and other key metrics |

If the "Lead Score" suddenly fluctuates from 10 to 95, AI checks whether this is an error or a real trend |

To fully integrate validation and verification into a CRM system, businesses can implement automated workflows that:

- Perform validation checks on data entry

- Trigger verification processes (API calls, database queries)

- Automatically correct errors or suggest fixes

- Alert sales reps when data doesn't meet quality standards

Data Matching

Data often originates from multiple sources, such as website forms, third-party integrations, call center logs, marketing lists, and manual entry, that can generate duplicate or fragmented records. Data matching solves these issues by linking disparate customer records that refer to the same entity. Data-matching techniques include:

- Deterministic matching (exact matching) finds records that exactly match on key fields (e.g., email, customer ID). This technique is effective for structured datasets with unique identifiers (e.g., Social Security numbers, employee IDs, CRM lead IDs).

-

Fuzzy matching (probabilistic matching) compares and identifies similar records when exact matches are not available. This technique is useful when names, addresses, or emails have slight variations due to typos or different formatting, and when unique identifiers (e.g., email, phone number) are missing but other fields overlap.

Below are some of the most common fuzzy matching algorithms:

- Levenshtein distance measures the minimum number of edits (insertions, deletions, or substitutions) required to transform one string into another (e.g., "Jon" → "John").

- Jaro-Winkler distance measures the similarity between two strings by considering both common characters and character transpositions while giving higher weight to prefixes that match (e.g., "Johnathan" vs. "Jonathan").

- Jaccard index compares the set of unique words (tokens) in two strings and calculates the similarity based on how many words overlap.

Data Profiling

Data profiling is a critical step that analyzes existing records, summarizes findings, and generates insights for improvement that guide improvement initiatives. It involves evaluating data across various dimensions, including:

- Completeness identifies missing or incomplete customer details (e.g., missing email addresses or phone numbers).

- Uniqueness detects duplicate or conflicting records (e.g., multiple entries for the same customer with different spellings).

- Distribution spots anomalies and outliers (e.g., invalid lead scores, extreme transaction values).

- Statistical analysis identifies appropriate value ranges (e.g., min and max values of an attribute, mean, mode, standard deviation) to provide insight into the dataset's quality.

Since data constantly changes, profiling is not a one-time process, and it should be conducted regularly to maintain high-quality, usable data. For example, a company notices declining email open rates in their CRM-based marketing campaigns. Data profiling is used to analyze the lead database and identify issues affecting campaign performance.

Table 7: CRM data profiling examples

| Issue Detected |

Impact |

Action |

|

15% of lead email addresses are missing |

Leads cannot receive campaign emails |

Enrich missing emails using external data sources |

|

Duplicate records for the same customers exist |

Leads receive multiple, identical emails, decreasing engagement |

Deduplicate leads using fuzzy matching |

|

15% of leads have an invalid country field |

Segmented campaigns may target incorrect audiences |

Standardize country formats in the CRM tool |

|

Most sales calls last 3-15 minutes, but some show zero seconds or more than two hours |

Data entry errors or call tracking malfunctions |

Define a reasonable range for valid call durations (e.g., 1–90 minutes) and automatically flag or reject records with values outside that range |

Data Monitoring

Continuous data monitoring is essential for ensuring effective and reliable data usage across your organization. Continuously access, evaluate, and improve data quality by tracking anomalies, inconsistencies, and errors over time to ensure that data remain accurate, complete, and actionable, preventing data decay and operational failure. Organizations should track data quality metrics that align with business goals and data governance policies.

Unlike one-time data cleansing, continuous monitoring allows organizations to:

- Detect errors in real time

- Identify data drift

- Prevent data decay

- Ensure compliance

- Reduce operational risks

Setting up continuous monitoring requires:

- Establishing baseline metrics

- Automating data quality checks

- Determining monitoring frequency

- Determining monitoring actions, reporting, and alerting notifications

For instance, the frequency of data monitoring can be determined by considering the following factors:

Table 8: Recommended data monitoring frequency

| Factor |

Frequency |

|

Compliance-related data

(e.g., GDPR, HIPAA records) |

Real time |

|

High-impact operational data

(e.g., CRM lead records) |

Hourly |

|

Business-critical analytics

(e.g., revenue reports) |

Daily |

|

Historical data audits

(e.g., customer churn analysis) |

Weekly |

A data quality strategy provides organizations with a structured approach to ensuring data accuracy, consistency, and reliability. By implementing data parsing, cleansing, validation, matching, profiling, monitoring, and enrichment, companies can prevent data degradation and maintain high data integrity.

Enrichment

Enhance data quality by using data from various internal and external sources:

- Enrichment helps us turn useful data into even better data. Customer information can be enriched by using external sources to verify and append a latitude and longitude coordinate for a physical address or place.

- You could then layer on other location-specific data (e.g., demographics, traffic, environment, economics, weather) to reveal unique insights.

Step Five: Turn Strategy into Action

Once your data quality strategy is clearly defined, the real work begins: putting it into practice. This involves setting up validation rules, cleansing processes, governance policies, and continuous improvement mechanisms to ensure that your data remains accurate, consistent, and usable. A well-defined strategy might span the entire enterprise or address a specific initiative, but it ultimately becomes the blueprint for practical, day-to-day execution, and it must guide the creation of project requirements, task breakdowns, scheduling, risk analysis, and resource allocation.

Create a Data Quality Roadmap

This stage marks the transition from strategy (the what and why) to project management (the how, when, and who). Provide the project manager with clearly documented objectives, datasets, stakeholder roles, and success metrics to form the backbone of a realistic and actionable plan.

When tackling a data quality initiative, consider two broad approaches depending on the organization's maturity level:

- Start small if data quality is a new initiative: A pilot project could focus on a single column of data (e.g., phone numbers) in one table, affecting only one or two processes. A concise, one-page strategy covering all essential factors may be enough to guide a smaller project.

- Accept greater risks for greater benefits: Large-scale data quality initiatives often deliver higher returns but require more comprehensive strategies and greater organizational readiness.

In either scenario, embrace iteration and incremental value:

- Deliver small wins: Successful pilot projects generate momentum, prove value, and justify further investment.

- Refine continuously: Treat strategy documents as "living" work products. As new insights emerge and organizational needs evolve, update your data quality strategy to reflect lessons learned, shifting priorities, and evolving regulatory or market demands.

Challenges

Even with a solid plan in place, moving from strategy to action can be fraught with obstacles. Below are some typical challenges and how to mitigate them:

- Scalability issues: Relying too heavily on manual processes hampers growth. Introduce automated validation and cleansing tools to maintain consistency and reduce manual overhead.

- Insufficient training and awareness: Employees who lack expertise or tools can inadvertently introduce errors. Provide role-specific training and promote a data-driven culture that values quality at every level.

- Measuring data quality improvements: Tying data quality to ROI or bottom-line results can be difficult. Track tangible KPIs (e.g., reduced duplicates, increased conversion rates) to justify continued investment.

- Lack of executive buy-in: Projects often stall without senior sponsorship. Demonstrate quick wins from pilot projects and highlight potential risk reductions or revenue gains to win leadership support.

- Insufficient data governance: Without clear governance standards and roles, accountability falls through the cracks. Formalize data ownership, establish policies, and set escalation paths for resolving quality issues.

- High maintenance costs: Sustaining large-scale initiatives can be expensive. Consider incremental rollouts that allow you to prove value early and secure a budget for ongoing improvements.

- Resistance to change: Organizational inertia can slow adoption of new tools or processes. Use effective change management strategies: communicate benefits, offer training, and build champions.