Apache Airflow Architecture on OpenShift

This blog will walk you through the Apache Airflow architecture on OpenShift.

Join the DZone community and get the full member experience.

Join For Freethis blog will walk you through the apache airflow architecture on openshift. we are going to discuss the function of the individual airflow components and how they can be deployed to openshift. this article focuses on the latest apache airflow version 1.10.12.

architecture overview

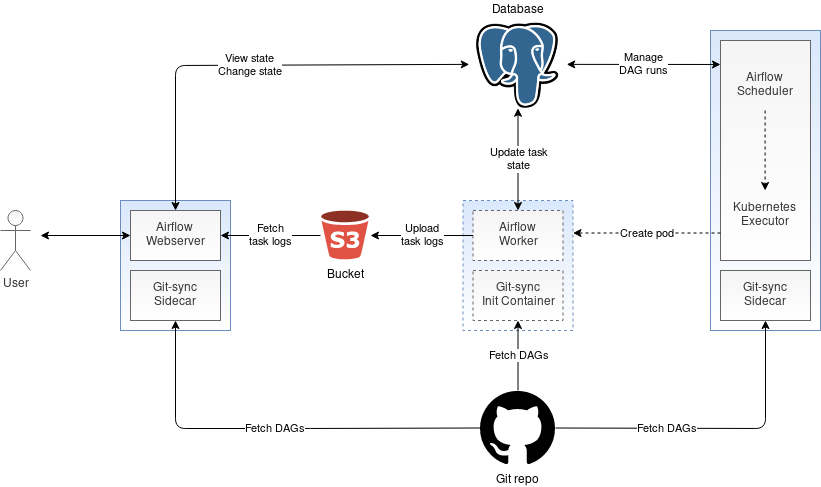

the three main components of apache airflow are the webserver, scheduler, and workers. the webserver provides the web ui which is the airflow's main user interface. it allows users to visualize their dags (directed acyclic graph) and control the execution of their dags. in addition to the web ui, the webserver also provides an experimental rest api that allows controlling airflow programatically as opposed to through the web ui. the second component - the airflow scheduler - orchestrates the execution of dags by starting the dag tasks at the right time and in the right order. both airflow webserver and scheduler are long-running services. on the other hand, airflow workers - the last of the three main components - run as ephemeral pods. they are created by the kubernetes executor and their sole purpose is to execute a single dag task. after the task execution is complete, the worker pod is deleted. the following diagram depicts the aiflow architecture on openshift:

shared database

as shown in the architecture diagram above, none of the airflow components communicate directly with each other. instead, they all read and modify the state that is stored in the shared database . for instance, the webserver reads the current state of the dag execution from the database and displays it in the web ui. if you trigger a dag in the web ui, the webserver will update the dag in the database accordingly. next comes the scheduler that checks the dag state in the database periodically. it finds the triggered dag and if the time is right, it will schedule the new tasks for execution. after the execution of the specific task is complete, the worker marks that state of the task in the database as done. finally, the web ui will learn the new state of the task from the database and will show it to the user.

the shared database architecture provides airflow components with a perfectly consistent view of the current state. on the other hand, as the number of tasks to execute grows, the database becomes a performance bottleneck as more and more workers connect to the database. to alleviate the load on the database, a connection pool like pgbouncer may be deployed in front of the database. the pool manages a relatively small amount of database connections which are re-used to serve requests of different workers.

regarding the choice of a particular dbms, in production deployments the database of choice is typically postgresql or mysql. you can choose to run the database directly on openshift. in that case, you will need to put it on an rwo (readwriteonce) persistent volume provided for example by openshift container storage . or, you can use an external database. for instance, if you are hosting openshift on top of aws, you can leverage a fully managed database provided by amazon rds .

making dags accessible to airflow components

all three airflow components webserver, scheduler, and workers assume that the dag definitions can be read from the local filesystem. the question is, how to make the dags available on the local filesystem in the container? there are two approaches to achieve this. in the first approach, a shared volume is created to hold all the dags. this volume is then attached to the airflow pods. the second approach assumes that your dags are hosted in a git repository. a sidecar container is deployed along with the airflow server and scheduler. this sidecar container synchronizes the latest version of your dags with the local filesystem periodically. for the worker pods, the pulling of the dags from the git repository is done only once by the init container before the worker is brought up.

note that since airflow 1.10.10, you can use the dag serialization feature. with dag serialization, the scheduler reads the dags from the local filesystem and saves them in the database. the airflow webserver then reads the dags from the database instead of the local filesystem. for the webserver container, you can avoid the need to mount a shared volume or configure git-sync if you enable the dag serialization.

to synchronize the dags with the local filesystem, i personally prefer using git-sync over the shared volumes approach. first, you want to keep you dags in the source control anyway to facilitate the development of the dags. second, git-sync seems to be easier to troubleshoot and recover in the case of failure.

airflow monitoring

as the old saying goes, "if you are not monitoring it, it's not in production". so, how can we monitor apache airflow running on openshift? prometheus is a monitoring system widely used for monitoring kubernetes workloads and i recommend that you consider it for monitoring airflow as well. airflow itself reports metrics using the statsd protocol, so you will need to deploy the statsd_exporter piece between airflow and the prometheus server. this exporter will aggregate the statsd metrics, convert them into prometheus format and expose them for the prometheus server to scrape.

collecting airflow logs

by default, apache airflow writes the logs to the local filesystem. if you have an rwx (readwritemany) persistent volume available, you can attach it to the webserver, scheduler, and worker pods to capture the logs. as the worker logs are written to the shared volume, they are instantly accessible by the webserver. this allows for viewing the logs live in the web ui.

an alternative approach to handling the airflow logs is to enable remote logging. with remote logging, the worker logs can be pushed to the remote location like s3. the logs are then grabbed from s3 by the webserver to display them in the web ui. note that when using an object store as your remote location, the worker logs are uploaded to the object store only after the task run is complete. that means that you won't be able to view the logs live in the web ui while the task is still running.

the remote logging feature in airflow takes care of the worker logs. how can you handle webserver and scheduler logs when not using a persistent volume? you can configure airflow to dump the logs to stdout. the openshift logging will collect the logs and send them to the central location.

conclusion

in this article, we reviewed the apache airflow architecture on openshift. we discussed the role of individual airflow components and described how they interact with each other. we discussed the airflow's shared database, explained how to make dags accessible to the airflow components, and talked about airflow monitoring and log collection.

apache airflow can be deployed in several different ways. what is your favorite architecture for deploying airflow? i would like to hear about your approach. if you have any further questions or comments, please add them to the comment section below.

Published at DZone with permission of Ales Nosek. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments