Building a Voice-Powered Smart Kitchen App Using LLaMA 3.1, Firebase, and Node.js

A step-by-step guide to creating a hands-free kitchen assistant with voice commands, real-time grocery list management, and recipe suggestions.

In this tutorial, I'll guide you through creating a Smart Kitchen App (Chent) that simplifies managing grocery lists based on personalized preferences. The app operates through voice commands, so you can add items hands-free. It's ideal for users who want to create grocery lists quickly by simply speaking what they need.

This project leverages LLaMA 3.1 for natural language processing (NLP) to interpret voice inputs, Firebase for real-time database storage and user authentication, and Node.js to handle the backend logic and integration. Users can input commands to add items, set dietary preferences, or even specify quantities, and the app will intelligently generate a grocery list that meets these requirements.

Throughout this tutorial, I'll cover everything from setting up Firebase and configuring LLaMA for voice commands to storing and managing grocery lists in real time.

Setting Up the Environment for Development

We need to set up our working environment before we start writing code for the smart-kitchen-app project.

1. Installing Node.js and npm

The first step is to install Node.js and npm. Go to the Node.js website and download the Long-Term Support (LTS) version for your computer's operating system. Then, follow the installation steps.

2. Making a Project With Next.js

Start up your terminal and go to the location where you want to make your project. After that, run these commands:

npx create-next-app@latest smart-kitchen-app
cd smart-kitchen-app

(With the @latest flag, npx fetches the most recent version of the Next.js starter setup.)

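These commands create a new Next.js project and move you into its directory. You'll be asked a number of configuration questions during setup; answer them as follows (TypeScript and Tailwind CSS are enabled because the route handlers in this tutorial are TypeScript files and the UI uses Tailwind classes):

- Would you like to use TypeScript? Yes

- Would you like to use ESLint? Yes

- Would you like to use Tailwind CSS? Yes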

- Would you like to use the src/ directory? No

- Would you like to use App Router? Yes

- Would you like to customize the default import alias? No

3. Installing Firebase and Material-UI

In the directory of your project, execute the following command:

npm install @mui/material @emotion/react @emotion/styled firebase

Setting Up Firebase

- Create a new project in the Firebase Console.

- Once the project has been created, click "Add app" and choose the web platform (</>).

- Give your app a name when you register it, such as "smart-kitchen-app."

- Copy the Firebase configuration object that is shown. You will need it in the next step.

4. Creating a Firebase Configuration File

Make a new file called firebase.js in the root directory of your project, and add the following code, replacing the placeholders with the real Firebase settings for your project:

import { initializeApp } from "firebase/app";
import { getAnalytics } from "firebase/analytics";
import { getAuth } from "firebase/auth";
import { getFirestore } from "firebase/firestore";

// Replace the placeholders with the values from your Firebase project settings
const firebaseConfig = {
  apiKey: "YOUR_API_KEY",
  authDomain: "YOUR_PROJECT_ID.firebaseapp.com",
  projectId: "YOUR_PROJECT_ID",
  storageBucket: "YOUR_PROJECT_ID.appspot.com",
  messagingSenderId: "YOUR_MESSAGING_SENDER_ID",
  appId: "YOUR_APP_ID"
};

const app = initializeApp(firebaseConfig);

// Analytics needs a browser environment, so skip it when Next.js renders on the server
const analytics = typeof window !== "undefined" ? getAnalytics(app) : null;

export const auth = getAuth(app);
export const db = getFirestore(app);

How to Create an API Token in OpenRouter

We will use the free version of LLaMA 3.1 from OpenRouter, and for that, we need to get the API token. Below are the steps to get one:

Step 1: Sign Up or Log In to OpenRouter

- Visit OpenRouter's official website: Go to openrouter.ai.

- Create an account if you don't have one. You can either sign up with your email or use an OAuth provider like Google, GitHub, or others.

- Log in to your OpenRouter account if you already have one.

Step 2: Navigate to API Key Settings

- Once you are logged in, go to the Dashboard.

- In the dashboard, look for the API or Developer Tools section.

- Click on the API Keys or Tokens option.

Step 3: Generate a New API Key

- In the API Keys section, you should see a button or link to Generate New API Key.

- Click on the Generate button to create a new API key.

- You may be asked to give your API key a name (e.g., "Smart-Kitchen App Key"). This helps you organize your keys if you have several for different projects.

Step 4: Copy the API Key

- Once the API key is generated, it will be displayed on the screen. Copy the API key immediately, as some services may not show it again after you leave the page.

- Store the API key securely in your environment configuration file (e.g., .env.local).

Step 5: Add API Key to .env.local File

- In your Next.js project, open the .env.local file (if you don't have one, create it).

- Add the following line:

OPENROUTER_API_KEY=your-generated-api-key-here

Make sure to replace your-generated-api-key-here with the actual API key you copied.

Step 6: Use the API Key in Your Application

- Now that you have stored the API key in your .env.local file, you can use it in your application.

- Access the key through process.env.OPENROUTER_API_KEY in your server-side code or when making API requests. Make sure to keep the key secure and avoid exposing it to the client side in your production app.
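Each route handler in the next section needs the key, so the lookup can be centralized. The following is a minimal sketch, not part of the original project: requireApiKey is a hypothetical helper name, and it takes the environment as a parameter (rather than reading process.env directly) so it can be exercised without a running server.

```typescript
// Hypothetical helper (not from the original project): reads a required
// secret from an env-like object and fails loudly when it is missing.
type Env = Record<string, string | undefined>;

function requireApiKey(env: Env, name: string = "OPENROUTER_API_KEY"): string {
  const value = env[name]?.trim();
  if (!value) {
    throw new Error(`${name} is not set; add it to .env.local`);
  }
  return value;
}

// In a server-side route handler this would be called as:
//   const apiKey = requireApiKey(process.env);
```

Failing early with a named variable in the message tends to be easier to debug than a 401 response from the upstream API.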

Building the Core Logic to Import LLaMA 3.1 for Creating Smart Kitchen App Responses

In the app folder (create-next-app generates it when the App Router is enabled), create the path api/extract with a file named route.ts, so that the endpoint is served at /api/extract, and add the code given below:

import { NextRequest, NextResponse } from 'next/server';

export async function POST(req: NextRequest) {
  const { prompt } = await req.json();

  // Check if the API key is available
  const apiKey = process.env.OPENROUTER_API_KEY;
  if (!apiKey) {
    console.error('API key not found');
    return NextResponse.json({ error: "API key is missing" }, { status: 500 });
  }

  const response = await fetch("https://openrouter.ai/api/v1/chat/completions", {
    method: "POST",
    headers: {
      "Authorization": `Bearer ${apiKey}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({
      model: "meta-llama/llama-3.1-8b-instruct",
      messages: [
        { role: "system", content: "You are an assistant that extracts information and outputs only valid JSON. Do not include any explanation or extra information. Only respond with a JSON object that has the following structure: {\"itemName\": \"string\", \"quantity\": \"number\"}." },
        { role: "user", content: `Extract the item name and quantity from the following command: "${prompt}"` }
      ],
    })
  });

  if (!response.ok) {
    console.error('LLaMA API request failed with status:', response.status);
    return NextResponse.json({ error: "Failed to process command with LLaMA" }, { status: 500 });
  }

  const completion = await response.json();
  const rawJson = completion.choices[0].message.content;
  console.log('Raw response from LLaMA:', rawJson); // Detailed logging of the raw response

  // Locate the JSON object inside the response content
  const startIndex = rawJson.indexOf('{');
  const endIndex = rawJson.lastIndexOf('}');

  // Both braces must exist, and the closing brace must come after the opening one
  if (startIndex === -1 || endIndex <= startIndex) {
    console.error('Failed to find valid JSON in LLaMA response');
    return NextResponse.json({ error: "Failed to extract JSON from LLaMA response" }, { status: 500 });
  }

  const jsonString = rawJson.substring(startIndex, endIndex + 1);
  console.log('Extracted JSON string:', jsonString); // Logging extracted JSON string

  try {
    const parsedData = JSON.parse(jsonString);
    console.log('Parsed data:', parsedData); // Logging the parsed data

    const { itemName, quantity } = parsedData;
    if (!itemName || !quantity) {
      console.error('Missing fields in parsed data:', parsedData);
      return NextResponse.json({ error: "Invalid data received from LLaMA" }, { status: 500 });
    }

    return NextResponse.json({ itemName, quantity });
  } catch (error) {
    console.error('Error parsing JSON from LLaMA response:', error);
    return NextResponse.json({ error: "Failed to parse JSON from LLaMA response" }, { status: 500 });
  }
}

This code defines a POST API endpoint that extracts specific information (item name and quantity) from a user's voice command using the LLaMA 3.1 model, focusing on delivering structured data in JSON format.

First, it receives a request containing a prompt and checks if the necessary API key (OPENROUTER_API_KEY) is available. If the API key is missing, it responds with an error. The request is then sent to the OpenRouter AI API, asking the AI to return only valid JSON with the fields itemName and quantity extracted from the user's input.

The response from the AI is logged and examined to check that it contains a JSON object. If it does, the string is parsed, and the fields are checked for completeness. If both itemName and quantity are present, the data is returned to the caller in JSON format; otherwise, appropriate errors are logged and returned. This gives the assistant structured, actionable data that is suitable for grocery list creation in a smart kitchen app.
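The extraction and validation steps described above can also be isolated into a pure function, which makes them easy to test without calling the model. This is a sketch under assumptions rather than the project's own code: extractGroceryItem and the GroceryItem type are hypothetical names, and the rule that a quantity must be a positive number is an assumption added here.

```typescript
// Hypothetical helper: pulls {"itemName", "quantity"} out of raw model text.
interface GroceryItem {
  itemName: string;
  quantity: number;
}

function extractGroceryItem(raw: string): GroceryItem | null {
  // The model may wrap the JSON in extra prose, so cut from the first "{"
  // to the last "}".
  const start = raw.indexOf("{");
  const end = raw.lastIndexOf("}");
  if (start === -1 || end <= start) return null;

  let parsed: unknown;
  try {
    parsed = JSON.parse(raw.slice(start, end + 1));
  } catch {
    return null; // not valid JSON after all
  }
  if (typeof parsed !== "object" || parsed === null) return null;

  const { itemName, quantity } = parsed as Record<string, unknown>;
  if (typeof itemName !== "string" || itemName.trim() === "") return null;
  // Accept 2 as well as "2", since models often quote numbers.
  if (typeof quantity !== "number" && typeof quantity !== "string") return null;
  const qty = Number(quantity);
  if (!Number.isFinite(qty) || qty <= 0) return null; // assumption: positive only

  return { itemName: itemName.trim(), quantity: qty };
}
```

Returning null instead of throwing lets the route handler decide which HTTP status to send for each failure.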

Under the app folder, create the path api/llama with a file named route.ts (this matches the /api/llama URL that the client calls later) and add the code given below:

import { NextRequest, NextResponse } from 'next/server';

const systemPrompt = `
You are a helpful and friendly AI kitchen assistant. Your role is to assist users in their kitchen tasks, providing guidance, suggestions, and support in a clear and concise manner. Follow these guidelines:

1. Provide friendly, helpful, and respectful responses to all user inquiries, ensuring a positive experience.
2. When asked for a recipe, suggest simple and delicious recipes based on the ingredients the user has available. Keep the instructions clear and easy to follow.
3. Assist with creating grocery lists by accurately adding items mentioned by the user. Confirm each addition and offer to help with more items.
4. Handle common kitchen-related tasks such as suggesting recipes, checking pantry items, offering cooking tips, and more.
5. If the user is unsure or needs help deciding, offer suggestions or ask clarifying questions to better assist them.
6. Recognize when the user is trying to end the conversation and respond politely, offering a warm closing message.
7. Avoid overly technical language or complex instructions. Keep it simple, friendly, and approachable.
8. Provide accurate and practical information, such as cooking times, ingredient substitutions, or food storage tips.
9. Tailor responses to the user's preferences and dietary restrictions whenever mentioned.
10. Ensure every interaction feels personal, supportive, and designed to help the user enjoy their cooking experience.

Remember, your goal is to make cooking and kitchen tasks easier, more enjoyable, and stress-free for the user.
`;

export async function POST(req: NextRequest) {
  try {
    const { command } = await req.json();
    console.log('Received command:', command);

    const response = await fetch("https://openrouter.ai/api/v1/chat/completions", {
      method: "POST",
      headers: {
        "Authorization": `Bearer ${process.env.OPENROUTER_API_KEY}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({
        model: "meta-llama/llama-3.1-8b-instruct",
        messages: [
          { role: "system", content: systemPrompt },
          { role: "user", content: command }
        ],
      })
    });

    if (!response.ok) {
      throw new Error(`Failed to fetch from OpenRouter AI: ${response.statusText}`);
    }

    const completion = await response.json();
    const responseMessage = completion.choices[0].message.content;
    console.log('Received response:', responseMessage);

    return NextResponse.json({ response: responseMessage });
  } catch (error) {
    console.error("Error processing request:", error);
    return NextResponse.json({ error: "Error processing request" }, { status: 500 });
  }
}

This code sets up a POST API endpoint in Next.js for the kitchen assistant app, enabling it to process transcribed voice commands through OpenRouter with the LLaMA 3.1 model. The file begins by defining a systemPrompt that directs the AI's behavior, so that interactions are friendly, clear, and supportive, particularly in relation to kitchen tasks like recipe suggestions, grocery list creation, and cooking tips. Upon receiving a POST request, the handler extracts the user's command from the request body and forwards it with the systemPrompt to the OpenRouter API.

The AI's reply to the command is then returned to the caller in JSON format. If an error occurs during the process, the error is logged and a 500 status code is returned, so the client can fall back to an apology message.
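All three route handlers in this tutorial send the same kind of request to OpenRouter: a system message, a user message, and a model name. As an illustration only, the request construction could be factored into one function; buildChatRequest is a hypothetical name, and the URL, headers, and model string are copied from the handlers in this section.

```typescript
// Hypothetical helper: builds the fetch arguments shared by the route handlers.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

function buildChatRequest(
  apiKey: string,
  systemPrompt: string,
  userText: string,
  model: string = "meta-llama/llama-3.1-8b-instruct",
) {
  const messages: ChatMessage[] = [
    { role: "system", content: systemPrompt },
    { role: "user", content: userText },
  ];
  return {
    url: "https://openrouter.ai/api/v1/chat/completions",
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ model, messages }),
    },
  };
}

// Inside a handler:
//   const { url, init } = buildChatRequest(apiKey, systemPrompt, command);
//   const response = await fetch(url, init);
```

Keeping the model name in one place also makes it simpler to try a different model later.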

Under the app folder, create the path api/recipe with a file named route.ts, so that the endpoint is served at /api/recipe, and add the code given below:

import { NextRequest, NextResponse } from 'next/server';

export async function POST(req: NextRequest) {
  const { availableItems } = await req.json();

  // Check if the API key is available
  const apiKey = process.env.OPENROUTER_API_KEY;
  if (!apiKey) {
    console.error('API key not found');
    return NextResponse.json({ error: "API key is missing" }, { status: 500 });
  }

  const response = await fetch("https://openrouter.ai/api/v1/chat/completions", {
    method: "POST",
    headers: {
      "Authorization": `Bearer ${apiKey}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({
      model: "meta-llama/llama-3.1-8b-instruct",
      messages: [
        { role: "system", content: "You are an assistant that provides specific recipe suggestions based on available ingredients. Only respond with a recipe or cooking instructions based on the provided ingredients." },
        { role: "user", content: `Here are the ingredients I have: ${availableItems}. Can you suggest a recipe using these ingredients?` }
      ],
    })
  });

  if (!response.ok) {
    console.error('LLaMA API request failed with status:', response.status);
    return NextResponse.json({ error: "Failed to process recipe request with LLaMA" }, { status: 500 });
  }

  const completion = await response.json();
  const recipe = completion.choices[0].message.content.trim();

  return NextResponse.json({ recipe });
}

This code establishes a POST API endpoint that leverages the LLaMA 3.1 model to generate recipe suggestions tailored to the ingredients the user has on hand. The request involves a system prompt directing the AI to function as a kitchen helper, particularly recommending recipes according to the given ingredients. The AI receives a message that includes a list of available ingredients and requests suggestions for a recipe.

Upon a successful API request, the recipe text is extracted from the AI's response and returned in JSON format. If the request fails, the code logs the error status and returns a message indicating the failure. This gives the smart kitchen app recipe recommendations based on the ingredients the user has on hand.
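The recipe endpoint interpolates availableItems directly into the prompt, so the client should send a readable string. If the pantry is stored as objects (as the grocery-list step later does), interpolating the array as-is would yield "[object Object]". A small sketch of one way to format the list first; formatIngredients and PantryItem are hypothetical names, not part of the original code.

```typescript
// Hypothetical helper: turns stored pantry items into prompt-friendly text.
interface PantryItem {
  itemName: string;
  quantity: number;
}

function formatIngredients(items: PantryItem[]): string {
  return items
    .map(({ itemName, quantity }) => `${itemName} (${quantity})`)
    .join(", ");
}

// The result can be sent as the request body for /api/recipe:
//   body: JSON.stringify({ availableItems: formatIngredients(items) })
```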

Building the Core Components for the Smart Kitchen App

Step 1: Imports and State Setup

Import necessary dependencies and set up state variables for managing user input and Firebase integration.

"use client";

import React, { useState } from 'react';
import { db } from './firebase';
import { collection, updateDoc, arrayUnion, doc, getDoc } from 'firebase/firestore';

Step 2: Component Declaration and Initial State

Define the KitchenAssistant component and initialize state for user commands, response, and speech recognition.

export default function KitchenAssistant() {
  const [command, setCommand] = useState('');
  const [response, setResponse] = useState('');
  const [recognition, setRecognition] = useState<SpeechRecognition | null>(null);

Step 3: Handling Voice Commands

This code section handles the user's voice command. It verifies whether the command has a closing statement (e.g., "thank you") to conclude the conversation or if it features phrases such as "suggest a recipe" or "add items to pantry." Based on the command given, it activates the related action (for instance, suggestRecipe or speak).

  const handleVoiceCommand = async (commandText: string) => {
    // Normalize once so that matching is case-insensitive
    const normalized = commandText.toLowerCase();
    setCommand(normalized);

    const closingStatements = ["no thank you", "thank you", "that's all", "goodbye", "i'm done"];
    const isClosingStatement = closingStatements.some(statement => normalized.includes(statement));

    if (isClosingStatement) {
      const closingResponse = "Thank you! If you need anything else, just ask. Have a great day!";
      setResponse(closingResponse);
      speak(closingResponse);
      return;
    }

    if (normalized.includes("suggest a recipe")) {
      await suggestRecipe();
    } else if (normalized.includes("add items to pantry")) {
      setResponse("Sure, start telling me the items you'd like to add.");
      speak("Sure, start telling me the items you'd like to add.");
    }
  };
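The branching in this step can be pulled out into a pure function that maps a transcript to an intent, which keeps the matching logic testable outside the browser. This is a sketch with assumed names: classifyCommand and the Intent type are hypothetical, and the "general" fallback (for anything that should go to the general command handler) is an assumption about how unmatched commands would be routed.

```typescript
// Hypothetical intent classifier mirroring the phrase checks in this step.
type Intent = "closing" | "suggest-recipe" | "add-to-pantry" | "general";

const CLOSING_STATEMENTS = ["no thank you", "thank you", "that's all", "goodbye", "i'm done"];

function classifyCommand(commandText: string): Intent {
  // Speech recognizers often capitalize the first word, so normalize first.
  const text = commandText.trim().toLowerCase();
  if (CLOSING_STATEMENTS.some((statement) => text.includes(statement))) return "closing";
  if (text.includes("suggest a recipe")) return "suggest-recipe";
  if (text.includes("add items to pantry")) return "add-to-pantry";
  return "general"; // assumption: everything else goes to the LLaMA endpoint
}
```

A switch over the returned intent can then call the matching action, for example suggestRecipe for "suggest-recipe".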

Step 4: General Command Handling

This function handles general commands using a POST request to the /api/llama endpoint, which processes the command through the LLaMA model. The response from the AI is set as the response state and read aloud using the speak function.

  const handleGeneralCommand = async (commandText: string) => {
    try {
      const res = await fetch('/api/llama', {
        method: 'POST',
        headers: { 'Content-Type': 'application/json' },
        body: JSON.stringify({ command: commandText }),
      });

      // Treat non-2xx responses as failures so the fallback message is used
      if (!res.ok) {
        throw new Error(`Request failed with status ${res.status}`);
      }

      const data = await res.json();
      setResponse(data.response);
      speak(data.response);
    } catch (error) {
      setResponse("Sorry, I couldn't process your request.");
      speak("Sorry, I couldn't process your request.");
    }
  };

Step 5: Adding Items to the Grocery List

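This function adds an item to the grocery list in Firestore. It sets an expiry date seven days from now, appends the item to the items array of the grocery-list document with arrayUnion, and confirms the addition to the user by setting the response and speaking it aloud. Note that updateDoc fails if the target document does not exist, so the 'Available Grocery Items' document must be created beforehand.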

  const addToGroceryList = async (itemName: string, quantity: number) => {
    try {
      // Default shelf life: seven days from today
      const expiryDate = new Date();
      expiryDate.setDate(expiryDate.getDate() + 7);

      const docRef = doc(db, 'grocery-list', 'Available Grocery Items');
      await updateDoc(docRef, {
        items: arrayUnion({
          itemName,
          quantity,
          expiryDate: expiryDate.toISOString(),
        }),
      });

      const responseText = `Grocery list updated. You now have: ${itemName} (${quantity}).`;
      setResponse(responseText);
      speak(responseText);
    } catch (error) {
      setResponse("Sorry, I couldn't add the item to the list.");
      speak("Sorry, I couldn't add the item to the list.");
    }
  };
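The shape of the stored item and its expiry calculation can be separated from the Firestore call. The sketch below is illustrative rather than the author's implementation: buildGroceryItem, isExpired, and StoredItem are hypothetical names, and it adds a fixed number of milliseconds per day, which can differ by an hour from the setDate approach above when a daylight-saving change falls inside the window.

```typescript
// Hypothetical helpers for building and checking stored grocery items.
interface StoredItem {
  itemName: string;
  quantity: number;
  expiryDate: string; // ISO 8601 timestamp, as written by toISOString()
}

const DAY_MS = 24 * 60 * 60 * 1000;

function buildGroceryItem(
  itemName: string,
  quantity: number,
  now: Date = new Date(),
  shelfLifeDays: number = 7, // same default as the tutorial's seven days
): StoredItem {
  const expiry = new Date(now.getTime() + shelfLifeDays * DAY_MS);
  return { itemName, quantity, expiryDate: expiry.toISOString() };
}

function isExpired(item: StoredItem, now: Date = new Date()): boolean {
  return new Date(item.expiryDate).getTime() <= now.getTime();
}

// The object returned by buildGroceryItem can be passed to arrayUnion(...),
// and isExpired can filter the pantry before asking for a recipe.
```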

Step 6: Speech Recognition

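This function initializes the browser's Speech Recognition API, listens for user input, and passes the recognized text to handleVoiceCommand. It sets the recognition language to US English and, because continuous mode is not enabled, captures one utterance per call; the user presses Start Listening again for the next command.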

In this code, I am utilizing the Web Speech API's SpeechRecognition interface to enable voice command functionality in the app.

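  const startRecognition = () => {
    const SpeechRecognition = window.SpeechRecognition || (window as any).webkitSpeechRecognition;

    // Some browsers do not implement the API at all
    if (!SpeechRecognition) {
      setResponse("Sorry, speech recognition is not supported in this browser.");
      return;
    }

    const newRecognition = new SpeechRecognition();
    newRecognition.lang = 'en-US';

    newRecognition.onresult = (event: any) => {
      // Take the top alternative of the first result
      const speechToText = event.results[0][0].transcript;
      handleVoiceCommand(speechToText);
    };

    newRecognition.start();
    setRecognition(newRecognition);
  };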

The first statement in startRecognition checks for the presence of the native SpeechRecognition API in the browser and falls back to webkitSpeechRecognition for browsers, such as Chrome, that expose it under the webkit prefix:

const SpeechRecognition = window.SpeechRecognition || (window as any).webkitSpeechRecognition;

This API allows the app to listen to the user's voice input, convert it into text, and then process it as a command. Using it, the app can interact with users through voice commands, enabling features such as adding items to a grocery list, suggesting recipes, or retrieving pantry contents, making the experience more hands-free and interactive.

You can also use Whisper or any other speech recognition service of your choice.
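The component calls speak and interruptSpeech, which are not shown in this tutorial. One plausible way to implement them is with the browser's SpeechSynthesis API; the sketch below is an assumption, not the author's code. createSpeech and SynthLike are hypothetical names, and the synthesizer is passed in as a parameter so that the logic can be tried outside a browser.

```typescript
// Hypothetical text-to-speech wrapper. In the browser, `synth` would be
// window.speechSynthesis and `makeUtterance` would wrap SpeechSynthesisUtterance.
interface SynthLike {
  speak(utterance: unknown): void;
  cancel(): void;
}

function createSpeech(
  synth: SynthLike | undefined,
  makeUtterance: (text: string) => unknown,
) {
  return {
    // Returns false when speech output is unavailable.
    speak(text: string): boolean {
      if (!synth) return false;
      synth.cancel(); // stop any reply that is still being read aloud
      synth.speak(makeUtterance(text));
      return true;
    },
    // Wired to the "Interrupt" button in the UI.
    interruptSpeech(): void {
      synth?.cancel();
    },
  };
}

// Browser wiring (not executed here):
//   const { speak, interruptSpeech } = createSpeech(
//     window.speechSynthesis,
//     (text) => new SpeechSynthesisUtterance(text),
//   );
```

Cancelling before each new utterance keeps replies from queuing up behind one another when commands arrive quickly.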

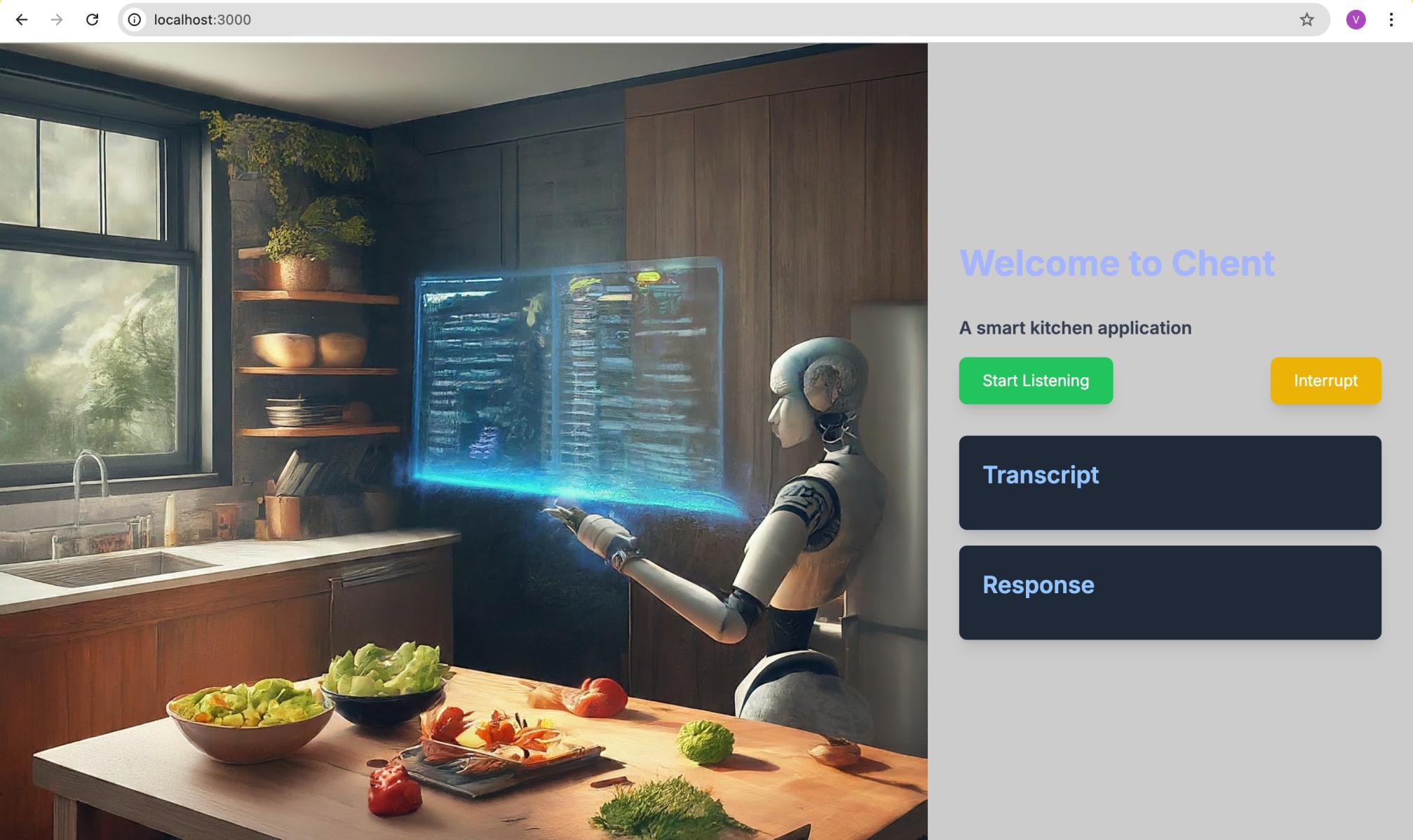

Creating Frontend Component

This TSX layout defines the structure of the user interface for your smart kitchen app. The design uses Tailwind CSS classes for responsive and aesthetic styling.

  return (
    <div className="flex min-h-screen">
      <div className="w-1/2 background-image"></div>
      <div className="w-1/2 flex flex-col justify-center p-8 bg-white bg-opacity-80">
        <h1 className="text-4xl font-bold mb-8 text-indigo-500">Welcome to Chent</h1>
        <div className="button-group flex justify-between mb-8">
          <button onClick={startRecognition} className="bg-green-500">Start Listening</button>
          <button onClick={interruptSpeech} className="bg-yellow-500">Interrupt</button>
        </div>
        <div className="transcript">{command}</div>
        <div className="response">{response}</div>
      </div>
    </div>
  );
}

- The layout is a two-column design: the left side displays a background image, while the right side contains the app's main functionality.

- The right section includes a header, two action buttons, and two areas for showing dynamic text: one for the user's voice command (transcript) and one for the AI assistant's response (response).

- The interface is clean, minimal, and responsive, allowing users to interact with the smart kitchen app efficiently while maintaining a visually appealing design.

Once you finish it, you can have an interface that looks similar to the one below:

This wraps up the creation of our smart kitchen application. In this example, I have utilized the LLaMA 3.1 language model, but feel free to experiment with any other model of your choice.
