Crafting Database Models With Knex.js and PostgreSQL

Discover the magic of building robust models with ease, as we journey through the world of Knex.js, making database modeling and testing fun and foolproof.

Join the DZone community and get the full member experience.

Join For FreeIn today’s dynamic world of web development, the foundation upon which we build our applications is crucial. At the heart of many modern web applications lies the unsung hero: the database. But how we interact with this foundation — how we query, shape, and manipulate our data — can mean the difference between an efficient, scalable app and one that buckles under pressure.

Enter the formidable trio of Node.js, Knex.js, and PostgreSQL. Node.js, with its event-driven architecture, promises speed and efficiency. Knex.js, a shining gem in the Node ecosystem, simplifies database interactions, making them more intuitive and less error-prone. And then there’s PostgreSQL — a relational database that’s stood the test of time, renowned for its robustness and versatility.

So, why this particular blend of technologies? And how can they be harnessed to craft resilient and reliable database models? Journey with us as we unpack the synergy of Node.js, Knex.js, and PostgreSQL, exploring the myriad ways they can be leveraged to elevate your web development endeavors.

Initial Setup

In a previous article, I delved into the foundational setup and initiation of services using Knex.js and Postgres. However, this article hones in on the intricacies of the model aspect in service development. I won’t be delving into Node.js setups or explaining the intricacies of Knex migrations and seeds in this piece, as all that information is covered in the previous article.

Postgres Connection

Anyway, let’s briefly create a database using docker-compose:

version: '3.6'

volumes:

data:

services:

database:

build:

context: .

dockerfile: postgres.dockerfile

image: postgres:latest

container_name: postgres

environment:

TZ: Europe/Paris

POSTGRES_DB: ${DB_NAME}

POSTGRES_USER: ${DB_USER}

POSTGRES_PASSWORD: ${DB_PASSWORD}

networks:

- default

volumes:

- data:/var/lib/postgresql/data

ports:

- "5432:5432"

restart: unless-stoppedDocker Compose Database Setup

And in your .env file values for connection:

DB_HOST="localhost"

DB_PORT=5432

DB_NAME="modeldb"

DB_USER="testuser"

DB_PASSWORD="DBPassword"Those environment variables will be used in docker-compose file for launching your Postgres database. When all values are ready we can start to run it with docker-compose up.

Kenx Setup

Before diving into Knex.js setup, we’ll be using Node.js version 18. To begin crafting models, we only need the following dependencies:

"dependencies": {

"dotenv": "^16.3.1",

"express": "^4.18.2",

"knex": "^2.5.1",

"pg": "^8.11.3"

}

Create knexfile.ts and add the following content:

require('dotenv').config();

require('ts-node/register');

import type { Knex } from 'knex';

const environments: string[] = ['development', 'test', 'production'];

const connection: Knex.ConnectionConfig = {

host: process.env.DB_HOST as string,

database: process.env.DB_NAME as string,

user: process.env.DB_USER as string,

password: process.env.DB_PASSWORD as string,

};

const commonConfig: Knex.Config = {

client: 'pg',

connection,

migrations: {

directory: './database/migrations',

},

seeds: {

directory: './database/seeds',

}

};

export default Object.fromEntries(environments.map((env: string) => [env, commonConfig]));Knex File Configuration

Next, in the root directory of your project, create a new folder named database. Within this folder, add a index.ts file. This file will serve as our main database connection handler, utilizing the configurations from knexfile. Here's what the content index.ts should look like:

import Knex from 'knex';

import configs from '../knexfile';

export const database = Knex(configs[process.env.NODE_ENV || 'development']);Export database with applied configs

This setup enables a dynamic database connection based on the current Node environment, ensuring that the right configuration is used whether you’re in a development, test, or production setting.

Within your project directory, navigate to src/@types/index.ts. Here, we'll define a few essential types to represent our data structures. This will help ensure consistent data handling throughout our application. The following code outlines an enumeration of user roles and type definitions for both a user and a post:

export enum Role {

Admin = 'admin',

User = 'user',

}

export type User = {

email: string;

first_name: string;

last_name: string;

role: Role;

};

export type Post = {

title: string;

content: string;

user_id: number;

};Essential Types

These types act as a blueprint, enabling you to define the structure and relationships of your data, making your database interactions more predictable and less prone to errors.

After those setups, you can do migrations and seeds. Run npx knex migrate:make create_users_table:

import { Knex } from "knex";

import { Role } from "../../src/@types";

const tableName = 'users';

export async function up(knex: Knex): Promise<void> {

return knex.schema.createTable(tableName, (table: Knex.TableBuilder) => {

table.increments('id');

table.string('email').unique().notNullable();

table.string('password').notNullable();

table.string('first_name').notNullable();

table.string('last_name').notNullable();

table.enu('role', [Role.User, Role.Admin]).notNullable();

table.timestamps(true, true);

});

}

export async function down(knex: Knex): Promise<void> {

return knex.schema.dropTable(tableName);

}Knex Migration File for Users

And npx knex migrate:make create_posts_table:

import { Knex } from "knex";

const tableName = 'posts';

export async function up(knex: Knex): Promise<void> {

return knex.schema.createTable(tableName, (table: Knex.TableBuilder) => {

table.increments('id');

table.string('title').notNullable();

table.string('content').notNullable();

table.integer('user_id').unsigned().notNullable();

table.foreign('user_id').references('id').inTable('users').onDelete('CASCADE');

table.timestamps(true, true);

});

}

export async function down(knex: Knex): Promise<void> {

return knex.schema.dropTable(tableName);

}Knex Migration File for Posts

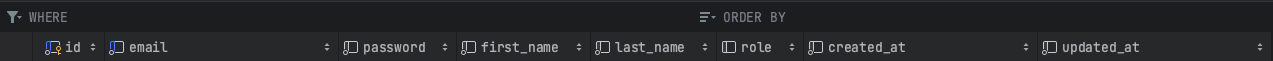

After setting things up, proceed by running npx knex migrate:latest to apply the latest migrations. Once this step is complete, you're all set to inspect the database table using your favorite GUI tool:

Created Table by Knex Migration

We are ready for seeding our tables. Run npx knex seed:make 01-users with the following content:

import { Knex } from 'knex';

import { faker } from '@faker-js/faker';

import { User, Role } from '../../src/@types';

const tableName = 'users';

export async function seed(knex: Knex): Promise<void> {

await knex(tableName).del();

const users: User[] = [...Array(10).keys()].map(key => ({

email: faker.internet.email().toLowerCase(),

first_name: faker.person.firstName(),

last_name: faker.person.lastName(),

role: Role.User,

}));

await knex(tableName).insert(users.map(user => ({ ...user, password: 'test_password' })));

}Knex Seed Users

And for posts run npx knex seed:make 02-posts with the content:

import { Knex } from 'knex';

import { faker } from '@faker-js/faker';

import type { Post } from '../../src/@types';

const tableName = 'posts';

export async function seed(knex: Knex): Promise<void> {

await knex(tableName).del();

const usersIds: Array<{ id: number }> = await knex('users').select('id');

const posts: Post[] = [];

usersIds.forEach(({ id: user_id }) => {

const randomAmount = Math.floor(Math.random() * 10) + 1;

for (let i = 0; i < randomAmount; i++) {

posts.push({

title: faker.lorem.words(3),

content: faker.lorem.paragraph(),

user_id,

});

}

});

await knex(tableName).insert(posts);

}Knex Seed Posts

The naming convention we’ve adopted for our seed files, 01-users and 02-posts, is intentional. This sequential naming ensures the proper order of seeding operations. Specifically, it prevents posts from being seeded before users, which is essential to maintain relational integrity in the database.

Models and Tests

As the foundation of our database is now firmly established with migrations and seeds, it’s time to shift our focus to another critical component of database-driven applications: models. Models act as the backbone of our application, representing the data structures and relationships within our database. They provide an abstraction layer, allowing us to interact with our data in an object-oriented manner. In this section, we’ll delve into the creation and intricacies of models, ensuring a seamless bridge between our application logic and stored data.

In the src/models/Model/index.ts directory, we'll establish the foundational setup:

import { database } from 'root/database';

export abstract class Model {

protected static tableName?: string;

private static get table() {

if (!this.tableName) {

throw new Error('The table name must be defined for the model.');

}

return database(this.tableName);

}

}Initial Setup for Model

To illustrate how to leverage our Model class, let's consider the following example using TestModel:

class TestModel extends Model {

protected static tableName = 'test_table';

}Usage of Extended Model

This subclass, TestModel, extends our base Model and specifies the database table it corresponds to as 'test_table'.

To truly harness the potential of our Model class, we need to equip it with methods that can seamlessly interact with our database. These methods would encapsulate common database operations, making our interactions not only more intuitive but also more efficient. Let's delve into and enhance our Model class with some essential methods:

import { database } from 'root/database';

export abstract class Model {

protected static tableName?: string;

private static get table() {

if (!this.tableName) {

throw new Error('The table name must be defined for the model.');

}

return database(this.tableName);

}

protected static async insert<Payload>(data: Payload): Promise<{

id: number;

}> {

const [result] = await this.table.insert(data).returning('id');

return result;

}

protected static async findOneById<Result>(id: number): Promise<Result> {

return this.table.where('id', id).select("*").first();

}

protected static async findAll<Item>(): Promise<Item[]> {

return this.table.select('*');

}

}Essential Methods of Model

In the class, we’ve added methods to handle the insertion of data (insert), fetch a single entry based on its ID (findOneById), and retrieve all items (findAll). These foundational methods will streamline our database interactions, paving the way for more complex operations as we expand our application.

How should we verify its functionality? By crafting an integration test for our Model. Let's dive into it.

Yes, I'm going to use Jest for integration tests as I have the same tool and for unit tests. Of course, Jest is primarily known as a unit testing framework, but it’s versatile enough to be used for integration tests as well.

Ensure that your Jest configuration aligns with the following:

import type { Config } from '@jest/types';

const config: Config.InitialOptions = {

clearMocks: true,

preset: 'ts-jest',

testEnvironment: 'node',

coverageDirectory: 'coverage',

verbose: true,

modulePaths: ['./'],

transform: {

'^.+\\.ts?$': 'ts-jest',

},

testRegex: '.*\\.(spec|integration\\.spec)\\.ts$',

testPathIgnorePatterns: ['\\\\node_modules\\\\'],

moduleNameMapper: {

'^root/(.*)$': '<rootDir>/$1',

'^src/(.*)$': '<rootDir>/src/$1',

},

};

export default config;Jest Configurations

Within the Model directory, create a file named Model.integration.spec.ts.

import { Model } from '.';

import { database } from 'root/database';

const testTableName = 'test_table';

class TestModel extends Model {

protected static tableName = testTableName;

}

type TestType = {

id: number;

name: string;

};

describe('Model', () => {

beforeAll(async () => {

process.env.NODE_ENV = 'test';

await database.schema.createTable(testTableName, table => {

table.increments('id').primary();

table.string('name');

});

});

afterEach(async () => {

await database(testTableName).del();

});

afterAll(async () => {

await database.schema.dropTable(testTableName);

await database.destroy();

});

it('should insert a row and fetch it', async () => {

await TestModel.insert<Omit<TestType, 'id'>>({ name: 'TestName' });

const allResults = await TestModel.findAll<TestType>();

expect(allResults.length).toEqual(1);

expect(allResults[0].name).toEqual('TestName');

});

it('should insert a row and fetch it by id', async () => {

const { id } = await TestModel.insert<Omit<TestType, 'id'>>({ name: 'TestName' });

const result = await TestModel.findOneById<TestType>(id);

expect(result.name).toEqual('TestName');

});

});Model Integration Test

In the test, it showcased an ability to seamlessly interact with a database. I've designed a specialized TestModel class that inherits from our foundational, utilizing test_table as its designated test table. Throughout the tests, I'm emphasizing the model's core functions: inserting data and subsequently retrieving it, be it in its entirety or via specific IDs. To maintain a pristine testing environment, I've incorporated mechanisms to set up the table prior to testing, cleanse it post each test, and ultimately dismantle it once all tests are concluded.

Here leveraged the Template Method design pattern. This pattern is characterized by having a base class (often abstract) with defined methods like a template, which can then be overridden or extended by derived classes.

Following the pattern you’ve established with the Model class, we can create a UserModel class to extend and specialize for user-specific behavior.

In our Model change private to protected for reusability in sub-classes.

protected static tableName?: string;And then create UserModel in src/models/UserModel/index.ts like we did for the baseModel with the following content:

import { Model } from 'src/models/Model';

import { Role } from 'src/@types';

type UserType = {

id: number;

email: string;

first_name: string;

last_name: string;

role: Role;

}

class UserModel extends Model {

protected static tableName = 'users';

public static async findByEmail(email: string): Promise<UserType | null> {

return this.table.where('email', email).select('*').first();

}

}UserModel class

To conduct rigorous testing, we need a dedicated test database where table migrations and deletions can occur. Recall our configuration in the knexfile, where we utilized the same database name across environments with this line:

export default Object.fromEntries(environments.map((env: string) => [env, commonConfig]));To both develop and test databases, we must adjust the docker-composeconfiguration for database creation and ensure the correct connection settings. The necessary connection adjustments should also be made in the knexfile.

// ... configs of knexfile.ts

export default {

development: {

...commonConfig,

},

test: {

...commonConfig,

connection: {

...connection,

database: process.env.DB_NAME_TEST as string,

}

}

}knexfile.ts

With the connection established, setting process.env.NODE_ENV to "test" ensures that we connect to the appropriate database. Next, let's craft a test for the UserModel.

import { UserModel, UserType } from '.';

import { database } from 'root/database';

import { faker } from '@faker-js/faker';

import { Role } from 'src/@types';

const test_user: Omit<UserType, 'id'> = {

email: faker.internet.email().toLowerCase(),

first_name: faker.person.firstName(),

last_name: faker.person.lastName(),

password: 'test_password',

role: Role.User,

};

describe('UserModel', () => {

beforeAll(async () => {

process.env.NODE_ENV = 'test';

await database.migrate.latest();

});

afterEach(async () => {

await database(UserModel.tableName).del();

});

afterAll(async () => {

await database.migrate.rollback();

await database.destroy();

});

it('should insert and retrieve user', async () => {

await UserModel.insert<typeof test_user>(test_user);

const allResults = await UserModel.findAll<UserType>();

expect(allResults.length).toEqual(1);

expect(allResults[0].first_name).toEqual(test_user.first_name);

});

it('should insert user and retrieve by email', async () => {

const { id } = await UserModel.insert<typeof test_user>(test_user);

const result = await UserModel.findOneById<UserType>(id);

expect(result.first_name).toEqual(test_user.first_name);

});

});UserModel Integration Test

Initially, this mock user is inserted into the database, after which a retrieval operation ensures that the user was successfully stored, as verified by matching their first name. In another segment of the test, once the mock user finds its way into the database, we perform a retrieval using the user’s ID, further confirming the integrity of our insertion mechanism. Throughout the testing process, it’s crucial to maintain an isolated environment. To this end, before diving into the tests, the database is migrated to the most recent structure. Post each test, the user entries are cleared to avoid any data residue. Finally, as the tests wrap up, a migration rollback cleans the slate, and the database connection gracefully closes.

Using this approach, we can efficiently extend each of our models to handle precise database interactions.

import { Model } from 'src/models/Model';

export type PostType = {

id: number;

title: string;

content: string;

user_id: number;

};

export class PostModel extends Model {

public static tableName = 'posts';

protected static async findAllByUserId(user_id: number): Promise<PostType[]> {

if (!user_id) return [];

return this.table.where('user_id', user_id).select('*');

}

}PostModel.ts

The PostModel specifically targets the 'posts' table in the database, as indicated by the static tableName property. Moreover, the class introduces a unique method, findAllByUserId, designed to fetch all posts associated with a specific user. This method checks the user_id attribute, ensuring posts are only fetched when a valid user ID is provided.

If necessary to have a generic method for updating, we can add an additional method in the base Model:

public static async updateOneById<Payload>(

id: number,

data: Payload

): Promise<{

id: number;

} | null> {

const [result] = await this.table.where({ id }).update(data).returning('id');

return result;

}Update by id in base Model

So, this method updateOneById can be useful for all model sub-classes.

Conclusion

In wrapping up, it’s evident that a modular approach not only simplifies our development process but also enhances the maintainability and scalability of our applications. By compartmentalizing logic into distinct models, we set a clear path for future growth, ensuring that each module can be refined or expanded upon without causing disruptions elsewhere.

These models aren’t just theoretical constructs — they’re practical tools, effortlessly pluggable into controllers, ensuring streamlined and reusable code structures. So, as we journey through, let’s savor the transformative power of modularity, and see firsthand its pivotal role in shaping forward-thinking applications.

I welcome your feedback and am eager to engage in discussions on any aspect.

References

Published at DZone with permission of Anton Kalik. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments