A Guide to Deploying AI for Real-Time Content Moderation

A step-by-step guide to deploying an AI-powered real-time content moderation system, covering data collection, processing, models, and human review.


Content moderation is crucial for any digital platform to ensure the trust and safety of the users. While human moderation can handle some tasks, AI-driven real-time moderation becomes essential as platforms scale. Machine learning (ML) powered systems can moderate content efficiently at scale with minimal retraining and operational costs. This step-by-step guide outlines an approach to deploying an AI-powered real-time moderation system.

Attributes of a Real-Time Moderation System

A real-time content moderation system evaluates user-submitted content — text, images, videos, or other formats — to ensure compliance with platform policies. Key attributes of an effective system include:

- Speed: Ability to review content without degrading the user experience or introducing significant latency.

- Scalability: Ability to handle thousands of requests per second in a timely manner.

- Accuracy: Minimizing false positives and false negatives for reliability.

Step-by-Step Guide to Deploying an AI Content Moderation System

Step 1: Define Policies

Policies are the foundation of any content moderation system. A policy defines the rules against which content is evaluated. A platform can have separate policies for areas such as hate speech, fraud prevention, and adult and sexual content. The rules published by X (Twitter) are one example.

These policies are defined as objective rules, which can be stored as a configuration for easy access and evaluation.
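
As a minimal sketch of what "policies as configuration" could look like, the snippet below stores each policy as a small record and checks model scores against it. The policy IDs, actions, and threshold values are illustrative assumptions, not values from any real platform.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Policy:
    policy_id: str      # hypothetical identifier, e.g. "hate_speech"
    description: str    # short human-readable summary of the rule
    action: str         # what to do on violation, e.g. "remove" or "restrict"
    threshold: float    # score at or above which the policy counts as violated


# Illustrative values only; real thresholds would come from evaluation on labeled data.
POLICIES: Dict[str, Policy] = {
    p.policy_id: p
    for p in [
        Policy("hate_speech", "Attacks on people based on protected attributes", "remove", 0.90),
        Policy("fraud", "Scams and deceptive financial offers", "remove", 0.85),
        Policy("adult_content", "Adult and sexual content", "restrict", 0.80),
    ]
}


def violated_policies(scores: Dict[str, float]) -> List[str]:
    """Return the IDs of policies whose score meets or exceeds the configured threshold."""
    return [
        policy_id
        for policy_id, policy in POLICIES.items()
        if scores.get(policy_id, 0.0) >= policy.threshold
    ]
```

Keeping thresholds and actions in configuration rather than in model code means a policy change does not require retraining or redeploying the model.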

Step 2: Data Collection and Preprocessing

Once the policies are defined, we need to collect data to serve as samples for training machine learning models. To avoid bias, the dataset should include a good mix of the content types expected on the platform, with both policy-compliant and non-compliant examples.

Sources of data:

- Synthetic data generation: Use generative AI to create data.

- Open-source datasets: Multiple datasets are available online on platforms and other open-source websites. Choose the dataset that fits the platform's needs.

- Historical user-generated content: Ethically utilize the historical content posted by the users.

Once the data is collected, it needs to be labeled by highly trained human reviewers who have a strong understanding of the platform's policies. This labeled data is treated as a "Golden Set" and can be used to train or fine-tune the ML models.
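
One quick sanity check on a labeled set is to look at how the labels are distributed, since a heavily skewed set can bias the model. The sketch below assumes the labeled data is a list of `(content, label)` pairs; that representation and the label names are assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, List, Tuple


def label_balance(samples: List[Tuple[str, str]]) -> Dict[str, float]:
    """Return the share of each label in a list of (content, label) pairs."""
    counts = Counter(label for _, label in samples)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


# Hypothetical labeled samples.
golden_set = [
    ("Great photo!", "compliant"),
    ("See you tomorrow", "compliant"),
    ("Send me your bank PIN to claim a prize", "fraud"),
    ("Thanks for sharing", "compliant"),
]
print(label_balance(golden_set))
```

If one label dominates, more examples of the rarer labels can be collected before training.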

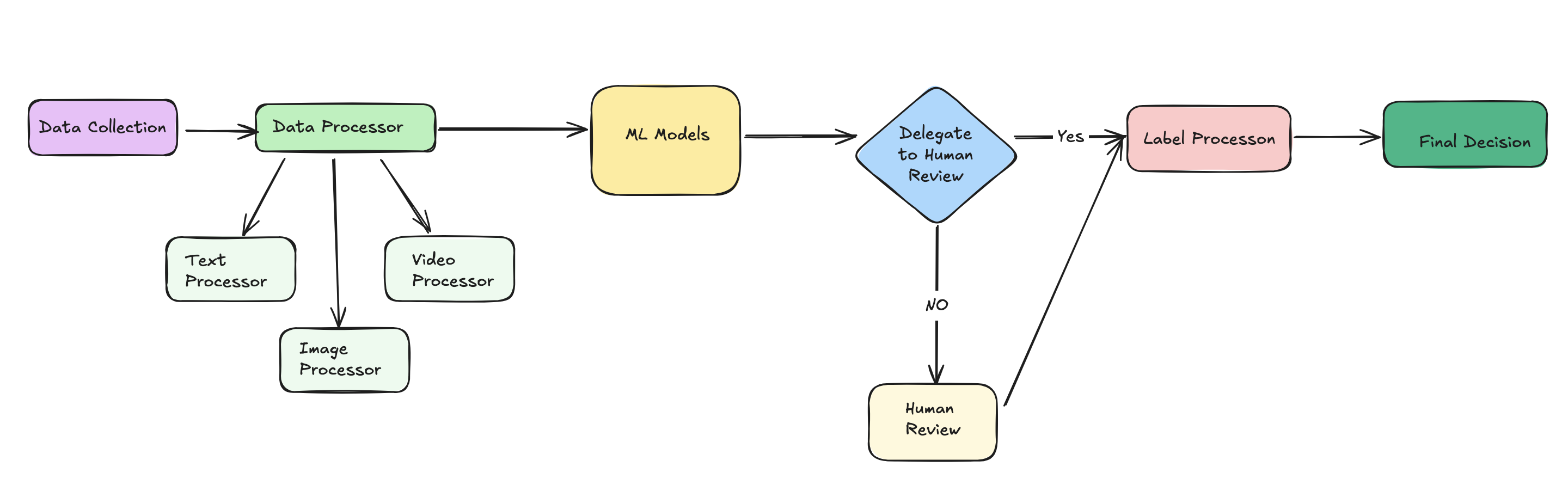

Before the ML models can operate on the data and produce results, the data must be preprocessed for efficiency and compatibility. Some preprocessing techniques include:

- Text data: Normalize the text by removing stop words and breaking it down into n-grams, depending on how the data is supposed to be consumed.

- Image data: Standardize images to a fixed resolution, size, and format for model compatibility.

- Video: Extract different frames to process them as images.

- Audio: Transcribe audio into text using widely available speech-to-text models, then apply the text models to the transcript. However, this approach may miss non-verbal content that needs to be moderated.
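
The text preprocessing step above can be sketched with the standard library alone. The stop-word list here is a tiny illustrative assumption; a production system would use a fuller, language-specific list and possibly a proper tokenizer.

```python
import re
from typing import List

# Tiny illustrative stop-word list (an assumption, not a complete list).
STOP_WORDS = {"a", "an", "the", "is", "are", "to", "of", "and", "in", "on"}


def normalize(text: str) -> List[str]:
    """Lowercase the text, split it into word tokens, and drop stop words."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return [token for token in tokens if token not in STOP_WORDS]


def ngrams(tokens: List[str], n: int) -> List[str]:
    """Return contiguous n-grams, each joined into a single space-separated string."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


tokens = normalize("The offer is FREE, click to win!")
print(tokens)             # ['offer', 'free', 'click', 'win']
print(ngrams(tokens, 2))  # ['offer free', 'free click', 'click win']
```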

Step 3: Model Training and Selection

A variety of models can be used depending on the platform's needs and the content type being supported. Some options to consider are:

Text

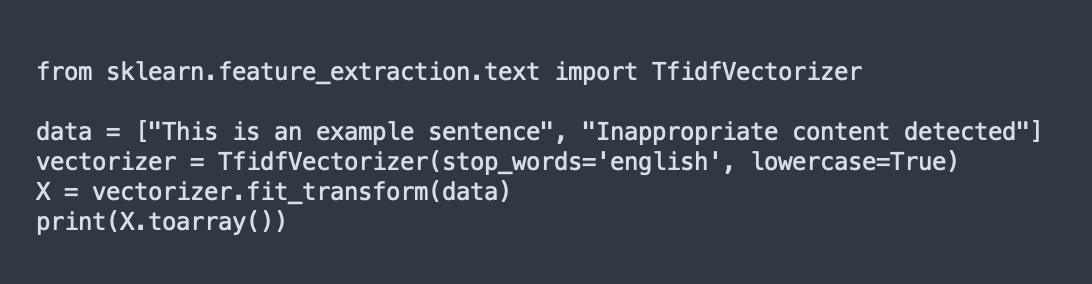

- Bag of words/Term Frequency-Inverse Document Frequency (TF-IDF): TF-IDF gives higher weights to terms that are rare across the corpus, so harmful or policy-violating words can carry high weights and be caught even if they occur infrequently. However, the list of violating words to match against is necessarily limited, and sophisticated actors can find loopholes, for example through deliberate misspellings.

- Transformers: This is the architecture behind GPT models, and it can be effective at capturing euphemisms or subtle forms of harmful text. One possible approach is to fine-tune a GPT-style model on examples that reflect the platform's policies.
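
To make the TF-IDF weighting concrete, here is a small hand-written sketch of the basic, unsmoothed formula (term frequency times the log of inverse document frequency). Libraries typically use smoothed variants, so their numbers will differ; the sample documents are invented for illustration.

```python
import math
from collections import Counter
from typing import Dict, List


def tf_idf(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Compute unsmoothed TF-IDF weights for a list of tokenized documents.

    tf  = term count in the document / document length
    idf = log(number of documents / number of documents containing the term)
    """
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


docs = [
    ["free", "money", "now"],
    ["see", "you", "now"],
    ["call", "me", "now"],
]
weights = tf_idf(docs)
# "now" appears in every document, so its weight is 0; "free" is rare and scores higher.
print(weights[0])
```

These weights would then feed a classifier; on their own they only indicate how distinctive a term is, not whether it violates a policy.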

Image

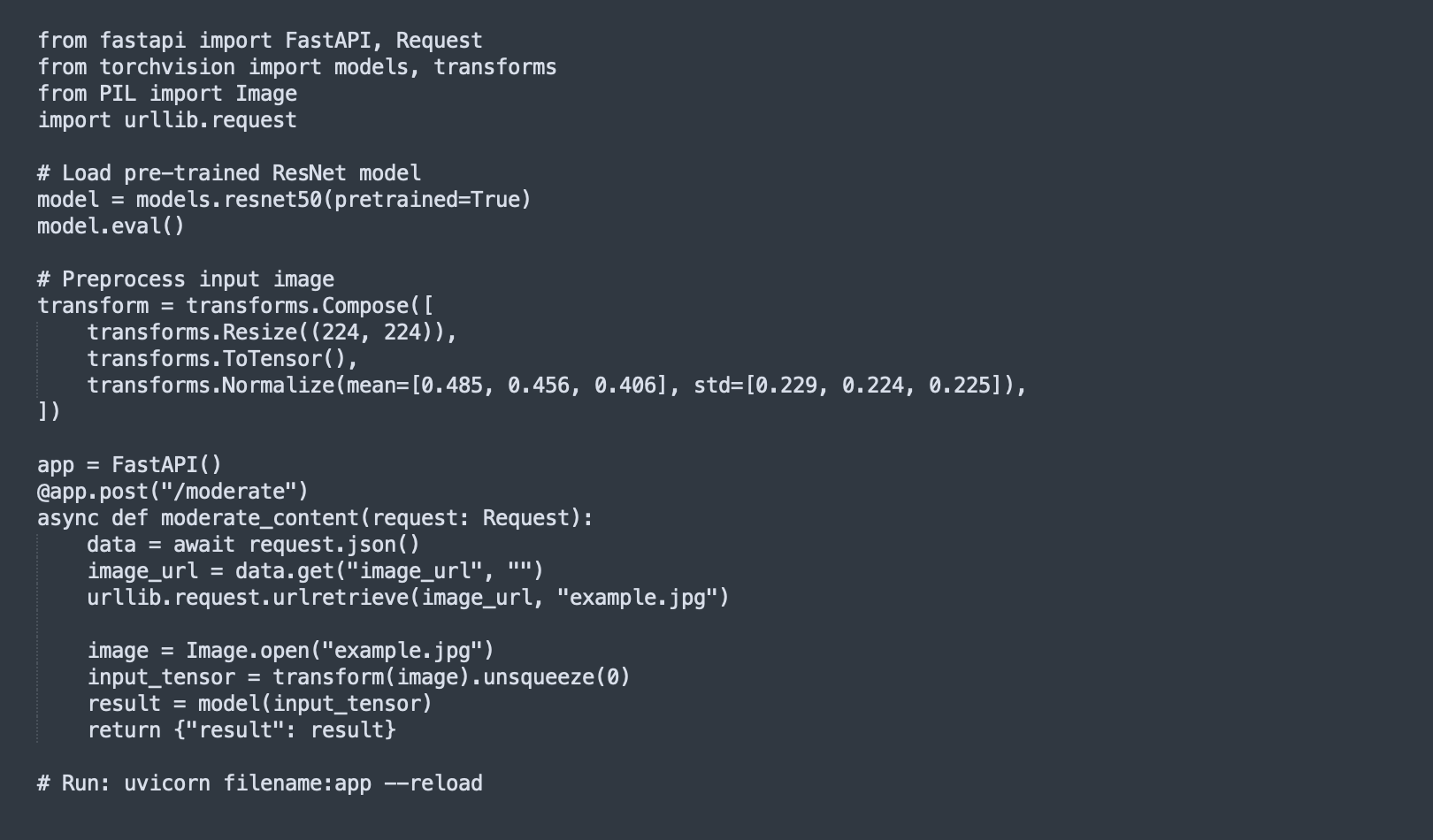

- Pre-Trained Convolutional Neural Networks (CNNs): Models such as VGG and ResNet are trained on large image datasets and, once a classification layer is trained on labeled examples, can identify harmful content such as nudity and violence.

- Custom CNNs: For improved precision and recall, CNNs can be fine-tuned for specific categories and adapted to the platform's policy needs.

All of these models must be trained and evaluated against the "Golden Set" to achieve the desired performance before deployment. The models can be trained to generate labels, which are then processed to produce a decision about the content.
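
Evaluating against the labeled set usually comes down to precision and recall for the violating class. A minimal sketch, assuming labels and predictions are represented as parallel lists of booleans (`True` meaning "violates policy"):

```python
from typing import List, Tuple


def precision_recall(golden: List[bool], predicted: List[bool]) -> Tuple[float, float]:
    """Return (precision, recall) for the violating class (True = violates policy)."""
    if len(golden) != len(predicted):
        raise ValueError("golden and predicted must have the same length")
    pairs = list(zip(golden, predicted))
    true_pos = sum(1 for g, p in pairs if g and p)
    false_pos = sum(1 for g, p in pairs if not g and p)
    false_neg = sum(1 for g, p in pairs if g and not p)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall


golden = [True, True, False, False, True]
predicted = [True, False, True, False, True]
print(precision_recall(golden, predicted))  # 2 true positives, 1 false positive, 1 false negative
```

Low precision means compliant content is being flagged (false positives); low recall means violations are slipping through (false negatives).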

Step 4: Deployment

Once the models are ready for deployment, they can be exposed through APIs that other services call for real-time moderation. For less urgent tasks that do not require real-time moderation, a batch processing system can be set up instead.
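
The sketch below shows the shape such an API handler might take: it accepts a JSON request body, scores the content, and returns a JSON response. The field names (`content_id`, `text`, `scores`) and the `dummy_model` function are hypothetical; in practice a web framework would wrap a handler like this, and a trained model would replace the stand-in.

```python
import json
from typing import Callable, Dict


def dummy_model(text: str) -> Dict[str, float]:
    """Hypothetical stand-in for a trained model; returns one score per policy."""
    return {"fraud": 0.97 if "free money" in text.lower() else 0.03}


def handle_moderation_request(
    body: str,
    model: Callable[[str], Dict[str, float]] = dummy_model,
) -> str:
    """Parse a JSON request, score the content, and return a JSON response."""
    error = json.dumps({"error": "expected a JSON object with a string 'text' field"})
    try:
        payload = json.loads(body)
        text = payload["text"]
    except (ValueError, KeyError, TypeError):
        return error
    if not isinstance(text, str):
        return error
    return json.dumps({"content_id": payload.get("content_id"), "scores": model(text)})


print(handle_moderation_request('{"content_id": "c1", "text": "Get FREE money now"}'))
```

The same function could be applied to items read from a queue or file when batch processing is sufficient.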

Step 5: Human Review

AI/ML systems cannot make confident decisions in every case. Ambiguous cases arise when the predicted ML score falls below the thresholds selected for confident decision-making. In these scenarios, the content should be routed to human moderators for an accurate decision. Human reviewers are also essential for reviewing false positive decisions made by the AI system. Human reviewers can generate the same kinds of labels as the ML models by following a decision tree that encodes the policies, and these labels can be used to finalize decisions.
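
A simple way to implement this routing is with two thresholds: scores above the upper one are actioned automatically, scores below the lower one are approved automatically, and everything in between goes to a human queue. The threshold values and route names below are assumptions for illustration.

```python
def route(score: float, approve_below: float = 0.2, remove_above: float = 0.9) -> str:
    """Route content based on the model's violation score in the range [0, 1]."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= remove_above:
        return "auto_remove"    # model is confident the content violates policy
    if score <= approve_below:
        return "auto_approve"   # model is confident the content is compliant
    return "human_review"       # ambiguous: send to a human moderator


for score in (0.95, 0.05, 0.50):
    print(score, route(score))
```

Widening the gap between the two thresholds sends more content to human review, trading review cost for accuracy.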

Step 6: Label Processor

A label processor can be used to interpret the labels generated by ML systems and human reviewers and convert them into actionable decisions for users. This could be a straightforward system that maps system-generated strings to human-readable strings.
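
A minimal sketch of such a mapping follows. The label names, actions, and user-facing messages are hypothetical, and the rule that the most severe action wins when several labels are present is an assumption about how conflicts might be resolved.

```python
from typing import Dict, List, Tuple

# Hypothetical mapping from system-generated labels to (action, user-facing message).
LABEL_TO_DECISION: Dict[str, Tuple[str, str]] = {
    "hate_speech": ("remove", "Your post was removed for violating our hate speech policy."),
    "fraud": ("remove", "Your post was removed for violating our fraud policy."),
    "adult_content": ("restrict", "Your post is restricted to adult audiences."),
}

# Higher number means a more severe action.
SEVERITY = {"allow": 0, "restrict": 1, "remove": 2}


def process_labels(labels: List[str]) -> Dict[str, object]:
    """Convert labels from models or reviewers into one action plus readable messages."""
    action = "allow"
    messages: List[str] = []
    for label in labels:
        if label not in LABEL_TO_DECISION:
            continue  # unknown labels are ignored here; a real system might escalate them
        label_action, message = LABEL_TO_DECISION[label]
        messages.append(message)
        if SEVERITY[label_action] > SEVERITY[action]:
            action = label_action
    return {"action": action, "messages": messages}


print(process_labels(["adult_content", "hate_speech"]))
```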

Step 7: Analytics and Reporting

Tools like Tableau and Power BI can be used to track and visualize moderation metrics, and Apache Airflow can be used to schedule the data pipelines that feed them. Key metrics to monitor include precision and recall for the ML systems, human review time, throughput, and response time.
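
For response time, a tail percentile is usually more informative than the average, since a few slow requests can hide behind a healthy mean. The sketch below computes a nearest-rank percentile and a simple throughput figure; the sample latencies are invented for illustration.

```python
import math
from typing import List


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of the data at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def throughput(request_count: int, window_seconds: float) -> float:
    """Requests handled per second over a measurement window."""
    return request_count / window_seconds


# Hypothetical response times in milliseconds.
latencies_ms = [12, 15, 11, 240, 14, 13, 16, 18, 17, 19]
print(percentile(latencies_ms, 50))  # median
print(percentile(latencies_ms, 95))  # tail latency, dominated by the slow request
print(throughput(3000, 60))          # requests per second
```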

Conclusion

Building and deploying an AI-powered real-time moderation system helps ensure the scalability and safety of digital platforms. This guide provides a roadmap for balancing speed, accuracy, and human oversight so that content aligns with your platform’s policies and values.

Opinions expressed by DZone contributors are their own.
