Math Behind Software and Queueing Theory

I will be taking a closer look at a branch of mathematics known as queueing theory and its potential applications in the world of software engineering.

Join the DZone community and get the full member experience.

Join For FreeIn this part of the Math Behind Software series, I will be taking a closer look at a branch of mathematics known as queueing theory and its potential applications in the world of software engineering. I will describe some basics around the queueing theory, explain the basic laws from said theory, and show you the equations which can be used to estimate queue throughput. I will end with a short example of calculating service capacity with all the things explained earlier. Let’s start with a quick recap of what was described in the previous part, which can be found here.

Contention and Coherency — Recap

In the previous part of the series, I explained contention and coherency delay and their impact on the performance of our system. I brought up concepts like Amdahl’s Law, which is focused on measuring the impact of contention, and Universal Scalability Law (Gunther’s Law) which additionally accounts for coherency delay. I described them and presented their equations. I also plotted graphs to show the difference in numbers between both Laws.

How Both Parts Are Related

As you may have noticed while reading through the previous part, getting real numbers from either Amdahl’s Law or Universal Scalability Law is quite a task and may require lots of checks, tests and preparations. Fortunately, the queueing theory seems to help us solve this problem. It contains laws, equations and relations which can be used to calculate the same metrics as Universal Scalability Law and their arguments are way easier to get than ones of USL. As a side note, I can add that Universal Scalability Law can be derived from one of the models from queueing theory, namely the machine repairman model — according to Neil Gunther himself.

What Is Queueing Theory?

Unsurprisingly, queueing theory is a branch of mathematics, focused on studying and describing queues (or, in more professional terms, lines). The whole theory is all about how lines are created, how they behave, and, most important, how and why they malfunction.

It is one of these branches of mathematics which are useful in real life; e.g., it can be used in many branches of industry. From general logistics — to estimating and calculating the flow of goods between stores, through telecoms — the quality of service assurance or teleco flags requires the capacity to even our world of software — all the message queues around like Kafka or RabbitMQ.

What Is Kendall Notation — Describing Queuing Systems?

Kendall’s notation or Kendall notion is a system for describing and classifying queuing systems proposed in 1953 by D.G. Kendall. Originally it was based on three parameters A/S/c but later another three parameters were added and the notation ended up as A/S/c/K/N/D. Of course, each one of the parameters has its unique meaning and represents a different aspect of a particular system.

Below you can see the description of all the parameters and their meaning:

- A is the time between the arrival of customers in the queue

- S is the service time distribution

- c is the number of servers

- K is the capacity of the queue,

- N is the overall maximum number of customers

- D is the queuing discipline

Parameters A, B, and D have a finite set of possible values — you can find more information about the most common values of these parameters here. On the other hand, parameters c, K, and N have an infinite set of possible values and can be assigned values from the whole set of whole numbers.

Examples of queuing systems descriptions in Kendall notation:

- M/M/c

- M/M/2

- G/M/c/5

- D/D/3/5/PS

If any of the last three elements are missing, for example in M/M/c queue, then one should assume that K and N are equal to infinity and D is equal to FIFO.

Queueing Theory in Mathematical Symbols

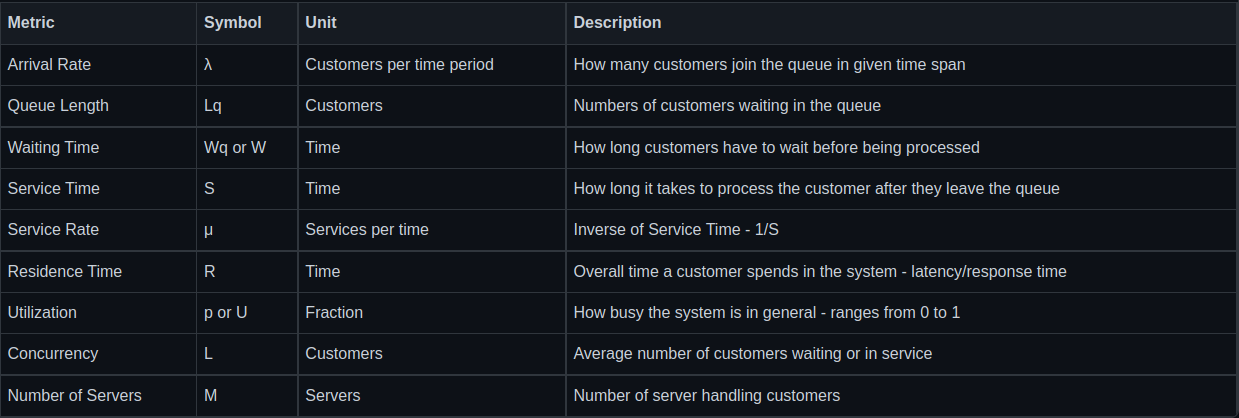

Before we move to describe laws and relationships between various concepts within queueing theory, let’s make a short description of metrics and symbols that are commonly used to represent them.

As you can see, most of the metrics are averaged calculations. It works this way to simplify the computation as otherwise, it will end up with different outputs based on temporal traffic, while in queueing theory it is more about the long-term averaged results.

Another important thing is that most of the metrics are, in fact, unitless and do not have any explainable units like meters or grams. They are just pure values so we do not have to deal with verifying units and unit correctness.

What is more, we must remember to use time periods everywhere — for every metric based on time. For example, if our arrival rate is 4000 customers per hour and the service rate is 5 per second, we have to normalize the values either to hours or seconds but we cannot compute them before such an operation as our results would not be logically correct.

Queueing Theory Laws

I will start with Little’s Law which is one of the most important laws concerning queueing theory. It was presented by John Little in 1954 and for many years taken for granted and used without any formal mathematical proof, which Little finally published in 1961. The law describes the relationship between the long-term average number of customers in a stable system, the arrival time, and residence time — the number of customers is equal to the arrival rate multiplied by residence time. In symbolic notation:

L = AR

It can be applied to any system including systems within systems. Another notable thing related to this law is that “it is not influenced by the arrival process distribution, the service distribution, the service order, or practically anything else.” — quoting from Simchi-Levi, D.; Trick, M. A. (2013) Introduction to Little’s Law as Viewed on Its 50th Anniversary.

Be Aware

This law can only be applied to stable (stationary) systems — systems whose “unconditional joint probability distribution does not change when shifted in time. Consequently, parameters such as mean and variance also do not change over time”. For our needs we can assume that it means that our system is not affected by any outside factors while it is up and running, e.g. changing resources it uses.

Utilization Law

Describes the relationship between, unsurprisingly, utilization, throughput, service time, and the number of servers. Utilization is equal to throughput multiplied by service time. In symbolic notation:

p = λS/M

There is also an alternative equation of utilization law, which states that utilization is equal to the arrival rate divided by the service rate.

p = λ/u

In the second form of the equation, we can notice that when customers arrive faster than they are processed, the utilization will be higher than 100% and the queue will grow infinitely.

The last important equation is not a law per se. However, it describes quite a significant relation, namely, the relationship between residence time, waiting time, and service time. The resident time is equal to waiting time plus service time. In mathematical notation:

R = Wq + S

There is yet another equation for R that we can use for the M/M/c queue and which I will be using quite extensively later on. In mathematical notation:

R = S/(1-(p)^M)

where M is the number of servers taken from the system description in Kendall’s notation.

Proving it would require a separate article focused only on this topic so I will not do it in this article. For those of you who are more interested in mathematics with formal proof, beware: the math there has the potential to burn one’s brain at once.

How Queueing Theory Relates to Software

Basically, we can treat most of the systems like a queue so users send requests, the request process, and the response return to the user, or when the system is too busy to process the request right away, the request waits until some arbitrary timeout is reached or it will be processed.

The real problem is to correctly identify the class of the system we are working on. In most cases, it will be the variation of M/M/c or Mx/m/c. Unfortunately, it can result in our calculations not being very in line with real life. As long as we are taking care of long-term average system performance then M/M/c is an appropriate description and most of the inconsistencies should be kept in line with averaged results.

With all this theoretical introduction done, we can move to the most important part — the example.

Example

Assume that there is a service somewhere and that it is doing some arbitrary work. The team responsible for its development and maintenance estimated that average traffic will be around 100 requests per minute, not great, not terrible. Through some simple performance tests, they learned that processing a single request takes around 200 milliseconds.

In the beginning, they want to check how long the service will be processing requests in the long term. Then they want to check what will happen when the number of requests increases to 200 per minute. They make the assumption that their service is an M/M/1 queue.

Let’s Start With Normalizing Both Values To Use the Same Time Period.

λ = 100 RPM = 1.6 RPS => λ2 = 3.2 RPS

S = 200 ms = 0.2 s

M = 1

Calculate p (Utilization) As It Is Needed Later in the R (Residence Time) Equation.

p = (A * S)/M

p = 1.6 * 0.2 / 1= 0.32

utilization is 0.32 %

Calculating R (Latency or Residence Time)

R = S/(1-p^M)

R = 0.2/(1- 0.32)= 0.2/(0.68)=0.29

R = 0.29

The average long-term latency in case of 100 requests per minute is 0,29 seconds or 290 milliseconds.

Now we can check and see what happens when the number of requests will be increased to 3.2 RPS

Recalculation Utilization

p = 3.2 * 0.2 = 0.64

Utilization is 2 times higher than what was expected before because we doubled the number of requests.

Recalculating Latency for New Value of Utilization

R = 0.2/(1–0.64)= 0.2/(0.36)=0.56

R = 0.56

The average long-term latency was increased to 0,56 seconds or 560 milliseconds which is slightly better than utilization.

Our maintainers were able to successfully calculate how their system will behave so they decided to check how many additional instances of service will be needed to keep latency for 200 RPM on the same level as for 100 RPM while keeping utilization at 64%.

First, I will have to make a few transformations to our R equation.

Given that R = S/(1-p^M) and we need to find M we need such an equation;

M = (ln (1-S/R))/ln(p)

M = ln(1–0.2/0.29)/ln(0.64) = ln(0.31)/ln(0.64) = 2.62

M is equal to 2.62 but we have to round it up to whole numbers because we cannot add 2,6 nodes to only 2 or 3. We need at least 2 additional servers to keep up with latency after the number of requests was doubled.

One last thing I want to show you is what will happen if the number of RPS is huge like 1000 RPS. Like previously, I will start with calculating utilization.

P = 1000 * 0,2 = 200

Utilization is equal to 200 while it should be equal to, at most, 1. It means that the system utilization is 200 times bigger than it should be — the system is down, dead, and without any redeeming qualities.

Summary

It was quite a brief and simple introduction to queueing theory and its applications in the world of software engineering. I have proposed an assumption we can make while applying queueing theory to software and describe problems that may occur while doing so. I have also provided an example to show how we can use all described laws and relations in real-life-ish cases. I hope that my description was clear and cohesive and that it will be useful for you sometime in the future or that you learn something new at least.

Published at DZone with permission of Bartłomiej Żyliński. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments