The Maturing of Cloud-Native Microservices Development: Effectively Embracing Shift Left to Improve Delivery

Shifting left is a focus on app delivery from the outset of a project, where software engineers are just as focused on the delivery process as they are on writing code.


Editor's Note: The following is an article written for and published in DZone's 2024 Trend Report, Cloud Native: Championing Cloud Development Across the SDLC.

When it comes to software engineering and application development, cloud native has become commonplace in many teams' vernacular. When people survey the world of cloud native, they often come away with the perspective that it is suited only to large enterprise applications. A few years ago, that may have been the case, but with the advancement of tooling and services surrounding systems such as Kubernetes, the barrier to entry has been substantially lowered. Even so, does adopting cloud-native practices for applications consisting of a few microservices make a difference?

Just as cloud native has become commonplace, the shift-left movement has made inroads into many organizations' processes. Shifting left is a focus on application delivery from the outset of a project, where software engineers are just as focused on the delivery process as they are on writing application code. Shifting left implies that software engineers understand deployment patterns and technologies as well as implement them earlier in the SDLC.

Shifting left using cloud native with microservices development may sound like a definition containing a string of contemporary buzzwords, but there's real benefit to be gained in combining these closely related topics.

Fostering a Deployment-First Culture

Process is necessary within any organization. Processes break work into manageable tasks across multiple teams, with the objective of giving the organization an efficient path to a goal. Unfortunately, organizations can get lost in their processes. Teams and individuals focus on doing their tasks as well as possible, at times so intently that the goal the process was defined to serve gets lost.

Software development lifecycle (SDLC) processes are not immune to this problem. In any given organization, if individuals on application development teams are asked how they perceive their objectives, responses can include:

- "Completing stories"

- "Staying up to date on recent tech stack updates"

- "Ensuring their components meet security standards"

- "Writing thorough tests"

Most of the answers provided would demonstrate a commitment to the process, which is good. However, what is the goal? The goal of the SDLC is to build software and deploy it. Whether it be an internal or SaaS application, deploying software helps an organization meet an objective. When presented with the statement that the goal of the SDLC is to deliver and deploy software, just about anyone who participates in the process would say, "Well, of course it is." Teams often lose sight of this "obvious" directive because they're far removed from the actual deployment process. A strategic investment in the process can close that gap.

Cloud-native abstractions bring a common domain and dialogue across disciplines within the SDLC. Kubernetes is a good basis upon which cloud-native abstractions can be leveraged. Not only does Kubernetes' usefulness span applications of many shapes and sizes, but when it comes to the SDLC, Kubernetes can also be the environment used on systems ranging from local engineering workstations, through the entire delivery cycle, and on to production. Bringing the deployment platform all the way "left" to an engineer's workstation has everyone in the process speaking the same language, and deployment becomes a focus from the beginning of the process.

Various teams in the SDLC may look at "Kubernetes Everywhere" with skepticism. Work done on Kubernetes in reducing its footprint for systems such as edge devices has made running Kubernetes on a workstation very manageable. Introducing teams to Kubernetes through automation allows them to iteratively absorb the platform. The most important thing is building a deployment-first culture.

Plan for Your Deployment Artifacts

With all teams and individuals focused on the goal of getting their applications to production as efficiently and effectively as possible, how does the evolution of application development shift? The shift is subtle. With a shift-left mindset, there aren't necessarily a lot of new tasks, so the shift is where the tasks take place within the overall process. When a detailed discussion of application deployment begins with the first line of code, existing processes may need to be updated.

Build Process

If software engineers are to deploy to their personal Kubernetes clusters, are they able to build and deploy enough of an application that they're not reliant on code running on a system beyond their workstation? And there is more to consider than just application code. Is a database required? Does the application use a caching system?

It can be challenging to review an existing build process and refactor it for workstation use. The CI/CD build process may need to be re-examined to consider how it can be invoked on a workstation. For most applications, refactoring the build process can be accomplished in such a way that the goal of local build and deployment is met while also using the refactored process in the existing CI/CD pipeline.

For new projects, begin by designing the build process for the workstation. The build process can then be added to a CI/CD pipeline. The local build and CI/CD build processes should strive to share as much code as possible. This will keep the entire team up to date on how the application is built and deployed.
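One way to keep the workstation and pipeline builds on the same code is to describe the build as a single list of steps that both callers run. The following is a minimal sketch in Python, not a prescribed implementation. The function names `build_steps` and `run`, the service name `orders`, and the registry host are hypothetical, and the sketch assumes the `docker` and `helm` CLIs are installed.

```python
import subprocess
from typing import List


def build_steps(image: str, chart_dir: str, version: str) -> List[List[str]]:
    """Return the build commands shared by workstation and CI/CD builds."""
    tagged = f"{image}:{version}"
    return [
        ["docker", "build", "-t", tagged, "."],
        ["docker", "push", tagged],
        ["helm", "package", chart_dir, "--version", version],
    ]


def run(steps: List[List[str]], dry_run: bool = True) -> None:
    """Print each step; execute it only when dry_run is False."""
    for step in steps:
        print(" ".join(step))
        if not dry_run:
            subprocess.run(step, check=True)


if __name__ == "__main__":
    # Hypothetical values; a pipeline job would call the same function
    # with its own image name and version.
    run(build_steps("localhost:5000/orders", "charts/orders", "0.1.0"))
```

Because the pipeline imports the same `build_steps` function rather than restating the commands in its own configuration, a change to how the image or chart is built shows up in both places at once.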

Build Artifacts

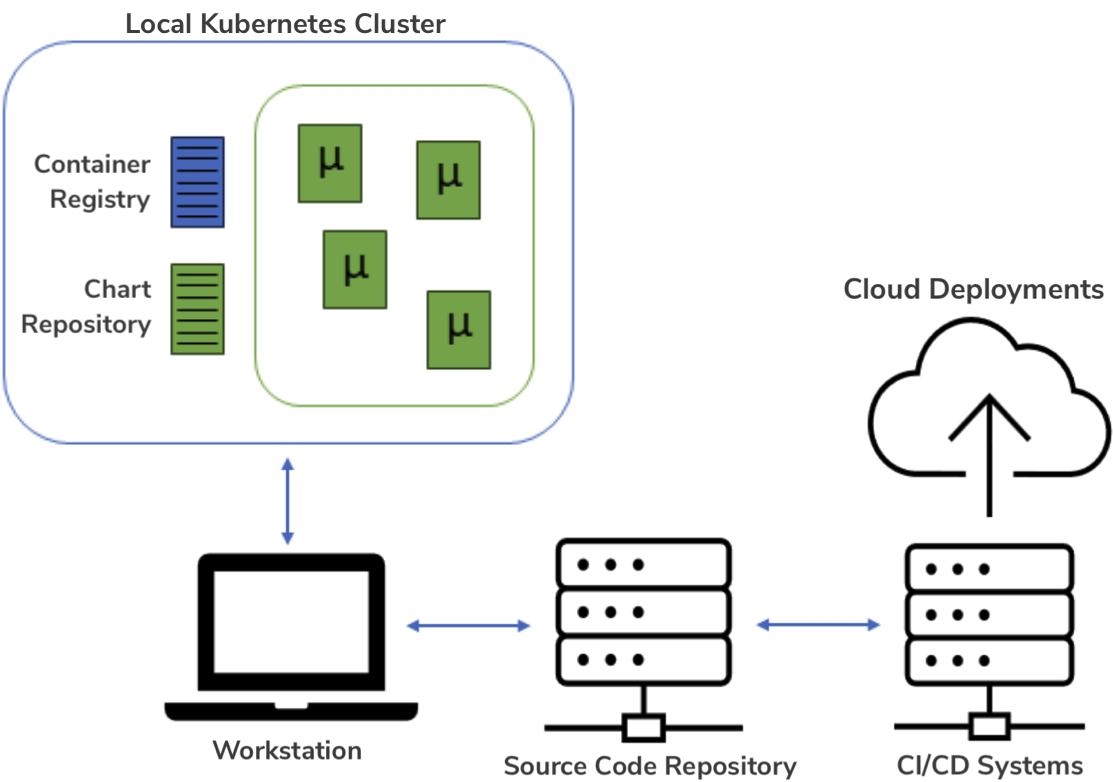

The primary deliverables for a build process are the build artifacts. For cloud-native applications, this includes container images (e.g., Docker images) and deployment packages (e.g., Helm charts). When an engineer is executing the build process on their workstation, the artifacts will likely need to be published to a repository, such as a container registry or chart repository.

The build process must be aware of context. Existing processes may already be aware of their context with various settings for environments ranging from test and staging to production. Workstation builds become an additional context. Given the awareness of context, build processes can publish artifacts to workstation-specific registries and repositories. For cloud-native development, and in keeping with the local workstation paradigm, container registries and chart repositories are deployed as part of the workstation Kubernetes cluster. As the process moves from build to deploy, maintaining build context includes accessing resources within the current context.
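As an illustration of context awareness, the sketch below resolves where artifacts are published from a single setting. It is an assumption-laden example rather than the article's own tooling: the `BUILD_CONTEXT` environment variable, the `BuildContext` type, and every registry host shown are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class BuildContext:
    """Where artifacts are published for one build context."""
    name: str
    image_registry: str
    chart_repository: str


# Hypothetical hosts; substitute the registries your environments actually use.
CONTEXTS = {
    "workstation": BuildContext(
        "workstation",
        "registry.localhost:5000",
        "oci://registry.localhost:5000/charts",
    ),
    "staging": BuildContext(
        "staging",
        "registry.staging.example.com",
        "oci://registry.staging.example.com/charts",
    ),
}


def resolve_context(env: Optional[Mapping[str, str]] = None) -> BuildContext:
    """Pick the context named by BUILD_CONTEXT, defaulting to the workstation."""
    env = os.environ if env is None else env
    name = env.get("BUILD_CONTEXT", "workstation")
    if name not in CONTEXTS:
        raise ValueError(f"unknown build context: {name!r}")
    return CONTEXTS[name]


def image_ref(ctx: BuildContext, service: str, version: str) -> str:
    """Build the full image reference for the given context."""
    return f"{ctx.image_registry}/{service}:{version}"


if __name__ == "__main__":
    print(image_ref(resolve_context(), "orders", "0.1.0"))
```

Defaulting to the workstation context means an engineer who sets nothing publishes to the registry inside their own cluster, while a pipeline has to opt in to a shared environment explicitly.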

Parameterization

Central to this entire process is that key components of the build and deployment process definition cannot be duplicated based on a runtime environment. For example, if a container image is built and published one way on the local workstation and another way in the CI/CD pipeline, how long will it be before the two diverge?

Most likely, they diverge sooner than expected. Divergence in a build process will create a divergence across environments, which leads to divergence in teams and results in the eroding of the deployment-first culture. That may sound a bit dramatic, but as soon as any code forks — without a deliberate plan to merge the forks — the code eventually becomes, for all intents and purposes, unmergeable.

Parameterizing the build and deployment process is required to maintain a single set of build and deployment components. Parameters define build context such as the registries and repositories to use. Parameters define deployment context as well, such as the number of pod replicas to deploy or resource constraints. As the process is created, lean toward over-parameterization. It's easier to hold a parameter constant than to extract one from an existing process later.

Figure 1. Local development cluster
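The parameterization described in this section can be sketched as one set of declared defaults with per-environment overrides, turned into arguments for a `helm upgrade --install` call. This is a hedged illustration only: the value keys (`image.repository`, `replicaCount`, and so on) are hypothetical and must match whatever the chart in question actually defines, and the function name `helm_args` is made up for this example.

```python
from typing import Dict, List

# Every tunable is declared once, with a workstation-friendly default.
# Hypothetical keys; they must correspond to values your chart defines.
DEFAULTS: Dict[str, str] = {
    "image.repository": "registry.localhost:5000/orders",
    "image.tag": "0.1.0",
    "replicaCount": "1",
    "resources.limits.memory": "256Mi",
}


def helm_args(release: str, chart: str, overrides: Dict[str, str]) -> List[str]:
    """Merge overrides onto the defaults and render a helm command line."""
    unknown = set(overrides) - set(DEFAULTS)
    if unknown:
        # Refuse parameters that were never declared, so environments
        # cannot quietly grow their own private settings.
        raise KeyError(f"undeclared parameters: {sorted(unknown)}")
    values = {**DEFAULTS, **overrides}
    args = ["helm", "upgrade", "--install", release, chart]
    for key in sorted(values):
        args += ["--set", f"{key}={values[key]}"]
    return args


if __name__ == "__main__":
    # A staging deployment changes only what differs from the workstation.
    print(" ".join(helm_args("orders", "charts/orders", {"replicaCount": "3"})))
```

Rejecting undeclared overrides is a design choice for this sketch: it keeps the list of parameters in one place, which is the property that prevents the workstation and pipeline definitions from drifting apart.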

Cloud-Native Microservices Development in Action

In addition to the deployment-first culture, cloud-native microservices development requires tooling support that doesn't impede the day-to-day tasks performed by an engineer. If engineers can be shown a new pattern for development that allows them to be more productive with only a minimum-to-moderate level of understanding of new concepts, while still using their favorite tools, the engineers will embrace the paradigm. While engineers may push back or be skeptical about a new process, once the impact on their productivity is tangible, they will be energized to adopt the new pattern.

Easing Development Teams Into the Process

Changing culture is about getting teams on board with adopting a new way of doing something. The next step is execution. Shifting left requires that software engineers move from designing and writing application code to becoming an integral part of the design and implementation of the entire build and deployment process. This means learning new tools and exploring areas in which they may not have a great deal of experience. Human nature tends to resist change. Software engineers may look at this entire process and think, "How can I absorb this new process and these new tools while trying to maintain a schedule?" It's a valid question. However, software engineers are typically fine with incorporating a new development tool or process that helps them and the team without drastically disrupting their daily routine.

Whether beginning a new project or refactoring an existing one, adoption of a shift-left engineering process requires introducing new tools in a way that allows software engineers to remain productive while iteratively learning the new tooling. This starts with automating and documenting the build out of their new development environment — their local Kubernetes cluster. It also requires listening to the team's concerns and suggestions as this will be their daily environment.

Dev(elopment) Containers

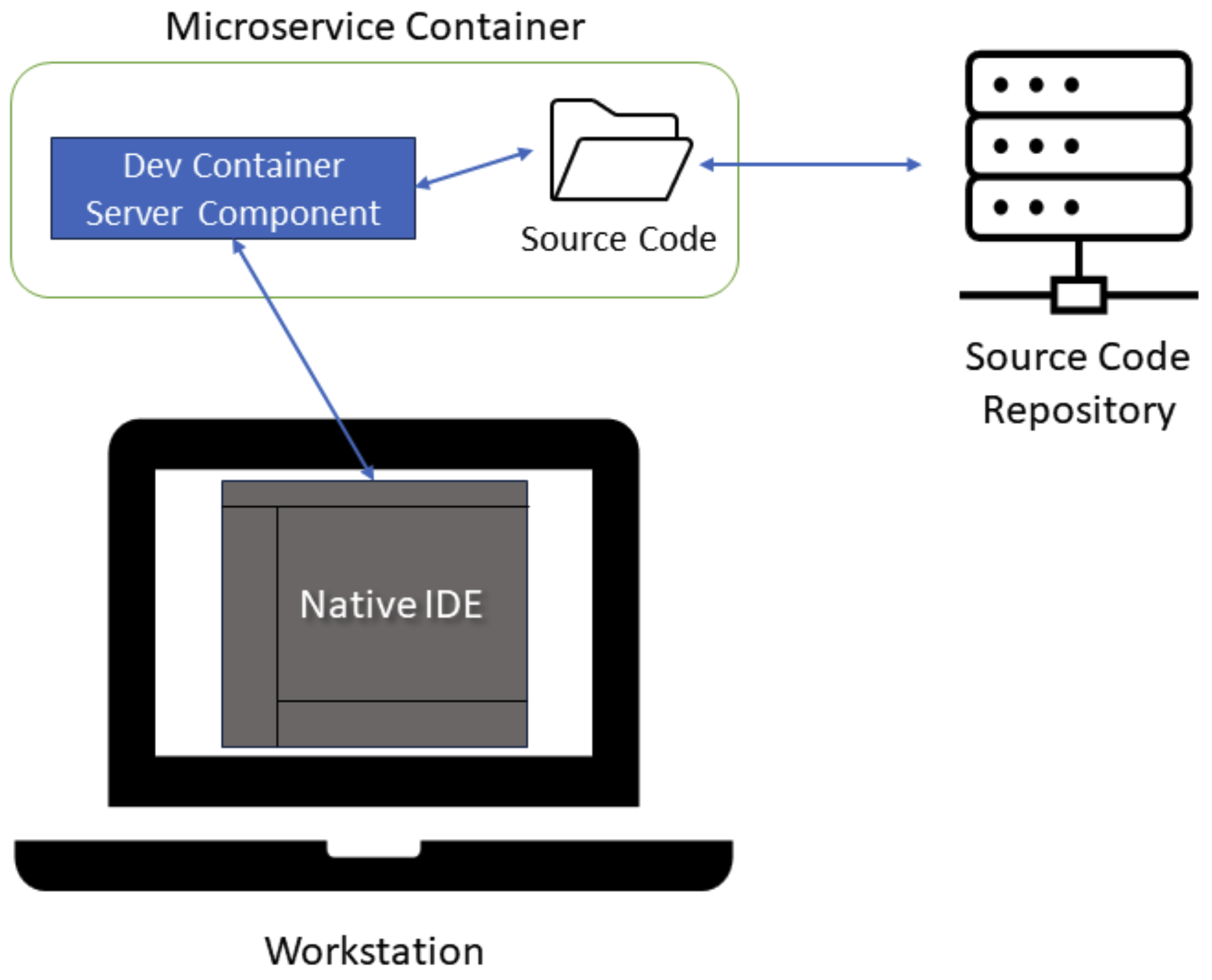

The Development Containers specification is a relatively new advancement based on an existing concept in supporting development environments. Many engineering teams have leveraged virtual desktop infrastructure (VDI) systems, where a developer's workstation is hosted on a virtualized infrastructure. Companies that implement VDI environments like the centralized control of environments, and software engineers like the idea of a pre-packaged environment that contains all the components required to develop, debug, and build an application.

What software engineers do not like about VDI environments is network issues that make their IDEs sluggish and frustrating to use. Development containers leverage the same concept as VDI environments but bring it to a local workstation: engineers use their locally installed IDE while connected to a running container, which preserves the experience of local development. Development containers do require an IDE that supports the pattern.

What makes the use of development containers so attractive is that engineers can attach to a container running within a Kubernetes cluster and access services as configured for an actual deployment. In addition, development containers support a first-class development experience, including all the tools a developer would expect to be available in a development environment. From a broader perspective, development containers aren't limited to local deployments. When configured for access, cloud environments can provide the same first-class development experience. Here, the deployment abstraction provided by container orchestration layers really shines.

Figure 2. Microservice development container configured with dev containers
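To make the development container idea concrete, the sketch below generates a basic `devcontainer.json` as part of automating an engineer's environment. It is an assumption-based example: the properties used (`name`, `image`, `forwardPorts`, `customizations`) come from the Development Containers specification, but the service name, image reference, and port are hypothetical, and how the container is attached to a Kubernetes cluster is left out.

```python
import json
from typing import Any, Dict, Iterable


def devcontainer_config(service: str, image: str, ports: Iterable[int]) -> Dict[str, Any]:
    """Build a minimal devcontainer.json structure for one microservice."""
    return {
        "name": f"{service}-dev",
        # Hypothetical image that bundles the service's toolchain.
        "image": image,
        # Ports made reachable from the engineer's workstation.
        "forwardPorts": list(ports),
        # Editor-specific settings live under "customizations".
        "customizations": {
            "vscode": {
                "extensions": ["ms-kubernetes-tools.vscode-kubernetes-tools"]
            }
        },
    }


if __name__ == "__main__":
    config = devcontainer_config(
        "orders", "registry.localhost:5000/orders-dev:latest", [8080]
    )
    # Typically written to .devcontainer/devcontainer.json in the repository.
    print(json.dumps(config, indent=2))
```

Generating the file from a script, rather than hand-editing it per service, lets the team roll out a consistent environment and adjust it in one place as concerns and suggestions come in.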

The Synergistic Evolution of Cloud-Native Development Continues

There's a synergy across shift-left, cloud-native, and microservices development. They present a pattern for application development that can be adopted by teams of any size. Tooling continues to evolve, making practical use of the technologies involved in cloud-native environments accessible to all involved in the application delivery process. It is a culture change that entails a change in mindset while learning new processes and technologies. It's important that teams aren't burdened with a collection of manual processes where they feel their productivity is being lost. Automation helps ease teams into the adoption of the pattern and technologies.

As with any other organizational change, upfront planning and preparation are important. Just as important is involving the teams in the plan. When individuals have a say in change, ownership and adoption become a natural outcome.

This is an excerpt from DZone's 2024 Trend Report, Cloud Native: Championing Cloud Development Across the SDLC.


