Migrating a NorthWind Database to a NoSQL Database

Learn the reasoning, the data collection, the schema definition, and the migration procedures involved in moving to a NoSQL database.

Join the DZone community and get the full member experience.

Join For FreeIt's now a fact that databases need to have the ability to scale-out to handle data that's produced and accessed at great velocity and in huge volumes. Traditional databases (RDBMS) were never meant to handle such high a flow of information. To keep up, you either incorporate a distributed cache like NCache to overcome the database bottlenecks, or replace the RDBMS with a NoSQL database. NosDB is one such NoSQL database and is made from the ground up in .NET. It easily handles peak loads, and scales out at runtime — and therefore increases throughput on an ‘as-needed’ basis.

It's clear at this point that considering a NoSQL solution will free you of RDBMS bottlenecks. But what's less clear is how to properly migrate from a relational database to NoSQL. NoSQL is just so easy to use that developers often dive in head-on without properly understanding and constructing data models. With the infinite flexibility and features that a NoSQL database provides, it is very easy to mess up your data during migration, which causes a lot of problems later on.

Planning will help to properly migrate your existing data from an RDBMS to a NoSQL database without hurting your existing business processes. To completely demonstrate it, we are going to use Microsoft's sample application, NorthWind, as an example. To begin, let’s understand some of the basic business operations of this fictitious company, ‘NorthWind Traders.’

Why understand business operations? Well, with a traditional RDBMS, you are locked into database-driven development. But with a NoSQL database, your development is always domain-driven, giving you complete control over how you want your data to be stored. Therefore, the easiest approach is to build the database schema around your business use case and your application.

The application we are using is open source and publicly hosted at CodePlex. The project also contains the SQL scripts to load data into your MSSQL database.

Business Model

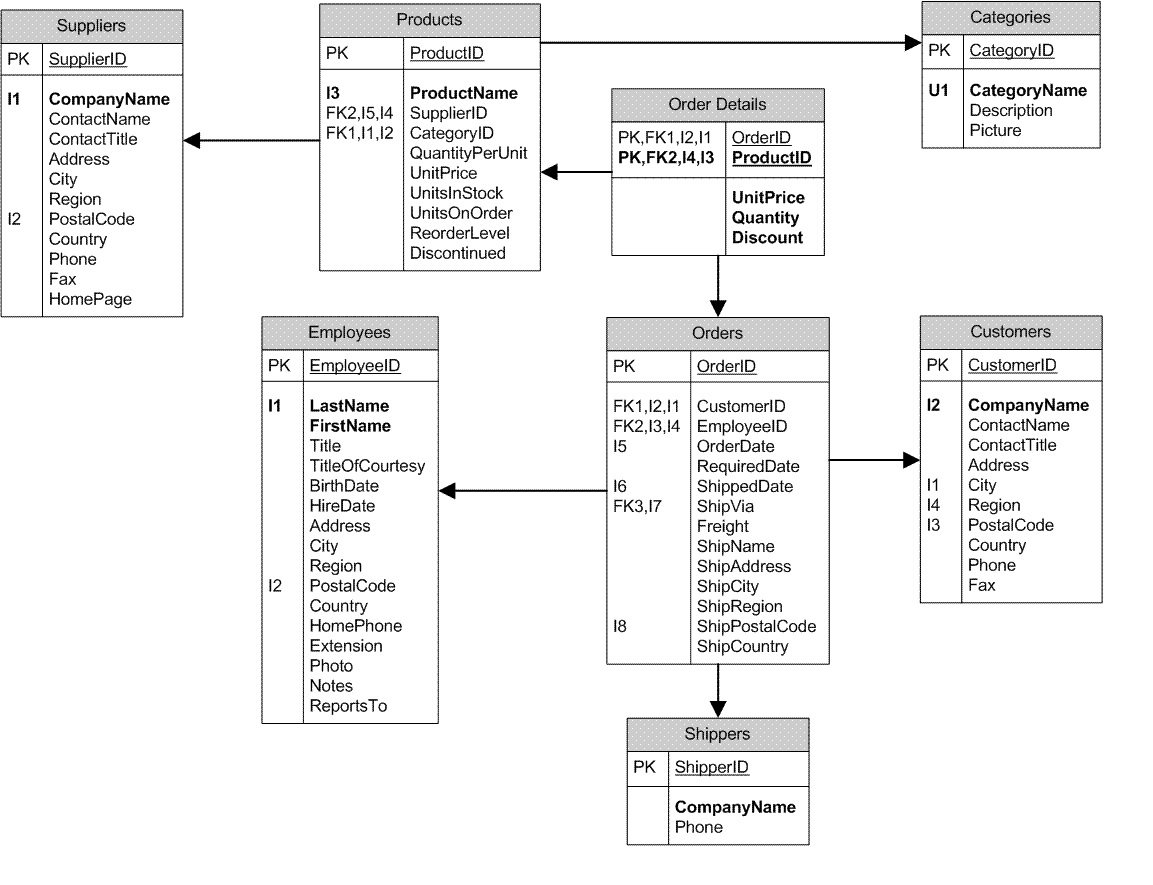

Following is the schema model of a NorthWind database in a Microsoft SQL Database.

If you look at the schema model, you'll see the following entities.

- Customers

- Employees

- Products

- Categories

- Orders

- OrderDetails

- Shippers

- Suppliers

Since NorthWind is a Trading company, let’s assume new Orders are created every day. The number of orders created could be in the tens of thousands, hourly, from all over the globe. With this assumption, we can further assume the Orders entity is write intensive. ‘Reads’, on the other hand, will not be as voluminous as ‘writes’.

Next, let's consider the entities of Customers, Employees, Products, Shippers, and Suppliers. These entities will be created, updated, or removed at a low frequency. The exception is the Productse entity. Products 'reads' will be high against every Order.

Building a Database Schema in a NoSQL database

With the business model clear, let’s construct a basic data model. We'll worry about the many-to-many and other relations between the entities at a later stage.

For starters, let us just convert the above schema into its respective JSONs. As an example, let's see how theProduct and Order JSON looks:

"Product" {

"_id": "pk",

"name": "string",

"quantityPerUnit": "string",

"unitPrice": "int",

"unitsInStock": "int",

"unitsOnorder": "int",

"reorderLevel": "int",

"discontinued": "boolean",

"supplier": "FORIEGN KEY",

"category": "FORIEGN KEY"

}

"Order" {

"_id": "pk",

"orderDate": "Date",

"requiredDate": "Date",

"shippedDate": "Date",

"freight": "string",

"shipName": "string",

"shipAddress": "string",

"shipCity": "string",

"shipRegion": "string",

"shipPostalCode": "string",

"shipcountry": "string",

"customerID": "FORIEGN KEY",

"employeeID": "FORIEGN KEY",

"shipperID": "FORIEGN KEY",

"orderDetails": "FORIEGN KEY"

}Similar conversions will be done for other entities. You'll see that we haven't yet covered Categories and OrderDetails, but now is the time to do so.

These objects do not mean anything by themselves unless referenced by another entity. The Categories object is used in Products whereas OrderDetails is used against every product in an Order. Therefore, the plan is that OrderDetail objects are always created along with a new Order and are also fetched in relation to an Order as well.

So to fetch an Ordera and its related OrderDetail objects in an RDBMS, you would usually perform a JOIN.

SELECT * FROM OrderDetials INNER JOIN Orders ON OrderDetail.OrderID = Order.IDBut since data is distributed in a NoSQL database, it is best for you to avoid JOINS. We’ll cover cases when you can't avoid it at the end of this article.

Anyhow, in this case, since OrderDetailsw won't be accessed independently and we also want to avoid JOINS, it is better to embed OrderDetails inside the Ordere entity. It will help us to fetch OrderDetails automatically whenever we fetch Orders. This will also help us avoid extra network calls when writing new Orders. The same is the case with Products. Storing Category inside Products will help improve Products entity ‘read’ speeds by a huge margin.

As you can see, keeping OrderDetails as an array inside the Orders collection is speedy since one Order can have multiple OrderDetails. However, considering that Categories exhibits a many-to-oner relation with Product, an array is not required. The Order and Product JSON will now be expressed as follows:

"Product" {

"_id": "pk",

"name": "string",

"quantityPerUnit": "string",

"unitPrice": "int",

"unitsInStock": "int",

"unitsOnorder": "int",

"reorderLevel": "int",

"discontinued": "boolean",

"supplier": "TO BE DECIDED",

"category": {

"name": "string",

"description": "string",

"picture": "byte[]"

}

}

"Order" {

"_id": "pk",

"orderDate": "Date",

"requiredDate": "Date",

"shippedDate": "Date",

"freight": "string",

"shipName": "string",

"shipAddress": "string",

"shipCity": "string",

"shipRegion": "string",

"shipPostalCode": "string",

"shipcountry": "string",

"customerID": "TO BE DECIDED",

"employeeID": "TO BE DECIDED",

"shipperID": "TO BE DECIDED",

"orderDetails": [

{

"productID": "pk",

"unitPrice": "int",

"quantity": "int",

"discount": "int"

},

{

"productID": "pk",

"unitPrice": "int",

"quantity": "int",

"discount": "int"

}

]

}We have covered creating a basic data model of our NorthWind database. The next step is understanding the relationships and options for further normalization or de-normalization of our individual entities.

Migrating Relations

The most crucial entities in terms of relationships in the NorthWind database, as seen from the schema model above, are Products, Orders , and OrderDetails. These entities exhibit one-to-one, one-to-many, and many-to-many relationships with other entities.

Let's start with what we already know. OrderDetail has a many-to-one relationship with Products — one OrderDetail can only be associated with a single product, but a Product can be associated with multiple OrderDetails.

So, should we embed the Product entity inside the Order or not? To answer, consider the use case of NorthWind Traders. We know that Orders will be created at high velocity every day, so we want to avoid storing duplicate data in it and also keep the object size small to save network bandwidth. Another reason to avoid duplication of our Product object is that if a single attribute of a Product changes, we will have to reflect that change in all the OrderDetails. And since the number of Orders will be huge over time, normalizing the Product entity is the best option.

The same applies with the one-to-many relation of Employees, Shippers, Customers with Orders, and Suppliers with Products. We'll keep them all as separate entities but store their respective IDs to maintain their associations.

Now let’s turn to the discussion of Categories. Since we have already embedded Categories inside Products, one issue remains. What if we want to fetch all the unique Categories? The answer is in SQL on NosDB.

SELECT DISTINCT product.Category.CategoryName FROM Products;

Although SQL meets our requirement, searching for unique Categories throughout all the products just to get a Categories list will be computationally expensive on your servers. The solution is to narrow the list to just 10 categories. The better optimization here is to purposefully limit duplication i.e. to create a Categories collection as well as embedding it inside the Product entity. The application will be responsible for creating an entry in this collection if a new Categories entity is created at any point. So finally, the Product JSON becomes:

"Product" {

"_id": "pk",

"name": "string",

"quantityPerUnit": "string",

"unitPrice": "int",

"unitsInStock": "int",

"unitsOnorder": "int",

"reorderLevel": "int",

"discontinued": "boolean",

"supplier": "PrimaryKey",

"category": {

"name": "string",

"description": "string",

"picture": "byte[]"

}

}

//Storing Category separately as well

"Category" {

"name": "string",

"description": "string",

"picture": "byte[]"

}But there is still room for more optimization. Let's take a look at the Categories object inside the Product. Do we need to store the Categories picture with every Product? Yes, you're right; we don't. As far as the Products document is concerned, we can use a hybrid data model i.e. we will partially normalize the data model. We will embed only those parts of Categories which are required and not the whole JSON object. Therefore, the Products object becomes:

"Product" {

"_id": "pk",

"name": "string",

"quantityPerUnit": "string",

"unitPrice": "int",

"unitsInStock": "int",

"unitsOnorder": "int",

"reorderLevel": "int",

"discontinued": "boolean",

"supplier": "PrimaryKey",

"category": {

"name": "string",

"description": "string",

}

}You can also opt to store the Categories pictures as Attachments, but that's a discussion left for another day.

Migrating Data to NosDB

With the schema completely defined, the next step is to write an ETL to transfer all the data from MSSQL to NosDB. This is easy, given the fact that the object model in your application should mirror what you want to store in NosDB. That data will automatically be converted to its respective JSON. For example, the Product object has Categories objects embedded as a whole, but the Supplier is only referenced. See the following implementation of the product model:

public class Product

{

[JsonProperty(PropertyName = "_key")]

public string ID { get; set; }

public string Name { get; set; }

public string QuantityPerUnit { get; set; }

public double UnitPrice { get; set; }

public short UnitsInStock { get; set; }

public short UnitsOnOrder { get; set; }

public short ReorderLevel { get; set; }

public bool Discontinued { get; set; }

//ID of corresponding Supplier Object

public string Supplier { get; set; }

//Embedded Category Object (One-to-One relation)

public Category Category { get; set; }

}Here, theID is used as the primary key for the database. The Supplier is stored as a reference just by keeping the IDs. The Categories object, on the other hand, is kept embedded within the Products object.

After we have the Products document created, we'll insert the object in the database and the .NET object will automatically be converted into a JSON object ready to be fetched from any language.

// Fetch a CommandText object for Product Table

// "SELECT * FROM [Product], [Supplier], [Category]

// WHERE ([Product].[SupplierID] = [Supplier].[ID])

// AND ([Product].[CategoryID] = [Category].[ID])

command.CommandText = TableQueryMap[Table.Product];

List<Product> allProducts = new List<Product>();

IDataReader reader = command.ExecuteReader();

while (reader.Read())

{

// Convert table information to Product Object

Product product = ReadingHelper.ReadProduct(reader);

// Convert table information to Category Object

product.Category = ReadingHelper.ReadCategory(reader);

// Add Product in a list for Bulk Add later on

allProducts.Add(product);

if (allProducts.Count == MaxBulkSize)

{

// Bulk Add Product objects into NosDB

products.InsertDocuments(allProducts);

allProducts.Clear();

}

}

// Insert remaining Products

if (allProducts.Count > 0)

{

products.InsertDocuments(allProducts);

}

reader.Close();For the complete ETL sample, download it from the following link: Download utility

Now that we have a complete understanding of our NorthWind database in NosDB and we have also migrated it, our next step is to convert our NorthWind application.

Migrating a NorthWind Sample Application

It's easier to go through this simple process if we divide it into basic steps:

- First download and extract the NorthWind application from the following location.

- Open Northwind.NET.VS2010.sln .

- This solution uses EntityFramework as an ORM to work with Microsoft SQL server. Since NoSQL development is domain driven you don't need an ORM framework to work with. You'll need to remove EF from the solution and simply work with .NET objects using the ADO.NET framework. Delete the EDMX file from the solution which holds the entity model.

After removing EF, we are going to access NosDB with something familiar; we are going to use the ADO.NET framework. First add NosDB references from NosDBInstallationFolder\bin\assembly and NosDBInstallationFolder\integrations\ADO.NET Data Provider\. Now open the app.config and change the EF binding to ADO.NET provider from NosDB as follows:

<DbProviderFactories>

<add invariant="Alachisoft.NosDB.ADO.NETProvider"

name="ADOProvider for .NET"

description="ADO.NET Provider for .NET"

type="Alachisoft.NosDB.ADO.NETProvider.NosProviderFactory,

Alachisoft.NosDB.ADO.NETProvider,

Version=1.0.0.0, Culture=neutral,

PublicKeyToken=9735cab1d68cdb50"/>

</DbProviderFactories>- Next step is to change the connection string from MSSQL database to NosDB. Once again, we'll be making the changes in the app.config.

<connectionStrings>

<add name="NosDbConnection"

connectionString="Data Source=20.200.20.48; Port=9950;

Database=database; Integrated Security=false; Local Instance=false;"

providerName="Alachisoft.NosDB.ADO.NETProvider"/>

</connectionStrings>- Next, we'll make a change in the code to reflect the changes made in the app.config. First, create a connection with the NosDB server:

//Fetch connection string from app.config

ConnectionStringSettings connectionString =

ConfigurationManager.ConnectionStrings["NosDbConnection"];

// Load the factory

DbProviderFactory Factory =

DbProviderFactories.GetFactory(connectionString.ProviderName);- Now change your Northwind.NET.Model objects to the one which reflects the NosDB collections.

- Next use SQL to work with the NoSQL database as you would with an RDBMS:

// Fetch a connection from Connection Pool

using (DbConnection connection = Factory.CreateConnection())

{

connection.ConnectionString = connectionString.ConnectionString;

connection.Open();

DbCommand command = Factory.CreateCommand();

command.Connection = connection;

command.CommandText = "SELECT ID, Name FROM Products";

IDataReader reader = command.ExecuteReader();

while(reader.Read())

{

// read it here

}

}That's it! Now your NorthWind application now has a scalable database to work with. It is this simple to convert your existing application to a NosDB database and still enjoy all the advantages provided by a NoSQL database.

The final step is to review how to mimic complex JOINS in a NoSQL database. There are two ways to do this. One is performing JOINs at the client end and the second is to write MapReduce code in .NET and perform JOINS at the server end. For our scenario, we'll be using JOINs at the client end.

For JOINs to work correctly you need all the related data in a single place. To make this happen we divide a JOIN query into multiple queries and execute them individually. For an example, we are going to consider a report generated of all orders from each employee.

In an RDBMS you would perform the query the following way:

SELECT Order.ID,Order.OrderDate,Order.ShippedDate,

Employee.Title,Employee.FirstName

FROM Order INNER JOIN Employee ON

Order.EmployeeID= Employee.IDTo perform the same query on a distributed dataset you'll have to break the query into two parts. First get all the employees, then merge them with all the orders. For the merging, you can query NosDB with the WHERE clause. See the following self-explanatory code.

if (database != null)

{

// ...

// execute employee query

string queryInner = "Select ID,Title,FirstName from employee";

ICollectionReader reader = employeeCollection.ExecuteReader(queryInner);

// Fetch all employee ID

while (reader.ReadNext())

{

JSONDocument employee = reader.GetObject<JSONDocument>();

employeeList.Add(employee.Get<string>("ID"), employee);

}

// execute order query

string queryOuter =

"Select ID,OrderDate,ShippedDate,EmployeeID FROM $order$" +

" WHERE EmployeeID IN (" + inParam + ")";

reader = orderCollection.ExecuteReader(queryOuter, queryParam);

// Find the INNER Criteria

while (reader.ReadNext())

{

string empID;

JSONDocument order = reader.GetObject<JSONDocument>();

order.TryGet<string>("EmployeeID", out empID);

if (!string.IsNullOrEmpty(empID))

{

if (employeeList.ContainsKey(empID))

{

JSONDocument emp = employeeList[empID] as JSONDocument;

JSONDocument res = new JSONDocument();

res.Add("orderID", order.Get<string>("ID"));

res.Add("Employee_Title", emp.Get<string>("Title"));

// Create resultant JSON

// ...

result.Add(res);

}

}

}

}The above example is well-suited if your data volume is low and your application is in the same network as your databases. If your case is different, then it is better to write your own custom MapReduce code to perform JOINs on the server side allowing you to skip the network cost of requesting all the data to the client.

The updated application can be downloaded from the following location: northwindnosql.codeplex.com

Summary

In this article, we gained hands-on experience of how to properly migrate a relational database to a NoSQL database. This includes:

How to develop a schema that fits your business use case

Knowing how to write .NET ETL code to fetch and put data in NosDB

How to easily convert your existing .NET application to become NoSQL compliant by using ADO.NET

And finally, we used SQL in a NoSQL database and learned how to do JOINs.

Published at DZone with permission of Basit Anwer. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments