Migration From One Testing Framework to Another

The article discusses two popular Python frameworks for end-to-end testing, their pros and cons, and explores the challenges of transitioning from one to another.

Some time ago (quite a long time, actually), I joined a startup that was developing a storage system. The system exposed several interfaces to end users: CLI, UI, and REST API. At first, there were only developers on the project. For the big (end-to-end) tests, they chose Robot Framework, a popular solution with very extensive documentation. It supports so-called 'keywords,' which let you write tests as human-readable sentences and then execute them in the way you want:

    *** Keywords ***
    Open Login Page
        Open Browser    http://host/login.html
        Title Should Be    Login Page

    Title Should Start With
        [Arguments]    ${expected}
        ${title} =    Get Title
        Should Start With    ${title}    ${expected}

The actual code is hidden inside libraries written in Python. The DSL itself, however, is a separate language with its own constructs, and you can do almost anything with it (although doing so is very, very tricky). For instance, here is the syntax for a WHILE loop:

*** Test Cases ***

Example

${rc} = Set Variable 1

WHILE ${rc} != 0

${rc} = Keyword that returns zero on success

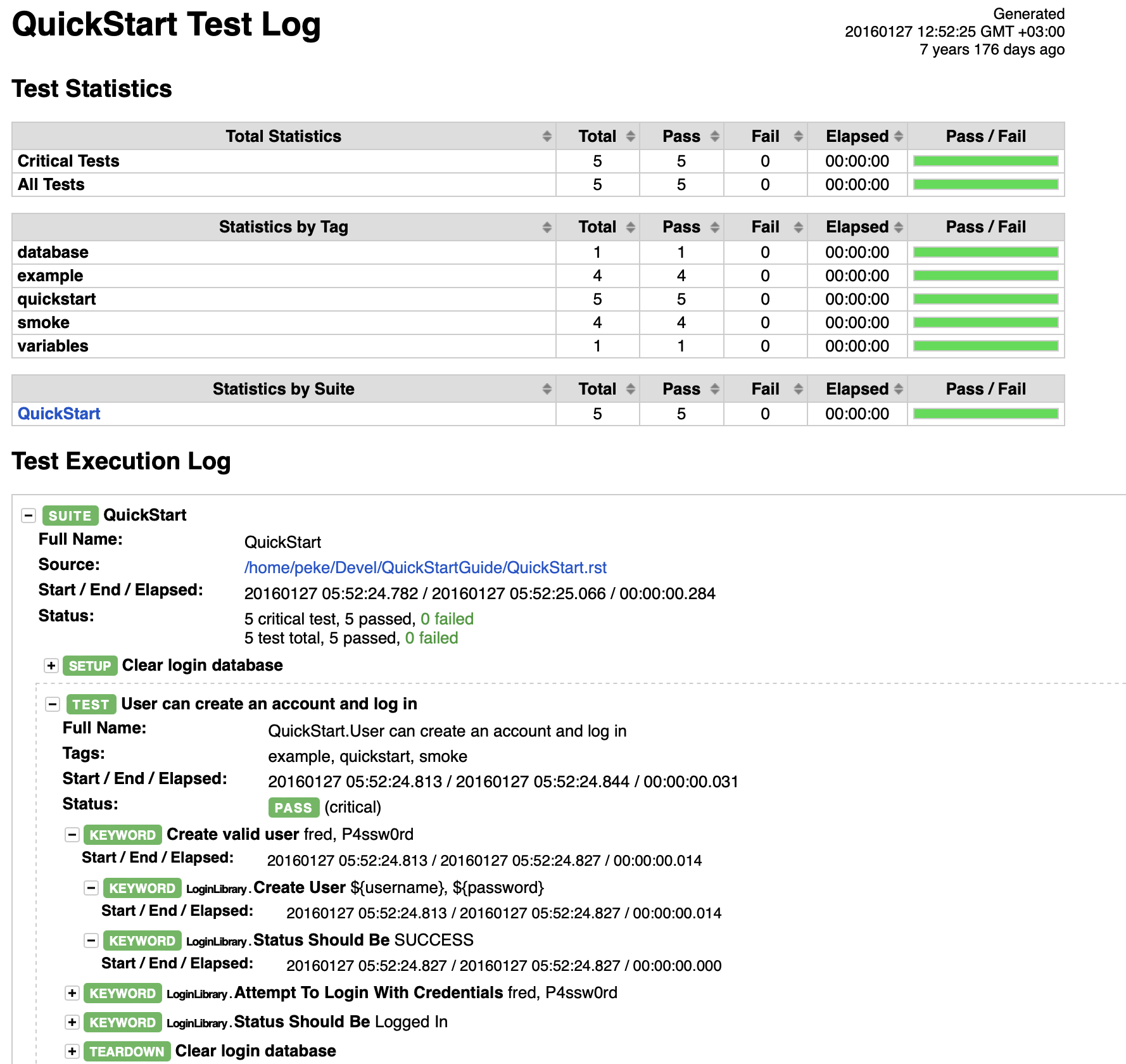

ENDDevelopers wanted to have the same tests for all interfaces (CLI, UI, REST API), and Robot Framework sounded like a top solution. In just a month, the first 200 tests were created. The speed of writing new tests was good, and developers were happy. Reports after tests were generated in an HTML format and were very detailed, already out of the box, with no need to adjust anything:

Besides, there are a lot of ready-to-use libraries for almost any task that comes up during testing.

First Steps With Robot

The product started evolving, and new people arrived. Finally, a new QA department was organized, which was the moment I joined the company. I had a background in Java programming and had previously used JUnit for writing end-to-end tests. At that point, there were around 600 tests written with Robot Framework.

My first task was to deal with failing tests in our CI. There were 15 flippers (flaky tests that pass on one run and fail on the next), and there were effectively no green runs in the CI. As a result, developers committed their code to a red CI without paying attention to failed tests, even those related to their own changes; they simply skipped the failed job because it was always red.

The first difficulty I ran into was debugging keywords. We were not able to run tests locally because of infrastructure limitations, so we had to run Robot tests remotely on virtual machines in our internal cloud. Although I knew how to deal with remote Python processes, there was no clear way to debug keywords there. After struggling for a week, I managed to start a debug session, but the whole process was much less convenient than debugging ordinary Python code, and I started to think that simple, plain Python would be much easier to debug in this situation. (If you can run the tests locally, normal debugging is available.) Unfortunately, the week was spent for nothing: the tests passed when I ran them manually but failed inside the CI.

So, I started to look closely at the reports — there were a lot of details, and actually, that was the problem. There were many tests, keywords, and information, but almost all of it was useless; the actual problems were inside Python libraries behind the keywords, and the logging there was not sufficient. After a lot of effort, I managed to find the root causes and fix the tests, and everyone was happy, including me.

Troubles

After my first year at the company, we had a team of 10 testing engineers with different levels of coding experience; some had written code before, while others had not. The team consisted of senior and middle-level engineers plus a pair of juniors, and all of them were responsible for maintaining the solution written in Robot Framework. As a senior engineer, I found this quite challenging to manage. We had around 1,500 tests written with keywords, and those keywords were themselves built from other keywords. The Robot DSL was used extensively, and the team tried to program in this language, which was, of course, not Python. This led to constant googling for how to write constructs such as IF/ELSE/WHILE/FOR, each with its own quirks.

You couldn't simply assume that a piece of code would or would not work; you always needed to test it. Since Robot DSL doesn't support Object-Oriented Programming, there was a lot of duplicate code and very strange solutions. Despite our efforts to conduct code reviews and move code to Python libraries, we still ended up with different keywords that performed almost the same tasks but with slight modifications.

We had data-driven tests, which are a form of test parameterization. They look like this:

    *** Settings ***
    Test Template    Login with invalid credentials should fail

    *** Test Cases ***                USERNAME         PASSWORD
    Invalid User Name                 invalid          ${VALID PASSWORD}
    Invalid Password                  ${VALID USER}    invalid
    Invalid User Name and Password    invalid          invalid
    Empty User Name                   ${EMPTY}         ${VALID PASSWORD}
    Empty Password                    ${VALID USER}    ${EMPTY}
    Empty User Name and Password      ${EMPTY}         ${EMPTY}

    *** Keywords ***
    Login with invalid credentials should fail
        [Arguments]    ${username}    ${password}
        Log Many    ${username}    ${password}

Here we specify several test cases, each of which is passed to a single keyword. Additionally, we used Robot's prerunmodifier feature to modify test cases dynamically. In short, it is a hook that Robot calls before the actual test execution. It allowed us to, for example, create three tests out of one and add extra keywords to each of them:

    Simple cool test
        [Tags]    S1    S2    S3
        Log    Some cool test

After the prerunmodifier runs, there will be three tests:

    Simple cool test: S1
        [Tags]    S1    S2    S3
        Precondition for S1
        Log    Some cool test

    Simple cool test: S2
        [Tags]    S1    S2    S3
        Precondition for S2
        Log    Some cool test

    Simple cool test: S3
        [Tags]    S1    S2    S3
        Precondition for S3
        Log    Some cool test

We also started running into problems with IDE support. Although VS Code and PyCharm support Robot syntax, on large projects with numerous variables and keywords built from variables, they cannot accurately work out the connections and dependencies between them. Consequently, it was hard to understand what called what until we reviewed the report after an actual test run. We had to spend time on test runs just to debug syntax issues, which slowed the development of new tests considerably.

Looking back, I assume we had all the bad practices in our solution, and Robot syntax didn't restrict us from following that path. It became evident that we needed to refactor what we had created. Very soon, we realized that the Robot DSL was actually causing problems for us. The original idea of having the same tests for different interfaces (UI, CLI, REST) didn't work out. Tests for each interface had unique requirements — for UI, we wanted to check UI-specific elements and workflows, while for CLI, the focus was on CLI-related aspects. Although the general workflows were similar, the details were significantly different. Consequently, we ended up with separate tests for each interface, each using separate keywords that were essentially the same but with slight differences.

Migration, Part 1

From time to time, new features arrived, and some of them required updating a lot of keywords (for instance, when we started having three nodes in our system cluster instead of two). Without the support of OOP and programming patterns, it became a nightmare to manage. I vividly remember the month when we received a new feature for testing — remote replication. I spent the entire week trying to update the code, and at that moment, it dawned on me — this framework (and its keywords) was not suitable for our needs. Promptly, I decided to explore a different framework — Pytest. I had already invested some spare time investigating it and creating demo tests. Within a week, I developed a test suite written in pure Python to check the replication feature. It was merely a proof of concept, but it was enough for me to realize that we needed to switch from the Robot Framework, and the sooner, the better. Our current solution was too cumbersome to maintain and refactor. But then, I faced the challenge of convincing others to join me in this decision.

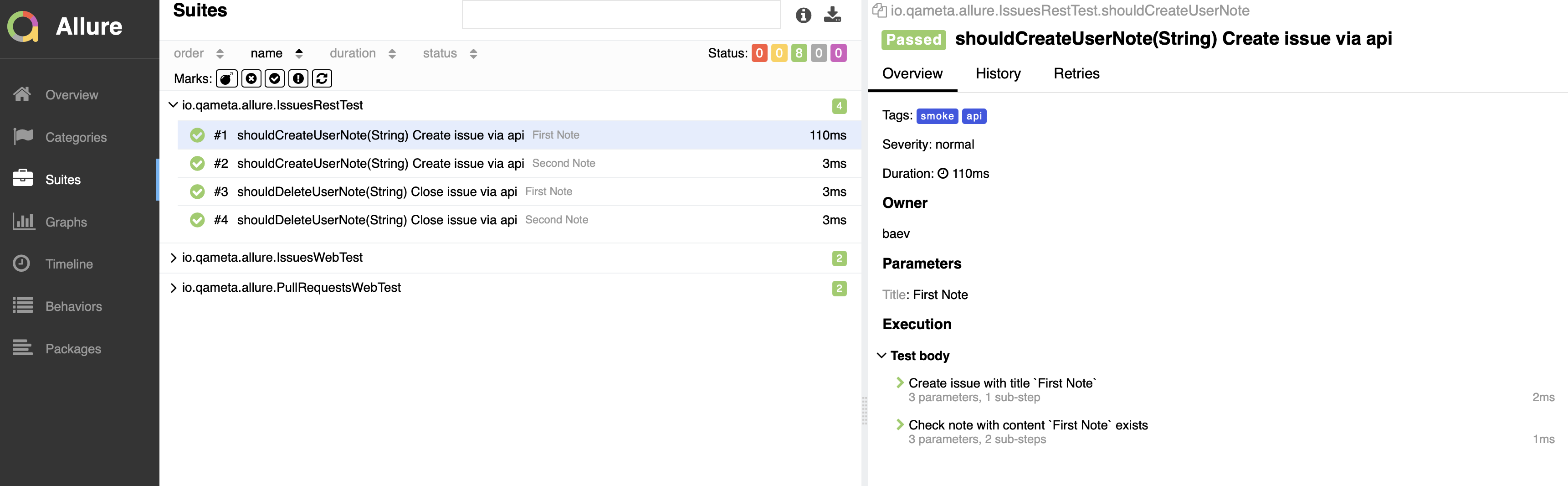

A few words about Pytest: it is a general-purpose and, nowadays, extremely popular framework that can be used for all kinds of testing, with everything written in pure Python. If needed, BDD style is supported through a BDD plugin. In fact, there are plugins for almost any real-world scenario. For reporting, the Allure plugin is a good choice; it lets you fully customize reports to your requirements.
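To make the "pure Python" point concrete, here is a rough sketch of how the data-driven login check shown earlier in Robot syntax might look in Pytest. It is an illustration only, not code from the project: the `login` function and the `VALID_USER` / `VALID_PASSWORD` values are hypothetical stand-ins for the real system under test.

```python
import pytest

# Hypothetical stand-ins for the system under test (assumptions, not project code).
VALID_USER = "demo"
VALID_PASSWORD = "mode"


def login(username: str, password: str) -> bool:
    """Pretend login call: succeeds only for the single valid credential pair."""
    return username == VALID_USER and password == VALID_PASSWORD


# One entry per row of the Robot data-driven table; the ids keep the readable names.
INVALID_CREDENTIALS = [
    pytest.param("invalid", VALID_PASSWORD, id="invalid-user-name"),
    pytest.param(VALID_USER, "invalid", id="invalid-password"),
    pytest.param("invalid", "invalid", id="invalid-user-name-and-password"),
    pytest.param("", VALID_PASSWORD, id="empty-user-name"),
    pytest.param(VALID_USER, "", id="empty-password"),
    pytest.param("", "", id="empty-user-name-and-password"),
]


@pytest.mark.parametrize("username, password", INVALID_CREDENTIALS)
def test_login_with_invalid_credentials_fails(username, password):
    # Pytest generates one test per entry and reports each under its id.
    assert not login(username, password)
```

Running `pytest -v` on a file containing this sketch should list six separate tests, one per credential pair, which mirrors what the Robot test template produced.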

Now, back to convincing other people. The first step was to persuade my own team, who had been using Robot Framework for two years and had become accustomed to it. They had learned to work around its drawbacks and were hesitant to change: it meant learning something new, and they had nothing but my word that it would benefit them. I began by including Pytest examples in code reviews of Robot pull requests and by showing the advantages of Pytest during lunch and short breaks. Tasks that were very difficult with keywords suddenly became straightforward in pure Python. Code duplication was addressed with OOP. Static analysis tools (flake8 and a Python style guide) helped prevent syntax and code style issues. Parametrization reduced the number of tests that needed to be written. In addition to the basic parametrization provided by Pytest, we were able to use property-based testing with the excellent Hypothesis library, which helped us increase overall coverage. Allure reports proved to be fully customizable. Over the course of a month and numerous one-on-one meetings, the team became at least ready to give Pytest a try. Having the whole team behind you is vital for success; I would not have achieved anything without their support.
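As an illustration of how OOP can absorb the near-duplicate keywords that existed for each interface, a minimal sketch might look like the following. All class and method names here (`StorageClient`, `CliClient`, `RestClient`, the volume operations, the command and URL shapes) are hypothetical and chosen only for the example; they are not the project's real code.

```python
from abc import ABC, abstractmethod
from typing import List


class StorageClient(ABC):
    """Workflow shared by every interface; subclasses supply only the transport."""

    @abstractmethod
    def _run(self, action: str, name: str) -> str:
        """Send one action through the concrete interface and return its raw reply."""

    def create_volume(self, name: str) -> str:
        return self._run("create", name)

    def delete_volume(self, name: str) -> str:
        return self._run("delete", name)

    def recreate_volume(self, name: str) -> List[str]:
        # The multi-step workflow is written once and reused by all interfaces.
        return [self.delete_volume(name), self.create_volume(name)]


class CliClient(StorageClient):
    def _run(self, action: str, name: str) -> str:
        # A real implementation would invoke the CLI; here we only build the command.
        return f"cli: volume {action} {name}"


class RestClient(StorageClient):
    def _run(self, action: str, name: str) -> str:
        # A real implementation would send an HTTP request; here we only describe it.
        method = {"create": "POST", "delete": "DELETE"}[action]
        return f"rest: {method} /volumes/{name}"
```

With a structure like this, a change such as a new workflow step would be made in one place in the base class instead of in several almost identical keywords, and interface-specific details stay in the subclasses.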

Migration, Part 2

The second step was to convince the developers. In short, I didn't manage to do it. Robot was their idea; they were convinced that we simply did not know how to use it and were complaining without reason. Although I presented the same arguments I had made to my own team, they insisted that it would be much easier to fix 300 flippers than to switch to a new framework.

Finally, I decided not to take any further action regarding the developers' opinions on testing frameworks. I believe that the responsibility for managing tests lies within the QA department, and it is up to us to choose the tools we use. Although the developers were accustomed to Robot HTML reports, I was confident that they would also appreciate Allure reports. If not, we would simply add to Allure everything they wanted.

Migration, The End

The last step was to talk to our managers, who were understandably against spending time rewriting all the tests in a new framework. With releases ongoing and time critical, they saw no immediate business value in such a rewrite. Nevertheless, I pointed back to the replication feature and proposed to create an automated test suite for it within three weeks using Pytest, compared with approximately two months using Robot Framework. It was quite a risky move, but my team backed me up. We got approval to write new tests in the new framework, on the condition that we continued supporting our existing Robot tests.

In those three weeks, I developed a simple CI setup for Pytest with the basic tests required for this feature. Allure reporting looked good, and when I showed it to the managers, they loved it too. As a result, we began supporting both frameworks simultaneously — new tests were written for Pytest, while old ones remained in Robot Framework. During any available free time, some of the team members from the QA department migrated the old tests to Pytest. Within six months, we had fully migrated to Pytest.

During the migration process, we got rid of all outdated elements, discovered and fixed some old bugs, and addressed issues with flippers. We introduced linters, code styles, and documentation. The usual Python debugger was available, making it easy to resolve any bugs quickly, and the IDE helped us write the code efficiently. Everyone was happy except for the developers. They were familiar with how to handle Robot Framework problems and fix issues, but with the new Pytest framework, they had to rely on us. However, this had its advantages, too, as they stopped skipping tests without any specific reason.

Conclusion

Moving from one framework to another is a difficult process with various stakeholders, each having their own interests. However, the decision should be made by those responsible for the field. If you are a manager and your colleagues constantly express concerns, try to empathize with their perspective and give them some time to address the issues. There is always an opportunity for refactoring, choosing a new framework, or adopting new technologies. Testing frameworks, methodologies, and strategies need to evolve with a product — things that worked previously might stop working over time. And this is a normal process. Being aware of this is crucial; otherwise, more serious problems can arise beyond engineers requesting time to create better solutions.

If you are starting a new project and want to use Python for end-to-end tests but are unsure about which framework to pick, I would recommend choosing Pytest. Robot Framework is good, but only if you have experience with it, know why you need keywords, and understand the benefits of its suggested structure for your specific needs. If you are unsure, pick Pytest and enjoy a smoother experience. Even if you don't have programming experience, programming in Python is much easier than programming in keywords. If you are already working on a legacy project and find Robot cumbersome to work with, that pain is not normal; it might be time to try something new.

I see only one reason to keep supporting a solution that brings a bad experience: the project is no longer under active development, has stabilized, and no new features are expected in the near future. Only in such cases might you choose to stick with what you have. However, always remember that testing, coding, and programming should be enjoyable; if they are not, it's essential to address the issues and make the necessary changes.
