Implementing Opinion Mining With Python

Learn about the process of opinion mining with Python through reviews of shopping sites like Amazon, Flipkart, and GSM Arena.

Join the DZone community and get the full member experience.

Join For FreeREVIEW COLLECTION

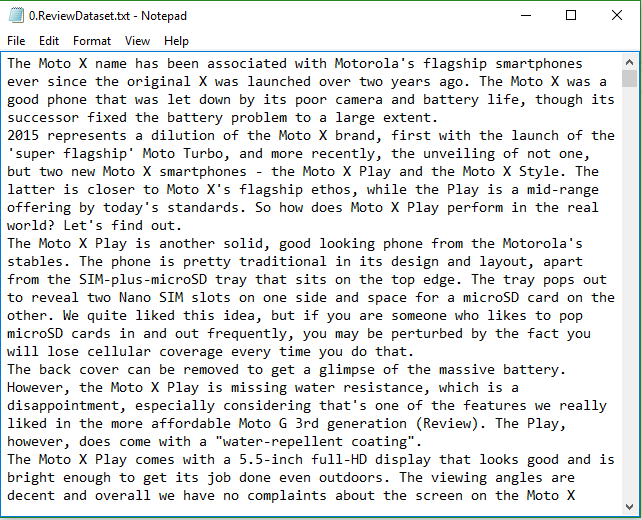

Input to the opinion mining process is the set of reviews. Following are the reviews of mobile “moto x play” downloaded from various shopping sites like Amazon, Flipkart, GSM Arena etc. The collected reviews are store in a database which are used for the Opinion Mining process.

FUNCTIONS

Following is the main function, i.e. entry point, to the Opinion Mining System (OMS) which calls the other subfunctions in “omsFunction package”.

File Name : oms.py

import omsFunctions

def printResultChoice():

userChoice = str(input('\nDo you want to print the result on output window? (Y/N) :'))

if(userChoice=='Y' or userChoice=='y'):

return True

else:

return False

#_FolderName='Data\\OppoF1\\'

_FolderName='Data\\MotoXPlay\\'

_ReviewDataset=_FolderName+'0.ReviewDataset.txt'

_PreProcessedData=_FolderName+'1.PreProcessedData.txt'

_TokenizedReviews=_FolderName+'2.TokenizedReviews.txt'

_PosTaggedReviews=_FolderName+'3.PosTaggedReviews.txt'

_Aspects=_FolderName+'4.Aspects.txt'

_Opinions=_FolderName+'5.Opinions.txt'

print("\nWELCOME TO OPINION MINING SYSTEM ")

print("-------------------------------------------------------------")

input("Please Enter any key to continue...")

print("\n\n\n\n\n\nPREPROCESSING DATA")

omsFunctions.preProcessing(_ReviewDataset,_PreProcessedData,printResultChoice())

print("\n\n\n\n\n\nREADING REVIEW COLLECTION...")

omsFunctions.tokenizeReviews(_ReviewDataset,_TokenizedReviews,printResultChoice())

print("\n\n\n\n\n\nPART OF SPEECH TAGGING...")

omsFunctions.posTagging(_TokenizedReviews,_PosTaggedReviews,printResultChoice())

print("\nThis function will list all the nouns as aspect")

omsFunctions.aspectExtraction(_PosTaggedReviews,_Aspects,printResultChoice())

print("\n\n\n\n\n\nIDENTIFYING OPINION WORDS...")

omsFunctions.identifyOpinionWords(_PosTaggedReviews,_Aspects,_Opinions,printResultChoice())PRE-PROCESSING

Pre-processing is done to improve accuracy of Data. It also avoids unnecessary data processing in each phase. It includes Remove Unnecessary Words (Like Oh!, OMG!, Smileys, hello guys, thanks, etc.) and Non Alphabetical characters.

File Name : omsFunctions.py

import nltk

import ast

import re

from nltk.tokenize import word_tokenize

from nltk.corpus import wordnet

from nltk.corpus import sentiwordnet

def preProcessing(inputFileStr,outputFileStr,printResult):

inputFile = open(inputFileStr,"r").read()

outputFile=open (outputFileStr,"w+")

cachedStopWords = nltk.corpus.stopwords.words("english")

cachedStopWords.append('OMG')

cachedStopWords.append(':-)')

result=(' '.join([word for word in inputFile.split() if word not in cachedStopWords]))

if(printResult):

print('Following are the Stop Words')

print(cachedStopWords)

print(str(result))

outputFile.write(str(result))

outputFile.close()The Function nltk.corpus.stopwords.words(“english”) returns some list of common stop words like:

['i', 'me', 'my', 'myself', 'we', 'our', 'ours', 'ourselves', 'you', 'your', 'yours', 'yourself', 'yourselves', 'he', 'him', 'his', 'himself', 'she', 'her', 'hers', 'herself', 'it', 'its', 'itself', 'they', 'them', 'their', 'theirs', 'themselves', 'what', 'which', 'who', 'whom', 'this', 'that', 'these', 'those', 'am', 'is', 'are', 'was', 'were', 'be', 'been', 'being', 'have', 'has', 'had', 'having', 'do', 'does', 'did', 'doing', 'a', 'an', 'the', 'and', 'but', 'if', 'or', 'because', 'as', 'until', 'while', 'of', 'at', 'by', 'for', 'with', 'about', 'against', 'between', 'into', 'through', 'during', 'before', 'after', 'above', 'below', 'to', 'from', 'up', 'down', 'in', 'out', 'on', 'off', 'over', 'under', 'again', 'further', 'then', 'once', 'here', 'there', 'when', 'where', 'why', 'how', 'all', 'any', 'both', 'each', 'few', 'more', 'most', 'other', 'some', 'such', 'no', 'nor', 'not', 'only', 'own', 'same', 'so', 'than', 'too', 'very', 's', 't', 'can', 'will', 'just', 'don', 'should', 'now', 'd', 'll', 'm', 'o', 're', 've', 'y', 'ain', 'aren', 'couldn', 'didn', 'doesn', 'hadn', 'hasn', 'haven', 'isn', 'ma', 'mightn', 'mustn', 'needn', 'shan', 'shouldn', 'wasn', 'weren', 'won', 'wouldn']

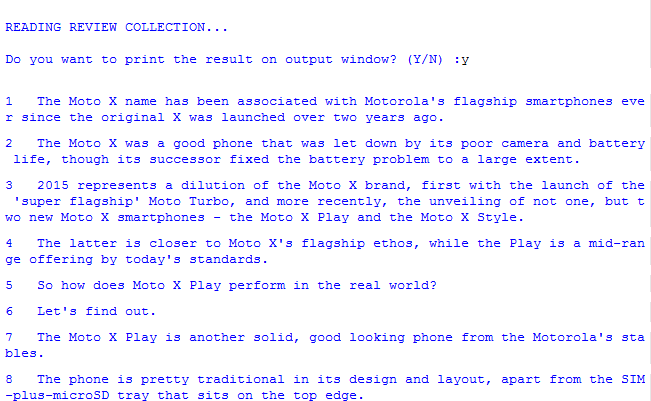

TOKENIZE THE REVIEWS

This function will read reviews from Text File. The Text Files consists of paragraphs. So the entire file will break into sentence. This sentence can individually use for mining.

File Name : omsFunctions.py (cont.)

def tokenizeReviews(inputFileStr,outputFileStr,printResult):

tokenizedReviews={}

inputFile = open(inputFileStr,"r").read()

outputFile=open (outputFileStr,"w")

tokenizer = nltk.tokenize.punkt.PunktSentenceTokenizer()

uniqueId=1;

cachedStopWords = nltk.corpus.stopwords.words("english")

for sentence in tokenizer.tokenize(inputFile):

tokenizedReviews[uniqueId]=sentence

uniqueId+=1

outputFile.write(str(tokenizedReviews))

if(printResult):

for key,value in tokenizedReviews.items():

print(key,' ',value)

outputFile.close()

Output

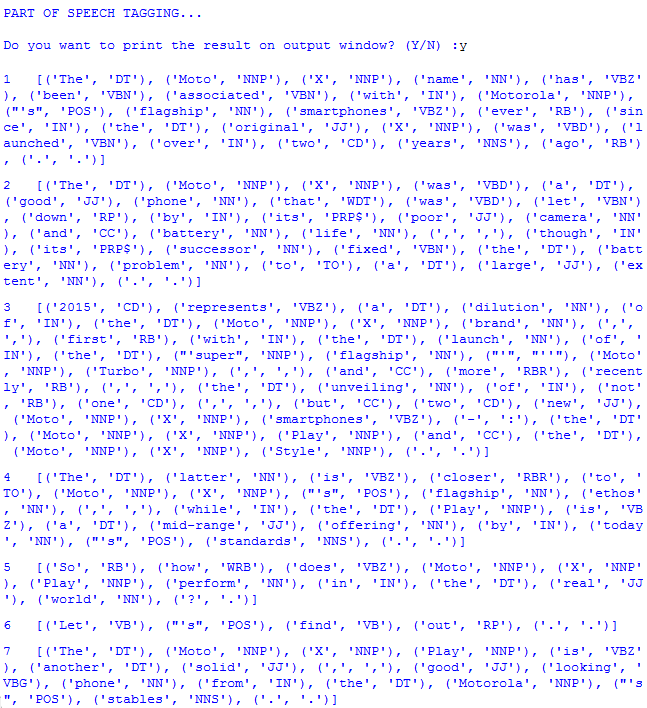

PART OF SPEECH TAGGING

Tagging is the process of assigning a part of speech marker to each word in an input text. Because tags are generally also applied to punctuation, tokenization is usually performed before, or as part of, the tagging process.

File Name : omsFunctions.py (cont.)

def posTagging(inputFileStr,outputFileStr,printResult):

inputFile = open(inputFileStr,"r").read()

outputFile=open (outputFileStr,"w")

inputTupples=ast.literal_eval(inputFile)

outputPost={}

for key,value in inputTupples.items():

outputPost[key]=nltk.pos_tag(nltk.word_tokenize(value))

if(printResult):

for key,value in outputPost.items():

print(key,' ',value)

outputFile.write(str(outputPost))

outputFile.close() Output

ASPECT EXTRACTION

Aspects are the important features rated by the reviewers. The aspects for a particular domain are identified through the training process. An aspect may be a single word or a phrase. In order to extract the aspect, searching of noun and noun phrases of the reviews are needed.

File Name : omsFunctions.py (cont.)

def aspectExtraction(inputFileStr,outputFileStr,printResult):

inputFile = open(inputFileStr,"r").read()

outputFile=open (outputFileStr,"w")

inputTupples=ast.literal_eval(inputFile)

prevWord=''

prevTag=''

currWord=''

aspectList=[]

outputDict={}

#Extracting Aspects

for key,value in inputTupples.items():

for word,tag in value:

if(tag=='NN' or tag=='NNP'):

if(prevTag=='NN' or prevTag=='NNP'):

currWord= prevWord + ' ' + word

else:

aspectList.append(prevWord.upper())

currWord= word

prevWord=currWord

prevTag=tag

#Eliminating aspect which has 1 or less count

for aspect in aspectList:

if(aspectList.count(aspect)>1):

if(outputDict.keys()!=aspect):

outputDict[aspect]=aspectList.count(aspect)

outputAspect=sorted(outputDict.items(), key=lambda x: x[1],reverse = True)

if(printResult):

print(outputAspect)

outputFile.write(str(outputAspect))

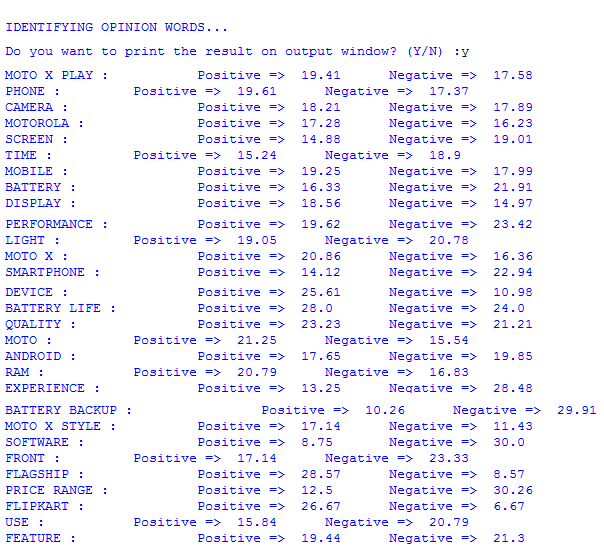

outputFile.close()IDENTIFYING OPINION WORDS AND THEIR ORIENTATION

Opinion words are the words which express opinion towards aspects. In the aspect-based opinion mining, the aspect related opinion words should be identified. The opinion words are mostly adjectives, verbs, adverb adjective, and adverb verb combinations. For each opinion word, we need to identify its semantic orientation, which will be used to predict the semantic orientation of each opinion sentence.

Negations should be handled appropriately to get the contextual information of a sentence. If the opinion word is in negative relation, then its priority score is reversed for negation handling purpose.

File Name : omsFunctions.py (cont.)

def identifyOpinionWords(inputReviewListStr, inputAspectListStr, outputAspectOpinionListStr,printResult):

inputReviewList = open(inputReviewListStr,"r").read()

inputAspectList = open(inputAspectListStr,"r").read()

outputAspectOpinionList=open (outputAspectOpinionListStr,"w")

inputReviewsTuples=ast.literal_eval(inputReviewList)

inputAspectTuples=ast.literal_eval(inputAspectList)

outputAspectOpinionTuples={}

orientationCache={}

negativeWordSet = {"don't","never", "nothing", "nowhere", "noone", "none", "not",

"hasn't","hadn't","can't","couldn't","shouldn't","won't",

"wouldn't","don't","doesn't","didn't","isn't","aren't","ain't"}

for aspect,no in inputAspectTuples:

aspectTokens= word_tokenize(aspect)

count=0

for key,value in inputReviewsTuples.items():

condition=True

isNegativeSen=False

for subWord in aspectTokens:

if(subWord in str(value).upper()):

condition = condition and True

else:

condition = condition and False

if(condition):

for negWord in negativeWordSet:

if(not isNegativeSen):#once senetence is negative no need to check this condition again and again

if negWord.upper() in str(value).upper():

isNegativeSen=isNegativeSen or True

outputAspectOpinionTuples.setdefault(aspect,[0,0,0])

for word,tag in value:

if(tag=='JJ' or tag=='JJR' or tag=='JJS'or tag== 'RB' or tag== 'RBR'or tag== 'RBS'):

count+=1

if(word not in orientationCache):

orien=orientation(word)

orientationCache[word]=orien

else:

orien=orientationCache[word]

if(isNegativeSen and orien is not None):

orien= not orien

if(orien==True):

outputAspectOpinionTuples[aspect][0]+=1

elif(orien==False):

outputAspectOpinionTuples[aspect][1]+=1

elif(orien is None):

outputAspectOpinionTuples[aspect][2]+=1

if(count>0):

#print(aspect,' ', outputAspectOpinionTuples[aspect][0], ' ',outputAspectOpinionTuples[aspect][1], ' ',outputAspectOpinionTuples[aspect][2])

outputAspectOpinionTuples[aspect][0]=round((outputAspectOpinionTuples[aspect][0]/count)*100,2)

outputAspectOpinionTuples[aspect][1]=round((outputAspectOpinionTuples[aspect][1]/count)*100,2)

outputAspectOpinionTuples[aspect][2]=round((outputAspectOpinionTuples[aspect][2]/count)*100,2)

print(aspect,':\t\tPositive => ', outputAspectOpinionTuples[aspect][0], '\tNegative => ',outputAspectOpinionTuples[aspect][1])

if(printResult):

print(outputAspectOpinionList)

outputAspectOpinionList.write(str(outputAspectOpinionTuples))

outputAspectOpinionList.close();

#-----------------------------------------------------------------------------------

def orientation(inputWord):

wordSynset=wordnet.synsets(inputWord)

if(len(wordSynset) != 0):

word=wordSynset[0].name()

orientation=sentiwordnet.senti_synset(word)

if(orientation.pos_score()>orientation.neg_score()):

return True

elif(orientation.pos_score()<orientation.neg_score()):

return False

Output

Opinions expressed by DZone contributors are their own.

Comments