A Tool to Ease Your Transition From Oracle PL/SQLs to Couchbase JavaScript UDFs

In this article, take a deep dive into an AI Translator tool used to automate the conversion of PL/SQL to JSUDF.

Join the DZone community and get the full member experience.

Join For FreeBackground

What Is PL/SQL?

PL/SQL is a procedural language designed specifically to embrace SQL statements within its syntax. It includes procedural language elements such as conditions and loops and can handle exceptions (run-time errors).

PL/SQL is native to Oracle databases, and databases like IBM DB2, PostgreSQL, and MySQL support PL/SQL constructs through compatibility features.

What Is a JavaScript UDF?

JavaScript UDF is Couchbase’s alternative to PL/SQL.

JavaScript UDF brings JavaScript's general-purpose scripting flexibility to databases, allowing for dynamic and powerful operations across modern database systems and enhancing flexibility in data querying, processing, and transformation.

Most modern databases like Couchbase, MongoDB, Snowflake, and Google BigQuery support Javascript UDF.

The Problem

A common problem seen by users migrating from Oracle to Couchbase is porting their PL/SQL scripts. Instead of supporting PL/SQL, Couchbase lets users construct user-defined functions in JavaScript (supported since 2021).

JavaScript UDFs allow easy, intuitive manipulation of variant and JSON data. Variant objects passed to a UDF are transformed into native JavaScript types and values.

The unintended consequence of this is that the majority of RDBMS that have been in existence for the last ten years have strongly encouraged developers to access the database using their procedural extensions to SQL (PL/pgSQL, PL/SQL), which support procedural constructs, integration with SQL, error handling, functions and procedures, triggers, and cursors, or at the very least, functions and procedures (like Sakila). For any attempt to move away from them, all of their scripts would need to be rewritten.

Rewriting code is often a tedious task, especially when dealing with PL/SQL scripts that have been written in the 2000s and maintained since then. These scripts can be complex, often extending to thousands of lines, which can be overwhelming for the average enterprise user.

Solution

The ideal approach would be to develop a whole new PL/SQL evaluator, but that would require an excessive amount of engineering hours, and for the same use case, we already have a modern, stable, and fast JsEvaluator — so why support another evaluator?

This makes the problem a perfect use case to leverage the ongoing advances in AI and LLMs — and that's what we have done here. We have used Generative AI models to automate the conversion of PL/SQL to JSUDF.

As of June 2024, models have a limited context window, which means longer PL/SQLs get hit with the error:

"This model's maximum context length is 8192 tokens. However, your messages resulted in <More-than-8192> tokens. Please reduce the length of the messages.”

- Note that this is for GPT4.

So do we wait for AI to become more powerful and allow more tokens (like Moore’s Law, but for the AI’s context-length-vs-precision)?

No: that’s where the ANTLR parser generator tool comes in. ANTLR is well-known to be used for Compiler and Interpreter Development. That way we can break the big script into smaller units that can be translated independently.

So now are we building a transpiler? Well, yes and no.

The stages in a transpiler are as follows:

- Lexical analysis (tokenization)

- Syntactic analysis (parsing)

- Semantic analysis

- Intermediate Representation (IR) generation

- Optimization (optional)

- Target code generation

How the AI Translator Works

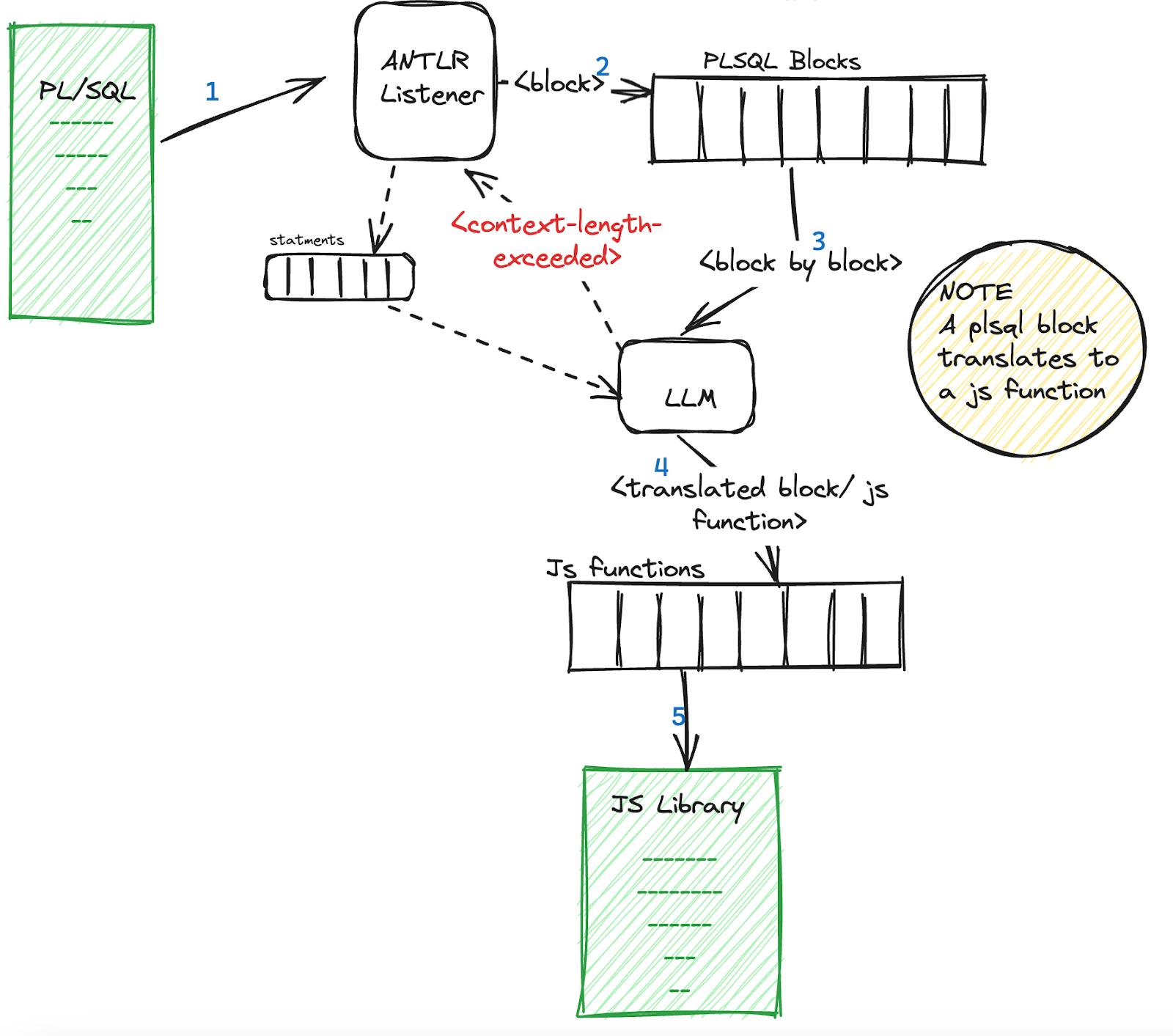

Steps 1 and 2 are done using ANTLR.

We use ANTLR’s Listener interface to grab individual Procedure/Function/Anonymous blocks, as they are independent blocks of code. In a case where the Procedure/Function/Anonymous blocks themselves exceed the context window, we translate at a statement level (where the LLM assumes the existence of use of variables/function calls that aren’t defined here but somewhere before).

Subsequently, steps 3, 4, 5, and 6 are left to the LLM (GPT), i.e., translating each PL/SQL block into a JavaScript function to the best of its ability that also preserves the operational semantics of the block and is syntactically accurate.

The results are surprisingly quite positive: the translation is 80-85% accurate.

Another benefit of the solution is that we reduce hallucination by focusing on one task at a time, resulting in more accurate translations.

To visualize:

How to Use the Tool

The executable expects the following command-line arguments:

-u: Capella sign-in email-p: Capella sign-in password-cpaddr: Capella-url for chat-completions API-orgid: Organization ID in the chat-completions API path-cbhost: node-ip:cbclusternode-cbuser: cluster-user-name:cbclusteruser, added through database access-cbpassword: cluster-password:cbclusterpassword, added through database access-cbport:query-serviceTLS port (usually 18093)filepath, i.e., path to the PL/SQL script that has to be translatedoutput->: In the output directory, a file with the same name as the plsql file is generated with translated JavaScript Library code.

For example, cat example1.sql:

DECLARE

x NUMBER := 0;

counter NUMBER := 0;

BEGIN

FOR i IN 1..4 LOOP

x := x + 1000;

counter := counter + 1;

INSERT INTO temp VALUES (x, counter, 'in OUTER loop');

--start an inner block

DECLARE

x NUMBER := 0; -- this is a local version of x

BEGIN

FOR i IN 1..4 LOOP

x := x + 1; -- this increments the local x

counter := counter + 1;

INSERT INTO temp VALUES (x, counter, 'inner loop');

END LOOP;

END;

END LOOP;

COMMIT;

END;

To briefly explain the above script, an outer loop runs for 4 iterations, incrementing x by 1000, counter by 1. The inner loop runs for 4 iterations, incrementing x by 1, counter by 1.

Running the translator on the test PL/SQL:

./plsql-to-jsudf -u «capella-signin-mailid» -p «capella-signin-password» -cpaddr https://api.cloud.couchbase.com -orgid «capella-organisation-id» -cbhost «hostname of data node» -cbuser «cbcluster username» -cbpassword «cbcluster password» -cbport 18093 ./translator/test/plsql/example1.sql

Output JsUDF: cat output/example1.js:

function nestedloop(){

var x = 0;

var counter = 0;

var querybegin = BEGIN WORK;

querybegin.close();

for (var i = 1; i <= 4; i++){

x = x + 1000;

counter = counter + 1;

var params = [x, counter];

var query = N1QL('INSERT INTO test.testscope.temp VALUES (uuid(),{"val1":$1,"val2":$2,"val3":"in OUTER loop"})',params);

query.close();

var x_inner = 0;

for (var j = 1; j <= 4; j++){

x_inner = x_inner + 1;

counter = counter + 1;

var params_inner = [x_inner, counter];

var query_inner = N1QL('INSERT INTO test.testscope.temp VALUES (uuid(),{"val1":$1,"val2":$2,"val3":"inner loop"})',params_inner);

query_inner.close();

}

}

var querycommit = COMMIT WORK;

querycommit.close();

}The translated script has a function nestedloop (name generated by LLM) that does exactly what the original Anonymous PL/SQL block specifies.

- Side note: For named functions/procedures, translated JS functions will have the same name. For anonymous blocks, the LLM uses a name it comes up with.

Known Issues

PL/SQL and JS are two different languages, and the way they are supported in Oracle and Couchbase doesn’t allow for a clean direct mapping between the two. Below are some limitations we discovered and the workarounds we have implemented for the same:

1. console.log Is Not Supported

DBMS_OUTPUT.PUT built-in procedure and two other similar built-ins, DBMS_OUTPUT.PUT_LINE and DBMS_OUTPUT.NEW_LINE are translated to console.log(), but console.log is a browser API and is not supported by Couchbase's JavaScript evaluation implementation. This has been a frequent ask, considering the Couchbase eventing function does support print() statements but not in JavaScript UDFs.

Workaround

Users are expected to create a logging bucket.

Logs are inserted as part of a document INSERT into the `default`.`default` collection. The document would look something like this:

{

"udf": «func-name»,

"log": «argument to console.log», // the actual log line

"time": «current ISO time string»

}The user can look at his logs by selecting logging:

SELECT * FROM logging WHERE udf= "«func-name»";

SELECT * FROM logging WHERE time BETWEEN "«date1»" AND "«date2»";Example:

- Original

BEGIN

DBMS.OUTPUT.PUT("Hello world!");

END;

/

- Translation

function helloWorld() {

// workaround for console.log("Hello world!");

var currentDate = new Date();

var utcISOString = currentDate.toISOString();

var params = [utcISOString,'anonymousblock1',"Hello world!"];

var logquery = N1QL('INSERT INTO logging VALUES(UUID(),{"udf":$2, "log":$3, "time":$1}, {"expiration": 5*24*60*60 })', params);

logquery.close();

}

This is already implemented in the tool.

To view the log:

EXECUTE FUNCTION helloWorld();

"results": [

null

]

CREATE PRIMARY INDEX ON logging;

"results": [

]

SELECT * FROM logging;

"results": [

{"logging":{"log":"Hello world!","time":"2024-06-26T09:20:56.000Z","udf":"anonymousblock1"}}

]2. Cross-Package Function Calls

Procedures/Functions listed in the package specification are global and can be used from other packages via «package_name».«public_procedure/function». However, the same is not true for a JavaScript Library in Couchbase, as import-export constructs are not supported by Couchbase's JavaScript evaluation implementation.

Workaround

- In case of an interlibrary function call

«lib_name».«function»(), the user is expected to have the referenced library«lib_name»already created; you can verify this viaGET /evaluator/v1/libraries. - The referenced function

«function»also is expected to be created as a global UDF; this can be verified viaGET /admin/functions_cacheor selectsystem:functionskeyspace. This way we can access the function vian1ql.

Example:

math_utils Package

CREATE OR REPLACE PACKAGE math_utils AS

-- Public function to add two numbers

FUNCTION add_numbers(p_num1 NUMBER, p_num2 NUMBER) RETURN NUMBER;

END math_utils;

/

CREATE OR REPLACE PACKAGE BODY math_utils AS

FUNCTION add_numbers(p_num1 NUMBER, p_num2 NUMBER) RETURN NUMBER IS

BEGIN

RETURN p_num1 + p_num2;

END add_numbers;

END math_utils;

/show_sumpackage

CREATE OR REPLACE PACKAGE show_sum AS

-- Public procedure to display the sum of two numbers

PROCEDURE display_sum(p_num1 NUMBER, p_num2 NUMBER);

END show_sum;

/

CREATE OR REPLACE PACKAGE BODY show_sum AS

PROCEDURE display_sum(p_num1 NUMBER, p_num2 NUMBER) IS

v_sum NUMBER;

BEGIN

-- Calling the add_numbers function from math_utils package

v_sum := math_utils.add_numbers(p_num1, p_num2);

-- Displaying the sum using DBMS_OUTPUT.PUT_LINE

DBMS_OUTPUT.PUT_LINE('The sum of ' || p_num1 || ' and ' || p_num2 || ' is ' || v_sum);

END display_sum;

END show_sum;

/

- Translated code:

function show_sum(a, b) {

var sum_result;

// Workaround for cross library function call math_utils.add_numbers(a, b)

var crossfunc = N1QL("EXECUTE FUNCTION add_numbers($1,$2)",[a, b])

var crossfuncres = []

for(const doc of crossfunc) {

crossfuncres.push(doc);

}

// actual replacement for math_utils.add_numbers(a, b)

sum_result = crossfuncres[0];

// workaround for console.log('The sum of ' + a + ' and ' + b + ' is: ' + sum_result);

var currentDate = new Date();

var utcISOString = currentDate.toISOString();

var params = [utcISOString,'SHOW_SUM','The sum of ' + a + ' and ' + b + ' is: ' + sum_result];

var logquery = N1QL('INSERT INTO logging VALUES(UUID(),{"udf":$2, "log":$3, "time":$1}, {"expiration": 5*24*60*60 })', params);

logquery.close();

}It is auto-handled by the program — with a warning that it should be verified by a human set of eyes!

3. Global Variables

PL/SQL supports package level and session level global variables, but global variables are not supported in JsUDF deliberately by design, as this causes concern for memory leaks.

Workaround

The suggested workaround requires manual tweaking of the generated translation. For example:

CREATE OR REPLACE PACKAGE global_vars_pkg AS

-- Global variable declarations

g_counter NUMBER := 0;

g_message VARCHAR2(100) := 'Initial Message';

-- Public procedure declarations

PROCEDURE increment_counter;

PROCEDURE set_message(p_message VARCHAR2);

PROCEDURE show_globals;

END global_vars_pkg;

/

CREATE OR REPLACE PACKAGE BODY global_vars_pkg AS

-- Procedure to increment the counter

PROCEDURE increment_counter IS

BEGIN

g_counter := g_counter + 1;

END increment_counter;

-- Procedure to set the global message

PROCEDURE set_message(p_message VARCHAR2) IS

BEGIN

g_message := p_message;

END set_message;

-- Procedure to display the current values of global variables

PROCEDURE show_globals IS

BEGIN

DBMS_OUTPUT.PUT_LINE('g_counter = ' || g_counter);

DBMS_OUTPUT.PUT_LINE('g_message = ' || g_message);

END show_globals;

END global_vars_pkg;

/Any function that modifies a global variable must accept it as an argument and return it to the caller.

increment_counter:

function increment_counter(counter){

counter = counter + 1;

return counter

}Any function that only reads a global can accept it as an argument.

show_globals:

function show_globals(counter, message){

// workaround for console.log(counter);

var currentDate = new Date();

var utcISOString = currentDate.toISOString();

var params = [utcISOString,'SHOW_GLOBALS',couter];

var logquery = N1QL('INSERT INTO logging VALUES(UUID(),{"udf":$2, "log":$3, "time":$1}, {"expiration": 5*24*60*60 })', params);

logquery.close();

// workaround for console.log(message);

var currentDate = new Date();

var utcISOString = currentDate.toISOString();

var params = [utcISOString,'SHOW_GLOBALS',message];

var logquery = N1QL('INSERT INTO logging VALUES(UUID(),{"udf":$2, "log":$3, "time":$1}, {"expiration": 5*24*60*60 })', params);

logquery.close();

}

Package to Library

This section shows an end-to-end package-to-library conversion using the tool.

- Sample PL/SQL package:

CREATE OR REPLACE PACKAGE emp_pkg IS

PROCEDURE insert_employee(

p_emp_id IN employees.emp_id%TYPE,

p_first_name IN employees.first_name%TYPE,

p_last_name IN employees.last_name%TYPE,

p_salary IN employees.salary%TYPE

);

PROCEDURE update_employee(

p_emp_id IN employees.emp_id%TYPE,

p_first_name IN employees.first_name%TYPE,

p_last_name IN employees.last_name%TYPE,

p_salary IN employees.salary%TYPE

);

PROCEDURE delete_employee(

p_emp_id IN employees.emp_id%TYPE

);

PROCEDURE get_employee(

p_emp_id IN employees.emp_id%TYPE,

p_first_name OUT employees.first_name%TYPE,

p_last_name OUT employees.last_name%TYPE,

p_salary OUT employees.salary%TYPE

);

END emp_pkg;

/

CREATE OR REPLACE PACKAGE BODY emp_pkg IS

PROCEDURE insert_employee(

p_emp_id IN employees.emp_id%TYPE,

p_first_name IN employees.first_name%TYPE,

p_last_name IN employees.last_name%TYPE,

p_salary IN employees.salary%TYPE

) IS

BEGIN

INSERT INTO employees (emp_id, first_name, last_name, salary)

VALUES (p_emp_id, p_first_name, p_last_name, p_salary);

END insert_employee;

PROCEDURE update_employee(

p_emp_id IN employees.emp_id%TYPE,

p_first_name IN employees.first_name%TYPE,

p_last_name IN employees.last_name%TYPE,

p_salary IN employees.salary%TYPE

) IS

BEGIN

UPDATE employees

SET first_name = p_first_name,

last_name = p_last_name,

salary = p_salary

WHERE emp_id = p_emp_id;

END update_employee;

PROCEDURE delete_employee(

p_emp_id IN employees.emp_id%TYPE

) IS

BEGIN

DELETE FROM employees

WHERE emp_id = p_emp_id;

END delete_employee;

PROCEDURE get_employee(

p_emp_id IN employees.emp_id%TYPE,

p_first_name OUT employees.first_name%TYPE,

p_last_name OUT employees.last_name%TYPE,

p_salary OUT employees.salary%TYPE

) IS

BEGIN

SELECT first_name, last_name, salary

INTO p_first_name, p_last_name, p_salary

FROM employees

WHERE emp_id = p_emp_id;

END get_employee;

END emp_pkg;

/

- Translation:

./plsql-to-jsudf -u «capella-signin-mailid» -p «capella-signin-password» -cpaddr https://api.cloud.couchbase.com -orgid «capella-organisation-id» -cbhost «hostname of data node» -cbuser «cbcluster username» -cbpassword «cbcluster password» -cbport 18093 translator/test/plsql/blog_test.sql- Code:

function insert_employee(p_emp_id, p_first_name, p_last_name, p_salary){

var params = [p_emp_id, p_first_name, p_last_name, p_salary];

var query = N1QL('INSERT INTO test.testscope.employees VALUES ($1, {"emp_id":$1, "first_name":$2, "last_name":$3, "salary":$4})', params);

query.close();

}

function update_employee(p_emp_id, p_first_name, p_last_name, p_salary){

var params = [p_first_name, p_last_name, p_salary, p_emp_id];

var query = N1QL('UPDATE test.testscope.employees SET first_name = $1, last_name = $2, salary = $3 WHERE emp_id = $4', params);

query.close();

}

function delete_employee(p_emp_id){

var querybegin=BEGIN WORK;

var params = [p_emp_id];

var query= N1QL('DELETE FROM test.testscope.employees WHERE emp_id = $1',params);

query.close();

var querycommit=COMMIT WORK;

querycommit.close();

}

function get_employee(p_emp_id){

var query = N1QL('SELECT first_name, last_name, salary FROM test.testscope.employees WHERE emp_id = $1', [p_emp_id]);

var rs = [];

for (const row of query) {

rs.push(row);

}

query.close();

var p_first_name = rs[0]['first_name'];

var p_last_name = rs[0]['last_name'];

var p_salary = rs[0]['salary'];

return {first_name: p_first_name, last_name: p_last_name, salary: p_salary};

}

Let’s insert a new employee document.

- Create employee collection:

curl -u Administrator:password http://127.0.0.1:8091/pools/default/buckets/test/scopes/testscope/collections -d name=employees Insert an Employee

curl -u Administrator:password https://127.0.0.1:18093/query/service -d 'statement=EXECUTE FUNCTION insert_employee(1, "joe", "briggs", 10000)' -k

{

"requestID": "2c0854c1-d221-42e9-af47-b6aa0801a46c",

"signature": null,

"results": [

],

"errors": [{"code":10109,"msg":"Error executing function 'insert_employee' (blog_test:insert_employee)","reason":{"details":{"Code":" var query = N1QL('INSERT INTO test.testscope.employees VALUES ($1, {\"emp_id\":$1, \"first_name\":$2, \"last_name\":$3, \"salary\":$4})', params);","Exception":{"_level":"exception","caller":"insert_send:207","code":5070,"key":"execution.insert_key_type_error","message":"Cannot INSERT non-string key 1 of type value.intValue."},"Location":"functions/blog_test.js:5","Stack":" at insert_employee (functions/blog_test.js:5:17)"},"type":"Exceptions from JS code"}}],

"status": "fatal",

"metrics": {"elapsedTime": "104.172666ms","executionTime": "104.040291ms","resultCount": 0,"resultSize": 0,"serviceLoad": 2,"errorCount": 1}

}

This errors out, and that’s ok — we can fix it manually.

Reading the reason and exception: Cannot INSERT non-string key 1 of type value.intValue, ah! The key is always expected to be a string: passing insert_employee("1", "joe", "briggs", 10000) would do the trick, but it is unintuitive to expect employee_id to be a string.

Let’s alter the generated code:

function insert_employee(p_emp_id, p_first_name, p_last_name, p_salary){

var params = [p_emp_id.toString(), p_emp_id, p_first_name, p_last_name, p_salary];

var query = N1QL('INSERT INTO test.testscope.employees VALUES ($1, {"emp_id":$2, "first_name":$3, "last_name":$4, "salary":$5})', params);

query.close();

}And recreate the UDF:

curl -u Administrator:password https://127.0.0.1:18093/query/service -d 'statement=CREATE OR REPLACE FUNCTION insert_employee(p_emp_id, p_first_name, p_last_name, p_salary) LANGUAGE JAVASCRIPT AS "insert_employee" AT "blog_test"' -k

{

"requestID": "89df65ac-2026-4f42-8839-b1ce7f0ea2be",

"signature": null,

"results": [

],

"status": "success",

"metrics": {"elapsedTime": "27.730875ms","executionTime": "27.620083ms","resultCount": 0,"resultSize": 0,"serviceLoad": 2}

}

Trying to insert it again:

curl -u Administrator:password https://127.0.0.1:18093/query/service -d 'statement=EXECUTE FUNCTION insert_employee(1, "joe", "briggs", 10000)' -k

{

"requestID": "41fb76bf-a87f-4472-b8ba-1949789ae74b",

"signature": null,

"results": [

null

],

"status": "success",

"metrics": {"elapsedTime": "62.431667ms","executionTime": "62.311583ms","resultCount": 1,"resultSize": 4,"serviceLoad": 2}

}

Update an Employee

Shoot! There’s a goof-up: employee 1 isn’t Joe, it’s Emily.

Let’s update employee 1:

curl -u Administrator:password https://127.0.0.1:18093/query/service -d 'statement=EXECUTE FUNCTION update_employee(1, "Emily", "Alvarez", 10000)' -k

{

"requestID": "92a0ca70-6d0d-4eb1-bf8d-0b4294ae987d",

"signature": null,

"results": [

null

],

"status": "success",

"metrics": {"elapsedTime": "100.967708ms","executionTime": "100.225333ms","resultCount": 1,"resultSize": 4,"serviceLoad": 2}

}View the Employee

curl -u Administrator:password https://127.0.0.1:18093/query/service -d 'statement=EXECUTE FUNCTION get_employee(1)' -k

{

"requestID": "8f180e27-0028-4653-92e0-606c80d5dabb",

"signature": null,

"results": [

{"first_name":"Emily","last_name":"Alvarez","salary":10000}

],

"status": "success",

"metrics": {"elapsedTime": "101.995584ms","executionTime": "101.879ms","resultCount": 1,"resultSize": 59,"serviceLoad": 2}

}

Delete the Employee

Emily left.

curl -u Administrator:password https://127.0.0.1:18093/query/service -d 'statement=EXECUTE FUNCTION delete_employee(1)' -k

{

"requestID": "18539991-3d97-40e2-bde3-6959200791b1",

"signature": null,

"results": [

],

"errors": [{"code":10109,"msg":"Error executing function 'delete_employee' (blog_test:delete_employee)","reason":{"details":{"Code":" var querycommit=N1QL('COMMIT WORK;', {}, false); ","Exception":{"_level":"exception","caller":"txcouchbase:240","cause":{"cause":{"bucket":"test","collection":"_default","document_key":"_txn:atr-988-#1b0","error_description":"Durability requirements are impossible to achieve","error_name":"DurabilityImpossible","last_connection_id":"eda95f8c35df6746/d275e8398a49e515","last_dispatched_from":"127.0.0.1:50069","last_dispatched_to":"127.0.0.1:11210","msg":"durability impossible","opaque":7,"scope":"_default","status_code":161},"raise":"failed","retry":false,"rollback":false},"code":17007,"key":"transaction.statement.commit","message":"Commit Transaction statement error"},"Location":"functions/blog_test.js:29","Stack":" at delete_employee (functions/blog_test.js:29:21)"},"type":"Exceptions from JS code"}}],

"status": "fatal",

"metrics": {"elapsedTime": "129.02975ms","executionTime": "128.724ms","resultCount": 0,"resultSize": 0,"serviceLoad": 2,"errorCount": 1}

}Again, we have an error with the generated code. Looking at the reason and exception, we can confirm that the translated code encloses delete in a transaction, which wasn’t the case in the original.

For transactions, buckets need to have durability set, but this requires more than one data server; hence, the error.

The fix here is to alter the code to remove the enclosing translation.

function delete_employee(p_emp_id){

var params = [p_emp_id];

var query= N1QL('DELETE FROM test.testscope.employees WHERE emp_id = $1',params);

query.close();

}curl -u Administrator:password https://127.0.0.1:18093/query/service -d 'statement=CREATE OR REPLACE FUNCTION delete_employee(p_emp_id) LANGUAGE JAVASCRIPT AS "delete_employee" AT "blog_test"' -k

{

"requestID": "e7432b82-1af8-4dc4-ad94-c34acea59334",

"signature": null,

"results": [

],

"status": "success",

"metrics": {"elapsedTime": "31.129459ms","executionTime": "31.022ms","resultCount": 0,"resultSize": 0,"serviceLoad": 2}

}curl -u Administrator:password https://127.0.0.1:18093/query/service -d 'statement=EXECUTE FUNCTION delete_employee(1)' -k

{

"requestID": "d440913f-58ff-4815-b671-1a72b75bb7eb",

"signature": null,

"results": [

null

],

"status": "success",

"metrics": {"elapsedTime": "33.8885ms","executionTime": "33.819042ms","resultCount": 1,"resultSize": 4,"serviceLoad": 2}

}

Now, all functions in the original PL/SQL work in Couchbase via JS UDFs. Yes, the example is pretty trivial, but you get the gist of how to go about using the tool to migrate your PL/SQL scripts with little manual supervision.

Remember the tool is supposed to take you 80%: the other 20% still needs to be done by you, but much better than writing all of that code yourself!

The Future

This project is open-source, so feel free to contribute. Some ideas that were thrown at us included:

- Critic AI that can criticize generated code to ensure manual intervention is not needed at all

- Currently, the source code is code that just works; no thoughts for parallelism or code reuse were put to use.

And also include the limitations discussed earlier.

Finally, I’d like to thank Kamini Jagtiani for guiding me and Pierre Regazzoni for helping me test the conversion tool.

Opinions expressed by DZone contributors are their own.

Comments