The Exponential Cost of Fixing Bugs

We discuss why the best time to detect and fix bugs is before you push code, and how to set up your process so that late fixes cost you less time and energy.


The cost of detecting and fixing defects in software increases exponentially as the software moves through the development workflow. Fixing bugs in the field is incredibly costly and risky, often costing an order of magnitude or two more than fixing them during development. The cost is not just the time and resources wasted in the present, but also the opportunities lost in the future.

Most defects end up costing more than it would have cost to prevent them. Defects are expensive when they occur, both the direct costs of fixing the defects and the indirect costs because of damaged relationships, lost business, and lost development time. — Kent Beck, Extreme Programming Explained

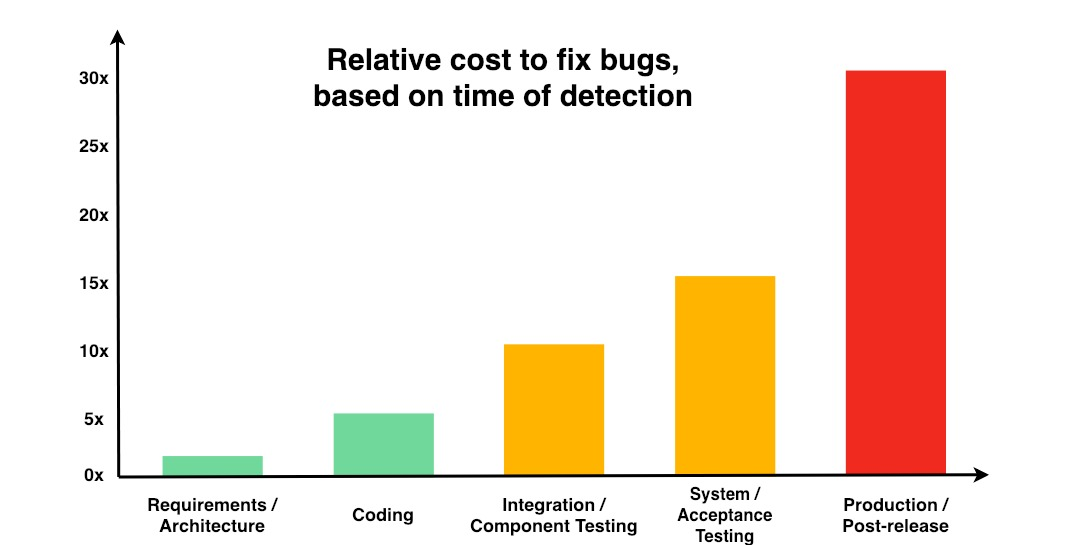

The graph above, courtesy of NIST, helps visualize how the effort of detecting and fixing defects increases as the software moves through the five broad phases of software development.

To understand why the costs increase in this manner, let’s consider the following points:

It is much easier to detect issues in code while developers are still writing it. Since the code is still fresh in their minds, even complex problems are comparatively easy to fix. As time passes and the code moves to later stages, developers need to recall the context and hunt down issues before they can fix them. If an automated system, such as code quality checks run as part of continuous integration, highlights issues while the developers are still writing the code, they are much more amenable to incorporating the fix, for the same reason.

Once the software is in the testing phase, reproducing defects in a developer's local environment becomes another time-consuming task. Additionally, while it is very easy to catch something that is obviously broken or that doesn't follow the requirements, it is incredibly difficult to uncover defects that are more fundamental, such as memory leaks and race conditions. If these issues escape the coding phase, they generally don't surface until the production phase.

After the software has been released and is out in the field, finding defects is not just difficult, it is incredibly risky as well. Fixes must be made without affecting live users, and keeping the service available is business-critical. These effects compound, and the cost can be as much as 30x higher than if the defects had been fixed early on.

Mitigation

Given the arguments above, it is valuable to implement processes that enable developers to detect early and detect often. Essentially, the development workflow should ensure that defects can be detected as early as possible, preferably while the developer is writing the code or during code review, before the code is merged to the main development branch.

Processes like continuous integration help ensure that changes to the code are small and manageable, so it's easier to detect issues. Tracking code coverage and enforcing a minimum threshold is also helpful, because it shows where tests are missing and makes it easier to iterate on the code to fix these issues. Implementing static analysis can help keep code clean by automatically detecting hundreds of bug risks, anti-patterns, security vulnerabilities, and so on.
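To make the static analysis idea concrete, here is a minimal sketch of a single check written with Python's standard `ast` module. It is not how any particular analyzer works. The function name `find_mutable_defaults` and the `SAMPLE` snippet are hypothetical, chosen only for illustration, and the check flags just one well-known bug risk: mutable literals used as default arguments.

```python
import ast


def find_mutable_defaults(source):
    """Return (function name, line number) for each mutable default argument.

    Hypothetical helper for illustration: it only recognizes list, dict,
    and set literals, which is far narrower than a real static analyzer.
    """
    findings = []
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Positional defaults plus keyword-only defaults (None = no default).
            defaults = list(node.args.defaults)
            defaults += [d for d in node.args.kw_defaults if d is not None]
            for default in defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    findings.append((node.name, default.lineno))
    return findings


# Illustrative input: the first function shares one list across calls,
# the second avoids the problem by defaulting to None.
SAMPLE = '''
def add_item(item, bucket=[]):
    bucket.append(item)
    return bucket

def safe_add(item, bucket=None):
    bucket = [] if bucket is None else bucket
    bucket.append(item)
    return bucket
'''

if __name__ == "__main__":
    for name, line in find_mutable_defaults(SAMPLE):
        print(f"{name}: mutable default argument on line {line}")
```

Run on the sample, the sketch reports `add_item` and stays silent about `safe_add`. A check like this never executes the code, so it can run in an editor or a pre-merge step, which is what makes it cheap to apply while the code is still being written.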

In essence, processes and conventions should be designed around moving defect detection as early in the workflow and as close to the developer’s coding environment as possible. This way, the same compounding effects which inflate the negative impacts of late defect detection work in favor of increasing software quality and resilience.

Published at DZone with permission of Sanket Saurav. See the original article here.

