Top 7 Notable Trends in Deep Learning and Neural Networks

Let's take a look at seven notable trends in deep learning and neural networks, including capsule networks.

The fundamental idea behind a neural network is to simulate multiple interconnected cells within a computer’s "brain" so that it can learn from its environment, recognize different patterns and, in general, make decisions similar to a human being.

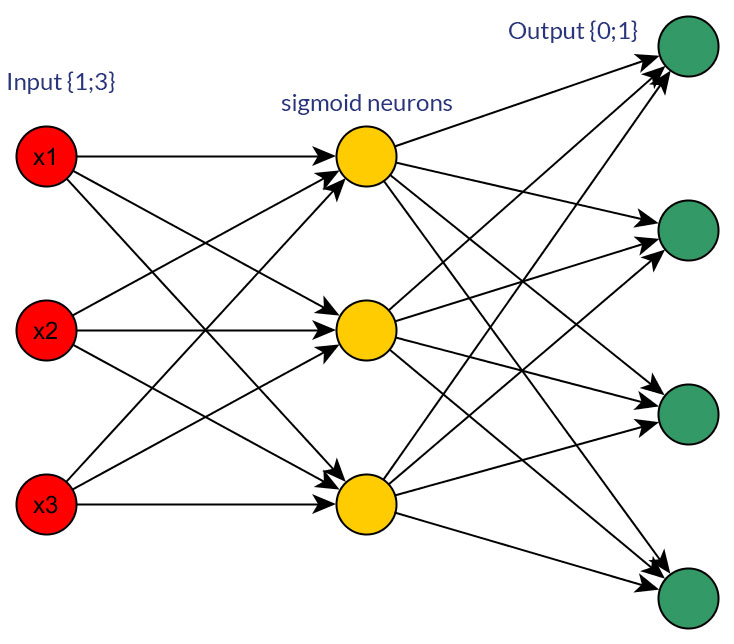

A neural network can contain anywhere from a few dozen to millions of artificial neurons, known as units. These units are arranged in layers, with each layer connected to the layers on either side of it. The units are divided as follows:

Input Units: Designed to receive information from the outside environment.

Hidden Units: These sit between the input and output units and eventually feed into the output units. Each hidden unit computes a weighted sum of its inputs and passes it through a squashing (activation) function.

Output Units: These signal how the network should respond to the information it has just received.

Most neural networks are "fully connected." This means that every hidden unit and every output unit is connected to every unit in the layers on either side. Each connection between two units carries a number called a "weight." A weight can be positive (one unit excites the other) or negative (one unit suppresses the other), and the larger its magnitude, the more influence one unit has over the other.

When a neural network is being trained, or when it operates after training, patterns of information are fed into the network through the input units. These trigger the layers of hidden units, whose outputs in turn reach the output units. This design is known as a feedforward network and is among the most commonly used.
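To make the flow from input units through hidden units to output units concrete, here is a minimal sketch of a feedforward pass in plain Python. The layer sizes, the weight values, and the choice of a sigmoid as the squashing function are assumptions made for illustration; they do not come from any particular library or trained model.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """Compute one fully connected layer.

    weights[j][i] is the connection weight from input unit i to unit j.
    """
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(sigmoid(weighted_sum))
    return outputs

def feedforward(inputs, layers):
    """Pass the inputs through each (weights, biases) layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical network: 2 input units, 2 hidden units, 1 output unit.
# Positive weights excite the next unit, negative weights suppress it.
hidden_layer = ([[0.5, -0.4], [0.3, 0.8]], [0.0, -0.1])
output_layer = ([[1.2, -0.7]], [0.05])

prediction = feedforward([1.0, 0.0], [hidden_layer, output_layer])
print(prediction)
```

Training would adjust the numbers in `hidden_layer` and `output_layer`; the forward pass itself stays the same.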

Once a neural network has been trained on enough examples, it reaches a stage where it can be presented with an entirely new set of inputs, not encountered during training, and predict an output that is satisfactorily accurate.

Below are some of the significant trends in neural networks and deep learning today.

Capsule Networks

Capsule Networks are an emerging form of deep neural network. They aim to process information in a way that is closer to the human brain, which in practice means that a capsule network can preserve hierarchical relationships between features.

This is in contrast to convolutional neural networks. Though convolutional neural networks are among the most widely used neural networks, they can fail to capture the spatial hierarchies that exist between simple and complex objects, which can lead to misclassification and a higher error rate.

On simple identification tasks, capsule networks have been reported to achieve higher accuracy with fewer errors. They are also reported to need less data to train a model.

Convolutional Neural Networks (CNN)

Convolutional neural networks have been around for decades and were inspired by biological processes, particularly the way the brain interprets the signals it receives from the eyes. State-of-the-art visual recognition systems today use CNNs to perform image classification, localization, and object detection.

Interest in convolutional neural networks has been renewed because they are heavily used for smart surveillance and monitoring, social network photo tagging and image classification, robotics, drones, and self-driving cars. Data scientists at Google, Amazon, Facebook, and other companies use them for all sorts of image filtering and classification.

A closely related field is deep learning for computer vision, which is what powers a barcode scanner's ability to "see" and "understand" the stripes in a barcode. That's also how Apple's Face ID recognizes you when it sees your face. To get started with deep learning for computer vision, there are many platforms to choose from, including Google's Vision API, Allegro.ai, and Missinglink.ai.

Deep Reinforcement Learning (DRL)

Deep Reinforcement Learning is a form of neural network learning in which the network learns by interacting with its environment through observations, actions, and rewards. DRL has been used successfully to learn game strategies for Atari games and Go. The famous AlphaGo program used it to defeat a human champion.

DRL matters because it is among the most general-purpose learning techniques you can use for developing business applications. It can also require significantly less labeled data for training models, because you can train it in simulation, where the reward signal takes the place of labels.
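The loop of observations, actions, and rewards can be sketched in a few lines. The example below is an illustration under stated assumptions, not a deep model: it uses tabular Q-learning, where a small lookup table stands in for the deep network, and the five-cell corridor environment, the function names, and all hyperparameters are hypothetical. It does show the two points made above: the agent learns inside a simulation, and no labeled data is involved.

```python
import random

N_STATES = 5        # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)  # action 0 steps left, action 1 steps right

def step(state, action):
    """Simulated environment: return (observation, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def choose_action(q_row, epsilon, rng):
    """Explore with probability epsilon, otherwise act greedily (random ties)."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    best = max(q_row)
    return rng.choice([i for i, value in enumerate(q_row) if value == best])

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values purely from simulated experience."""
    rng = random.Random(seed)
    # The table below stands in for the deep network of a real DRL system.
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = choose_action(q[state], epsilon, rng)
            next_state, reward, done = step(state, ACTIONS[action])
            future = 0.0 if done else gamma * max(q[next_state])
            # Move the estimate toward the reward plus discounted future value.
            q[state][action] += alpha * (reward + future - q[state][action])
            state = next_state
    return q

q_table = train()
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print(policy)
```

In a deep reinforcement learning system, the table would be replaced by a neural network that estimates the same values from raw observations such as game frames.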

Lean and Augmented Learning

By far the biggest obstacle in machine learning in general, and deep learning in particular, is the need for a large amount of labeled data for training neural models. Two techniques can help address this: synthesizing new data, and transferring a model trained for task A to task B.

Techniques like Transfer Learning (transferring what was learned on one task to another) and One-Shot Learning (where learning occurs from only one, or in the zero-shot case no, relevant example) are known as Lean Data Learning techniques. Similarly, synthesizing new data using interpolation or simulation helps to obtain more training data. ML experts usually refer to this as augmenting the existing data to improve learning.

Techniques such as these can be used to address a broader range of problems, especially where less historical data exists.
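As a rough illustration of synthesizing data by interpolation, the sketch below creates new training points by blending pairs of existing points that share a label. The function names and the toy dataset are hypothetical, and the approach rests on an assumption that does not hold for every dataset: that a point lying between two examples of the same class also belongs to that class.

```python
import random

def interpolate(a, b, t):
    """Blend two feature vectors; t=0 gives a, t=1 gives b."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]

def augment(samples, n_new, seed=0):
    """Create n_new synthetic (features, label) pairs from labeled samples.

    Assumes that interpolating between two samples with the same label
    yields a plausible sample with that label.
    """
    rng = random.Random(seed)
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    # Only classes with at least two examples can be interpolated.
    eligible = [label for label, group in by_label.items() if len(group) >= 2]
    if not eligible:
        return []
    synthetic = []
    for _ in range(n_new):
        label = rng.choice(eligible)
        a, b = rng.sample(by_label[label], 2)
        synthetic.append((interpolate(a, b, rng.random()), label))
    return synthetic

# Hypothetical toy dataset: two features per example, two classes.
dataset = [
    ([1.0, 1.0], "cat"), ([2.0, 1.5], "cat"),
    ([8.0, 9.0], "dog"), ([9.0, 8.0], "dog"),
]
augmented = dataset + augment(dataset, n_new=6)
print(len(augmented))
```

For images, the same idea usually takes the form of flips, crops, and rotations rather than interpolation between raw feature vectors.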

Supervised Model

A Supervised Model is a form of learning that infers a function from labeled training data. A supervised learning algorithm is given a set of inputs together with the corresponding correct outputs (the labels).

The model's predicted outputs are compared with the labeled outputs. The difference between the two gives an error value, and an algorithm then uses that error to learn the mapping between input and output.

The end goal is to approximate the mapping function well enough that, when new input data is received, an accurate output can be predicted. Much as a teacher supervises a learning process, the learning halts when the algorithm has achieved a satisfactory level of performance or accuracy.
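The cycle described above (predict, compare with the label, compute an error, adjust, stop when good enough) can be sketched with the simplest possible model, a straight line fitted by gradient descent. The function name, the learning rate, the error threshold, and the toy data are assumptions chosen for illustration.

```python
def fit_line(xs, ys, learning_rate=0.05, target_error=1e-6, max_epochs=10_000):
    """Learn w and b so that w * x + b approximates the labeled outputs."""
    w, b = 0.0, 0.0
    n = len(xs)
    mse = float("inf")
    for _ in range(max_epochs):
        # Compare the model's predictions with the labeled outputs.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        mse = sum(e * e for e in errors) / n
        # Halt once performance is satisfactory.
        if mse <= target_error:
            break
        # Use the error to adjust the mapping (gradient of the mean squared error).
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, mse

# Labeled training data generated from y = 2x + 1.
inputs = [0.0, 1.0, 2.0, 3.0, 4.0]
labels = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b, final_error = fit_line(inputs, labels)
# Predict the output for an input that was not in the training data.
print(w * 10.0 + b)
```

A neural network trained with backpropagation follows the same cycle, with many more adjustable weights than the two used here.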

Networks With Memory Model

One important aspect that distinguishes human beings from machines is the ability to work and think flexibly. Computers can undoubtedly be pre-programmed to complete a specific task with extremely high accuracy. However, problems arise when you need them to work in diverse environments.

For machines to be suitable for real-world environments, neural networks have to be capable of learning sequential tasks without forgetting. Neural networks need to overcome this "catastrophic forgetting," and several powerful architectures can help. These include:

Long Short-Term Memory (LSTM) networks, which can process and predict time series.

The Elastic Weight Consolidation algorithm, which slows down learning on the weights that matter most to previously completed tasks.

Progressive Neural Networks, which are immune to catastrophic forgetting and can also extract useful features from already learned networks for use in a new task.
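To give a feel for how Elastic Weight Consolidation slows down learning selectively, the sketch below computes its quadratic penalty: each weight is pulled back toward the value it had after the earlier task, in proportion to how important that weight was for that task. In the published algorithm the importance values come from the diagonal of the Fisher information matrix; here they are simply supplied as numbers, and the function names and example values are hypothetical.

```python
def ewc_penalty(weights, old_weights, importance, strength=1.0):
    """Penalty for moving weights away from their values after the old task.

    importance[i] says how much weight i mattered for the old task;
    strength trades off remembering the old task against learning the new one.
    """
    return 0.5 * strength * sum(
        f * (w - w_old) ** 2
        for w, w_old, f in zip(weights, old_weights, importance)
    )

def total_loss(new_task_loss, weights, old_weights, importance, strength=1.0):
    """Loss to minimize on the new task: its own loss plus the EWC penalty."""
    return new_task_loss + ewc_penalty(weights, old_weights, importance, strength)

# Hypothetical values: the first weight mattered a lot for the old task,
# the second hardly at all.
old = [0.0, 0.0]
importance = [10.0, 0.1]

cost_of_moving_important = ewc_penalty([1.0, 0.0], old, importance)
cost_of_moving_unimportant = ewc_penalty([0.0, 1.0], old, importance)
print(cost_of_moving_important, cost_of_moving_unimportant)
```

Because moving the important weight is penalized far more heavily, training on the new task tends to change the unimportant weights first, which is what protects the earlier task.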

Hybrid Learning Models

Various types of deep neural networks, including GANs and DRL, have shown a lot of promise in both performance and breadth of application across different sorts of data. That said, deep learning models do not model uncertainty the way Bayesian or probabilistic approaches do.

Hybrid Learning Models can combine the two approaches and utilize the strength of each. Some examples of such Hybrid Models include Bayesian GANs and Bayesian Conditional GANs.

Hybrid Learning Models make it possible to expand the range of business problems that can be addressed to include deep learning with uncertainty. This can lead to better performance and more explainable models, which could encourage more widespread adoption.

Summary

AI lays the foundation for a new era, and many of the breakthroughs in technology are based on it. In this post, we’ve covered some of the notable trends in deep learning and neural networks. These trends often work together: self-driving cars, for example, use a combination of multiple models, such as deep reinforcement learning and convolutional neural networks for visual recognition.

There are many other notable trends that we might have missed. What do you think are the notable trends in Deep Learning and neural networks? Share your thoughts in the comments.

Opinions expressed by DZone contributors are their own.
