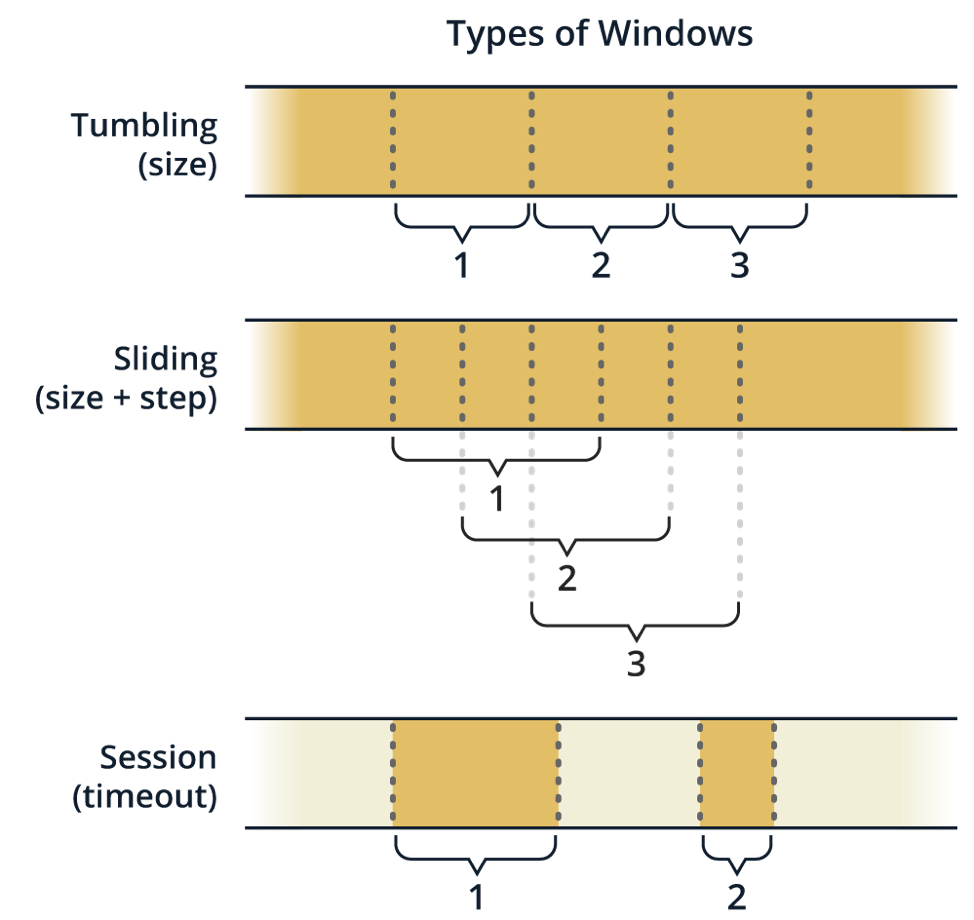

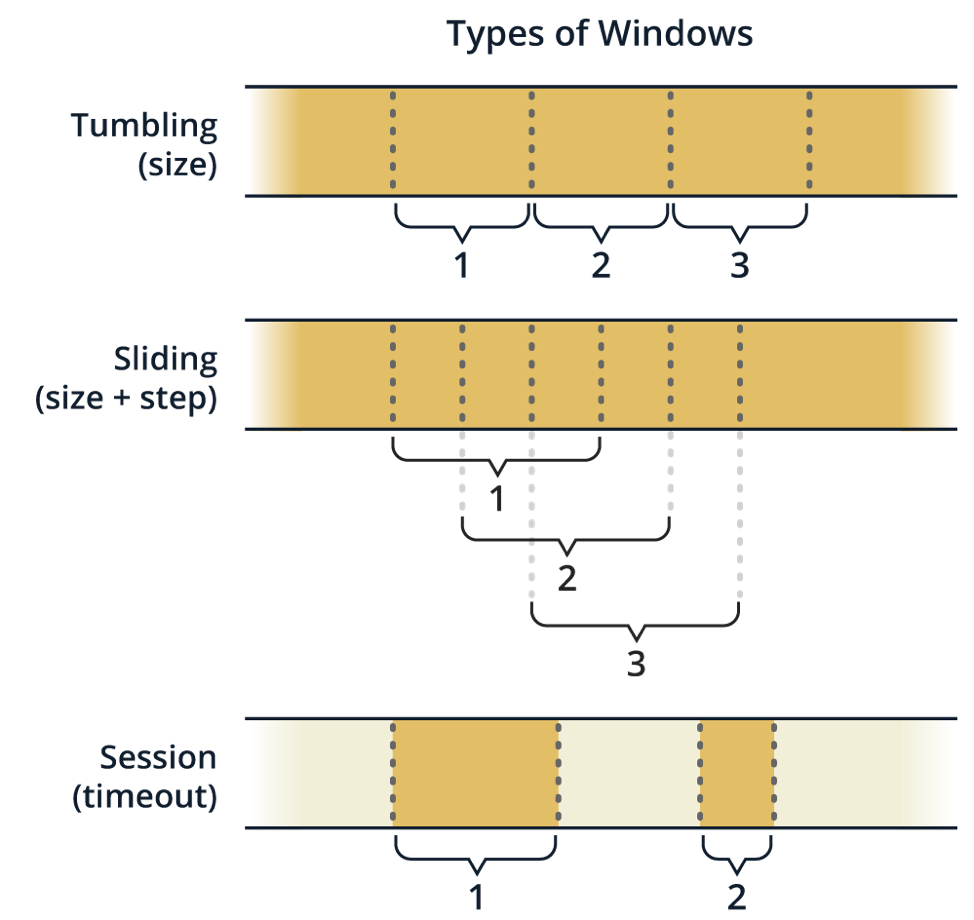

Windows provide a finite, bounded view on top of an infinite stream. A window defines how records are selected from the stream and grouped into a meaningful frame. Your transformation is executed only on the records contained in the window.

How to Define Windows

The simplest example is a tumbling window. It divides the continuous stream into discrete parts that don’t overlap. The window is usually defined by a time duration or a record count. A new window opens as soon as the duration elapses (time-based windows) or the count reaches the limit (count-based windows).

Examples:

- Time-based tumbling windows: Counting system usage stats, e.g. a count of accesses in the last minute.

- Count-based tumbling windows: Maximum score in a gaming system over the last 1,000 results.
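The two tumbling-window examples above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: timestamps are integer milliseconds, windows are aligned to multiples of the window size, and the function names are hypothetical rather than taken from any stream processing framework.

```python
from collections import Counter


def tumbling_window_start(timestamp_ms, size_ms):
    """Return the start of the tumbling window that contains the timestamp."""
    return timestamp_ms - (timestamp_ms % size_ms)


def count_per_time_window(timestamps_ms, size_ms):
    """Count records per non-overlapping time window, keyed by window start."""
    return dict(Counter(tumbling_window_start(t, size_ms) for t in timestamps_ms))


def max_per_count_window(scores, size):
    """Return the maximum of each complete, non-overlapping batch of `size` records."""
    return [max(scores[i:i + size]) for i in range(0, len(scores) - size + 1, size)]


# Accesses per one-minute window (timestamps in milliseconds).
accesses = [5_000, 30_000, 59_999, 60_000, 125_000]
print(count_per_time_window(accesses, 60_000))  # {0: 3, 60000: 1, 120000: 1}

# Maximum score per batch of three results; the trailing incomplete batch is not emitted.
print(max_per_count_window([3, 9, 4, 7, 1, 8, 2], 3))  # [9, 8]
```

Each record lands in exactly one window, which is the property that distinguishes tumbling windows from the overlapping kinds.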

A sliding window also has a fixed size, but consecutive windows can overlap. It is defined by its size and its sliding step.

Example:

- Time-based sliding windows: Counting system usage stats, e.g. the number of accesses in the last minute, updated every ten seconds.
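Because sliding windows overlap, one record belongs to several windows at once. The sketch below makes that concrete. It assumes integer millisecond timestamps, a window size that is a multiple of the sliding step, and windows aligned to multiples of the step; the function names are illustrative only.

```python
from collections import Counter


def sliding_window_starts(timestamp_ms, size_ms, step_ms):
    """Return the starts of all windows [start, start + size) containing the timestamp."""
    last_start = timestamp_ms - (timestamp_ms % step_ms)
    first_start = last_start - size_ms + step_ms
    # Starts can be negative for timestamps smaller than the window size.
    return list(range(first_start, last_start + 1, step_ms))


def count_per_sliding_window(timestamps_ms, size_ms, step_ms):
    """Count records per sliding window, keyed by window start."""
    counts = Counter()
    for t in timestamps_ms:
        for start in sliding_window_starts(t, size_ms, step_ms):
            counts[start] += 1
    return dict(counts)


# A one-minute window sliding every ten seconds: each access is counted in six windows.
print(sliding_window_starts(65_000, 60_000, 10_000))
# [10000, 20000, 30000, 40000, 50000, 60000]
```

With a size of one minute and a step of ten seconds, every record contributes to size / step = 6 windows, so the state to keep grows with that ratio.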

A session is a burst of user activity followed by a period of inactivity (timeout). A session window collects the activity belonging to the same session. As opposed to tumbling or sliding windows, session windows don’t have a fixed start or duration; their scope is data-driven.

Example: When analyzing website traffic data, the activity of one user forms one session. The session is considered closed after some period of inactivity (let’s say one hour). When the user starts browsing again later, it’s considered a new session.
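The session logic can be sketched as a gap-based split of one user's activity timestamps. This is a minimal batch-style illustration, not how a streaming engine implements it: it assumes all timestamps for the user are available up front, and it treats a gap exactly equal to the timeout as still belonging to the same session (an arbitrary choice made for this example).

```python
def sessionize(timestamps, timeout):
    """Group timestamps into sessions separated by gaps longer than `timeout`."""
    sessions = []
    for t in sorted(timestamps):
        if sessions and t - sessions[-1][-1] <= timeout:
            sessions[-1].append(t)   # still within the timeout: same session
        else:
            sessions.append([t])     # gap exceeded (or first record): new session
    return sessions


# Page views of one user, in minutes since midnight, with a one-hour timeout.
page_views = [0, 10, 45, 200, 230]
print(sessionize(page_views, 60))  # [[0, 10, 45], [200, 230]]
```

The window boundaries come out of the data itself: the first session spans 45 minutes and the second 30, and neither was known in advance.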

Keep in mind that windows may be keyed or global. A keyed window contains only the records that belong to that window and share the same key, whereas a global window contains all records that fall into it, regardless of key. See the Keyed or Global Transformations section.
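To illustrate the difference, the following sketch counts the same records once per key and window and once per window only. It assumes records are `(key, timestamp_ms)` tuples and uses time-based tumbling windows; the record layout and function names are hypothetical.

```python
from collections import defaultdict


def keyed_window_counts(records, size_ms):
    """Count records per (key, window start) pair."""
    counts = defaultdict(int)
    for key, ts in records:
        counts[(key, ts - ts % size_ms)] += 1
    return dict(counts)


def global_window_counts(records, size_ms):
    """Count records per window start, ignoring the key."""
    counts = defaultdict(int)
    for _key, ts in records:
        counts[ts - ts % size_ms] += 1
    return dict(counts)


records = [("alice", 1_000), ("bob", 2_000), ("alice", 3_000), ("alice", 61_000)]
print(keyed_window_counts(records, 60_000))
# {('alice', 0): 2, ('bob', 0): 1, ('alice', 60000): 1}
print(global_window_counts(records, 60_000))
# {0: 3, 60000: 1}
```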

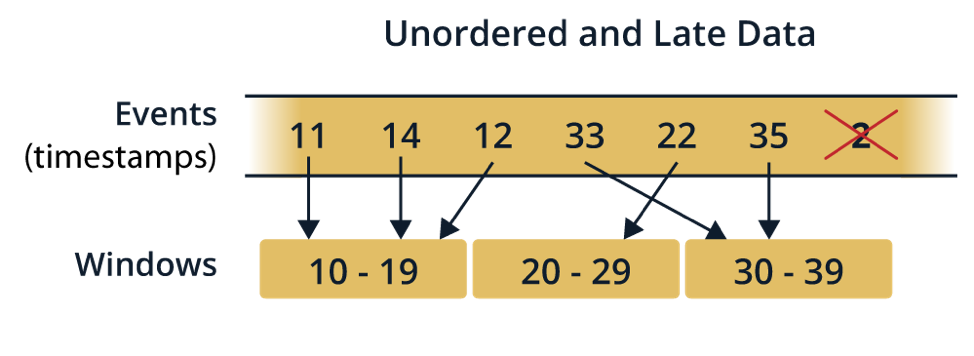

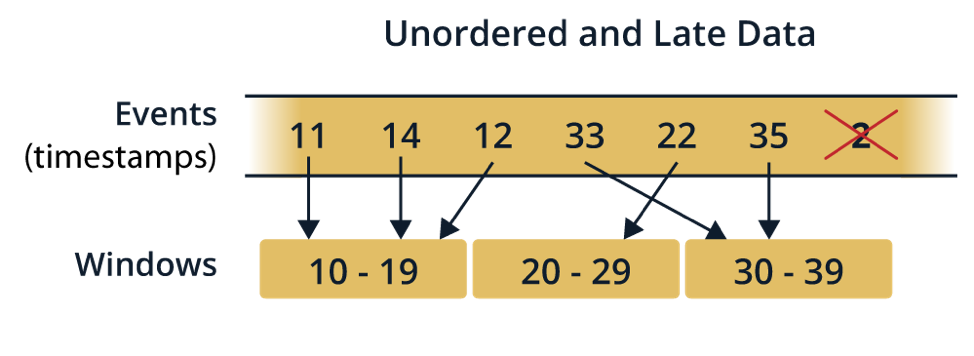

Dealing With Late Events

Very often, a stream record represents an event that happened in the real world and carries its own timestamp (the event time), which can differ considerably from the moment the record is processed (the processing time). This follows from the distributed nature of the system: the record can be delayed on its way from the source to the stream processing system, link speeds from different sources vary, the originating device may have been offline when the event occurred (e.g. an IoT sensor or a mobile device in flight mode), and so on. The difference between processing time and event time is called event time skew.

Processing time semantics should be used when:

- The business use case emphasizes low latency. Results are computed as soon as possible, without waiting for stragglers.

- The business use case builds on the time when the stream processing engine observed the event, ignoring when it originated. Sometimes, you simply don’t trust the timestamp in the event coming from a third party.

Event time semantics should be used when the timestamp of the event origin matters for the correctness of the computation. An event time-sensitive use case requires more of your attention: if event time is ignored, records can be assigned to the wrong windows, producing incorrect results.
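The effect of choosing one semantics over the other can be shown on a tiny data set. The sketch below assumes each record carries both timestamps in milliseconds and uses one-minute tumbling windows; the `Record` type and its field names are invented for this illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Record:
    event_time_ms: int        # when the event happened at the source
    processing_time_ms: int   # when the engine observed the record


def window_start(timestamp_ms, size_ms):
    """Return the start of the tumbling window containing the timestamp."""
    return timestamp_ms - (timestamp_ms % size_ms)


def counts_by(records, size_ms, timestamp_of):
    """Count records per window, using `timestamp_of` to pick the time semantics."""
    return dict(Counter(window_start(timestamp_of(r), size_ms) for r in records))


records = [
    Record(event_time_ms=10_000, processing_time_ms=11_000),
    Record(event_time_ms=50_000, processing_time_ms=130_000),  # delayed by 80 seconds
    Record(event_time_ms=70_000, processing_time_ms=71_000),
]

skews = [r.processing_time_ms - r.event_time_ms for r in records]
print(skews)  # [1000, 80000, 1000]

print(counts_by(records, 60_000, lambda r: r.event_time_ms))
# {0: 2, 60000: 1}
print(counts_by(records, 60_000, lambda r: r.processing_time_ms))
# {0: 1, 120000: 1, 60000: 1}
```

The delayed record belongs to the first minute by event time, yet under processing time semantics it is counted in the third minute.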

You have to tell the system where in the record the event time is stored. You also have to decide how long to wait for late events. If you tolerate long delays, it is more likely that you have captured all the events. Short waiting, on the other hand, gives you faster responses (lower latency) and lower resource consumption, because less data has to be buffered.

In a distributed system, there is no “upper limit” on event time skew. Imagine an extreme scenario with a score from a mobile game. The player is on a plane, so the device is in flight mode and the record cannot be sent for processing. What if the mobile device never comes online again? The event did happen, so in theory, the respective window has to stay open forever for the computation to be correct.

Stream processing frameworks provide heuristic algorithms, sometimes called watermarks, to help you assess window completeness.
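One common heuristic, shown here purely as an illustration, is to let the watermark trail the highest event time seen so far by a fixed allowed lag. A window is considered complete once the watermark passes its end, and records arriving for a completed window are treated as late. The class below is a hypothetical sketch of that idea for time-based tumbling windows; it does not reproduce the API or the exact algorithm of any particular framework.

```python
from collections import defaultdict


class WatermarkedTumblingCounter:
    """Counts events per tumbling window and closes windows using a lagging watermark."""

    def __init__(self, size_ms, allowed_lag_ms):
        self.size_ms = size_ms
        self.allowed_lag_ms = allowed_lag_ms
        self.max_event_time = float("-inf")
        self.open_counts = defaultdict(int)  # window start -> running count
        self.closed = {}                     # window start -> final count
        self.late = []                       # events that arrived after their window closed

    def watermark(self):
        """Assumed heuristic: no event older than this is expected anymore."""
        return self.max_event_time - self.allowed_lag_ms

    def add(self, event_time_ms):
        start = event_time_ms - (event_time_ms % self.size_ms)
        if start + self.size_ms <= self.watermark():
            self.late.append(event_time_ms)  # window already considered complete
            return
        self.open_counts[start] += 1
        self.max_event_time = max(self.max_event_time, event_time_ms)
        # Close every open window whose end the watermark has passed.
        for s in sorted(self.open_counts):
            if s + self.size_ms <= self.watermark():
                self.closed[s] = self.open_counts.pop(s)


counter = WatermarkedTumblingCounter(size_ms=60_000, allowed_lag_ms=10_000)
for event_time in [5_000, 58_000, 65_000, 50_000, 72_000, 40_000]:
    counter.add(event_time)

print(counter.closed)             # {0: 3}
print(dict(counter.open_counts))  # {60000: 2}
print(counter.late)               # [40000]
```

The event at 50,000 arrives out of order but within the allowed lag, so it is still counted. The event at 40,000 arrives after the watermark has passed the end of its window and is reported as late. A larger lag would have captured it at the cost of emitting the first window's result later.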

When your system handles event time-sensitive data, make sure that the underlying stream processing platform supports event time-based processing.