A Deep Dive Into Data Orchestration With Airbyte, Airflow, Dagster, and Prefect

This article delves into the integration of Airbyte with some of the most popular data orchestrators in the industry – Apache Airflow, Dagster, and Prefect.

This article delves into the integration of Airbyte with some of the most popular data orchestrators in the industry – Apache Airflow, Dagster, and Prefect. We'll not only guide you through the process of integrating Airbyte with these orchestrators but also provide a comparative insight into how each one can uniquely enhance your data workflows.

We also provide links to working code examples for each of these integrations. These resources are designed for quick deployment, allowing you to seamlessly integrate Airbyte with your orchestrator of choice.

Whether you're looking to streamline your existing data workflows, compare these orchestrators, or explore new ways to leverage Airbyte in your data strategy, this post is for you. Let's dive in and explore how these integrations can elevate your data management approach.

Overview of Data Orchestrators: Apache Airflow, Dagster, and Prefect

In the dynamic arena of modern data management, interoperability is key, yet orchestrating data workflows remains a complex challenge. This is where tools like Apache Airflow, Prefect, and Dagster become relevant for data engineering teams, each bringing unique strengths to the table.

Apache Airflow: The Veteran of Workflow Orchestration

Background

Born at Airbnb and developed by Maxime Beauchemin, Apache Airflow has evolved into a battle-tested solution for orchestrating complex data pipelines. Its adoption by the Apache Software Foundation has only solidified its position as a reliable open-source tool.

Strengths

- Robust community and support: With widespread use across thousands of companies, Airflow boasts a vast community, ensuring that solutions to common problems are readily available.

- Rich integration ecosystem: The platform's plethora of providers makes it easy to integrate with nearly any data tool, enhancing its utility in diverse environments.

Challenges

As the data landscape evolves, Airflow faces hurdles in areas like testing, non-scheduled workflows, parametrization, data transfer between tasks, and storage abstraction, prompting the exploration of alternative tools.

Dagster: A New Approach to Data Engineering

Background

Founded in 2018 by Nick Schrock, Dagster takes a first-principles approach to data engineering, considering the entire development lifecycle.

Features

- Development life cycle-oriented: It excels in handling the full spectrum from development to deployment, with a strong focus on testing and observability.

- Flexible data storage and execution: Dagster abstracts compute and storage, allowing for more adaptable and environment-specific data handling.

Prefect: Simplifying Complex Pipelines

Background

Conceived by Jeremiah Lowin, Prefect addresses orchestration by taking existing code and embedding it into a distributed pipeline backed by a powerful scheduling engine.

Features

- Engineering philosophy: Prefect operates on the assumption that users are proficient in coding, focusing on easing the transition from code to distributed pipelines.

- Parametrization and fast scheduling: It excels in parameterizing flows and offers quick scheduling, making it suitable for complex computational tasks.

Each orchestrator responds to the challenges of data workflow management in unique ways: Apache Airflow's broad adoption and extensive integrations make it a safe and reliable choice. Dagster's life cycle-oriented approach offers flexibility, especially in development and testing. Prefect's focus on simplicity and efficient scheduling makes it ideal for quickly evolving workflows.

Integrating Airbyte With Airflow, Dagster and Prefect

In this section, we will briefly discuss the unique aspects of integrating Airbyte with these three popular data orchestrators at a low level. While the detailed, step-by-step instructions are available in their respective GitHub repositories, here we'll focus on what it looks like to integrate these tools.

Airbyte and Apache Airflow Integration

Find a working example of this integration in this GitHub repo.

The integration of Airbyte with Apache Airflow creates a powerful synergy for managing and automating data workflows. Both Airbyte and Airflow are typically deployed in containerized environments, enhancing their scalability and ease of management.

Deployment Considerations

- Containerized environments: Airbyte and Airflow's containerized deployment facilitates scaling, version control, and streamlined deployment processes.

- Network accessibility: When deployed in separate containers or Kubernetes pods, ensuring network connectivity between Airbyte and Airflow is essential for seamless integration.
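As a quick way to verify the network accessibility point above, a small reachability check can be run from inside the orchestrator's container or pod. This is a minimal sketch using only the Python standard library; the function name `airbyte_reachable` and the host and port in the demo are assumptions for illustration, not values taken from the repository.

```python
import socket


def airbyte_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        return False


if __name__ == "__main__":
    # Hypothetical address: replace with the host/port of your Airbyte server.
    print(airbyte_reachable("127.0.0.1", 8000, timeout=1.0))
```

A `False` result from the orchestrator's side usually points to a networking issue (service name, port mapping, or network policy) rather than to the DAG code itself.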

Before delving into the specifics of the integration, it's important to note that the code examples and configuration details can be found in the Airbyte-Airflow GitHub repository, particularly under orchestration/airflow/dags/. This directory contains the essential scripts and files, including the elt_dag.py file, which is crucial for understanding the integration.

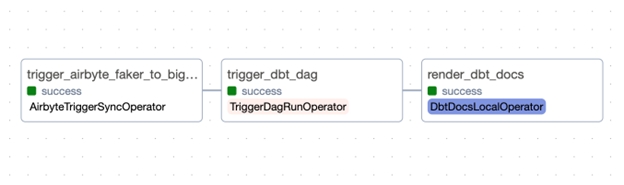

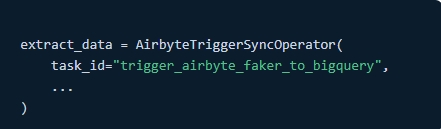

The elt_dag.py script exemplifies the integration of Airbyte within an Airflow DAG.

AirbyteTriggerSyncOperator: This operator triggers an Airbyte sync job from within the DAG, so Airbyte handles connecting to the data sources and moving the data.
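To make "triggering a sync" more concrete, here is a rough sketch of the kind of HTTP request involved, written with the standard library only. It assumes the older Airbyte Config API endpoint `/api/v1/connections/sync`; newer Airbyte versions expose a different public API, so treat the path as an assumption to verify against your deployment. The helper name `build_sync_request`, the base URL, and the connection ID are placeholders. In Airflow, the operator takes care of this for you.

```python
import json
import urllib.request


def build_sync_request(base_url: str, connection_id: str) -> urllib.request.Request:
    """Build (but do not send) the request that asks Airbyte to start a sync."""
    payload = json.dumps({"connectionId": connection_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v1/connections/sync",  # assumed endpoint
        data=payload,
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


# Placeholder values for illustration only.
request = build_sync_request(
    "http://localhost:8000", "00000000-0000-0000-0000-000000000000"
)
print(request.get_method(), request.full_url)

# To actually send it: urllib.request.urlopen(request, timeout=30)
```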

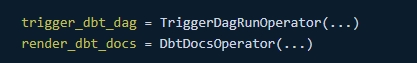

TriggerDagRunOperator and DbtDocsOperator: These operators show how Airflow can orchestrate multi-step workflows, such as triggering a dbt DAG after an Airbyte task completes.

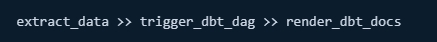

Task sequencing: The script defines the sequence of tasks, showcasing Airflow's intuitive and readable syntax.
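Airflow lets you chain tasks with the `>>` operator. The sketch below is not Airflow code; it is a small standard-library illustration of the idea, where a hypothetical `Task` class records upstream dependencies through `__rshift__` and `graphlib` resolves the execution order. The task names are made up for this example.

```python
from graphlib import TopologicalSorter


class Task:
    """Toy stand-in for an orchestrator task (not an Airflow class)."""

    def __init__(self, task_id: str) -> None:
        self.task_id = task_id
        self.upstream: set[str] = set()

    def __rshift__(self, other: "Task") -> "Task":
        # `a >> b` means: b runs after a. Returning `other` allows chaining.
        other.upstream.add(self.task_id)
        return other


# Hypothetical task names mirroring an extract-load, transform, docs sequence.
airbyte_sync = Task("airbyte_sync")
trigger_dbt = Task("trigger_dbt_dag")
dbt_docs = Task("dbt_docs")

airbyte_sync >> trigger_dbt >> dbt_docs

graph = {t.task_id: t.upstream for t in (airbyte_sync, trigger_dbt, dbt_docs)}
order = list(TopologicalSorter(graph).static_order())
print(order)
```

The same chaining style is what makes a DAG file read top to bottom like the pipeline it describes.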

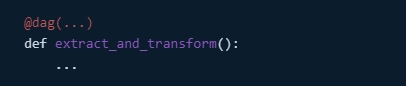

DAG definition: Using the @dag decorator, the DAG is defined in a clear and maintainable manner.

Integration Benefits

- Automated workflow management: Integrating Airbyte with Airflow automates and optimizes the data pipeline process.

- Enhanced monitoring and error handling: Airflow's capabilities in monitoring and error handling contribute to a more reliable and transparent data pipeline.

- Scalability and flexibility: The integration offers scalable solutions for varying data volumes and the flexibility to customize for specific requirements.

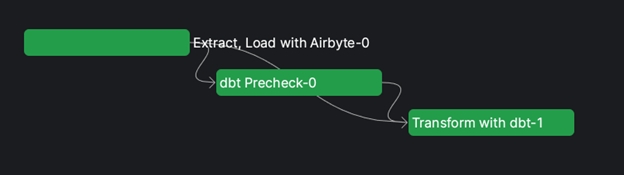

Airbyte and Dagster Integration

Find a working example of this integration in this GitHub repo.

The integration of Airbyte with Dagster brings together Airbyte's robust data integration capabilities with Dagster's focus on development productivity and operational efficiency, creating a developer-friendly approach for data pipeline construction and maintenance.

For a detailed understanding of this integration, including specific configurations and code examples, refer to the Airbyte-Dagster GitHub repository, particularly focusing on the orchestration/assets.py file.

The orchestration/assets.py file provides a clear example of how Airbyte and Dagster can be effectively integrated.

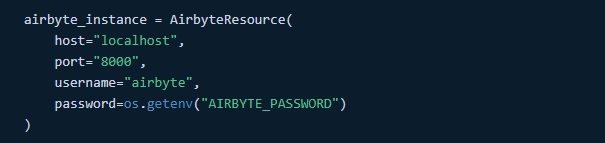

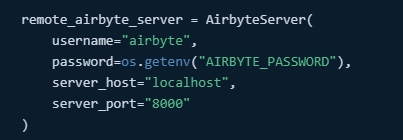

AirbyteResource configuration: This segment of the code sets up the connection to an Airbyte instance, specifying host, port, and authentication credentials. The use of environment variables for sensitive information like passwords enhances security.
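The environment-variable pattern described here can be sketched without any orchestrator at all. The example below uses a plain dataclass rather than Dagster's resource class, and the variable names (`AIRBYTE_HOST`, `AIRBYTE_PORT`, `AIRBYTE_USERNAME`, `AIRBYTE_PASSWORD`) and defaults are assumptions chosen for illustration.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping


@dataclass(frozen=True)
class AirbyteSettings:
    host: str
    port: int
    username: str
    # repr=False keeps the secret out of logs and tracebacks.
    password: str = field(repr=False)


def load_airbyte_settings(env: Mapping[str, str] = os.environ) -> AirbyteSettings:
    """Read connection settings from the environment, failing fast on a missing secret."""
    password = env.get("AIRBYTE_PASSWORD")
    if not password:
        raise RuntimeError("AIRBYTE_PASSWORD is not set")
    return AirbyteSettings(
        host=env.get("AIRBYTE_HOST", "localhost"),
        port=int(env.get("AIRBYTE_PORT", "8000")),
        username=env.get("AIRBYTE_USERNAME", "airbyte"),
        password=password,
    )


# Demo with an explicit mapping instead of the real environment.
settings = load_airbyte_settings({"AIRBYTE_PASSWORD": "example-only"})
print(settings)
```

Keeping the password out of source control and out of the object's `repr` is the main point; the rest of the fields fall back to local-development defaults.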


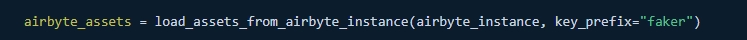

Dynamic asset loading: The integration uses the load_assets_from_airbyte_instance function to dynamically load assets based on the Airbyte instance. This dynamic loading is key for managing multiple data connectors and orchestrating complex data workflows.
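Conceptually, dynamic loading means asking Airbyte which connections and streams exist and turning each one into a named asset. The sketch below illustrates that idea on a hand-written payload. The payload shape (`syncCatalog`, `streams`, `config.selected`) is an assumption modeled on Airbyte's connection listings, and the function `asset_keys_from_connections` is hypothetical; Dagster's own function derives its asset keys with its own logic.

```python
def asset_keys_from_connections(
    connections: list[dict], prefix: str = "airbyte"
) -> list[str]:
    """Derive one asset key per selected stream across all connections."""
    keys = []
    for connection in connections:
        streams = connection.get("syncCatalog", {}).get("streams", [])
        for entry in streams:
            # Streams that are explicitly deselected do not produce an asset.
            if entry.get("config", {}).get("selected", True):
                keys.append(f"{prefix}/{entry['stream']['name']}")
    return keys


# Hand-written sample resembling an Airbyte connection listing (assumed shape).
sample_connections = [
    {
        "name": "postgres-to-warehouse",
        "syncCatalog": {
            "streams": [
                {"stream": {"name": "users"}, "config": {"selected": True}},
                {"stream": {"name": "orders"}, "config": {"selected": True}},
                {"stream": {"name": "audit_log"}, "config": {"selected": False}},
            ]
        },
    }
]

asset_keys = asset_keys_from_connections(sample_connections)
print(asset_keys)
```

Because the asset list is derived from the instance rather than hard-coded, adding a stream in Airbyte does not require a matching code change in the orchestrator.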

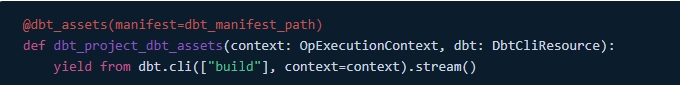

dbt integration: The snippet also demonstrates integrating dbt for data transformation within the same pipeline, showing how dbt commands can be executed within a Dagster pipeline.

Integration Benefits

- Streamlined development: Dagster's approach streamlines the development and maintenance of data pipelines, making the integration of complex systems like Airbyte more manageable.

- Operational visibility: Improved visibility into data workflows helps monitor and optimize data processes more efficiently.

- Flexible pipeline construction: The integration's flexibility allows for the creation of robust and tailored data pipelines, accommodating various business requirements.

Airbyte and Prefect Integration

Find a working example of this integration in this GitHub repo.

The integration of Airbyte with Prefect represents a forward-thinking approach to data pipeline orchestration, combining Airbyte's extensive data integration capabilities with Prefect's modern, Pythonic workflow management.

For detailed code examples and configuration specifics, refer to the my_elt_flow.py file in the Airbyte-Prefect GitHub repository, located under orchestration/my_elt_flow.py.

This file offers a practical example of how to orchestrate an ELT (Extract, Load, Transform) workflow using Airbyte and Prefect.

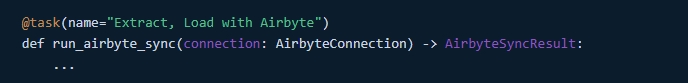

AirbyteServer configuration: The script starts by configuring a remote Airbyte server, specifying authentication details and server information. This setup is crucial for establishing a connection to the Airbyte instance.

AirbyteConnection and sync: An AirbyteConnection object is created to manage the connection to Airbyte. The run_airbyte_sync task triggers and monitors an Airbyte sync job, demonstrating how to integrate and control Airbyte tasks within a Prefect flow.
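The "trigger and monitor" behavior boils down to a polling loop. The following sketch shows that loop in plain Python, independent of Prefect. The function `wait_for_sync` is hypothetical, the status strings are assumed to match Airbyte's job statuses, and the status source is injected as a callable so the example runs without a live Airbyte server.

```python
import time
from typing import Callable

SUCCESS_STATUSES = {"succeeded"}
FAILURE_STATUSES = {"failed", "cancelled"}


def wait_for_sync(
    fetch_status: Callable[[], str],
    poll_seconds: float = 5.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll a job's status until it succeeds, fails, or the timeout elapses."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = fetch_status()
        if status in SUCCESS_STATUSES:
            return status
        if status in FAILURE_STATUSES:
            raise RuntimeError(f"Airbyte sync ended with status {status!r}")
        if time.monotonic() >= deadline:
            raise TimeoutError("Airbyte sync did not finish before the timeout")
        sleep(poll_seconds)


# Simulated status sequence standing in for repeated calls to the Airbyte API.
statuses = iter(["pending", "running", "succeeded"])
result = wait_for_sync(lambda: next(statuses), poll_seconds=0)
print(result)
```

Raising on failure, rather than returning a status, is a deliberate choice here: it lets the orchestrator mark the task as failed and stop downstream steps.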

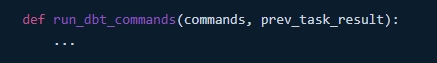

dbt integration: The flow also integrates dbt operations using the DbtCoreOperation task. This shows how dbt commands can be incorporated into the Prefect flow, linking them to the results of the Airbyte sync task.
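The link between the sync result and the dbt step can be sketched as a simple gate: dbt commands run only when the preceding sync reported success. This is a standard-library illustration, not the Prefect task itself. The function `run_dbt_after_sync`, the project directory, and the fake runner used in the demo are assumptions; in real use the default runner would invoke the `dbt` CLI.

```python
import shlex
import subprocess
from typing import Callable, Sequence


def run_dbt_after_sync(
    sync_status: str,
    commands: Sequence[str],
    project_dir: str,
    runner: Callable[..., object] = subprocess.run,
) -> list[list[str]]:
    """Run dbt commands in order, but only if the upstream sync succeeded."""
    if sync_status != "succeeded":
        raise RuntimeError(f"Skipping dbt: sync status was {sync_status!r}")
    executed = []
    for command in commands:
        argv = [*shlex.split(command), "--project-dir", project_dir]
        runner(argv, check=True)  # check=True makes a failing command raise
        executed.append(argv)
    return executed


# Demo with a fake runner so the example works without dbt installed.
recorded = []


def fake_runner(argv, check):
    recorded.append(argv)


executed = run_dbt_after_sync(
    "succeeded", ["dbt deps", "dbt run"], "dbt_project", runner=fake_runner
)
print(executed)
```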

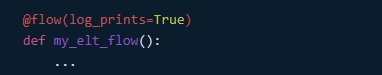

Flow definition: The Prefect @flow decorator is used to define the overall ELT workflow. The flow orchestrates the sequence of Airbyte sync and dbt tasks, showcasing Prefect's ability to manage complex data workflows elegantly.

Integration Benefits

- Dynamic workflow automation: Prefect's dynamic flow capabilities allow for flexible and adaptable pipeline construction, accommodating complex and evolving data needs.

- Enhanced error handling: The integration benefits from Prefect's robust error handling, ensuring more reliable and resilient data workflows.

- Pythonic elegance: Prefect's Python-centric approach offers a familiar and intuitive environment for data engineers, enabling the seamless integration of various data tools.

Wrapping Up

As we've explored throughout this post, integrating Airbyte with data orchestrators like Apache Airflow, Dagster, and Prefect can significantly elevate the efficiency, scalability, and robustness of your data workflows. Each orchestrator brings its own strengths to the table: Airflow's complex scheduling and dependency management, Dagster's focus on development productivity, and Prefect's modern, dynamic workflow orchestration.

The specifics of these integrations, as demonstrated through the code snippets and repository references, highlight the power and flexibility that these combinations offer.

We encourage you to delve into the provided GitHub repositories for detailed instructions and to experiment with these integrations in your own environments. The journey of learning and improvement is continuous, and the ever-evolving nature of these tools promises even more exciting possibilities ahead.

Remember, the most effective data pipelines are those that are not only well-designed but also continuously monitored, optimized, and updated to meet evolving needs and challenges. So, stay curious, keep experimenting, and don’t hesitate to share your experiences and insights with the community.

Published at DZone with permission of John Lafleur. See the original article here.
