A Step-By-Step Guide to Data Migration: Unlocking Distributed Database Potential With ShardingSphere

This blog will delve into ShardingSphere's data migration function and provide a comprehensive, step-by-step guide to building a distributed database.

Join the DZone community and get the full member experience.

Join For FreeData Migration With ShardingSphere: A Closer Look

Let's now explore one of the core features of ShardingSphere — data migration — and gain insights into its functionality and benefits. Whether it is for database upgrades or backups, you can directly use the built-in data migration functionality in ShardingSphere-Proxy. There is no need to introduce additional modules so that the project's development and maintenance process can be simplified. In this section, we'll focus on using MySQL as the target database and provide a step-by-step demonstration of the entire migration process.Scope of Data Migration Support

Currently, ShardingSphere data migration supports the following database types:- MySQL

- PostgreSQL

- openGauss

Supported Database Versions and Configuration Examples

To ensure compatibility, ShardingSphere provides data migration support for various database versions. The table below showcases the database versions and offers configuration examples for a smooth migration:Database |

Supported Version |

Required Environment |

Required Permission |

MySQL |

v5.1.15 ~ v8.0.x |

log-bin=binlog binlog-format=row binlog-row-image=full |

GRANT REPLICATION SLAVE,REPLICATION CLIENT ON .TO ${usernamet}@${host} |

PostgreSQL |

v9.4 and above |

wal_level = logical max_wal_senders = 10 max_replication_slots = 10 max_connections = 600 |

host all ${username} 0.0.0.0/0 md5 |

openGauss |

v2.0.1 ~ v3.1.x |

wal_level = logical max_wal_senders = 10 max_replication_slots = 10 max_connections = 600 wal_sender_timeout = 0 |

host all ${username} 0.0.0.0/0 md5 |

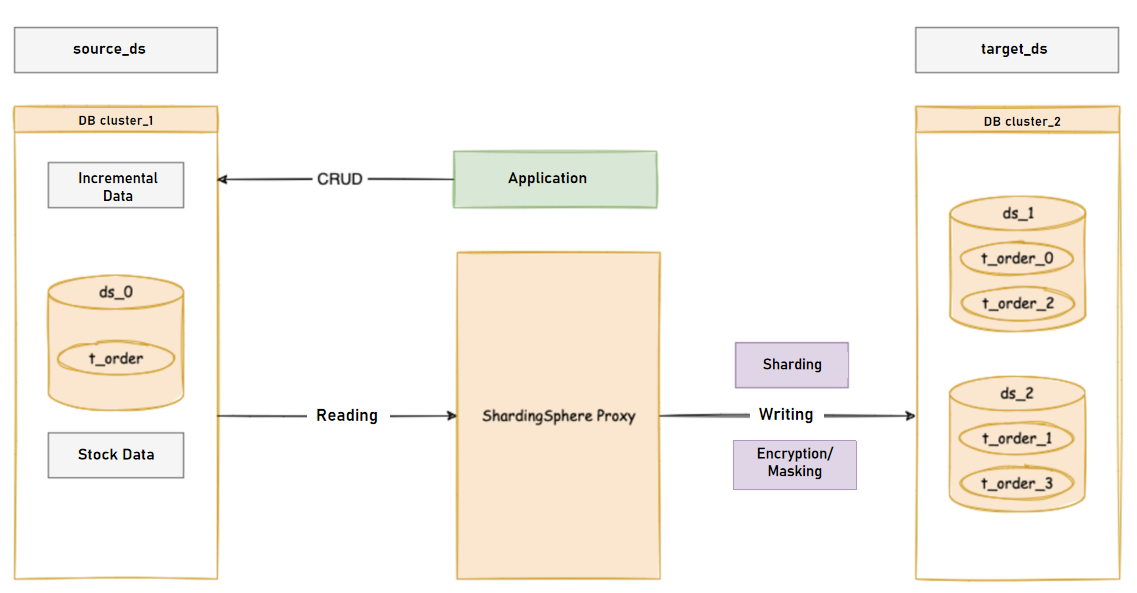

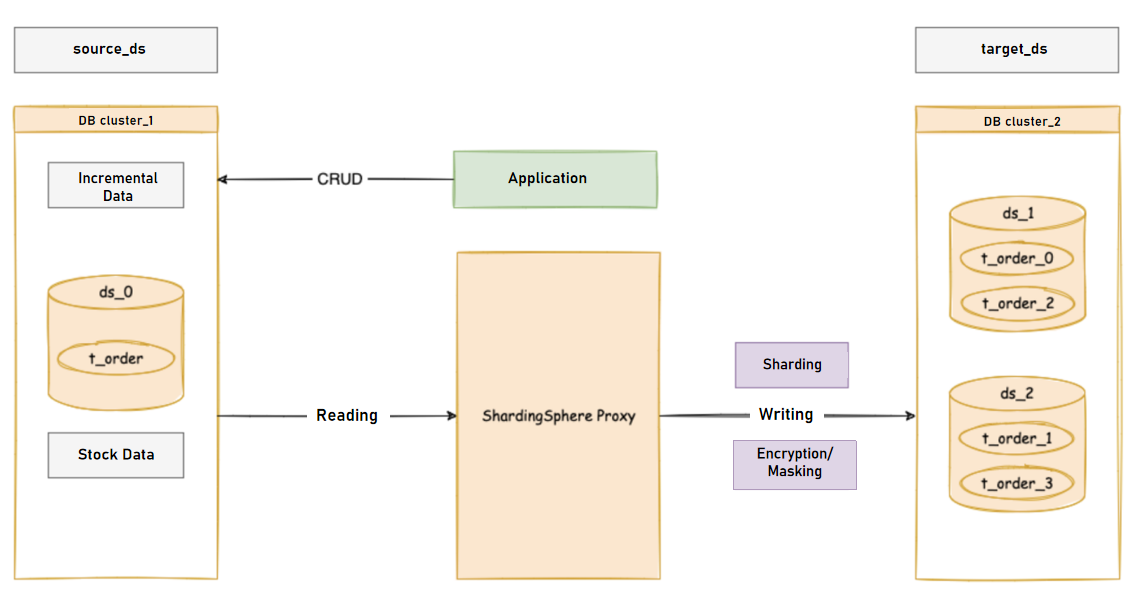

- Source Database: The source database refers to the storage cluster where the original data resides. This is the database that you intend to migrate from.

- Target Database: The target database represents the storage cluster where the original data will be migrated to. This is the database you aim to migrate your data into, transforming it into a distributed database.

- Stock Data: Stock data refers to the existing data present in the database node before initiating the migration process. This data forms the foundation of the migration process and serves as the starting point for data transfer.

- Incremental Data: Incremental data comprises the new data generated in the business system during the migration process. As migration unfolds, the business system continues to generate new data. Capturing and transferring this incremental data is crucial to ensuring data integrity and consistency during the migration process.

Data Migration Process

Data Migration Process

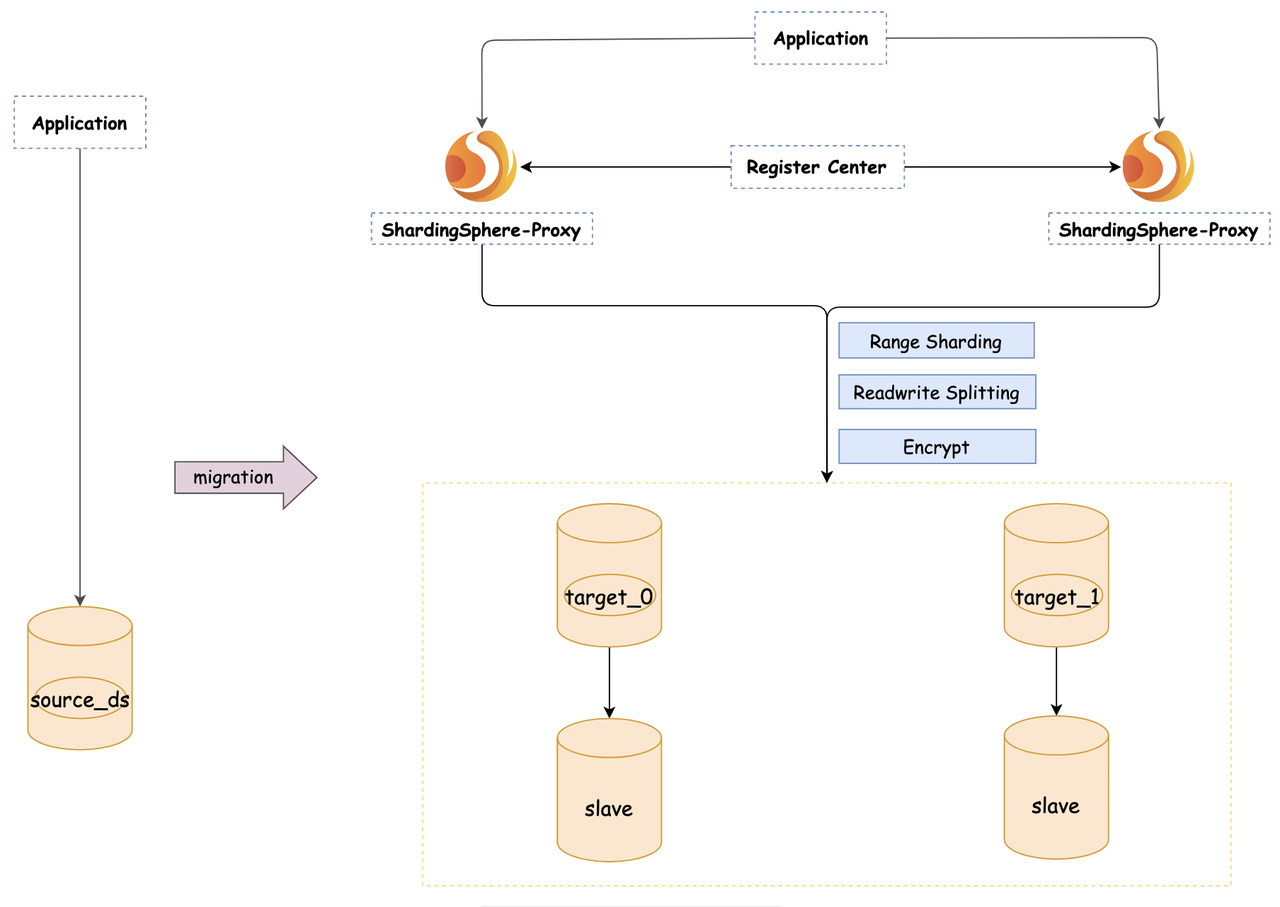

Deployment Architecture

Deployment Architecture

Deployment Architecture

Data Migration Stages

Data Migration Stages

Data Migration Stages

Environment Preparation

Before diving into the data migration process, ensure you have the following components set up:

- ShardingSphere Version: Make sure you have ShardingSphere version 5.4.0 or above installed. Alternatively, you can use the master branch version. Additionally, ensure that you are operating in cluster mode.

- MySQL Databases: Prepare one source database and two target databases—a master database and a replica. These databases will be involved in the data migration process.

- Register Center: Utilize ZooKeeper version 3.8.0 as the registry center for ShardingSphere cluster mode. This will facilitate seamless communication and coordination within the cluster.

Data Migration Process

- Preparation Stage: In this initial stage, the data migration module performs several tasks, including checking the connectivity and authority of the data source. It also conducts data stock statistics, logs recording sites, and initializes tasks to prepare for the migration process.

- Stock Data Migration: During this stage, JDBC queries are employed to directly read data from the source database and write it to the target database based on the configured sharding rules. This migration ensures that existing stock data is transferred accurately and securely.

- Incremental Data Migration: As the migration of stock data may take varying amounts of time due to factors like data volume and parallel processing, it is essential to synchronize the newly generated incremental data. For MySQL databases, this synchronization is achieved by subscribing to and parsing binlog logs. Once the initial synchronization of incremental data is complete, continuous traffic can be switched to the target database.

-

Traffic Switching: Upon completion of the data migration process, users can switch the read or write traffic to ShardingSphere, effectively utilizing the newly built distributed database.

- Monitoring and Data Consistency Verification: Throughout the migration process, users can monitor the progress in real-time using DistSQL. Additionally, the data consistency verification feature allows you to compare the data before and after migration, ensuring the accuracy and consistency of the migrated data.

Data Migration Steps

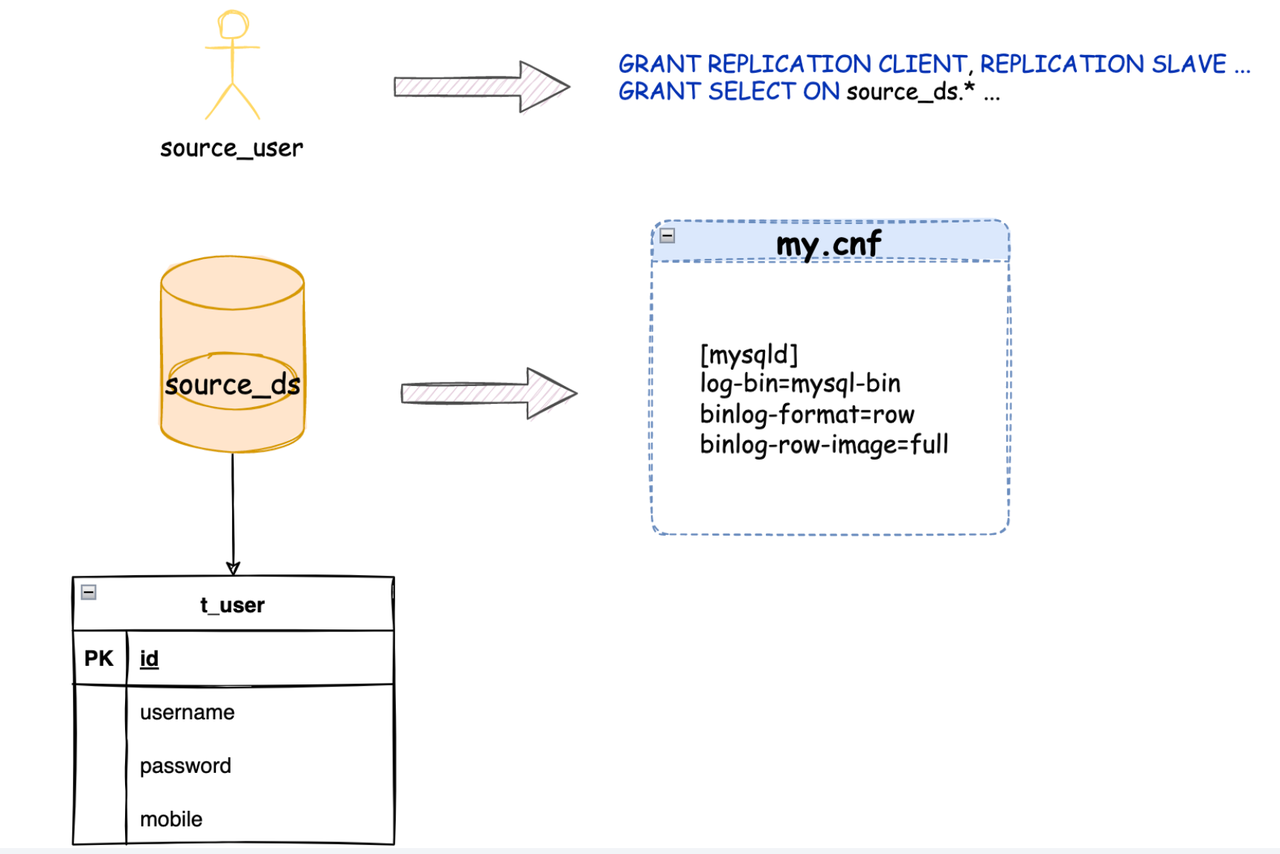

Let's first start by granting the relevant permissions.Database Permission Configuration

It is necessary to configure migration permissions on the source database, for the administrator account is generally not used for data migration. Database Permission Configuration

Database Permission Configuration

GRANT CREATE, ALTER, DROP, SELECT, INSERT, UPDATE, DELETE, INDEX ON target_ds_0.* TO target_user;

GRANT CREATE, ALTER, DROP, SELECT, INSERT, UPDATE, DELETE, INDEX ON target_ds_1.* TO target_user;Steps To Perform Data Migration

Step 1: Initialize Data in the Source Database

To begin with, you should initialize tables in the source database.Initialize tables in the source database: Create the necessary tables in the source database and populate them with initial data.

CREATE TABLE t_user

(

id int auto_increment,

username varchar(64) null,

password varchar(255) null,

mobile varchar(64) null,

constraint t_user_pk primary key (id)

);Simulate initial data: Generate simulated data to ensure the source database contains representative information for migration.

INSERT INTO t_user (id, username, password, mobile) VALUES (1, 'jack', '123456', '13111111111');

INSERT INTO t_user (id, username, password, mobile) VALUES (2, 'rose', '234567', '13111111112');

INSERT INTO t_user (id, username, password, mobile) VALUES (3, 'mike', 'aaa123', '13111111113');

INSERT INTO t_user (id, username, password, mobile) VALUES (4, 'bob', 'aaabbb', '13111111114');Step 2: Initialize ShardingSphere-Proxy Rules

Start ShardingSphere Proxy in cluster mode, log in through the database client, and execute the command to create the database.mysql> create database sharding_db;

Query OK, 0 rows affected (0.12 sec)

mysql> use sharding_db

Database changedUse DistSQL to add storage units: Configure the storage units for ShardingSphere-Proxy.

REGISTER STORAGE UNIT target_ds_0 (

URL="jdbc:mysql://localhost:3306/target_ds_0?serverTimezone=UTC&useSSL=false&allowPublicKeyRetrieval=true",

USER="target_user",

PASSWORD="root"

);

REGISTER STORAGE UNIT target_ds_1 (

URL="jdbc:mysql://localhost:3306/target_ds_1?serverTimezone=UTC&useSSL=false&allowPublicKeyRetrieval=true",

USER="target_user",

PASSWORD="root"

);

REGISTER STORAGE UNIT read_ds_0 (

URL="jdbc:mysql://localhost:3308/target_ds_0?serverTimezone=UTC&useSSL=false&allowPublicKeyRetrieval=true",

USER="target_user",

PASSWORD="root"

);

REGISTER STORAGE UNIT read_ds_1 (

URL="jdbc:mysql://localhost:3308/target_ds_1?serverTimezone=UTC&useSSL=false&allowPublicKeyRetrieval=true",

USER="target_user",

PASSWORD="root"

);Initialize Rule Definition

Initialize read/write splitting rules.

CREATE READWRITE_SPLITTING RULE rw_ds_0 (

WRITE_STORAGE_UNIT=target_ds_0,

READ_STORAGE_UNITS(read_ds_0),

TYPE(NAME="random")

);

CREATE READWRITE_SPLITTING RULE rw_ds_1 (

WRITE_STORAGE_UNIT=target_ds_1,

READ_STORAGE_UNITS(read_ds_1),

TYPE(NAME="random")

);CREATE SHARDING TABLE RULE t_user(

STORAGE_UNITS(rw_ds_0, rw_ds_1),

SHARDING_COLUMN=id,

TYPE(NAME="hash_mod",PROPERTIES("sharding-count"="4")),

KEY_GENERATE_STRATEGY(COLUMN=id,TYPE(NAME="snowflake"))

);CREATE ENCRYPT RULE t_user (

COLUMNS((NAME=password,CIPHER=password_cipher,ENCRYPT_ALGORITHM(TYPE(NAME='AES',PROPERTIES('aes-key-value'='123456abc'))))

));Step 3: Start Data Migration Job

Add the source of migration data: Register the source database as an external data source with DistSQL.

REGISTER MIGRATION SOURCE STORAGE UNIT source_ds (

URL="jdbc:mysql://${source_database_url:port}/source_ds?serverTimezone=UTC&useSSL=false",

USER="root",

PASSWORD="root"

);Execute the migration command: Use the appropriate command to begin the data migration process. The command should specify the source database and the target cluster.

MIGRATE TABLE source_ds.t_user INTO sharding_db.t_user;source_ds here indicates the data from the source database registered in the previous step, and sharding_dbindicated the database created in ShardingSphere-Proxy. Here is the example result:

mysql> MIGRATE TABLE source_ds.t_user INTO sharding_db.t_user;

Query OK, 0 rows affected (1.06 sec)Check Migration Progress

Query the migration list: Use the commandSHOW MIGRATION LISTto retrieve a list of active migration jobs, including their IDs, tables being migrated, and job statuses.

mysql> SHOW MIGRATION LIST;

+--------------------------------------------+------------------+----------------+--------+---------------------+-----------+

| id | tables | job_item_count | active | create_time | stop_time |

+--------------------------------------------+------------------+----------------+--------+---------------------+-----------+

| j0102p000041c4912117c302e9facd92f9a74a478c | source_ds.t_user | 1 | true | 2023-06-24 09:44:51 | NULL |

+--------------------------------------------+------------------+----------------+--------+---------------------+-----------+

1 row in set (0.03 sec)SHOW MIGRATION STATUS jobidto query job details. Here is the example result:

mysql> SHOW MIGRATION STATUS j0102p000041c4912117c302e9facd92f9a74a478c;

+------+-------------+------------------+--------------------------+--------+-------------------------+-------------------------------+--------------------------+---------------+

| item | data_source | tables | status | active | processed_records_count | inventory_finished_percentage | incremental_idle_seconds | error_message |

+------+-------------+------------------+--------------------------+--------+-------------------------+-------------------------------+--------------------------+---------------+

| 0 | source_ds | source_ds.t_user | EXECUTE_INCREMENTAL_TASK | true | 4 | 100 | 321 | |

+------+-------------+------------------+--------------------------+--------+-------------------------+-------------------------------+--------------------------+---------------+

1 row in set (0.04 sec)Step 4: Check Data Consistency

ShardingSphere data migration provides the ability to check data consistency through DistSQL. This is especially efficient when dealing with large amounts of data, as the data sources before and after migration are independent.- Incremental data migration has started.

- The business read-only window period with sufficient time is available.

mysql> CHECK MIGRATION j0102p000041c4912117c302e9facd92f9a74a478c BY TYPE (NAME='DATA_MATCH');

Query OK, 0 rows affected (0.48 sec)

mysql> SHOW MIGRATION CHECK STATUS j0102p000041c4912117c302e9facd92f9a74a478c;

+------------------+--------+---------------------+---------------------+-------------------+-------------------------+-------------------------+------------------+---------------+

| tables | result | check_failed_tables | finished_percentage | remaining_seconds | check_begin_time | check_end_time | duration_seconds | error_message |

+------------------+--------+---------------------+---------------------+-------------------+-------------------------+-------------------------+------------------+---------------+

| source_ds.t_user | true | | 100 | 0 | 2023-06-24 10:05:28.483 | 2023-06-24 10:05:29.821 | 1 | |

+------------------+--------+---------------------+---------------------+-------------------+-------------------------+-------------------------+------------------+---------------+

1 row in set (0.06 sec)Step 5: Submit the Migration Job

mysql> COMMIT MIGRATION j0102p000041c4912117c302e9facd92f9a74a478c;

Query OK, 0 rows affected (2.79 sec)Summary

Traditional standalone databases often become bottlenecks due to their large table data size and rapid data growth. Scaling up the application server is ineffective in such cases, making database scaling-out necessary. ShardingSphere aims to reduce the impact on business operations by providing a comprehensive data migration solution, allowing traditional standalone databases to seamlessly transition to ShardingSphere and transform into distributed databases.Published at DZone with permission of Xinze Guo. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments