Auto-Instrumenting Node.js Apps With OpenTelemetry

In this article, we will go through a working example of a Node.js application auto-instrumented with OpenTelemetry's Node.js client library.


In this tutorial, we will go through a working example of a Node.js application auto-instrumented with OpenTelemetry. In our example, we’ll use Express, the popular Node.js web application framework.

Our example application is based on two locally hosted services sending data to each other. We will instrument this application with OpenTelemetry’s Node.js client library to generate trace data and send it to an OpenTelemetry Collector. The Collector will then export the trace data to an external distributed tracing analytics tool of our choice.

If you are new to the OpenTelemetry open source project, or if you are looking for more application instrumentation options, check out this guide.

Our Example Application

In this example, service “sample-a” calls service “sample-b”. The services listen on ports 7777 and 5555, respectively.

Both services have the same user interface: we enter our name and age and send them to the other service by clicking the button. The service then generates a message confirming that the data has been sent.

The complete example is available on GitHub.

Install and Run the Example Application

Let’s take a closer look at our example application. To run it, first clone the repo to your machine:

```shell
git clone https://github.com/resdenia/openTelementry-nodejs-example.git
```

Then go to the root directory of the application and run:

```shell
npm install
```

After this, navigate to the application directory of service-A and start the service:

```shell
cd nodejsSampleA
node index.js
```

Now we need to repeat the same steps for service-B.

Instead of the above steps, you can use the wrapper script in the example repo to initialize and run service-A and service-B together by running the following from the root directory of the application:

```shell
npm run initAll
npm run dev
```

In our package.json, the initAll script runs the initializer for both services.

Now we can open service-A at http://localhost:7777 and punch in some data (and likewise service-B at http://localhost:5555).

Now that we’ve got the example application working, let’s stop it and go through the steps for adding tracing with OpenTelemetry. Then we’ll run the instrumented app and look at the traces in the Jaeger UI.

Step 1: Install OpenTelemetry Packages

Next, we need to install the OpenTelemetry packages required to auto-instrument our app:

- @opentelemetry/api
- @opentelemetry/instrumentation
- @opentelemetry/tracing
- @opentelemetry/exporter-collector
- @opentelemetry/resources
- @opentelemetry/semantic-conventions
- @opentelemetry/auto-instrumentations-node
- @opentelemetry/sdk-node

To install these packages, we run the following commands from our application directory:

```shell
npm install --save @opentelemetry/api
npm install --save @opentelemetry/instrumentation
npm install --save @opentelemetry/tracing
npm install --save @opentelemetry/exporter-collector
npm install --save @opentelemetry/resources
npm install --save @opentelemetry/semantic-conventions
npm install --save @opentelemetry/auto-instrumentations-node
npm install --save @opentelemetry/sdk-node
```

These packages automatically instrument our web requests across Express, HTTP, and the other supported modules the application uses. Thanks to this auto-instrumentation, we don’t need to change anything in our application code apart from adding a tracer, which we will do in the next step.

Step 2: Add a Tracer to the Node.js Application

The tracer module, built on the OpenTelemetry Node.js SDK, is the key component of Node.js instrumentation. It takes care of the tracing setup and graceful shutdown. The repository of our example application already includes this module; to create it from scratch, create a file called tracer.js with the following code:

```javascript
"use strict";

const { CollectorTraceExporter } = require("@opentelemetry/exporter-collector");
const { Resource } = require("@opentelemetry/resources");
const {
  SemanticResourceAttributes,
} = require("@opentelemetry/semantic-conventions");
const opentelemetry = require("@opentelemetry/sdk-node");
const {
  getNodeAutoInstrumentations,
} = require("@opentelemetry/auto-instrumentations-node");

// Send spans to the OpenTelemetry Collector over OTLP/HTTP (default endpoint).
const exporter = new CollectorTraceExporter({});

// To print spans to the console instead, swap in the ConsoleSpanExporter:
// const exporter = new opentelemetry.tracing.ConsoleSpanExporter();

// A single NodeSDK sets up and registers the tracer provider, context manager,
// and instrumentations, so no separate BasicTracerProvider is created
// (the global tracer provider can only be registered once).
const sdk = new opentelemetry.NodeSDK({
  // Name this service in the trace data ("service-b" for the other service).
  resource: new Resource({
    [SemanticResourceAttributes.SERVICE_NAME]: "service-a",
  }),
  traceExporter: exporter,
  // Auto-instrument Express, HTTP, and the other supported modules.
  instrumentations: [getNodeAutoInstrumentations()],
});

sdk
  .start()
  .then(() => console.log("Tracing initialized"))
  .catch((error) => console.log("Error initializing tracing", error));

// Flush pending spans and shut tracing down when the process is terminated.
process.on("SIGTERM", () => {
  sdk
    .shutdown()
    .then(() => console.log("Tracing terminated"))
    .catch((error) => console.log("Error terminating tracing", error))
    .finally(() => process.exit(0));
});
```

As you can see, tracer.js takes care of instantiating the trace provider and configuring it with a trace exporter of our choice. Because we want to send the trace data to an OpenTelemetry Collector (as we’ll see in the following steps), we use the CollectorTraceExporter.

If you want to see trace output on the console to verify the instrumentation, you can use the ConsoleSpanExporter, which prints spans to the console; tracer.js includes a commented-out line for it.

This is the only code you need to write to instrument your Node.js app. In particular, you don’t need to change the service modules themselves: they are auto-instrumented for you.
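One refinement worth considering: the handler in tracer.js only listens for SIGTERM, but pressing Ctrl+C in a terminal sends SIGINT, so spans the SDK has not yet exported may be lost when you stop a service that way. The following is a minimal sketch of a helper that runs a shutdown function once for either signal and then exits. `registerGracefulShutdown` is a hypothetical name, and `shutdown` is assumed to be any function returning a promise, such as `() => sdk.shutdown()`.

```javascript
"use strict";

// Hypothetical helper: run `shutdown` (e.g. () => sdk.shutdown()) once on the
// first SIGINT or SIGTERM, then exit. `exit` is injectable so the helper can
// be exercised without terminating the process.
function registerGracefulShutdown(
  shutdown,
  { signals = ["SIGINT", "SIGTERM"], exit = (code) => process.exit(code) } = {}
) {
  let shuttingDown = false;
  const handler = (signal) => {
    if (shuttingDown) return; // ignore repeated signals while spans are flushed
    shuttingDown = true;
    console.log(`Received ${signal}, shutting down tracing`);
    Promise.resolve()
      .then(() => shutdown()) // a synchronous throw becomes a rejection here
      .then(() => console.log("Tracing terminated"))
      .catch((error) => console.log("Error terminating tracing", error))
      .finally(() => exit(0));
  };
  for (const signal of signals) {
    process.on(signal, handler);
  }
  return handler;
}
```

In tracer.js, the existing `process.on("SIGTERM", ...)` block could then be replaced by `registerGracefulShutdown(() => sdk.shutdown());`.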

Step 3: Set Up the OpenTelemetry Collector to Collect and Export Traces to Our Backend

The last component that we will need is the OpenTelemetry Collector, which we can download here.

In our example, we use the otelcontribcol_darwin_amd64 flavor, but you can choose any other build of the collector from the list, as long as it is compatible with your operating system and CPU architecture.

The data collection and export settings in the OpenTelemetry Collector are defined by a YAML config file. We will create this file in the same directory as the collector file that we have just downloaded and call it config.yaml. This file will have the following configuration:

```yaml
receivers:
  otlp:
    protocols:
      grpc:
      http:

exporters:
  logzio:
    account_token: "<<TRACING-SHIPPING-TOKEN>>"
    #region: "<<LOGZIO_ACCOUNT_REGION_CODE>>" - (Optional)

processors:
  batch:

extensions:
  pprof:
    endpoint: :1777
  zpages:
    endpoint: :55679
  health_check:

service:
  extensions: [health_check, pprof, zpages]
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [logzio]
```

In this example, we send the traces to Logz.io’s distributed tracing service, so we configure the collector with the Logz.io exporter, which sends traces to the Logz.io account identified by the account token (if you don’t have an account, you can get a free one here). However, you can export the trace data from the OpenTelemetry Collector to any other tracing backend by adding the required exporter configuration to this file (you can read more about exporter options here).
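To check the collector-to-backend leg of this pipeline independently of the Node.js services, you can hand-build a single span and POST it to the otlp receiver's HTTP endpoint. The sketch below assumes the OTLP/JSON encoding and the `/v1/traces` path on port 4318, the default OTLP/HTTP port in current collector releases; older releases used port 55681 and the field name `instrumentationLibrarySpans` instead of `scopeSpans`, so adjust for your version. The function names, the service name `otlp-smoke-test`, and the scope name are hypothetical.

```javascript
"use strict";

const http = require("http");
const crypto = require("crypto");

// Hypothetical helper: build an OTLP/JSON body containing one finished server span.
// Assumes the current OTLP/JSON field names (scopeSpans); IDs are hex strings.
function buildOtlpPayload(serviceName, spanName, nowMs = Date.now()) {
  const endNs = BigInt(nowMs) * 1000000n;
  const startNs = endNs - 5000000n; // pretend the span lasted 5 ms
  return {
    resourceSpans: [
      {
        resource: {
          attributes: [{ key: "service.name", value: { stringValue: serviceName } }],
        },
        scopeSpans: [
          {
            scope: { name: "manual-smoke-test" },
            spans: [
              {
                traceId: crypto.randomBytes(16).toString("hex"),
                spanId: crypto.randomBytes(8).toString("hex"),
                name: spanName,
                kind: 2, // SPAN_KIND_SERVER
                startTimeUnixNano: startNs.toString(),
                endTimeUnixNano: endNs.toString(),
              },
            ],
          },
        ],
      },
    ],
  };
}

// Hypothetical helper: POST the payload to an OTLP/HTTP receiver (assumed
// localhost:4318/v1/traces by default) and resolve with the HTTP status code.
function sendPayload(payload, { host = "localhost", port = 4318, path = "/v1/traces" } = {}) {
  const body = JSON.stringify(payload);
  return new Promise((resolve, reject) => {
    const req = http.request(
      {
        host,
        port,
        path,
        method: "POST",
        headers: {
          "Content-Type": "application/json",
          "Content-Length": Buffer.byteLength(body),
        },
      },
      (res) => {
        res.resume(); // discard the response body
        res.on("end", () => resolve(res.statusCode));
      }
    );
    req.on("error", reject);
    req.end(body);
  });
}

// Print an example payload; sending it is left to the caller.
console.log(JSON.stringify(buildOtlpPayload("otlp-smoke-test", "smoke-span"), null, 2));
```

For example, `sendPayload(buildOtlpPayload("otlp-smoke-test", "smoke-span")).then((status) => console.log(status))` should log 200 if the receiver accepted the span, after which it should appear in the tracing backend under the otlp-smoke-test service.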

Step 4: Run It All Together and Verify in Jaeger UI

Now that we have everything set up, let’s launch our system again and send some traces.

First, we need to start the OpenTelemetry Collector by running the collector binary with the path to the config.yaml file. In our example, both files are in the application directory, so we run:

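```shell
./otelcontribcol_darwin_amd64 --config ./config.yaml
```

The collector is now running and listening for incoming traces on port 4317 (OTLP over gRPC). The http protocol enabled in config.yaml additionally exposes an OTLP/HTTP endpoint, which is the protocol the CollectorTraceExporter in tracer.js uses.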
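The config.yaml above also enables the health_check extension, which gives a quick way to confirm the collector is up before starting the services. Since no endpoint is set for it, it listens on its default port, 13133, and answers HTTP 200 on `/` when the collector is ready. A minimal probe, assuming those defaults (`isCollectorHealthy` is a hypothetical name):

```javascript
"use strict";

const http = require("http");

// Hypothetical helper: probe the Collector's health_check extension
// (assumed default port 13133, path "/"). Resolves true only on HTTP 200;
// connection errors and timeouts resolve false.
function isCollectorHealthy({ host = "localhost", port = 13133, path = "/", timeoutMs = 2000 } = {}) {
  return new Promise((resolve) => {
    const req = http.get({ host, port, path, timeout: timeoutMs }, (res) => {
      res.resume(); // drain the body so the socket is released
      resolve(res.statusCode === 200);
    });
    req.on("timeout", () => req.destroy(new Error("timeout")));
    req.on("error", () => resolve(false));
  });
}

isCollectorHealthy().then((healthy) => {
  console.log(healthy ? "Collector is healthy" : "Collector is not reachable or not ready");
});
```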

Next, we start each service as we did at the beginning of this tutorial (the snippet below is for service-A; repeat it for service-B):

```shell
cd nodejsSampleA
node --require './tracer.js' index.js
```

There is one important difference: this time we add the --require './tracer.js' parameter when starting the service. It loads the tracer.js we wrote in Step 2 before the application code, which enables the instrumentation.
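When you repeat this for service-B, check which service name its tracer.js reports: the tracer.js shown in Step 2 hard-codes "service-a", so a copy reused unchanged would label service-B's spans as service-a in the tracing backend. One option, sketched below, is to read the name from the `OTEL_SERVICE_NAME` environment variable, which the OpenTelemetry specification defines for this purpose, and fall back to a default. `resolveServiceName` is a hypothetical helper; reading the variable by hand like this works regardless of whether your SDK version honors it automatically.

```javascript
"use strict";

// Hypothetical helper: prefer the OTEL_SERVICE_NAME environment variable,
// falling back to `fallback` when it is unset or blank.
function resolveServiceName(env, fallback) {
  const fromEnv = (env.OTEL_SERVICE_NAME || "").trim();
  return fromEnv.length > 0 ? fromEnv : fallback;
}

// In tracer.js this would replace the hard-coded name:
//   [SemanticResourceAttributes.SERVICE_NAME]: resolveServiceName(process.env, "service-a")
console.log(resolveServiceName(process.env, "service-a"));
```

Service-B could then be started with `OTEL_SERVICE_NAME=service-b node --require './tracer.js' index.js`.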

If you use the wrapper script from the repo to run both services together, as discussed at the beginning, note that it already includes the tracer.js parameter, so all you need to do now is run the following command from the root directory of the application:

```shell
npm run dev
```

All that is left to do at this point is to visit http://localhost:5555 and http://localhost:7777 and perform some requests, so that we have sample data to look at. The Collector then picks up these traces and sends them to the distributed tracing backend defined by the exporter in the collector config file. If tracer.js is configured with the ConsoleSpanExporter, you can also watch in the console what is being generated.

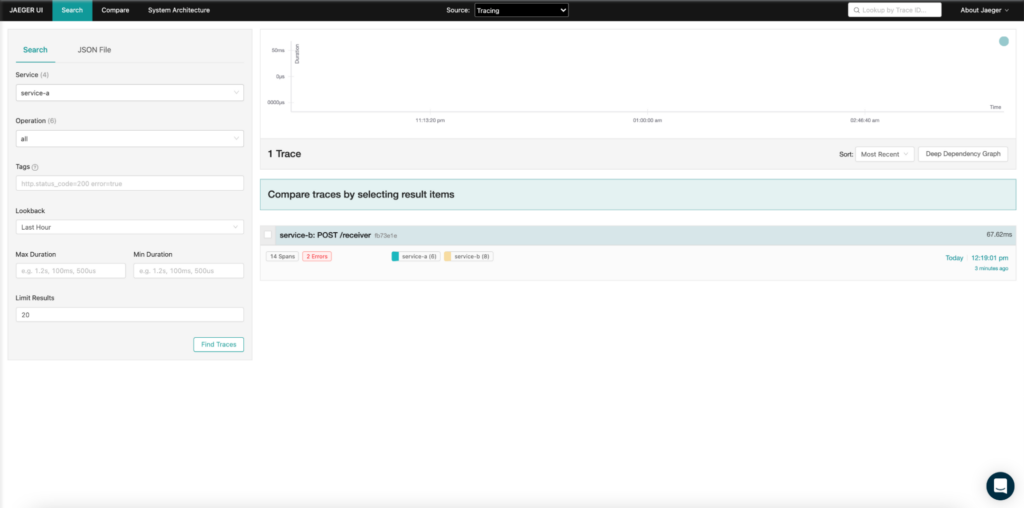

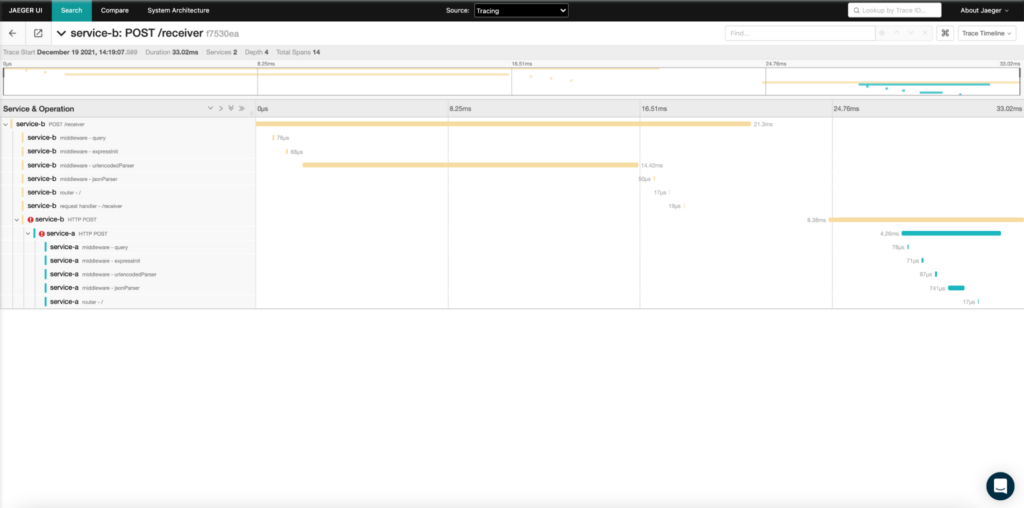
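Instead of clicking through the two UIs by hand, you can generate some baseline traffic with a short script. The sketch below uses hypothetical `generateTraffic` and `getStatus` helpers built on Node's http module and sends sequential GET requests to each service's root page; each request should produce a server span in the instrumented service. It only loads the pages, so the cross-service trace described below still requires submitting the form in the UI.

```javascript
"use strict";

const http = require("http");

// Hypothetical helper: GET a URL and resolve with its HTTP status code.
function getStatus(url) {
  return new Promise((resolve, reject) => {
    http
      .get(url, (res) => {
        res.resume(); // drain the body so the socket is released
        resolve(res.statusCode);
      })
      .on("error", reject);
  });
}

// Hypothetical helper: request every URL `count` times, one request at a time,
// and collect the status codes in order.
async function generateTraffic(urls, count = 5) {
  const statuses = [];
  for (let i = 0; i < count; i += 1) {
    for (const url of urls) {
      statuses.push(await getStatus(url));
    }
  }
  return statuses;
}

generateTraffic(["http://localhost:7777/", "http://localhost:5555/"], 5)
  .then((statuses) => console.log("Status codes:", statuses.join(" ")))
  .catch((error) => console.log("Request failed:", error.message));
```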

Let’s check the Jaeger UI to make sure our traces arrived correctly:

Voilà! We can see the trace starting from one service, running a few operations, then invoking the other service to carry out the request we punched in at the beginning.
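What lets a single trace span both services is context propagation: by default, the OpenTelemetry JS SDK uses the W3C Trace Context format, so the HTTP instrumentation in the calling service adds a `traceparent` header (version, trace ID, parent span ID, flags) to the outgoing request, and the called service continues the same trace ID. The sketch below parses and builds that header by hand purely for illustration; the SDK's own W3CTraceContextPropagator does this for you. `parseTraceparent` and `childTraceparent` are hypothetical names, and the sketch only handles the version-00 layout.

```javascript
"use strict";

const crypto = require("crypto");

// traceparent (version 00): version-traceId-parentId-flags, lowercase hex.
const TRACEPARENT_RE = /^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$/;

// Hypothetical helper: parse a traceparent value; returns null if it is invalid.
function parseTraceparent(header) {
  const match = TRACEPARENT_RE.exec((header || "").trim());
  if (!match) return null;
  const [, version, traceId, parentId, flags] = match;
  // Version "ff" is forbidden, and all-zero trace or span IDs are invalid.
  if (version === "ff" || /^0+$/.test(traceId) || /^0+$/.test(parentId)) return null;
  return { version, traceId, parentId, sampled: (parseInt(flags, 16) & 1) === 1 };
}

// Hypothetical helper: header for an outgoing call within the same trace.
// Keeps the trace ID and sampled flag; uses a fresh span ID for the calling span.
function childTraceparent(parent) {
  const spanId = crypto.randomBytes(8).toString("hex");
  return `00-${parent.traceId}-${spanId}-${parent.sampled ? "01" : "00"}`;
}

const incoming = parseTraceparent("00-0af7651916cd43dd8448eb211c80319c-b7ad6b7169203331-01");
console.log(incoming);
console.log(childTraceparent(incoming));
```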

Summary

As you can see, OpenTelemetry makes it pretty simple to automatically instrument Node.js applications. All we had to do was:

- Install the required Node.js packages for OpenTelemetry instrumentation

- Add a tracer.js to the application

- Deploy and configure the OpenTelemetry Collector to receive the trace data and send it to our tracing analytics backend

- Re-run the instrumented application and explore the traces arriving at the tracing analytics backend with the Jaeger UI

For more information on OpenTelemetry instrumentation, visit this guide. If you are interested in trying this integration with the Logz.io backend, feel free to sign up for a free account and then follow this documentation to set up auto-instrumentation for your own Node.js application.

Published at DZone with permission of Dotan Horovits. See the original article here.

