Auto-Synchronization of a Whole MySQL Database for Data Analysis

Learn how to automatically ingest and update MySQL or Oracle database into an OLAP engine, and say goodbye to tedious manual configuration.

Join the DZone community and get the full member experience.

Join For FreeFlink-Doris-Connector 1.4.0 allows users to ingest a whole database (MySQL or Oracle) that contains thousands of tables into Apache Doris, a real-time analytic database, in one step.

With built-in Flink CDC, the Connector can directly synchronize the table schema and data from the upstream source to Apache Doris, which means users no longer have to write a DataStream program or pre-create mapping tables in Doris.

When a Flink job starts, the Connector automatically checks for data equivalence between the source database and Apache Doris. If the data source contains tables that do not exist in Doris, the Connector will automatically create those same tables in Doris and utilize the side outputs of Flink to facilitate the ingestion of multiple tables at once; if there is a schema change in the source, it will automatically obtain the DDL statement and make the same schema change in Doris.

Quick Start

For MySQL

Download JAR file.

Maven

<dependency>

<groupId>org.apache.doris</groupId>

<artifactId>flink-doris-connector-1.15</artifactId>

<!--artifactId>flink-doris-connector-1.16</artifactId-->

<!--artifactId>flink-doris-connector-1.17</artifactId-->

<version>1.4.0</version>

</dependency>For Oracle

Download JAR file: Flink 1.15, Flink 1.16, Flink 1.17

How To Use It

For example, to ingest a whole MySQL database mysql_db into Doris (the MySQL table names start with tbl or test), simply execute the following command (no need to create the tables in Doris in advance):

<FLINK_HOME>/bin/flink run \

-Dexecution.checkpointing.interval=10s \

-Dparallelism.default=1 \

-c org.apache.doris.flink.tools.cdc.CdcTools \

lib/flink-doris-connector-1.16-1.4.0.jar \

mysql-sync-database \

--database test_db \

--mysql-conf hostname=127.0.0.1 \

--mysql-conf username=root \

--mysql-conf password=123456 \

--mysql-conf database-name=mysql_db \

--including-tables "tbl|test.*" \

--sink-conf fenodes=127.0.0.1:8030 \

--sink-conf username=root \

--sink-conf password=123456 \

--sink-conf jdbc-url=jdbc:mysql://127.0.0.1:9030 \

--sink-conf sink.label-prefix=label1 \

--table-conf replication_num=1To ingest an Oracle database, please refer to the example code.

How It Performs

When it comes to synchronizing a whole database (containing hundreds or even thousands of tables, active or inactive), most users want it to be done within seconds. So we tested the Connector to see if it came up to scratch:

- 1000 MySQL tables, each having 100 fields. All tables were active (which meant they were continuously updated, and each data writing involved over a hundred rows)

- Flink job checkpoint: 10s

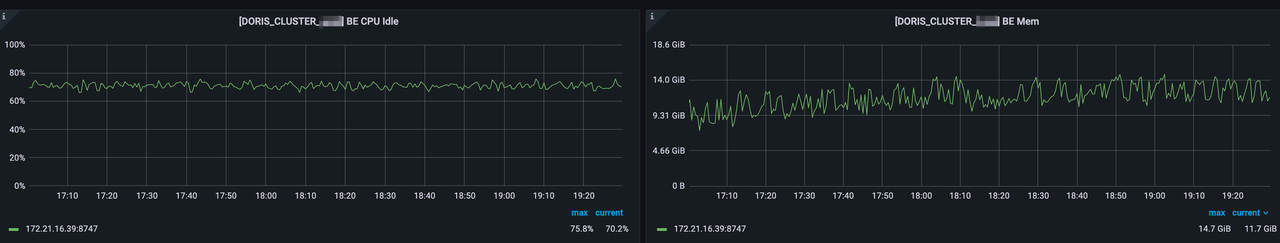

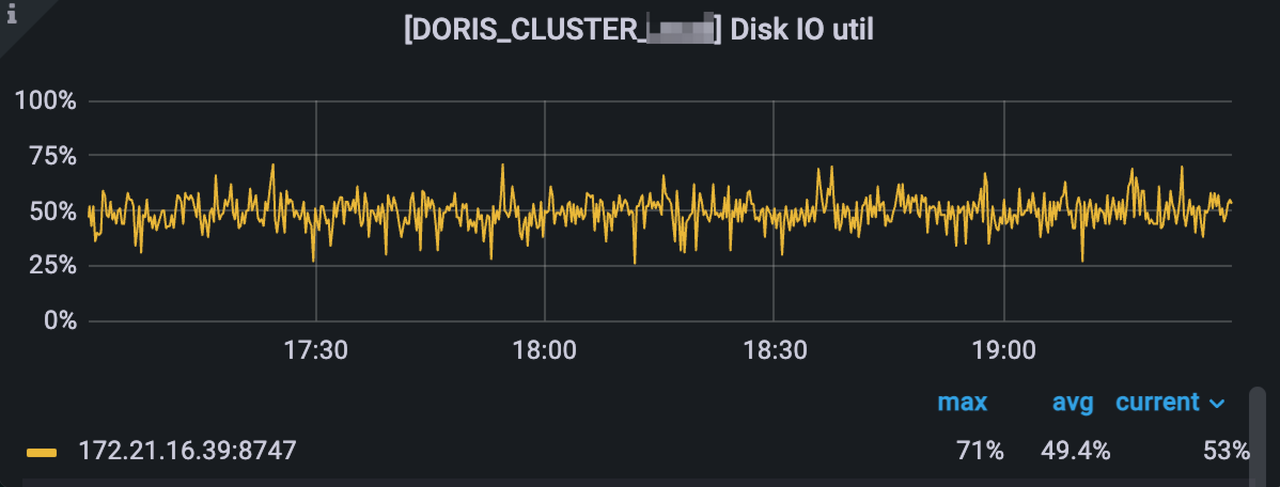

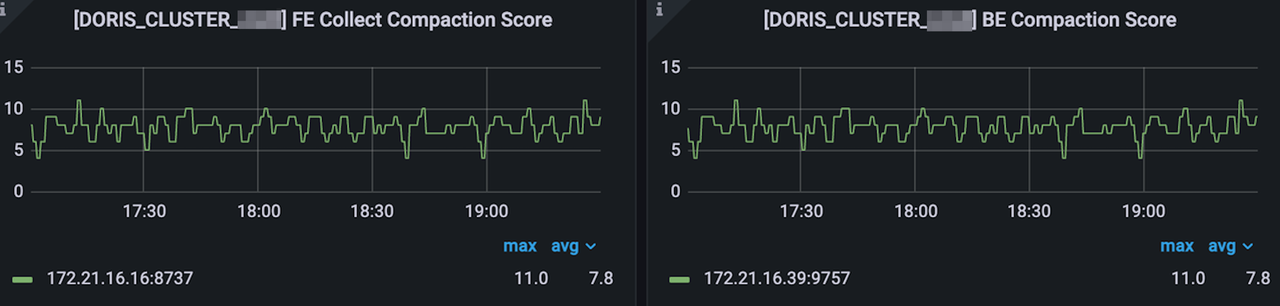

Under pressure test, the system showed high stability, with key metrics as follows:

According to feedback from early adopters, the Connector has also delivered high performance and system stability in 10,000-table database synchronization in their production environment. This proves that the combination of Apache Doris and Flink CDC is capable of large-scale data synchronization with high efficiency and reliability.

How It Benefits Data Engineers

Engineers no longer have to worry about table creation or table schema maintenance, saving them days of tedious and error-prone work. Previously, in Flink CDC, you needed to create a Flink job for each table and build a log parsing link at the source end, but now, with whole-database ingestion, resource consumption in the source database is largely reduced. It is also a unified solution for incremental updates and full updates.

Other Features

1. Joining Dimension Table and Fact Table

The common practice is to put dimension tables in Doris and run join queries via the real-time stream of Flink. Based on the Async I/O of Flink, Flink-Doris-Connector 1.4.0 implements asynchronous Lookup Join, so the Flink real-time stream won't be blocked due to queries. Also, the Connector allows you to combine multiple queries into one big query and send it to Doris at once for processing. This improves the efficiency and throughput of such join queries.

2. Thrift SDK

We introduced Thrift-Service SDK into the Connector so users no longer have to use Thrift plug-ins or configure a Thrift environment in compilation. This makes the compilation process much simpler.

3. On-Demand Stream Load

During data synchronization, when there is no new data ingestion, no Stream Load requests will be issued. This avoids unnecessary consumption of cluster resources.

4. Polling of Backend Nodes

For data ingestion, Doris calls a frontend node to obtain a list of the backend nodes and randomly chooses one to launch an ingestion request. That backend node will be the Coordinator. Flink-Doris-Connector 1.4.0 allows users to enable the polling mechanism, which is to have a different backend node be the Coordinator at each Flink checkpoint to avoid too much pressure on a single backend node for a long time.

5. Support for More Data Types

In addition to the common data types, Flink-Doris-Connector 1.4.0 supports DecimalV3/DateV2/DateTimev2/Array/JSON in Doris.

Example Usage

Read From Apache Doris

You can read data from Doris via DataStream or FlinkSQL (bounded stream). Predicate pushdown is supported.

CREATE TABLE flink_doris_source (

name STRING,

age INT,

score DECIMAL(5,2)

)

WITH (

'connector' = 'doris',

'fenodes' = '127.0.0.1:8030',

'table.identifier' = 'database.table',

'username' = 'root',

'password' = 'password',

'doris.filter.query' = 'age=18'

);

SELECT * FROM flink_doris_source;Join Dimension Table and Fact Table

CREATE TABLE fact_table (

`id` BIGINT,

`name` STRING,

`city` STRING,

`process_time` as proctime()

) WITH (

'connector' = 'kafka',

...

);

create table dim_city(

`city` STRING,

`level` INT ,

`province` STRING,

`country` STRING

) WITH (

'connector' = 'doris',

'fenodes' = '127.0.0.1:8030',

'jdbc-url' = 'jdbc:mysql://127.0.0.1:9030',

'lookup.jdbc.async' = 'true',

'table.identifier' = 'dim.dim_city',

'username' = 'root',

'password' = ''

);

SELECT a.id, a.name, a.city, c.province, c.country,c.level

FROM fact_table a

LEFT JOIN dim_city FOR SYSTEM_TIME AS OF a.process_time AS c

ON a.city = c.cityWrite to Apache Doris

CREATE TABLE doris_sink (

name STRING,

age INT,

score DECIMAL(5,2)

)

WITH (

'connector' = 'doris',

'fenodes' = '127.0.0.1:8030',

'table.identifier' = 'database.table',

'username' = 'root',

'password' = '',

'sink.label-prefix' = 'doris_label',

//json格式写入

'sink.properties.format' = 'json',

'sink.properties.read_json_by_line' = 'true'

);

Published at DZone with permission of Shirley H.. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments