Automatically Synchronizing Data in Two SQL Server Databases

This tutorial explains how to automatically synchronize data into 2 SQL Server databases.

Join the DZone community and get the full member experience.

Join For FreeData in SQL databases often needs to be synchronized in real-time — this can be achieved by checking one database for updates and then applying them to another database. In this case, the process of change detection and synchronization should be run automatically on a schedule and with no need for outside intervention.

How We Can Achieve This Goal

Data Compare is an external tool that allows you to compare data in SQL databases, backups, and script folders. With dbForge Data Compare for SQL Server, you can schedule almost real-time database synchronization.

You can set up the process by following these steps:

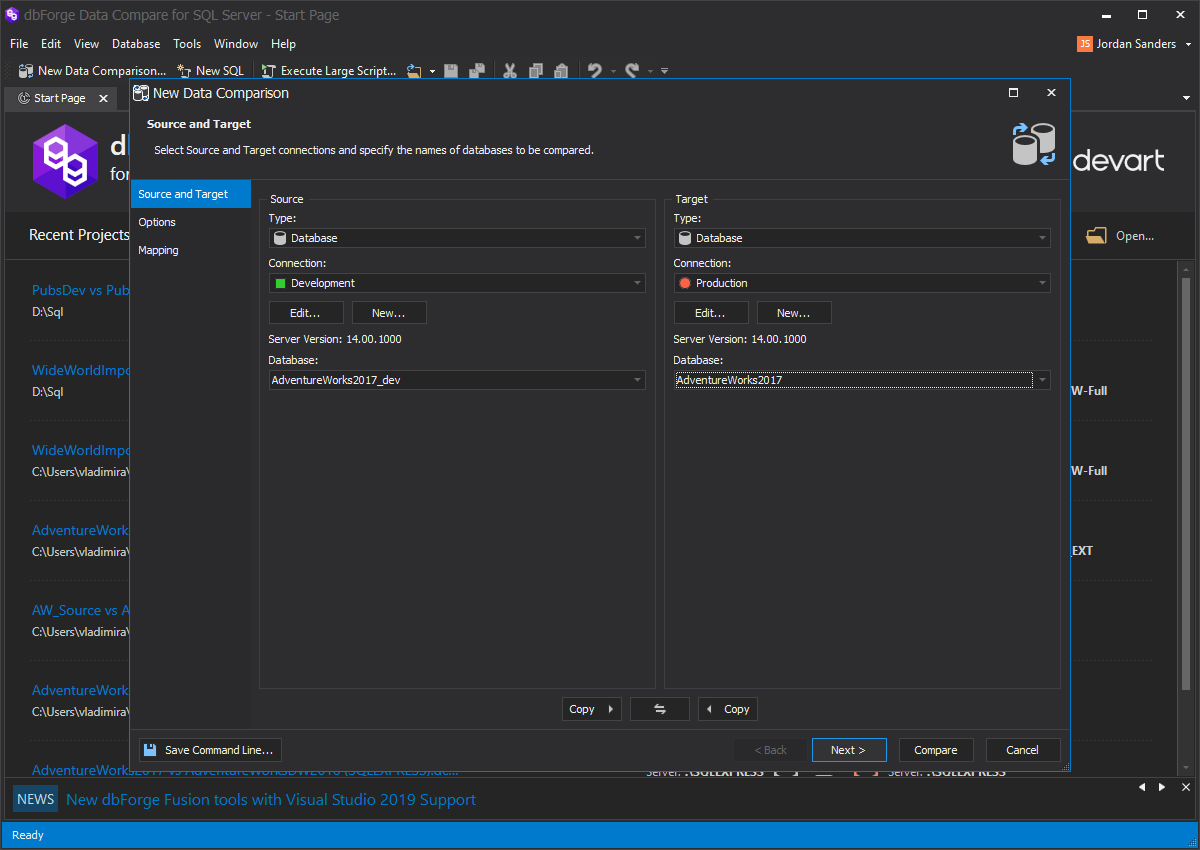

- Run Data Compare, and in the new Data Comparison window, choose the source and target databases in the corresponding tab:

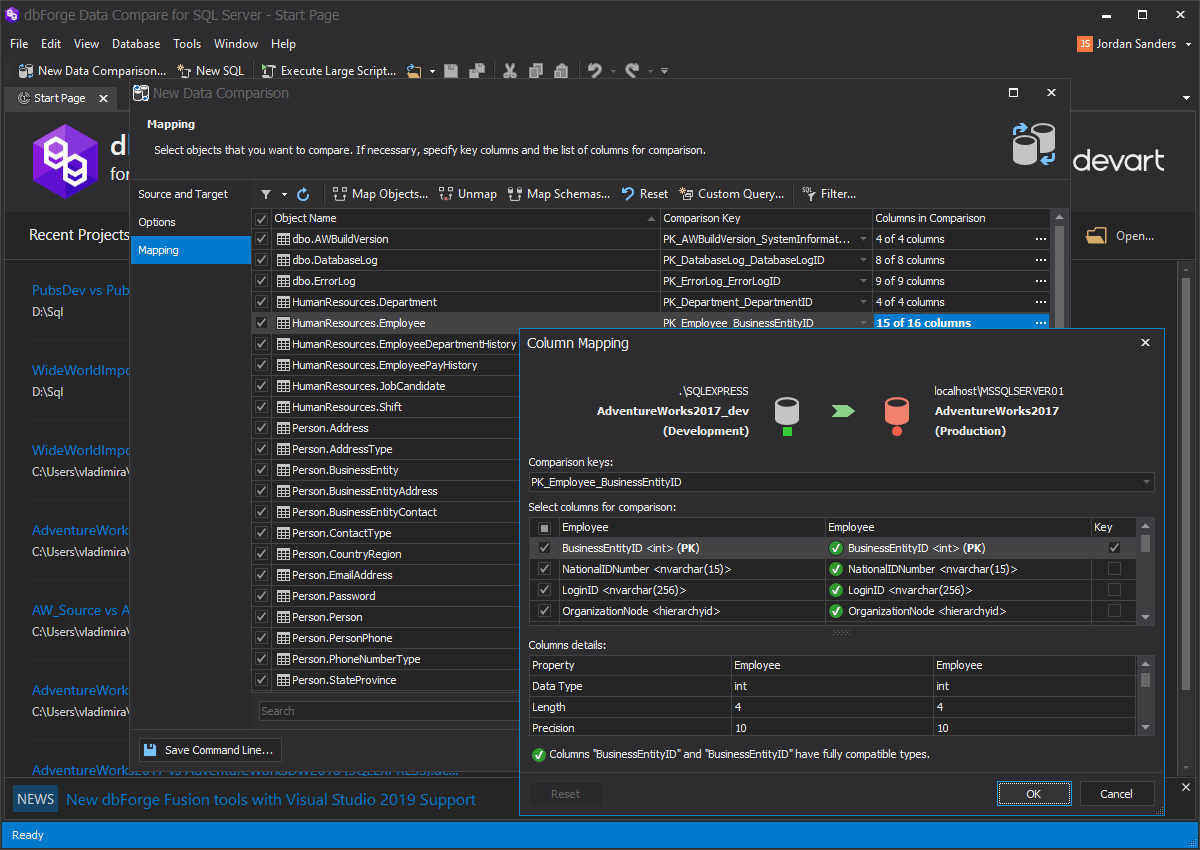

- You can set up various comparison settings in the Options tab if this is needed. Also, in the Mapping tab, you will be able to select which objects should be compared. Also, you can specify the key columns and the list of columns for comparison, if this is necessary:

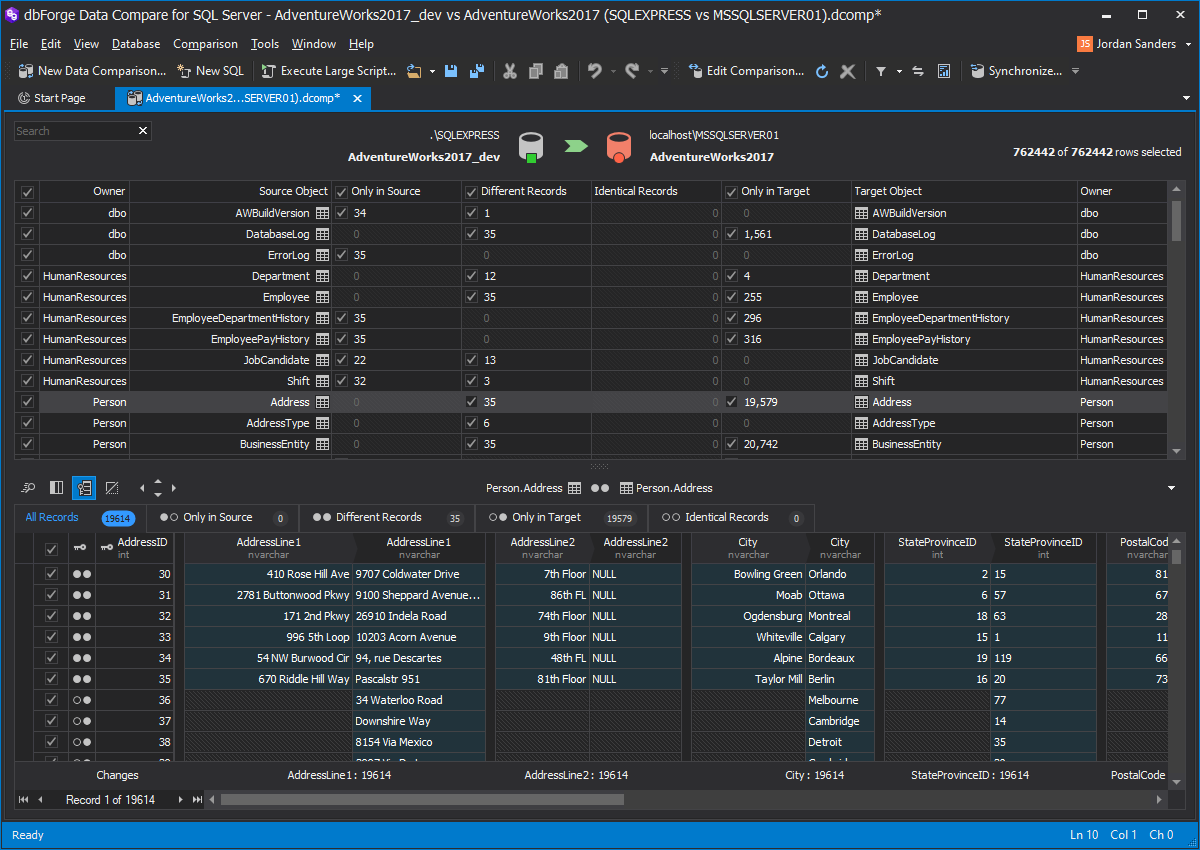

- When everything’s ready, press the Compare button in the bottom-right corner to start the comparison process. When the comparison is done, you can see the results in detail:

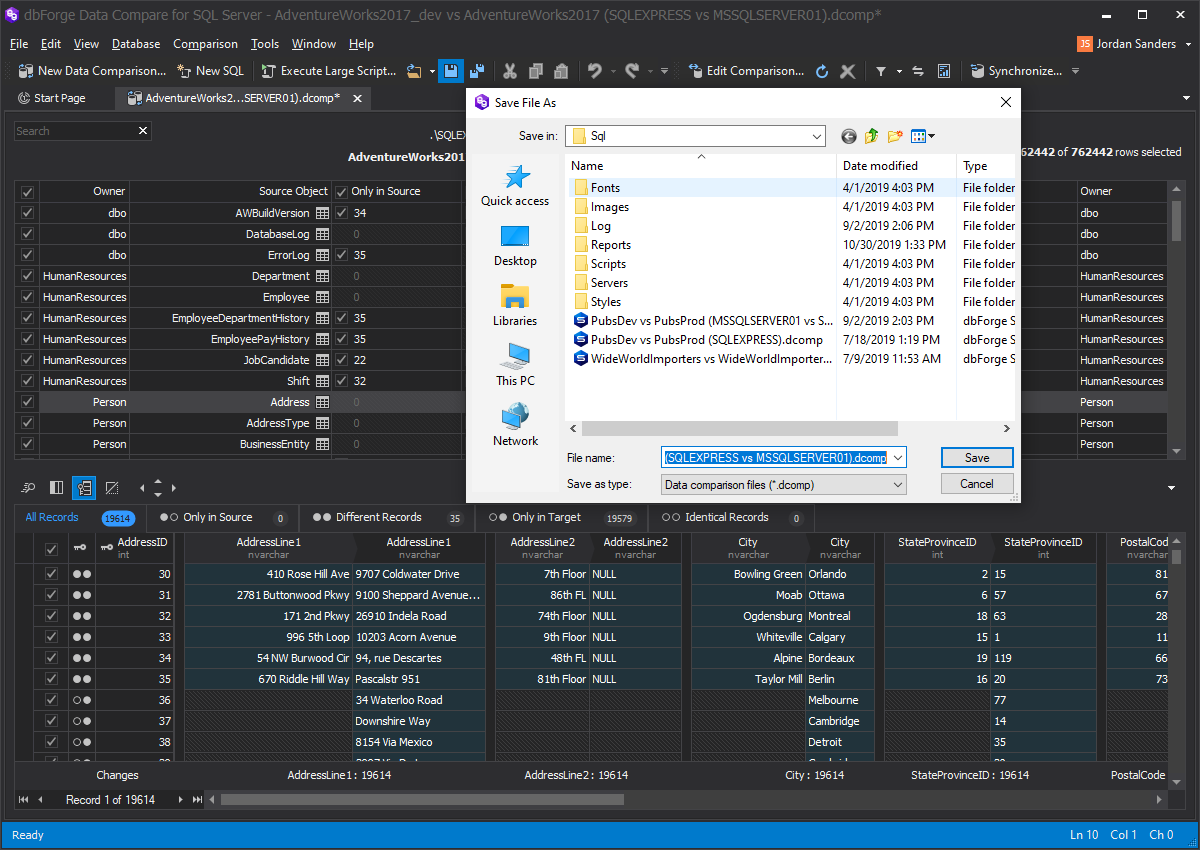

- Select all necessary objects by using the corresponding checkmarks and click Save. The saved project (dcomp) file will contain all objects and options needed for scheduling data synchronization.

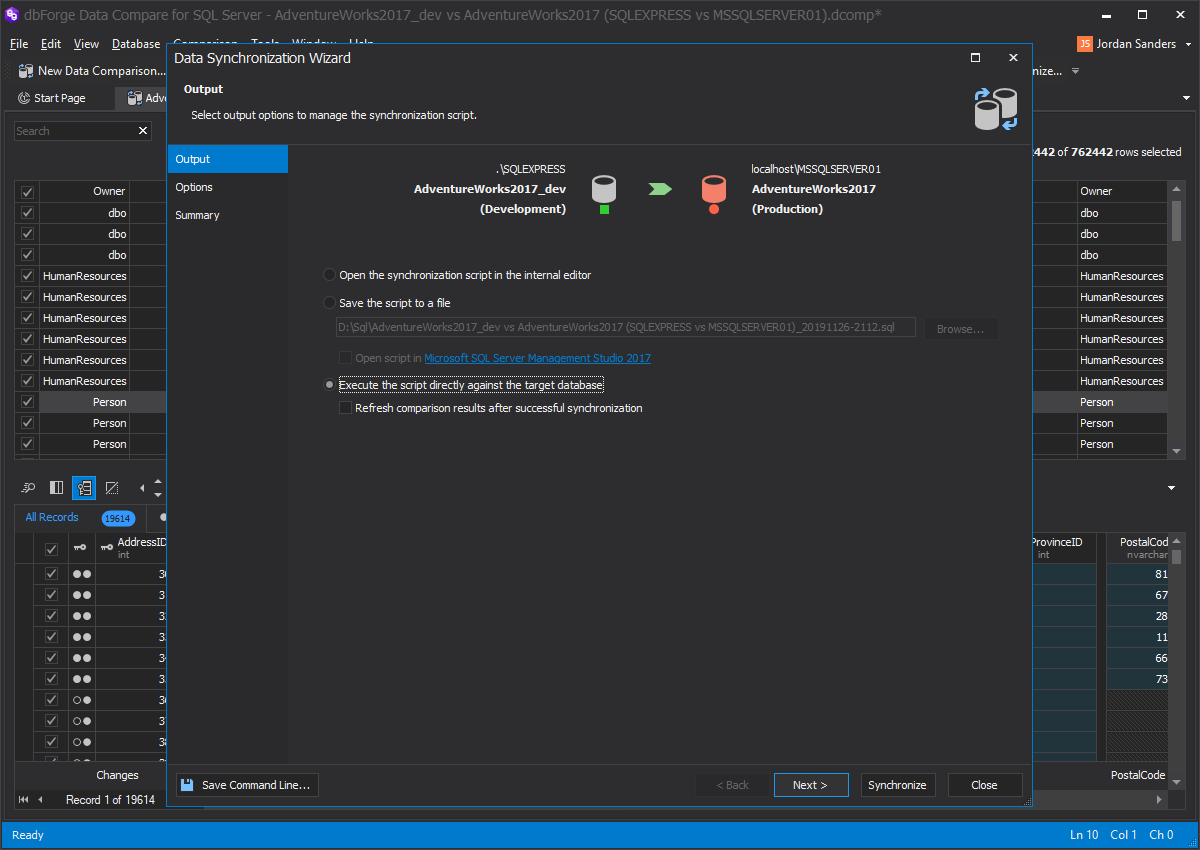

- When the project (dcomp) file is saved, press the Synchronize button which will open the Synchronization wizard.

- Choose ‘Execute the script directly against the target database’ so that the databases will be synchronized after you set up all necessary options, and press Synchronize. When the synchronization process is over, you can view synchronization results in the bottom pane of the window.

Automating the Process

As we have already successfully tested the synchronization process in Data Compare and saved the project (dcomp) file, let’s automate the process with a PowerShell script.

Setting Things Up

First, we’ll need to create a function that will check if the Outputs folder exists — it will be used to store date-stamped output summaries. We want to make sure that an easy-to-find application log of every synchronization is saved in case we will need to perform troubleshooting in the future:

#checks if the Outputs folder exists. If it doesn’t, the script creates it and returns its full path

function CheckAndCreateFolder($rootFolder, [switch]$Outputs)

{

$location = $rootFolder

#set the location based on the used switch

if($Outputs -eq $true)

{

$location += "\Outputs"

}

#create the folder if it doesn't exist and return its path

if(-not (Test-Path $location))

{ mkdir $location -Force:$true -Confirm:$false | Out-Null }

return $location

}

Next, let’s define the root folder and the location for date-stamped output summaries:

xxxxxxxxxx

#set the root folder

$rootFolder = "D:\DataSync\"

#set the location of output files

$outsLoc = CheckAndCreateFolder $rootFolder -Outputs

Variable and Switches

In this section, we define the application’s location along with the data stamp variable. Also, we define the variable containing the application’s parameters, such as:

- the path to the saved project (dcomp) file

- the /sync switch for direct synchronization of the destination database

- the /rece switch which returns the ‘102 – No differences detected’ message when data sources are equal

- a date-stamped output summary

The following script allows us to achieve this:

xxxxxxxxxx

#define the tool’s location, date stamp variable and the tool’s parameters

$toolLocation = "C:\Program Files\Devart\dbForge Studio for MySQL\dbforgemysql.com"

$dateStamp = (Get-Date -Format "Mmddyyyy_HHMMss")

#output log file path

$logPath = "$outsLoc\DataOutput_$dateStamp.txt"

$Params = "/datacompare /compfile:""D:\DataSync\Project\test_DB_1vstest_DB_2.dcomp"" /log:""$logPath"""

$sync = " /sync"

Execution

The next part of the PowerShell script will call Data Compare from its location with the parameters we stated in the previous step. Then, the return code variable is defined:

xxxxxxxxxx

#initiate the comparison of data sources

(Invoke-Expression ("& `"" + $toolLocation +"`" " +$Params))

$returnCode = $LASTEXITCODE

$message = ""

The script’s final part serves to create proper responses for the three possible outcomes:

- An error occurred and the output summary will be opened.

- There are differences, e.g. return code 0 – Success

- There are no differences, e.g. return code 100 – No differences detected

xxxxxxxxxx

if ($returnCode -notin (100, 101))

{ #an error is encountered

$logPath = "$outsLoc\DataOutput_error.txt"

$message >> $logPath

clear-content $logPath

$message = "`r`n $returnCode - An error is encountered"

#output file is opened when an error is encountered

Invoke-Item "$logPath"

}

else{

if ($returnCode -eq 101)

{

clear-content $logPath

(Invoke-Expression ("& `"" + $toolLocation +"`" " +$Params+$sync))

$returnCode = $LASTEXITCODE

#schema changes are detected

}

if($returnCode -eq 0)

{

$message = "`r`n $returnCode - Schema changes were successfully synchronized"

}

else

{

#there are no schema changes

if($returnCode -eq 100)

{

$message = "`r`n $returnCode - There are no schema changes. Job aborted"

}

}

}

$message >> $logPath

Now that the job has been automated, it can be scheduled in any way you prefer – for example, with the help of Windows Scheduler.

Reviewing Results

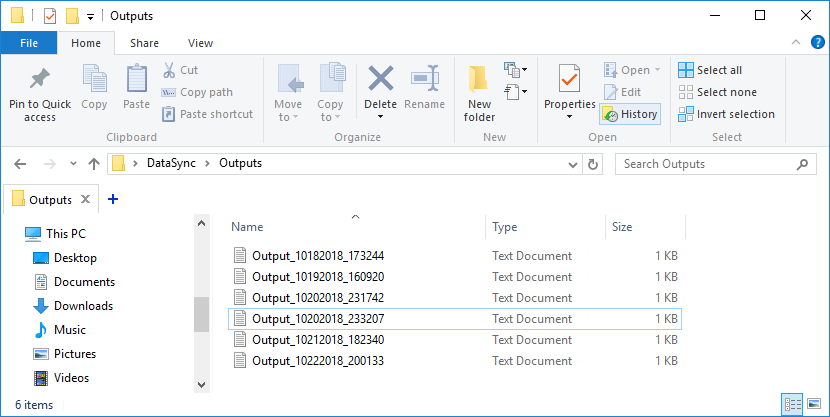

Once everything is up and running, the output summary can be reviewed anytime. In this example, the location of output files is defined by the $outsLoc variable, so the output files will be saved to $rootFolder\$outsLoc — in this particular example, DataSync\Outputs:

If an error occurs when the script is being executed, an error message will be displayed to provide more information about the potential cause of this error. Additionally, a DataOutput_error.txt file with details of the error will be created.

Here’s the script in its entirety:

xxxxxxxxxx

[expand title =”Full script“]

#checks if the Outputs folder exists. If it doesn’t, the script creates it and returns its full path

function CheckAndCreateFolder($rootFolder, [switch]$Outputs)

{

$location = $rootFolder

#set the location based on the used switch

if($Outputs -eq $true)

{

$location += "\Outputs"

}

#create the folder if it doesn't exist and return its path

if(-not (Test-Path $location))

{ mkdir $location -Force:$true -Confirm:$false | Out-Null }

return $location

}

#set the root folder

$rootFolder = "D:\DataSync\"

#set the location of output files

$outsLoc = CheckAndCreateFolder $rootFolder -Outputs

#define the tool’s location, date stamp variable and the tool’s parameters

$toolLocation = "C:\Program Files\Devart\dbForge Studio for MySQL\dbforgemysql.com"

$dateStamp = (Get-Date -Format "Mmddyyyy_HHMMss")

#output log file path

$logPath = "$outsLoc\DataOutput_$dateStamp.txt"

$Params = "/datacompare /compfile:""D:\DataSync\Project\ALLA1vsALLA2.dcomp"" /log:""$logPath"""

$sync = " /sync"

#initiate the comparison of data sources

(Invoke-Expression ("& `"" + $toolLocation +"`" " +$Params))

$returnCode = $LASTEXITCODE

$message = ""

if ($returnCode -notin (100, 101))

{ #an error is encountered

$logPath = "$outsLoc\DataOutput_error.txt"

$message >> $logPath

clear-content $logPath

$message = "`r`n $returnCode - An error is encountered"

#output file is opened when an error is encountered

Invoke-Item "$logPath"

}

else{

if ($returnCode -eq 101)

{

clear-content $logPath

(Invoke-Expression ("& `"" + $toolLocation +"`" " +$Params+$sync))

$returnCode = $LASTEXITCODE

#schema changes are detected

}

if($returnCode -eq 0)

{

$message = "`r`n $returnCode - Schema changes were successfully synchronized"

}

else

{

#there are no schema changes

if($returnCode -eq 100)

{

$message = "`r`n $returnCode - There are no schema changes. Job aborted"

}

}

}

$message >> $logPath

[/expand]

Thank you for reading.

Published at DZone with permission of Jordan Sanders. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments