Use Shared Packages From an Azure DevOps Feed in a Maven Project

We run through a tutorial on how to create an Azure DevOps Artifacts feed, connect a Maven project to it, and build and push changes to the feed.

Introduction

While building multiple apps, you will come across reusable code. This common code can be packaged, versioned, and hosted so that it can be reused within the organization.

Azure DevOps Artifacts is a service in the Azure DevOps toolset that lets organizations create libraries and share them securely within the organization.

In this post, we will cover how to create a library and how to share it securely within the organization.

The following are the key points we'll discuss:

- Create and build a pipeline that publishes the package to Azure Artifacts.

- Connect an application to your Artifacts Maven feed to consume the library.

- Build and push changes to the library and consume the newer version from your application.

The demo library and application are built with Scala and Maven.

Setting Up a Private Feed

Let us start by creating an organization-scoped feed. Azure Artifacts supports Maven, npm, NuGet, and Python packages, among others.

In this demo, we will create a private feed for Maven packages.

Follow the steps below:

1. Log in to the Azure DevOps portal and make sure that you have an organization and a project.

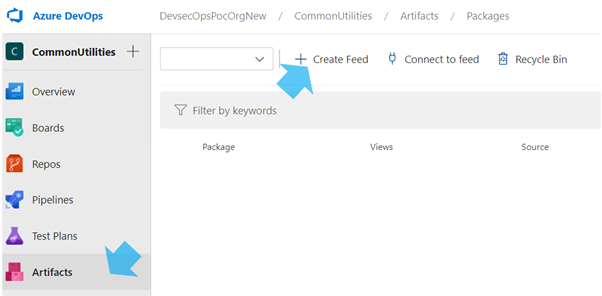

Select the Artifacts menu and click Create feed.

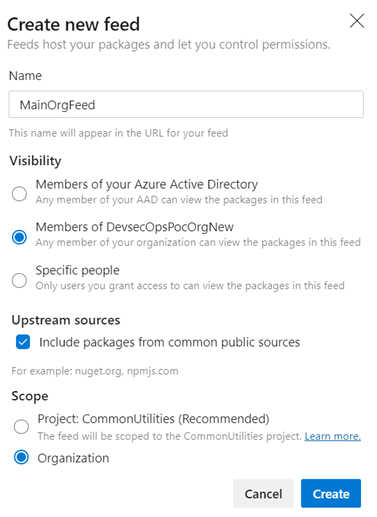

2. Enter a name for the new feed, choose its visibility, and select Organization as the scope. Click Create.
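Once the feed exists, the Connect to feed dialog shows the Maven URL that clients use. As an illustration of how that URL is usually structured, here is a minimal Scala sketch. It assumes the `https://pkgs.dev.azure.com/...` layout that Azure Artifacts typically displays, in which an organization-scoped feed (as chosen in step 2) has no project segment. The helper `mavenFeedUrl` and the names `my-org`, `my-project`, and `shared-libs` are hypothetical, and names are assumed to be URL-safe; always copy the real URL from the dialog.

```scala
object FeedUrl {
  // Hypothetical helper, assuming the usual Azure Artifacts Maven URL layout:
  //   https://pkgs.dev.azure.com/<org>[/<project>]/_packaging/<feed>/maven/v1
  // Names are assumed to be URL-safe (no spaces or special characters).
  def mavenFeedUrl(organization: String, feed: String, project: Option[String] = None): String = {
    // Organization-scoped feeds have no project segment; project-scoped feeds do
    val scope = project.fold(organization)(p => s"$organization/$p")
    s"https://pkgs.dev.azure.com/$scope/_packaging/$feed/maven/v1"
  }

  def main(args: Array[String]): Unit = {
    // Placeholder names for illustration only
    println(mavenFeedUrl("my-org", "shared-libs"))                     // organization scope
    println(mavenFeedUrl("my-org", "shared-libs", Some("my-project"))) // project scope
  }
}
```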

Integrate the Artifacts Feed With a Build Pipeline

1. Create a Maven project and check it in to an Azure DevOps repository.

(Sample Scala Maven project)

```scala
package org.util.spark

import org.apache.spark.sql.Column
import org.apache.spark.sql.functions.{regexp_replace, to_date}

object DateUtils extends Serializable {

  // Removes every character that is not an ASCII letter or digit,
  // e.g. "2021-04-05" becomes "20210405"
  def cleanColumn(inputCol: Column): Column = {
    regexp_replace(inputCol, "[^0-9A-Za-z]", "")
  }
}
```

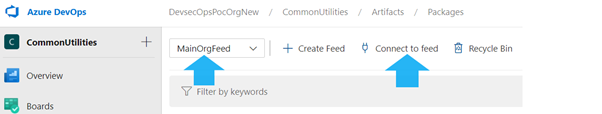

2. From Artifacts, select the feed created in the previous section and click Connect to feed.

3. Select Maven from the menu and update both the `<repositories>` and `<distributionManagement>` sections of the project's pom.xml with the snippets the dialog provides.

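4. Create a build pipeline and add the following input to the Maven task in the azure-pipelines.yml file:

```yaml
# Input of the Maven task: authenticates to Azure Artifacts feeds referenced in pom.xml
mavenAuthenticateFeed: true
```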

5. Run the build pipeline with the `deploy` goal to publish the library to the feed.
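The pom.xml snippets from step 3 are not reproduced above. As a rough sketch of their shape, the hypothetical Scala helper below renders both sections for a given feed id and URL, following the standard Maven POM layout (both sections sit directly inside `<project>`). `PomFeedSections`, `shared-libs`, and the URL are placeholders, not values from the dialog. The design point worth noting is that both sections reuse one `<id>`, so a single set of credentials, supplied by `mavenAuthenticateFeed` in the pipeline or by settings.xml on a local machine, covers both resolving and deploying.

```scala
object PomFeedSections {
  // Hypothetical sketch: renders the <repositories> and <distributionManagement>
  // sections for one feed. Both use the same <id>, so one credentials entry
  // (pipeline-provided or from settings.xml) matches resolution and deployment.
  def render(feedId: String, feedUrl: String): String =
    s"""<repositories>
       |  <repository>
       |    <id>$feedId</id>
       |    <url>$feedUrl</url>
       |    <releases><enabled>true</enabled></releases>
       |    <snapshots><enabled>true</enabled></snapshots>
       |  </repository>
       |</repositories>
       |<distributionManagement>
       |  <repository>
       |    <id>$feedId</id>
       |    <url>$feedUrl</url>
       |  </repository>
       |</distributionManagement>""".stripMargin

  def main(args: Array[String]): Unit =
    // Placeholder feed id and URL; copy the real values from "Connect to feed"
    println(render("shared-libs", "https://pkgs.dev.azure.com/my-org/_packaging/shared-libs/maven/v1"))
}
```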

Connect to the Feed and Consume the Package on Your Local Machine

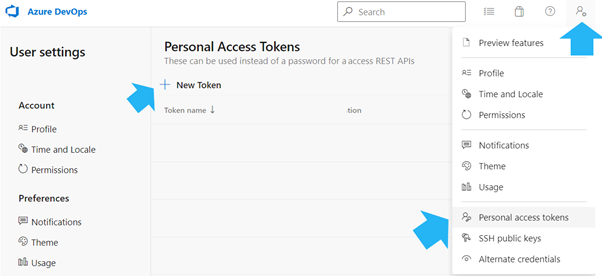

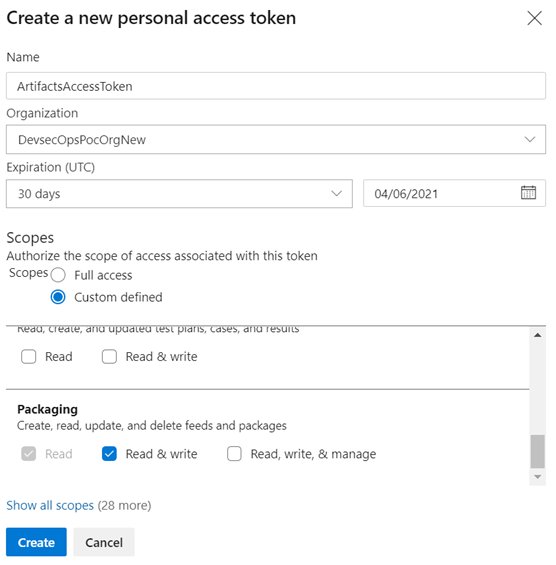

1. Create a personal access token (PAT) to access Azure DevOps Services from your local machine.

2. Open the Personal access tokens page, as shown below.

3. Create a personal access token with the Packaging (Read & write) scope.

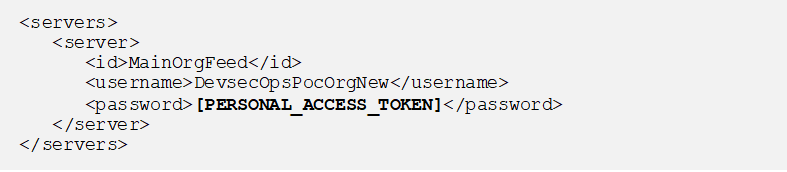

4. Maven reads feed credentials from settings.xml ("%USERPROFILE%/.m2/settings.xml" on Windows, "~/.m2/settings.xml" on Linux and macOS). Add a `<server>` entry under `<servers>` whose `<id>` matches the repository `<id>` in the project's pom.xml, and set its `<password>` to the token generated above.

5. Create a Maven project to test the feed.

(Sample Scala Maven project)

```scala
package org.example

import org.apache.spark.sql.SparkSession
import org.util.spark.DateUtils._

object Main extends App {

  // Local Spark session for trying out the library
  val spark = SparkSession.builder().appName("DevOpstest").master("local").getOrCreate()

  import spark.implicits._

  /* Create a sample dataframe */
  val initialDF = Seq((1, "02-03-2021"), (2, "2021-04-05"), (3, "2021-04-25")).toDF("item", "trans_date_str")

  // cleanColumn comes from the library resolved through the Azure Artifacts feed
  val transformedDF = initialDF.withColumn("trans_date_dt", cleanColumn($"trans_date_str"))

  transformedDF.show()

  spark.stop()
}
```

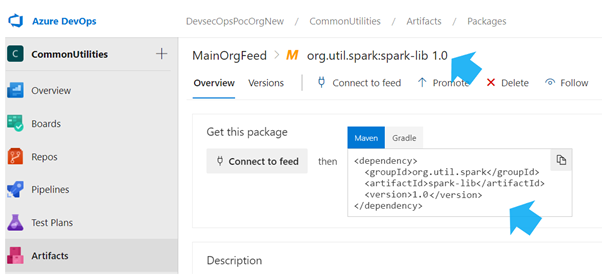

6. Add the library to the application's pom.xml as a `<dependency>`, using the groupId, artifactId, and version that the pipeline published to the feed.

7. Update the `<repositories>` and `<distributionManagement>` sections of the application's pom.xml with the same content used for the library project.
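To preview what `cleanColumn` does to the sample `trans_date_str` values without starting Spark, the Scala sketch below applies the same pattern with `String.replaceAll`. It relies on the assumption that Spark's `regexp_replace` follows Java regular-expression semantics, which matches how Spark implements it; `CleanColumnPreview` is a hypothetical name used only here.

```scala
object CleanColumnPreview {
  // Same character class as DateUtils.cleanColumn:
  // drop anything that is not an ASCII letter or digit
  def clean(s: String): String = s.replaceAll("[^0-9A-Za-z]", "")

  def main(args: Array[String]): Unit = {
    // The trans_date_str values from the sample DataFrame in Main
    Seq("02-03-2021", "2021-04-05", "2021-04-25").foreach { v =>
      println(s"$v -> ${clean(v)}")
    }
  }
}
```

Running it prints `02-03-2021 -> 02032021`, `2021-04-05 -> 20210405`, and `2021-04-25 -> 20210425`. Note that `cleanColumn` only strips separators: it does not reorder fields or convert the values to a date type, so the `trans_date_dt` column still holds strings in mixed field orders.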

Conclusion

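Azure DevOps Artifacts is a convenient place to host and share libraries securely. Access to a feed is governed by the visibility and scope chosen when it is created, and clients authenticate with credentials such as personal access tokens.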

A feed can host multiple versions of the same library. Each consuming project's pom.xml pins the version it uses, which reduces compatibility issues between applications and library versions.

Opinions expressed by DZone contributors are their own.
