Boosting Model Interoperability and Efficiency With the ONNX Framework

ONNX is an open-source framework enabling easy transfer and execution of deep learning models between different platforms, boosting efficiency and performance.

The rapid growth of artificial intelligence and machine learning has led to the development of numerous deep learning frameworks. Each framework has its strengths and weaknesses, making it challenging to deploy models across different platforms. However, the Open Neural Network Exchange (ONNX) framework has emerged as a powerful solution to this problem. This article introduces the ONNX framework, explains its basics, and highlights the benefits of using it.

Understanding the Basics of ONNX

What Is ONNX?

The Open Neural Network Exchange (ONNX) is an open-source framework that enables the interchange of models between different deep learning frameworks. It provides a standardized format for representing trained models, allowing them to be transferred and executed on various platforms. ONNX allows you to train a model in one framework and deploy it with another, replacing time-consuming and error-prone framework-to-framework conversions with a single export to a common format.

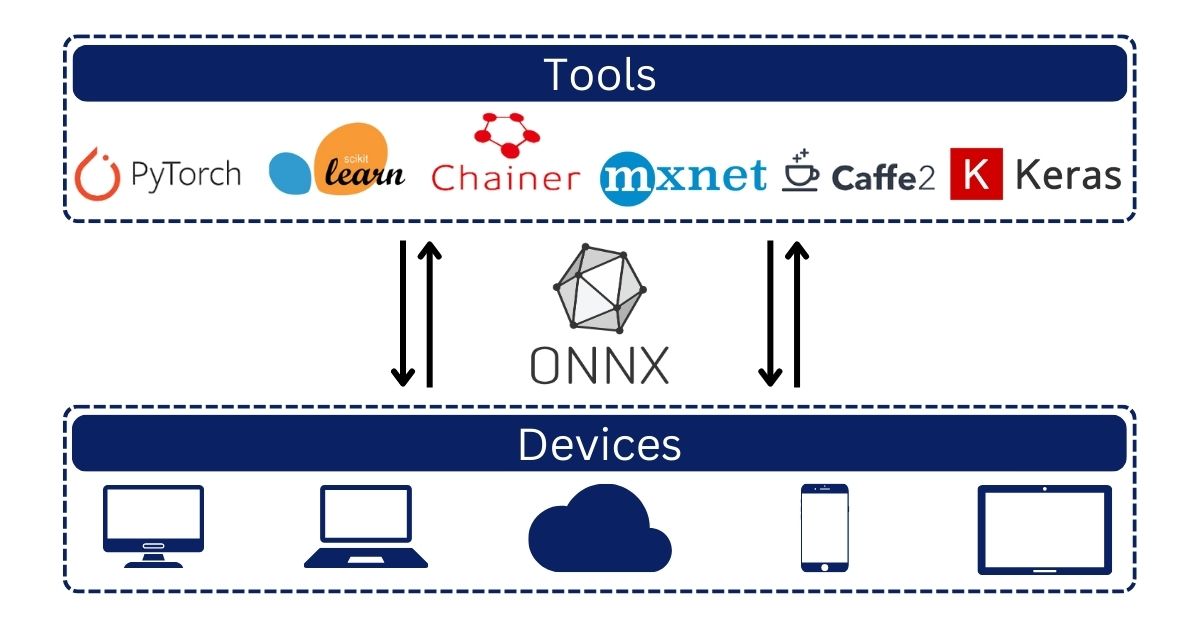

ONNX framework interoperability

Why Use ONNX?

There are several significant benefits of using the ONNX framework. First and foremost, it enhances model interoperability. By providing a standardized model format, ONNX enables seamless integration between different deep learning frameworks, such as PyTorch, TensorFlow, Keras, and Caffe. This interoperability allows researchers and developers to leverage the strengths of multiple frameworks and choose the one that best suits their specific needs.

Advantages of Using the ONNX Framework

ONNX support and capabilities across platforms: ONNX models can be deployed on a variety of devices and platforms, including CPUs, GPUs, and edge devices. This flexibility lets you run the same model across a range of hardware, from high-performance servers to resource-constrained edge devices.

Simplified deployment: ONNX simplifies the deployment process by replacing framework-specific conversions with a single export step. With ONNX, you can train your models in your preferred deep learning framework and then export them directly to ONNX format. This saves time and reduces the risk of introducing errors through a chain of conversions.

Efficient execution: Optimized runtimes for ONNX models, such as ONNX Runtime, provide fast and efficient inference across different platforms. This means that your models can deliver good performance even on devices with limited computational resources. By using ONNX, you can improve the efficiency of your deep learning models, often with little or no loss of accuracy.

Enhancing Model Interoperability With ONNX

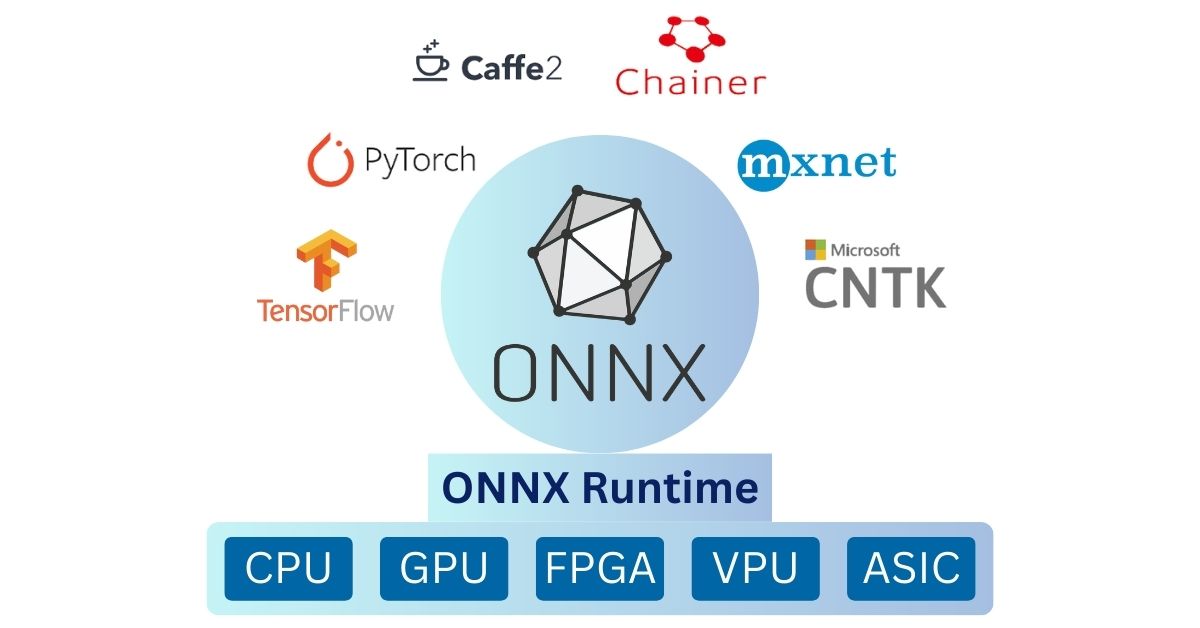

ONNX goes beyond just enabling model interoperability. It also provides a rich ecosystem of tools and libraries that further enhance the interoperability between different deep-learning frameworks. For example, ONNX Runtime is a high-performance inference engine that allows you to execute ONNX models on a wide range of platforms seamlessly. It provides support for a variety of hardware accelerators, such as GPUs and FPGAs, enabling you to unlock the full potential of your models.

ONNX Runtime

Moreover, ONNX also supports model optimization and quantization techniques. These techniques can help reduce the size of your models, making them more efficient to deploy and run on resource-constrained devices. By leveraging the optimization and quantization capabilities of ONNX, you can ensure that your models are not only interoperable but also highly efficient.

Improving Efficiency With the ONNX Framework

Efficiency is a critical factor in deep learning, especially when dealing with large-scale models and vast amounts of data. The ONNX framework offers several features that can help improve the efficiency of models and streamline the development process.

One such feature is the ONNX Model Zoo, which provides a collection of pre-trained models that anyone can use as a starting point for projects. These models cover a wide range of domains and tasks, including image classification, object detection, and natural language processing. By leveraging pre-trained models from the ONNX Model Zoo, you save time and computational resources and can focus on fine-tuning the models for your specific needs.

Another efficiency-enhancing feature of ONNX is its support for model compression techniques. Model compression aims to reduce the size of deep learning models without significant loss in performance. ONNX provides tools and libraries that enable you to apply compression techniques, such as pruning, quantization, and knowledge distillation, to your models. By compressing the models with ONNX, you can achieve smaller model sizes, faster inference times, and reduced memory requirements.
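To illustrate why quantization shrinks a model, the following sketch applies simple 8-bit affine quantization to a random weight matrix using NumPy only. It is a conceptual illustration of the arithmetic, not the implementation used by ONNX or ONNX Runtime; the function names and the 256x256 weight matrix are assumptions made for the example.

```python
import numpy as np


def quantize_uint8(weights):
    """Affine (asymmetric) quantization of a float32 array to uint8."""
    # Include 0.0 in the range so that zero is exactly representable.
    w_min = min(float(weights.min()), 0.0)
    w_max = max(float(weights.max()), 0.0)
    scale = (w_max - w_min) / 255.0
    if scale == 0.0:
        scale = 1.0  # all-zero input: any scale works
    zero_point = int(round(-w_min / scale))
    q = np.clip(np.round(weights / scale) + zero_point, 0, 255).astype(np.uint8)
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Map uint8 values back to approximate float32 values."""
    return (q.astype(np.float32) - zero_point) * scale


rng = np.random.default_rng(0)
weights = rng.normal(size=(256, 256)).astype(np.float32)

q, scale, zero_point = quantize_uint8(weights)
restored = dequantize(q, scale, zero_point)
max_error = float(np.abs(weights - restored).max())

print(weights.nbytes, q.nbytes)  # 262144 65536
print(max_error <= scale)
```

Each weight drops from four bytes to one, and the reconstruction error stays within one quantization step. Whether that error is acceptable depends on the model, so accuracy should be re-checked after quantizing.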

Successful Implementations of ONNX

To understand the real-world impact of the ONNX framework, let's look at some use cases where it has been successfully implemented.

Facebook AI Research used ONNX to improve the efficiency of their deep learning models for image recognition. By converting their models to the ONNX format, they were able to deploy them on a range of platforms, including mobile devices and web browsers. This improved the accessibility of their models and allowed them to reach a wider audience.

Microsoft utilized ONNX to optimize their machine learning models for speech recognition. By leveraging the ONNX Runtime, they achieved faster and more efficient inference on various platforms, enabling real-time speech-to-text transcription in their applications.

These use cases demonstrate the versatility and effectiveness of the ONNX framework in real-world scenarios, highlighting its ability to enhance model interoperability and efficiency.

Challenges and Limitations of the ONNX Framework

While the ONNX framework offers numerous benefits, it also has its challenges and limitations. One of the main challenges is the discrepancy in supported operators and layers across different deep-learning frameworks. Although ONNX aims to provide a comprehensive set of operators, there may still be cases where certain operators are not fully supported or behave differently across frameworks. This can lead to compatibility issues when transferring models between frameworks.

Another limitation of the ONNX framework is its limited support for dynamic neural networks. ONNX primarily represents models as static computational graphs, which means that models whose structure changes at run time, such as some Recurrent Neural Networks (RNNs) with data-dependent loops, or models with varying input sizes, need extra care at export time and may not be fully supported.

It is important to carefully consider these challenges and limitations when deciding to adopt the ONNX framework for deep learning projects. However, it is worth noting that the ONNX community is actively working towards addressing these issues and improving the framework's capabilities.

Future Trends and Developments in ONNX

The ONNX framework is continuously evolving, with ongoing developments and future trends that promise to enhance its capabilities further. One such development is the integration of ONNX with other emerging technologies, such as federated learning and edge computing. This integration could enable efficient and privacy-preserving model exchange and execution in distributed environments.

Furthermore, the ONNX community is actively working on expanding the set of supported operators and layers, as well as improving the compatibility between different deep learning frameworks. These efforts will further enhance the interoperability and ease of using the ONNX framework.

To summarize, the ONNX framework provides a powerful solution to the challenges of model interoperability and efficiency in deep learning. By offering a standardized format for representing models and a rich ecosystem of tools and libraries, ONNX enables seamless integration between different deep learning frameworks and platforms. Its support for model optimization and quantization techniques further enhances the efficiency of deep learning models.

While the ONNX framework has its challenges and limitations, its continuous development and future trends promise to address these issues and expand its capabilities. With the increasing adoption of ONNX in both research and industry, this framework is playing a crucial role in advancing the field of deep learning.

For those seeking to enhance the interoperability and efficiency of their deep learning models, exploring the ONNX framework is highly advisable. With its wide support, powerful capabilities, and vibrant community, ONNX is poised to revolutionize the development and deployment of deep learning models for organizations.

Published at DZone with permission of Rakesh Nakod. See the original article here.
