Build a Streamlit App With LangChain and Amazon Bedrock

In this article, learn how to use ElastiCache Serverless Redis for chat history, deploy to EKS, and manage permissions with EKS Pod Identity.

It’s one thing to build powerful machine-learning models and another to make them useful. A big part of that is building applications that expose a model's features to end users. Popular examples include ChatGPT and Midjourney.

Streamlit is an open-source Python library that makes it easy to build web applications for machine learning and data science. It has a set of rich APIs for visual components, including several chat elements, making it quite convenient to build conversational agents or chatbots, especially when combined with LLMs (Large Language Models).

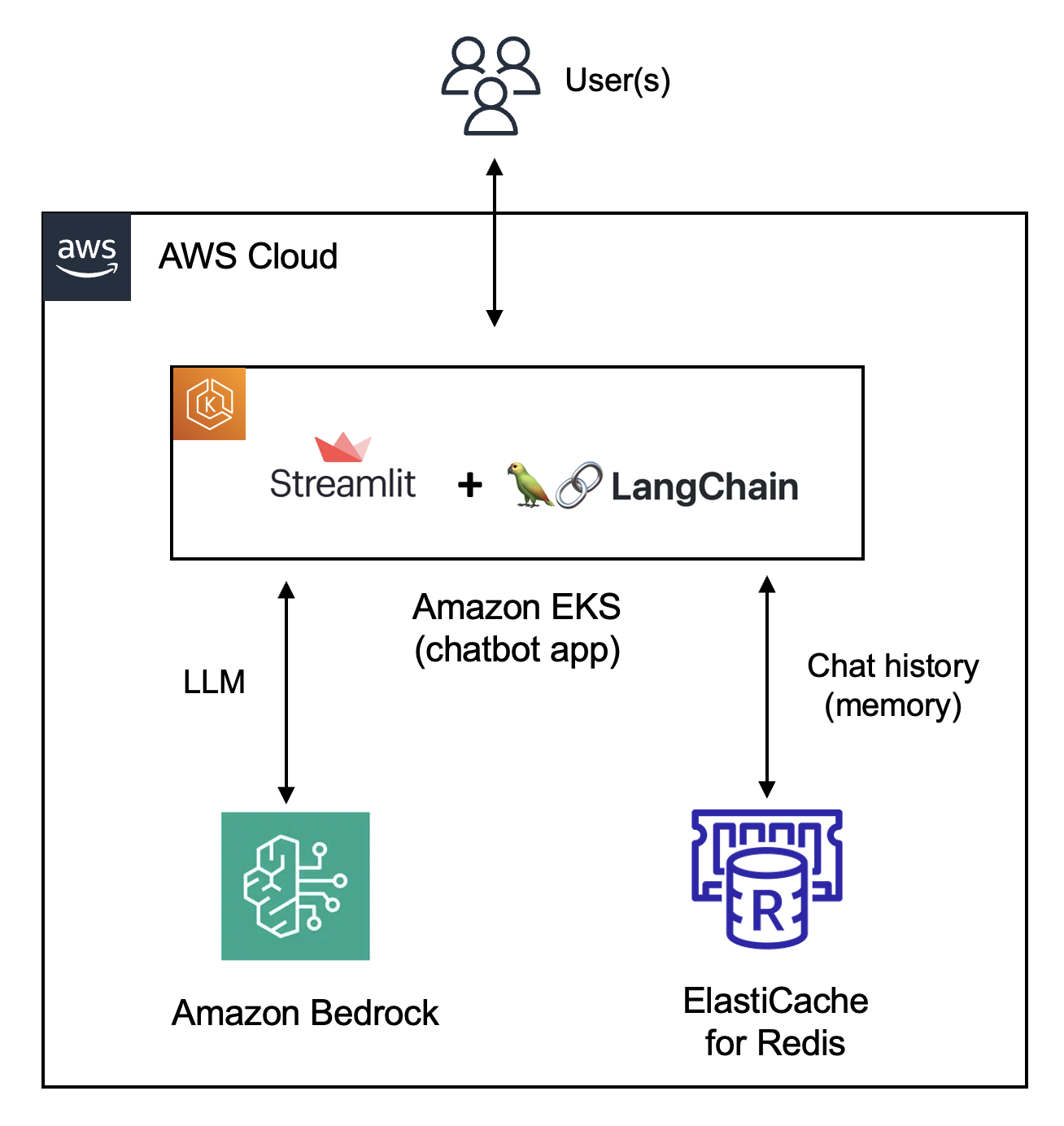

And that’s the example for this blog post as well — a Streamlit-based chatbot deployed to a Kubernetes cluster on Amazon EKS. But that’s not all!

We will use Streamlit with LangChain, which is a framework for developing applications powered by language models. The nice thing about LangChain is that it supports many platforms and LLMs, including Amazon Bedrock (which will be used for our application).

A key part of chat applications is the ability to refer to historical conversation(s), at least within a certain time frame (window). In LangChain, this is referred to as Memory. Just like LLMs, you can plug in different systems to work as the memory component of a LangChain application. This includes Redis, which is a great choice for this use case since it’s a high-performance in-memory database with flexible data structures. Redis is already a preferred choice for real-time applications (including chat), often combined with Pub/Sub and WebSocket. This application will use Amazon ElastiCache Serverless for Redis, an option that simplifies cache management and scales instantly. This was announced at re:Invent 2023, so let’s explore while it’s still fresh!
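
To make the idea of a conversation window concrete, here is a minimal sketch in plain Python that does not depend on LangChain. The class name WindowMemory and the max_messages parameter are hypothetical and exist only for illustration; LangChain's memory components have their own API.

```python
from collections import deque


class WindowMemory:
    """Keeps only the most recent messages (hypothetical helper, not a LangChain class)."""

    def __init__(self, max_messages: int = 4) -> None:
        # A deque with maxlen drops the oldest entry automatically once it is full.
        self._messages = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self._messages.append((role, content))

    def as_context(self) -> str:
        """Render the window as text that could be sent along with the next prompt."""
        return "\n".join(f"{role}: {content}" for role, content in self._messages)


memory = WindowMemory(max_messages=2)
memory.add("human", "Hi, my name is Abhishek")
memory.add("ai", "Nice to meet you, Abhishek!")
memory.add("human", "Do you remember my name?")
print(memory.as_context())
```

With a window of two messages, the first message has already been dropped by the time the third one is added, which is why an external store such as Redis is useful when you want to keep more history around.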

To be honest, the application can be deployed on other compute options such as Amazon ECS, but since it needs to invoke Amazon Bedrock, it’s a good opportunity to also cover how to use EKS Pod Identity (also announced at re:Invent 2023!).

The GitHub repository for the app: https://github.com/abhirockzz/streamlit-langchain-chatbot-bedrock-redis-memory

Here is a simplified, high-level diagram:

Let’s go!!

Basic Setup

- Amazon Bedrock: Use the instructions in this blog post to set up and configure Amazon Bedrock.

- EKS cluster: Start by creating an EKS cluster. Point kubectl to the new cluster using aws eks update-kubeconfig --region <cluster_region> --name <cluster_name>

- Create an IAM role: Use the trust policy and IAM permissions from the application GitHub repository.

- EKS Pod Identity Agent configuration: Set up the EKS Pod Identity Agent and associate EKS Pod Identity with the IAM role you created.

- ElastiCache Serverless for Redis: Create a Serverless Redis cache. Make sure it shares the same subnets as the EKS cluster. Once the cluster creation is complete, update the ElastiCache security group to add an inbound rule (TCP port 6379) to allow the application on the EKS cluster to access the ElastiCache cluster.
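
For orientation, here is a rough sketch of what the IAM role for EKS Pod Identity typically looks like, built as Python dictionaries and printed as JSON. This is an assumption about the general shape, not a copy of the repository's files: the trust policy uses the pods.eks.amazonaws.com service principal that EKS Pod Identity relies on, while the permissions policy (including the wildcard Resource) is only an illustrative guess at what invoking Bedrock needs. The files in the GitHub repository remain the source of truth.

```python
import json

# Trust policy: lets the EKS Pod Identity service assume the role on behalf of pods.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "pods.eks.amazonaws.com"},
            "Action": ["sts:AssumeRole", "sts:TagSession"],
        }
    ],
}

# Assumed permissions policy: allow model invocation on Amazon Bedrock.
# "Resource": "*" is for demonstration; narrow it to specific model ARNs in practice.
permissions_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "bedrock:InvokeModel",
                "bedrock:InvokeModelWithResponseStream",
            ],
            "Resource": "*",
        }
    ],
}

print(json.dumps(trust_policy, indent=2))
print(json.dumps(permissions_policy, indent=2))
```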

Push the Docker Image to ECR and Deploy the App to EKS

Clone the GitHub repository:

git clone https://github.com/abhirockzz/streamlit-langchain-chatbot-bedrock-redis-memory

cd streamlit-langchain-chatbot-bedrock-redis-memory

Create an ECR repository:

export REPO_NAME=streamlit-chat

export REGION=<AWS region>

ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)

aws ecr get-login-password --region $REGION | docker login --username AWS --password-stdin $ACCOUNT_ID.dkr.ecr.$REGION.amazonaws.com

aws ecr create-repository --repository-name $REPO_NAME

Create the Docker image and push it to ECR:

docker build -t $REPO_NAME .

docker tag $REPO_NAME:latest $ACCOUNT_ID.dkr.ecr.$REGION.amazonaws.com/$REPO_NAME:latest

docker push $ACCOUNT_ID.dkr.ecr.$REGION.amazonaws.com/$REPO_NAME:latest

Deploy Streamlit Chatbot to EKS

Update the app.yaml file:

- Enter the ECR docker image info

- In the Redis connection string format, enter the ElastiCache username and password along with the endpoint.
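
The two values that go into app.yaml can be assembled as in the sketch below. The helper names, the sample account ID, the credentials, and the endpoint are all placeholders made up for illustration. The rediss:// scheme is an assumption based on ElastiCache Serverless encrypting connections in transit; check the format that app.yaml in the repository actually expects.

```python
from urllib.parse import quote


def ecr_image_uri(account_id: str, region: str, repo_name: str, tag: str = "latest") -> str:
    """Build the image reference in the same form used by the docker push command."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repo_name}:{tag}"


def redis_url(username: str, password: str, endpoint: str, port: int = 6379) -> str:
    """Build a TLS Redis URL; credentials are percent-encoded so '@' or '/' are safe."""
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"rediss://{user}:{secret}@{endpoint}:{port}"


# Placeholder values only; substitute your own account, region, and cache details.
print(ecr_image_uri("123456789012", "us-east-1", "streamlit-chat"))
print(redis_url("app-user", "p@ss/word", "example.cache.amazonaws.com"))
```

Percent-encoding matters mostly for generated passwords, which often contain characters that would otherwise be read as URL delimiters.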

Deploy the application:

kubectl apply -f app.yaml

To check logs: kubectl logs -f -l=app=streamlit-chat

Start a Conversation!

To access the application:

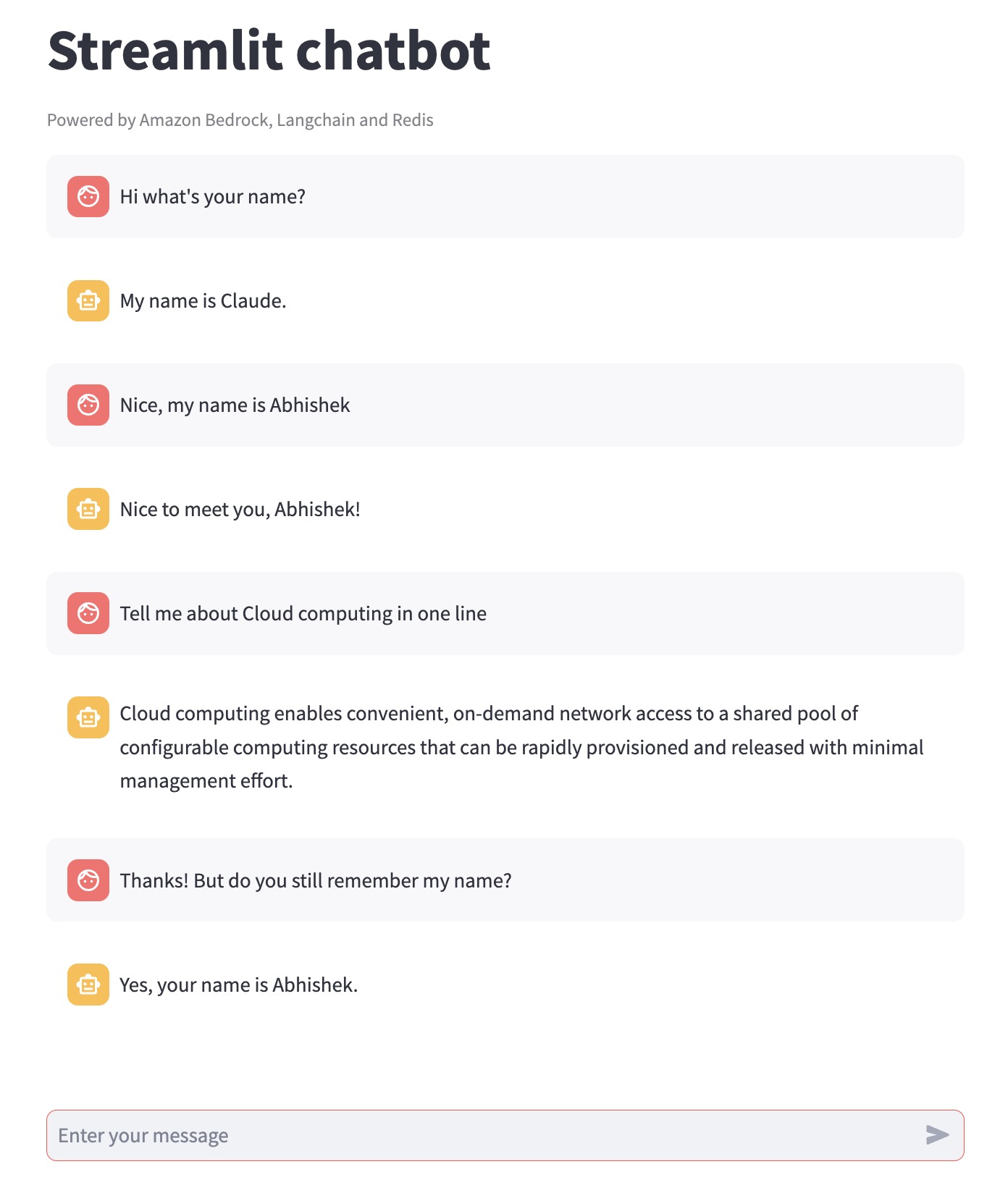

kubectl port-forward deployment/streamlit-chat 8080:8501

Navigate to http://localhost:8080 using your browser and start chatting! The application uses the Anthropic Claude model on Amazon Bedrock as the LLM and an ElastiCache Serverless instance to persist the chat messages exchanged during a particular session.

Behind the Scenes in ElastiCache Redis

To better understand what’s going on, you can use redis-cli to access the ElastiCache Redis instance from EC2 (or Cloud9) and inspect the data structure used by LangChain for storing chat history.

keys *

Don’t run keys * in a production Redis instance — this is just for demonstration purposes.

You should see a key similar to this — "message_store:d5f8c546-71cd-4c26-bafb-73af13a764a5" (the name will differ in your case).

Check its type: type message_store:d5f8c546-71cd-4c26-bafb-73af13a764a5 (you will notice that it's a Redis List).

To check the list contents, use the LRANGE command:

LRANGE message_store:d5f8c546-71cd-4c26-bafb-73af13a764a5 0 10

You should see a similar output:

1) "{\"type\": \"ai\", \"data\": {\"content\": \" Yes, your name is Abhishek.\", \"additional_kwargs\": {}, \"type\": \"ai\", \"example\": false}}"

2) "{\"type\": \"human\", \"data\": {\"content\": \"Thanks! But do you still remember my name?\", \"additional_kwargs\": {}, \"type\": \"human\", \"example\": false}}"

3) "{\"type\": \"ai\", \"data\": {\"content\": \" Cloud computing enables convenient, on-demand network access to a shared pool of configurable computing resources that can be rapidly provisioned and released with minimal management effort.\", \"additional_kwargs\": {}, \"type\": \"ai\", \"example\": false}}"

4) "{\"type\": \"human\", \"data\": {\"content\": \"Tell me about Cloud computing in one line\", \"additional_kwargs\": {}, \"type\": \"human\", \"example\": false}}"

5) "{\"type\": \"ai\", \"data\": {\"content\": \" Nice to meet you, Abhishek!\", \"additional_kwargs\": {}, \"type\": \"ai\", \"example\": false}}"

6) "{\"type\": \"human\", \"data\": {\"content\": \"Nice, my name is Abhishek\", \"additional_kwargs\": {}, \"type\": \"human\", \"example\": false}}"

7) "{\"type\": \"ai\", \"data\": {\"content\": \" My name is Claude.\", \"additional_kwargs\": {}, \"type\": \"ai\", \"example\": false}}"

8) "{\"type\": \"human\", \"data\": {\"content\": \"Hi what's your name?\", \"additional_kwargs\": {}, \"type\": \"human\", \"example\": false}}"

Basically, the Redis memory component for LangChain persists the messages as a List and passes its contents as additional context with every message.
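
To see how these entries map back to a conversation, here is a small sketch that decodes two of the entries from the LRANGE output (items 5 and 6) and puts them in reading order. The function name to_transcript is hypothetical, and this only illustrates the stored format; it is not LangChain's own decoding code.

```python
import json

# Items 5 and 6 from the LRANGE output, in the order Redis returned them (newest first).
raw_entries = [
    '{"type": "ai", "data": {"content": " Nice to meet you, Abhishek!", '
    '"additional_kwargs": {}, "type": "ai", "example": false}}',
    '{"type": "human", "data": {"content": "Nice, my name is Abhishek", '
    '"additional_kwargs": {}, "type": "human", "example": false}}',
]


def to_transcript(entries: list[str]) -> list[tuple[str, str]]:
    """Decode the JSON entries and return (role, text) pairs, oldest first."""
    decoded = [json.loads(entry) for entry in entries]
    # The list holds the newest message first, so reverse it for reading order.
    return [(msg["type"], msg["data"]["content"].strip()) for msg in reversed(decoded)]


for role, text in to_transcript(raw_entries):
    print(f"{role}: {text}")
```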

Conclusion

To be completely honest, I am not a Python developer (I mostly use Go, Java, or sometimes Rust), but I found Streamlit relatively easy to start with, except for some of the session-related nuances.

I figured out that on every user interaction (each new message), the entire Streamlit script is executed again from top to bottom (this was a little unexpected coming from a backend dev background). That’s when I moved the chat ID (a unique session ID for each conversation) to the Streamlit session state, and things worked.

The chat ID is also used as part of the name of the Redis List that stores the conversation (message_store:<session_id>), so each Redis List is mapped to a Streamlit session. I also found the Streamlit component-based approach to be quite intuitive and pretty extensive as well.
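
The session-state pattern can be sketched roughly as follows. The function names and the "chat_id" key are hypothetical; a plain dict stands in for st.session_state here, which supports the same membership checks and item assignment, so the snippet runs without Streamlit installed.

```python
import uuid
from collections.abc import MutableMapping


def get_chat_id(state: MutableMapping) -> str:
    """Return the chat ID held in state, generating it only on the first run."""
    if "chat_id" not in state:
        state["chat_id"] = str(uuid.uuid4())
    return state["chat_id"]


def redis_key(chat_id: str, prefix: str = "message_store:") -> str:
    """Name of the Redis List for a conversation, matching the key format seen earlier."""
    return f"{prefix}{chat_id}"


# In the app this would be st.session_state; a dict is used here for illustration.
state = {}
first_run = get_chat_id(state)
second_run = get_chat_id(state)  # simulates the script being executed again

print(first_run == second_run)
print(redis_key(first_run))
```

Without the state check, a fresh UUID would be generated on every rerun and each message would land in a different Redis List, which matches the behavior that made the session state necessary.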

I was wondering if there are similar solutions in Go. If you know of something, do let me know.

Happy building!

Published at DZone with permission of Abhishek Gupta, DZone MVB. See the original article here.
