Building Custom Tools With Model Context Protocol

Learn how to build MCP servers to extend AI capabilities. Create tools that AI models can seamlessly integrate, demonstrated through an arXiv paper search implementation.


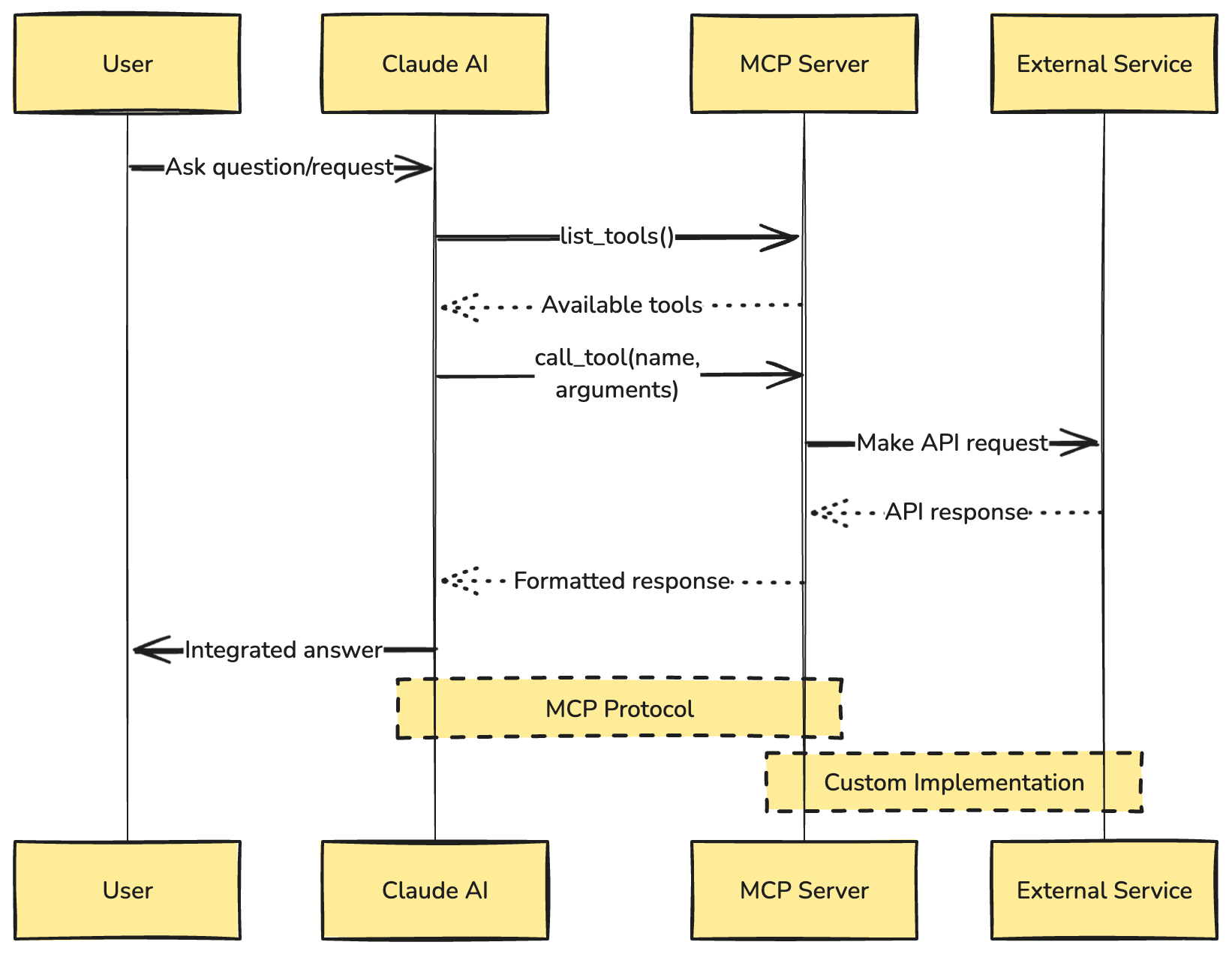

Model Context Protocol (MCP) is becoming increasingly important in AI development because it standardizes how AI models connect to external tools. In this guide, we'll build an MCP server in Python that exposes a custom tool, using an arXiv paper search as the running example.

What Is Model Context Protocol?

MCP is an open protocol that lets AI applications interact with external tools and services in a standardized way. It enables AI assistants like Claude to execute custom functions, process data, and call external services through a consistent interface. Under the hood, clients and servers exchange JSON-RPC 2.0 messages over a transport such as standard input/output (stdio).

Getting Started With MCP Server Development

To begin creating an MCP server, you'll need a basic understanding of Python (3.10 or later, which the MCP Python SDK requires) and async programming. Let's walk through setting up and implementing a custom MCP server.

Setting Up Your Project

The easiest way to start is by using the official MCP server creation tool. You have two options:

```bash
# Using uvx (recommended)
uvx create-mcp-server

# Or using pip
pip install create-mcp-server
create-mcp-server
```

This creates a basic project structure:

```
my-server/
├── README.md
├── pyproject.toml
└── src/
    └── my_server/
        ├── __init__.py
        ├── __main__.py
        └── server.py
```

Implementing Your First MCP Server

Let's create a practical example: an arXiv paper search tool that AI models can use to fetch academic papers. Here's how to implement it:

```python
import asyncio

import arxiv
import mcp.server.stdio
import mcp.types as types
from mcp.server import NotificationOptions, Server
from mcp.server.models import InitializationOptions

server = Server("mcp-scholarly")
client = arxiv.Client()


@server.list_tools()
async def handle_list_tools() -> list[types.Tool]:
    """
    List available tools.
    Each tool specifies its arguments using JSON Schema validation.
    """
    return [
        types.Tool(
            name="search-arxiv",
            description="Search arxiv for articles related to the given keyword.",
            inputSchema={
                "type": "object",
                "properties": {
                    "keyword": {"type": "string"},
                },
                "required": ["keyword"],
            },
        )
    ]


@server.call_tool()
async def handle_call_tool(
    name: str, arguments: dict | None
) -> list[types.TextContent | types.ImageContent | types.EmbeddedResource]:
    """
    Handle tool execution requests.
    Tools can modify server state and notify clients of changes.
    """
    if name != "search-arxiv":
        raise ValueError(f"Unknown tool: {name}")

    if not arguments:
        raise ValueError("Missing arguments")

    keyword = arguments.get("keyword")
    if not keyword:
        raise ValueError("Missing keyword")

    # Search arXiv papers, newest submissions first
    search = arxiv.Search(
        query=keyword,
        max_results=10,
        sort_by=arxiv.SortCriterion.SubmittedDate,
    )
    # client.results() returns a generator that performs HTTP requests as it
    # is iterated, so the loop below blocks the event loop while it runs.
    results = client.results(search)

    # Format each result as a plain-text block
    formatted_results = []
    for result in results:
        article_data = "\n".join([
            f"Title: {result.title}",
            f"Summary: {result.summary}",
            f"Links: {'||'.join([link.href for link in result.links])}",
            f"PDF URL: {result.pdf_url}",
        ])
        formatted_results.append(article_data)

    return [
        types.TextContent(
            type="text",
            text=f"Search articles for {keyword}:\n"
            + "\n\n\n".join(formatted_results),
        ),
    ]


async def main():
    # Serve over stdin/stdout, the transport used when Claude Desktop launches the server
    async with mcp.server.stdio.stdio_server() as (read_stream, write_stream):
        await server.run(
            read_stream,
            write_stream,
            InitializationOptions(
                server_name="mcp-scholarly",
                server_version="0.1.0",  # placeholder; use your package's version
                capabilities=server.get_capabilities(
                    notification_options=NotificationOptions(),
                    experimental_capabilities={},
                ),
            ),
        )


if __name__ == "__main__":
    asyncio.run(main())
```

Key Components Explained

- Server initialization. `Server("mcp-scholarly")` creates the server with a unique name that identifies your MCP service.
- Tool registration. The `@server.list_tools()` decorator registers the handler that answers tool-discovery requests. It returns each tool's name, description, and a JSON Schema describing its arguments.
- Tool implementation. The `@server.call_tool()` decorator registers the handler that runs when an AI model calls a tool. It receives the tool name and the arguments the model supplied.
- Response formatting. Tools return a list of content items (`TextContent`, `ImageContent`, or `EmbeddedResource`), so a single response can carry text, images, or embedded resources.
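A single `call_tool` handler serves every tool, which is why the example branches on `name`. Once a server offers more than one tool, a name-to-handler registry keeps that branching in one place. The sketch below is a hypothetical helper and not part of the MCP SDK. `tool`, `dispatch`, `TOOL_HANDLERS`, and the `echo` tool are illustrative names.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, Optional

# A tool handler takes the tool's arguments and returns text for the model.
ToolHandler = Callable[[Dict[str, Any]], Awaitable[str]]

# Hypothetical registry mapping tool names to handlers (not an MCP SDK feature).
TOOL_HANDLERS: Dict[str, ToolHandler] = {}


def tool(name: str) -> Callable[[ToolHandler], ToolHandler]:
    """Decorator that records a coroutine function under the given tool name."""
    def register(func: ToolHandler) -> ToolHandler:
        TOOL_HANDLERS[name] = func
        return func
    return register


@tool("echo")
async def echo(arguments: Dict[str, Any]) -> str:
    # Hypothetical example tool: returns its "text" argument unchanged.
    return str(arguments["text"])


async def dispatch(name: str, arguments: Optional[Dict[str, Any]]) -> str:
    """Look up the handler for `name` and run it, mirroring the Unknown tool check above."""
    handler = TOOL_HANDLERS.get(name)
    if handler is None:
        raise ValueError(f"Unknown tool: {name}")
    return await handler(arguments or {})


if __name__ == "__main__":
    print(asyncio.run(dispatch("echo", {"text": "hello"})))
```

Inside `handle_call_tool`, you would `await dispatch(name, arguments)` and wrap the returned string in `types.TextContent`. `handle_list_tools` still has to advertise the same tool names, so keep the two in sync.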

Best Practices for MCP Server Development

- Input validation. Always validate input parameters thoroughly using JSON Schema.

- Error handling. Implement comprehensive error handling to provide meaningful feedback.

- Resource management. Properly manage external resources and connections.

- Documentation. Provide clear descriptions of your tools and their parameters.

- Type safety. Use Python's type hints, checked by a static type checker such as mypy, to catch type errors before runtime.
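The input-validation practice can be made concrete for `search-arxiv`. The schema advertises constraints to the client, but a client may not enforce them. The handler therefore re-checks them and raises errors that tell the model what to fix. `minLength`, `maxLength`, and `additionalProperties` are standard JSON Schema keywords. The 200-character limit and the helper name `validate_search_args` are assumptions for illustration.

```python
from typing import Any, Dict, Optional

MAX_KEYWORD_LENGTH = 200  # hypothetical limit; pick one that suits your tool

# A stricter inputSchema for search-arxiv, using standard JSON Schema keywords.
SEARCH_ARXIV_SCHEMA = {
    "type": "object",
    "properties": {
        "keyword": {"type": "string", "minLength": 1, "maxLength": MAX_KEYWORD_LENGTH},
    },
    "required": ["keyword"],
    "additionalProperties": False,
}


def validate_search_args(arguments: Optional[Dict[str, Any]]) -> str:
    """Return a cleaned keyword, or raise ValueError with a message the model can act on."""
    if not arguments:
        raise ValueError("Missing arguments: expected an object with a 'keyword' string")
    unexpected = set(arguments) - {"keyword"}
    if unexpected:
        raise ValueError(f"Unexpected arguments: {', '.join(sorted(unexpected))}")
    keyword = arguments.get("keyword")
    if not isinstance(keyword, str):
        raise ValueError("'keyword' must be a string")
    keyword = keyword.strip()
    if not keyword:
        raise ValueError("'keyword' must not be empty")
    if len(keyword) > MAX_KEYWORD_LENGTH:
        raise ValueError(f"'keyword' must be at most {MAX_KEYWORD_LENGTH} characters")
    return keyword
```

The schema can replace the `inputSchema` dictionary in `handle_list_tools`, and `keyword = validate_search_args(arguments)` can replace the two manual checks in `handle_call_tool`.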

Testing Your MCP Server

There are two main ways to test your MCP server:

1. Using MCP Inspector

For development and debugging, the MCP Inspector provides an interactive browser-based interface for calling your server's tools and inspecting their responses:

```bash
npx @modelcontextprotocol/inspector uv --directory /your/project/path run your-server-name
```

The Inspector will display a URL that you can open in your browser to begin debugging.

2. Integration With Claude Desktop

To test your MCP server with Claude Desktop:

- Locate your Claude Desktop configuration file:
  - macOS: `~/Library/Application Support/Claude/claude_desktop_config.json`
  - Windows: `%APPDATA%/Claude/claude_desktop_config.json`

- Add your MCP server configuration:

```json
{
  "mcpServers": {
    "mcp-scholarly": {
      "command": "uv",
      "args": [
        "--directory",
        "/path/to/your/mcp-scholarly",
        "run",
        "mcp-scholarly"
      ]
    }
  }
}
```

For servers published to PyPI, `uvx` can fetch and run the package directly, so a simpler configuration is enough:

{

"mcpServers": {

"mcp-scholarly": {

"command": "uvx",

"args": [

"mcp-scholarly"

]

}

}

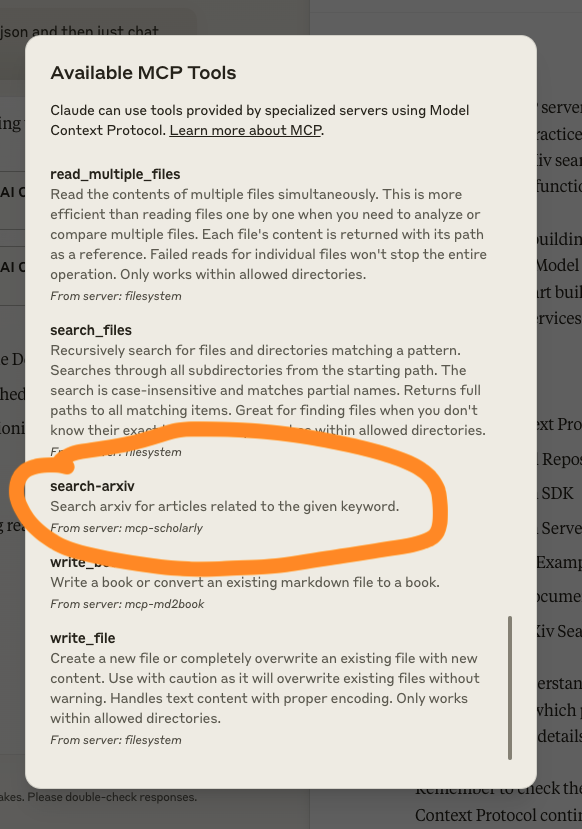

}- Start Claude Desktop — you should now see your tool (e.g., "search-arxiv") available in the tools list:

![search-arxiv is available in the tools list]()
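If you would rather not edit the JSON by hand, a small script can merge the entry into an existing configuration without disturbing other servers. This is a sketch, and the helper names are hypothetical. `claude_config_path` simply encodes the macOS and Windows locations listed above and treats every other platform as macOS. The file is rewritten with two-space indentation, so any custom formatting it had is lost.

```python
import json
import os
import platform
from pathlib import Path
from typing import Any, Dict, List


def claude_config_path() -> Path:
    """Return the Claude Desktop config location for Windows, otherwise the macOS one."""
    if platform.system() == "Windows":
        return Path(os.environ["APPDATA"]) / "Claude" / "claude_desktop_config.json"
    # Assumption: any non-Windows platform uses the macOS location.
    return Path.home() / "Library" / "Application Support" / "Claude" / "claude_desktop_config.json"


def add_mcp_server(config: Dict[str, Any], name: str, command: str, args: List[str]) -> Dict[str, Any]:
    """Return a copy of `config` with an mcpServers entry for `name`; other keys are kept."""
    updated = json.loads(json.dumps(config))  # deep copy so the caller's dict is untouched
    updated.setdefault("mcpServers", {})[name] = {"command": command, "args": list(args)}
    return updated


def write_config(path: Path, name: str, command: str, args: List[str]) -> None:
    """Merge the server entry into the file at `path`, creating the file if needed."""
    existing = json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}
    merged = add_mcp_server(existing, name, command, args)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(merged, indent=2), encoding="utf-8")
```

For example, `write_config(claude_config_path(), "mcp-scholarly", "uvx", ["mcp-scholarly"])` adds the published-server entry. Restart Claude Desktop afterwards so it reloads the file.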

Testing checklist:

- Verify tool registration and discovery

- Test input validation

- Check error handling

- Validate response formatting

- Ensure proper resource cleanup
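Several checklist items can be covered by plain unit tests that never touch the network. One approach, assumed here, is to factor the per-article formatting out of `handle_call_tool` into a helper (hypothetically named `format_result`). You can then feed it a stand-in object that carries only the attributes it reads. The URLs are placeholders. The test runs under pytest or can be called directly.

```python
from types import SimpleNamespace
from typing import Any


def format_result(result: Any) -> str:
    """Format one arXiv result the same way handle_call_tool does (hypothetical helper)."""
    return "\n".join([
        f"Title: {result.title}",
        f"Summary: {result.summary}",
        f"Links: {'||'.join(link.href for link in result.links)}",
        f"PDF URL: {result.pdf_url}",
    ])


def test_format_result() -> None:
    # Stand-in for arxiv.Result with only the attributes the formatter reads.
    fake = SimpleNamespace(
        title="Example Title",
        summary="Example summary.",
        links=[SimpleNamespace(href="https://example.org/abs/1"), SimpleNamespace(href="https://example.org/pdf/1")],
        pdf_url="https://example.org/pdf/1",
    )
    assert format_result(fake).splitlines() == [
        "Title: Example Title",
        "Summary: Example summary.",
        "Links: https://example.org/abs/1||https://example.org/pdf/1",
        "PDF URL: https://example.org/pdf/1",
    ]


if __name__ == "__main__":
    test_format_result()
    print("ok")
```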

Integration With AI Models

Once your MCP server is ready, it can be integrated with AI models that support the Model Context Protocol. The integration enables AI models to:

- Discover available tools with a `tools/list` request, which your `list_tools` handler answers

- Call a specific tool with arguments through a `tools/call` request, which your `call_tool` handler answers

- Process the responses and incorporate them into their interactions
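To make the discovery and invocation steps concrete, the sketch below builds the two JSON-RPC 2.0 requests by hand and reads text back out of a `tools/call` response. It shows message shapes only. A real session first performs an `initialize` handshake, and the SDK's client builds these messages for you. The helper names and the sample response are illustrative, not real server output.

```python
import json
from typing import Any, Dict, List, Optional


def make_request(request_id: int, method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize one JSON-RPC 2.0 request as a single line (the stdio transport is newline-delimited)."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def text_from_call_result(response_line: str) -> List[str]:
    """Collect the text items from a tools/call response, raising on a JSON-RPC error."""
    response = json.loads(response_line)
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown error"))
    return [item["text"] for item in response["result"]["content"] if item.get("type") == "text"]


# Discovery: ask the server which tools it offers (answered by handle_list_tools).
list_request = make_request(1, "tools/list")

# Invocation: call search-arxiv with arguments matching its inputSchema.
call_request = make_request(
    2, "tools/call", {"name": "search-arxiv", "arguments": {"keyword": "graph neural networks"}}
)

# Illustrative response shape: a list of content items plus an isError flag.
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 2,
    "result": {
        "content": [{"type": "text", "text": "Search articles for graph neural networks:\n..."}],
        "isError": False,
    },
})
```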

For example, when integrated with Claude Desktop, your MCP tools appear in the "Available MCP Tools" list, making them directly accessible during conversations. The AI can then use these tools to enhance its capabilities — in our arXiv example, Claude can search and reference academic papers in real time during discussions.

Common Challenges and Solutions

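- Async operations. The `arxiv` client used in this example is synchronous, so calling it directly inside an `async` handler blocks the event loop while the search runs. Offload blocking calls to a worker thread.

- Resource limits. Put a timeout on every external call and cap the size of results (the example already limits each search to `max_results=10`).

- Error recovery. Expect external services to fail (network errors, rate limits) and return a clear, actionable message to the model rather than an opaque failure.

- State management. Tool calls may overlap, so guard any shared mutable state, such as a cache, with an `asyncio.Lock`, or avoid shared state altogether.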
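The first two challenges meet in the arXiv handler. `client.results()` returns a generator that makes HTTP requests as it is iterated, so consuming it inside the handler stalls everything else on the event loop. A minimal sketch, assuming Python 3.9+ for `asyncio.to_thread`, moves the work to a thread and bounds the wait. `run_blocking` and `slow_lookup` are hypothetical names.

```python
import asyncio
import time
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


async def run_blocking(func: Callable[[], T], timeout: float) -> T:
    """Run a synchronous call in a worker thread so the event loop stays responsive.

    asyncio.wait_for stops waiting after `timeout` seconds and raises
    asyncio.TimeoutError, but it cannot kill the thread: the blocking call
    keeps running in the background until it returns on its own.
    """
    return await asyncio.wait_for(asyncio.to_thread(func), timeout=timeout)


def slow_lookup() -> str:
    time.sleep(0.5)  # stands in for a slow network call
    return "done"


async def demo() -> Tuple[str, bool]:
    fast = await run_blocking(lambda: "quick", timeout=1.0)
    try:
        await run_blocking(slow_lookup, timeout=0.05)
        timed_out = False
    except asyncio.TimeoutError:
        timed_out = True
    return fast, timed_out
```

In `handle_call_tool`, the search could then become `results = await run_blocking(lambda: list(client.results(search)), timeout=30.0)`, where the 30-second limit is an arbitrary choice. Wrapping the generator in `list()` matters because it makes the HTTP requests happen inside the worker thread rather than later on the event loop.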

Conclusion

Building an MCP server opens up new possibilities for extending AI capabilities. By following this guide and best practices, you can create robust tools that integrate seamlessly with AI models. The example arXiv search implementation demonstrates how to create practical, useful tools that enhance AI functionality.

Whether you're building research tools, data processing services, or other AI-enhanced capabilities, the Model Context Protocol provides a standardized way to extend AI model functionality. Start building your own MCP server today and contribute to the growing ecosystem of AI tools and services.

My MCP Scholarly server has been accepted as a community server in the official MCP repository, where it is listed in the community section.

Resources

- Model Context Protocol Documentation

- MCP Official Repository

- MCP Python SDK

- MCP Python Server Creator

- MCP Server Examples

- arXiv API Documentation

- Example arXiv Search MCP Server

For a deeper understanding of MCP and its capabilities, you can explore the official MCP documentation, which provides comprehensive information about the protocol specification and implementation details.

Opinions expressed by DZone contributors are their own.
