How to Optimize Chatty Applications

Sometimes, an application calls the same service for the same information over and over again. This behavior is called chattiness, and caching can help us optimize it.


You may have seen chatty applications numerous times in your career. Any app that acts as a core component in a distributed architecture has no option but to rely on other services to fulfill a request or a process. These core apps can get chatty in the early stages of their lifecycle.

Chattiness can look like this:

What is wrong with chatty apps? Calling other services to fulfill a request is all part of the plan, isn’t it?

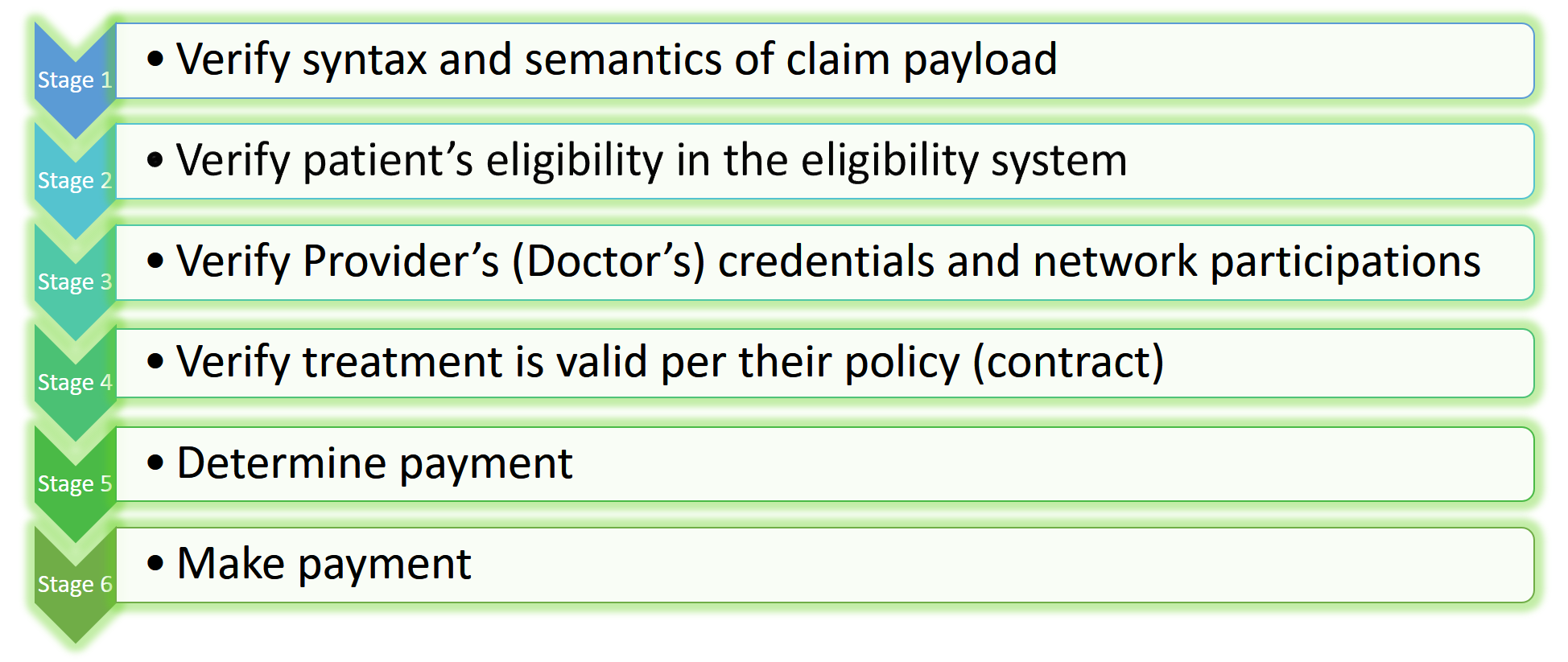

Let’s examine the characteristics of chattiness using a claims processing system. Before I go further, let me summarize how healthcare claims processing is orchestrated, from a 10,000-foot view.

Claims Processing Overview

Each stage above requires the claims system to make calls to other services, such as the database, eligibility service, provider service, pricing service, business rules services, etc. Did I forget to mention the fraud detection service? Oh, no!

In each stage, the claims system might make repeated calls to the same services, depending on the claim received.

Sometimes, it calls the same service for the same information over and over again! This is the behavior I’m calling chattiness. Now, what’s wrong with this behavior? One can argue that it’s a necessity to call other services and that we shouldn’t call it chattiness.

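Service calls in a distributed architecture can incur considerable overhead, including network latency, disk reads, database queries, etc., on both the caller and callee sides. When the same call is repeated for the same information, that cost is paid again without anything new being learned.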
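To make the cost of repetition concrete, here is a minimal sketch that counts how often a claim pipeline asks for the same information. Everything in it is an assumption for illustration: the `EligibilityService` class, the stage names, and the member IDs are hypothetical and do not come from any real claims system.

```python
from collections import Counter


class EligibilityService:
    """Hypothetical stand-in for a remote service; a real call would cost a network round trip."""

    def __init__(self):
        self.calls = Counter()

    def lookup(self, member_id):
        # Record every request so duplicates can be counted afterwards.
        self.calls[member_id] += 1
        return {"member_id": member_id, "eligible": True}


def process_claim(claim, eligibility):
    # Assumed stage names: each stage independently asks for the same member's eligibility.
    for _stage in ("intake", "validation", "pricing", "adjudication"):
        eligibility.lookup(claim["member_id"])


service = EligibilityService()
claims = [
    {"id": 1, "member_id": "M100"},
    {"id": 2, "member_id": "M100"},
    {"id": 3, "member_id": "M200"},
]
for claim in claims:
    process_claim(claim, service)

total_calls = sum(service.calls.values())       # every request that went out
distinct_lookups = len(service.calls)           # requests that returned new information
redundant_calls = total_calls - distinct_lookups
print(total_calls, distinct_lookups, redundant_calls)
```

In this toy run, only two lookups return anything new; the rest repeat a question that was already answered. Counting calls per key like this is one simple way to find out where an application is chatty before deciding what to cache.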

How Can We Optimize This Chattiness?

Drum roll...

Caching! Yes, caching is one sure way to address this problem.

Caching solutions take many shapes depending on your architecture:

In-memory caching (e.g., Ehcache, Redis, Hazelcast).

Distributed caching (e.g., Oracle Coherence, Memcached).

Caching at the API gateway or ESB level.

Note that all the above caching solutions require robust cache refresh and replication strategies.
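As a rough illustration of what a refresh strategy can look like, here is a minimal sketch of an in-process cache whose entries expire after a fixed time to live, with explicit invalidation as a second refresh path. The `TTLCache` class, the `fetch_provider` function, and the 60-second TTL are assumptions made for this example; they are not the API of Ehcache, Redis, or any other product named above, and replication is out of scope here.

```python
import time


class TTLCache:
    """Minimal in-process cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock          # injectable so expiry can be demonstrated without sleeping
        self._entries = {}          # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]         # fresh hit: no remote call
        value = loader(key)         # miss or expired: call the service and refresh the entry
        self._entries[key] = (now + self.ttl_seconds, value)
        return value

    def invalidate(self, key):
        # Explicit refresh path for when the source data is known to have changed.
        self._entries.pop(key, None)


remote_calls = []


def fetch_provider(provider_id):
    # Hypothetical remote call; here it only records that it was invoked.
    remote_calls.append(provider_id)
    return {"provider_id": provider_id, "in_network": True}


fake_now = [0.0]
cache = TTLCache(ttl_seconds=60, clock=lambda: fake_now[0])

cache.get_or_load("P1", fetch_provider)   # miss: calls the service
cache.get_or_load("P1", fetch_provider)   # hit: served from the cache
fake_now[0] = 61.0                        # move the fake clock past the TTL
cache.get_or_load("P1", fetch_provider)   # expired: calls the service again
print(len(remote_calls))
```

The TTL is the trade-off knob: a longer value removes more calls but serves stale data for longer, which is why the refresh strategy deserves as much thought as the cache itself.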

You may have been in environments where systems have large relational database schemas with hundreds of tables. With such large schemas, data retrieval queries get really expensive over a period of time, and the queries often require several joins, inner queries, hints, etc.

One option is to introduce a NoSQL database between the application and the relational database and stage the result sets of such complex queries there, so that repeated reads no longer hit the relational database. The performance gain is likely to be largest for tables that don’t change frequently, because the staged results stay valid longer and need fewer refreshes.
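The staging idea can be sketched as follows. This is a minimal illustration under stated assumptions: an in-memory SQLite database plays the role of the relational database, a plain dictionary stands in for the NoSQL store, and the `provider` and `fee_schedule` tables, the key format, and the function names are all hypothetical.

```python
import json
import sqlite3

# In-memory SQLite stands in for the large relational schema.
db = sqlite3.connect(":memory:")
db.executescript("""
    CREATE TABLE provider (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE fee_schedule (provider_id INTEGER, procedure_code TEXT, amount REAL);
    INSERT INTO provider VALUES (1, 'Clinic A');
    INSERT INTO fee_schedule VALUES (1, '99213', 75.0), (1, '99214', 110.0);
""")

staged = {}                 # stand-in for a NoSQL key-value or document store
stats = {"queries": 0}      # counts how often the relational database is actually queried


def load_fee_schedule(provider_id):
    """Run the (comparatively) expensive join against the relational database."""
    stats["queries"] += 1
    rows = db.execute(
        "SELECT p.name, f.procedure_code, f.amount "
        "FROM provider p JOIN fee_schedule f ON f.provider_id = p.id "
        "WHERE p.id = ? ORDER BY f.procedure_code",
        (provider_id,),
    ).fetchall()
    if not rows:
        return None
    return {"provider": rows[0][0], "fees": {code: amount for _, code, amount in rows}}


def get_fee_schedule(provider_id):
    """Serve the staged document if present; otherwise query once and stage the result."""
    key = f"fee_schedule:{provider_id}"
    if key not in staged:
        staged[key] = json.dumps(load_fee_schedule(provider_id))
    return json.loads(staged[key])


def on_fee_schedule_changed(provider_id):
    """Drop the staged document when the underlying rows change."""
    staged.pop(f"fee_schedule:{provider_id}", None)


first = get_fee_schedule(1)    # runs the join and stages the result
second = get_fee_schedule(1)   # served from the staged copy
print(stats["queries"], second["fees"])
```

Storing the result as a serialized document keyed by the lookup parameters mirrors how a document store would typically be used here. The invalidation hook matters as much as the read path: without it, the staged copy would keep serving outdated rows after the tables change.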

Conclusion

Chattiness of applications may not always be avoidable, but it can be optimized using caching and/or a NoSQL database.

