Cloud-Based Integrations vs. On-Premise Models

Architecture strategies use design patterns: blueprints based on successful implementations. Read an assessment of common approaches, patterns, and use cases.

This is an article from DZone's 2022 Enterprise Application Integration Trend Report.

For more:

Read the Report

For large enterprises, where integration with different platforms supports many business activities, "enterprise integration" is the most common term for the integration architecture. Enterprise integration has evolved considerably from traditional batch processing using ETL (extract, transform, and load), in which data feeds from source systems were extracted, transformed for business processing, and loaded into a target data source.

When you develop any architecture, not just an integration architecture, your primary aim should be an agile, robust, and resilient design, because a failure in any of its building blocks can bring down the entire solution. This is why architecture strategies rely on design patterns: blueprints based on successful implementations. Design patterns also come in different flavors depending on where you apply them and how you approach the design.

Enterprise Architecture vs. Enterprise Integration Approaches

Before getting to the details of integration approaches, we should be clear about the difference between enterprise architecture and enterprise integration:

- Enterprise architecture approaches – These methods are applied at the top level of an enterprise architecture and define how you view the architecture's entire flow of operations. The overall communication paths and interface design are based on these methods.

- Enterprise integration approaches – These approaches are implemented between your components. For interface design and communication between enterprise components, it is important to decide on the medium of communication, such as synchronous or asynchronous interfaces. As the complexity of enterprise components grows, following enterprise integration best practices becomes more important.

Cloud Integrations

Cloud services (and applications) typically work in silos, so a communication channel must be established between them to share data. One option is synchronous calls through an API or web service invocation, which are generally executed sequentially; each is a one-to-one (consumer-to-provider) request. The other option is a message broker, such as a queue or an event hub, through which multiple requests can be processed in parallel; this supports one-to-many request processing, with requests broadcast to multiple subscribers.
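To make the two communication styles concrete, here is a minimal, in-process Python sketch. It is an illustration under simplifying assumptions, not a real broker client: the `Provider` and `Broker` classes and the `orders` topic are hypothetical, and a list of callbacks stands in for a queue or event hub.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class Provider:
    """One-to-one: the consumer calls the provider and blocks until it replies."""

    def handle(self, request: str) -> str:
        return f"processed:{request}"


class Broker:
    """One-to-many: a minimal in-memory stand-in for a broker topic."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[str], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: str) -> int:
        # Broadcast the same message to every subscriber of the topic.
        # (Delivered in a loop here; a real broker lets each subscriber
        # consume independently and in parallel.)
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


# Synchronous, one-to-one: the caller waits for the provider's reply.
reply = Provider().handle("order-42")

# Broker-based, one-to-many: one publish reaches every subscriber.
received: List[str] = []
broker = Broker()
broker.subscribe("orders", lambda m: received.append(f"billing:{m}"))
broker.subscribe("orders", lambda m: received.append(f"shipping:{m}"))
delivered = broker.publish("orders", "order-42")
```

The publisher never needs to know how many subscribers exist, which is what lets a broker fan a single request out to many consumers.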

Application

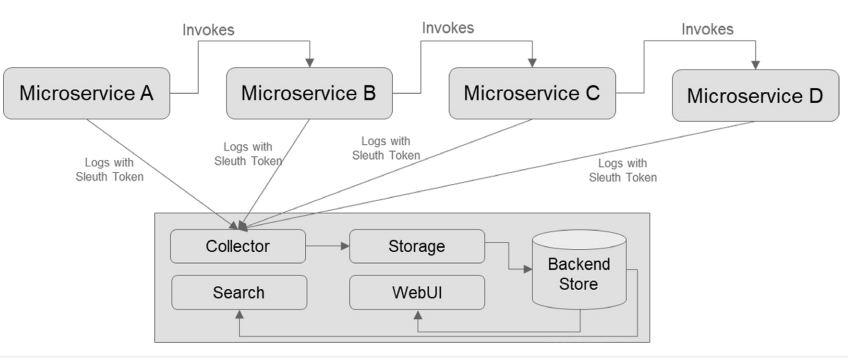

When you work with microservices and interservice communication, one of the most common problems is network or call latency during invocation. For example, say you have four microservices (A, B, C, and D) that are invoked in a chain: A calls B, B calls C, and C calls D. If the total time of the A-to-D call chain does not meet the expected latency benchmark, you need to find which call is the bottleneck.

You can trace this dynamically by instrumenting the calls, measuring the duration of each invocation, and identifying which one fails to meet its expected duration. In a web application, this can be monitored through browser-level instrumentation and a call tree graph. With microservices and APIs, it can be done with Zipkin and the Sleuth framework in Spring Cloud.
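The instrumentation idea can be sketched in plain Python. This is a hypothetical, single-process simulation of the A-to-D chain, not Zipkin or Spring Cloud Sleuth: each service records a span with its total duration, and subtracting the downstream span isolates the time each service spends on its own work. The `WORK` timings are made up so that C is the bottleneck.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator, List, Tuple

# Recorded spans: (service name, total duration in seconds, including downstream calls)
spans: List[Tuple[str, float]] = []


@contextmanager
def span(name: str) -> Iterator[None]:
    """Time the enclosed block and record it as a span."""
    start = time.perf_counter()
    try:
        yield
    finally:
        spans.append((name, time.perf_counter() - start))


# Hypothetical local processing time per service; C is deliberately slow.
WORK = {"A": 0.01, "B": 0.01, "C": 0.2, "D": 0.01}
CHAIN = ["A", "B", "C", "D"]


def call(index: int) -> None:
    name = CHAIN[index]
    with span(name):
        time.sleep(WORK[name])      # simulated work inside the service
        if index + 1 < len(CHAIN):
            call(index + 1)         # downstream call: A -> B -> C -> D


def self_times() -> Dict[str, float]:
    """Subtract each child's total from its parent's to get per-service time."""
    totals = dict(spans)
    result: Dict[str, float] = {}
    for i, name in enumerate(CHAIN):
        child = totals[CHAIN[i + 1]] if i + 1 < len(CHAIN) else 0.0
        result[name] = totals[name] - child
    return result


call(0)
times = self_times()
bottleneck = max(times, key=times.get)   # the service with the largest own time
```

Distributed tracing tools do the same bookkeeping across process boundaries by propagating trace and span identifiers with each request.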

Data

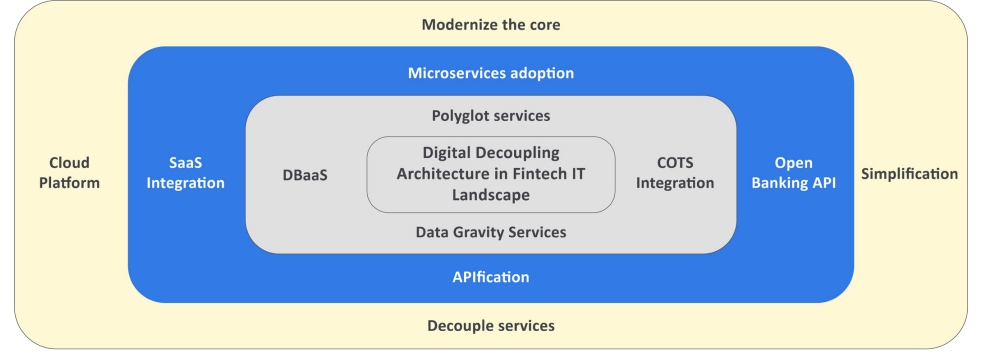

Digital transformation involves refactoring, re-architecting applications into cloud-native solutions, or replacing components with cloud-native ones, whereas cloud adoption means digital migration via re-hosting, re-configuring, or re-platforming applications. But another strategy trending across banking and insurance IT architecture deserves consideration: digitally decoupling the architecture. Digital decoupling enables accelerated progress on low-hanging fruit such as microservices adoption, Agile DevOps integration, data lake solutions, API adoption, and open platforms. This makes it well suited for the fintech industry to accelerate adoption of digital architecture.

The key to digital decoupling's success is that it lets enterprises choose their digital architecture based on the benefits in total cost of ownership. Whereas digital transformation paths sometimes involve large and costly IT transformations, digital decoupling improves agility in the IT landscape, accelerates the delivery of IT solutions, and eases digital adoption.

Digital decoupling architecture trends toward the cloud for scalable, reliable, and maintainable application architecture. It therefore relies on multifaceted boundaries between technical and functional components, as illustrated in Figure 2 above. Components move through the cloud adoption journey according to whether they are core or non-core functional applications. This keeps the adoption roadmap flexible and cost-effective while ensuring the existing application landscape is not disturbed.

With these components providing a pattern for the digital decoupling architecture, enterprises can build a fintech application landscape that’s cost-efficient, delivers a positive user experience, and gives clear direction for the people (governance model), process (architectural decision), and technology (foundational blocks of the architecture) involved in the industry’s ongoing evolution.

Some common approaches to digital decoupling architecture are:

- Decoupling data and application architecture – Decouple the data architecture into reference data (regulator-restricted, cross-border-sensitive, or otherwise sensitive data) and transactional data. The reference database can stay on-premises in legacy IT systems, while the application with its transactional data moves to the cloud.

- DevTest adoption to cloud – On average, development and test (DevTest) environments, including their infrastructure components, account for 40 to 60 percent of total annual resource usage. Moving these environments to the cloud enables pay-per-use pricing and optimizes DevTest infrastructure and monitoring costs.

- Data lake adoption – Create a data lake solution in the cloud for reconciliation, regulatory reports, and batch job processing (e.g., using AWS Glue jobs).

- Agile DevOps – Automate the toolchain across build, deploy, test, and review.
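The first approach in the list, splitting reference data from transactional data, can be sketched as a storage layer that routes records by classification. This is a minimal illustration with hypothetical names: the classification labels and the two in-memory dictionaries are assumptions standing in for an on-premises reference database and a cloud transactional store.

```python
from dataclasses import dataclass, field
from typing import Any, Dict

# Hypothetical labels for the reference-data categories named above.
REFERENCE_CLASSES = {"regulator_restricted", "cross_border_sensitive", "sensitive"}


@dataclass
class Record:
    key: str
    classification: str          # e.g. "regulator_restricted" or "transactional"
    payload: Dict[str, Any]


@dataclass
class DecoupledStore:
    # In-memory stand-ins for an on-premises reference database
    # and a cloud-hosted transactional store.
    on_premises: Dict[str, Record] = field(default_factory=dict)
    cloud: Dict[str, Record] = field(default_factory=dict)

    def save(self, record: Record) -> str:
        """Route the record by classification and return where it was stored."""
        if record.classification in REFERENCE_CLASSES:
            self.on_premises[record.key] = record
            return "on_premises"
        self.cloud[record.key] = record
        return "cloud"


store = DecoupledStore()
where_ref = store.save(Record("cust-1", "regulator_restricted", {"tax_id": "redacted"}))
where_txn = store.save(Record("txn-9", "transactional", {"amount": 120}))
```

Keeping the routing decision in one place means the application code that moves to the cloud does not need to know where sensitive reference data physically lives.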

iPaaS

ETL processing is ideal for offline processing (batch operations), but real-time integration (e.g., service tax or VAT calculation in a retail store using VAT/tax API services) requires a different integration method based on enterprise application integration (EAI) and middleware.
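The contrast between batch ETL and real-time integration can be sketched as follows. This is a hypothetical illustration: the feed format, the flat `VAT_RATE`, and `vat_for_sale` (a local function standing in for a VAT/tax API call) are assumptions, not a real tax service.

```python
from typing import Iterable, List, Tuple

VAT_RATE = 0.20  # hypothetical flat rate, for illustration only

Row = Tuple[str, float]              # (sku, net amount)
TaxedRow = Tuple[str, float, float]  # (sku, net amount, VAT)


def extract(feed: Iterable[str]) -> List[Row]:
    """Extract: parse 'sku,net' lines from a source feed."""
    rows: List[Row] = []
    for line in feed:
        sku, net = line.split(",")
        rows.append((sku, float(net)))
    return rows


def transform(rows: List[Row]) -> List[TaxedRow]:
    """Transform: add the VAT for each row."""
    return [(sku, net, round(net * VAT_RATE, 2)) for sku, net in rows]


def load(rows: List[TaxedRow], target: List[TaxedRow]) -> int:
    """Load: append to the target store (a list stands in for a database)."""
    target.extend(rows)
    return len(rows)


def run_batch(feed: Iterable[str], target: List[TaxedRow]) -> int:
    # Offline batch: the whole feed is processed in one scheduled run.
    return load(transform(extract(feed)), target)


def vat_for_sale(net: float) -> float:
    # Real-time: stands in for a synchronous call to a VAT/tax API,
    # made once per sale at the point of purchase.
    return round(net * VAT_RATE, 2)


warehouse: List[TaxedRow] = []
loaded = run_batch(["A1,100.00", "B2,19.99"], warehouse)
vat_now = vat_for_sale(50.0)
```

The batch job only produces results when it runs, whereas the checkout needs the tax figure immediately, which is why real-time cases call for an integration service rather than an ETL pipeline.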

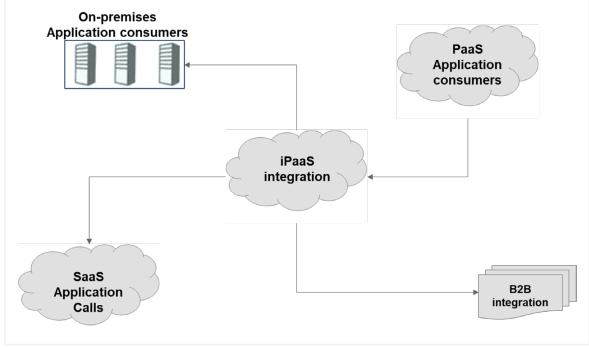

With scalability and agility now required in enterprise architecture, cloud-based SaaS integration has evolved into a next-generation integration service in which your application, hosted on-premises or in a public cloud (a PaaS application), connects to a SaaS provider's application for integration services. For example, a core banking application connects to the ACI Worldwide SaaS application, or an inventory or retail application connects to SAP HANA for business processing services.

Because SaaS relies on third-party cloud services, storing application data in a SaaS provider's cloud raises data security concerns. The Integration Platform as a Service (iPaaS) addresses this with a hybrid solution that combines the power of SaaS for integration services with data management that remains under the enterprise's control.

iPaaS goes beyond the architectural benefits of an enterprise service bus (ESB), offering asynchronous transaction processing for integration service requests as well as API management for service discovery and lookup of integration API calls. TIBCO, Informatica, Microsoft Azure Logic Apps, Boomi, and MuleSoft are some of the more prominent iPaaS providers that can be used for customized application integration without relying on SaaS solutions; they use the infrastructure benefits of PaaS (for cloud integration) and server infrastructure (for on-premises integration).

On-Premises Integrations

On-premises data centers give you the flexibility to develop heavyweight monolithic applications, since resource usage and costs may not vary once the infrastructure has been procured. The upside of this architecture is the flexibility to use infrastructure-intensive integration middleware like IBM MQ. The downside is less flexibility to decouple the architecture, modernize the application, or offer service enhancements. Some integration patterns commonly used in on-premises application architectures are explained below.

EAI

When on-premises legacy applications are integrated with downstream applications through middleware services like IBM MQ, asynchronous messaging can be costly and infrastructure-intensive for data center operations. One option is to modernize or transform, for example by migrating the application to the cloud or to a virtualized environment. However, many customers hesitate because of tight dependencies on their existing stack, or they are left with native migration: replacing IBM MQ with a persistent queue option from a cloud service provider.

RabbitMQ and ActiveMQ are the most prominent message brokers using AMQP in middleware integration, and we can choose a suitable enterprise service bus for the message layer and event hubs for message-based event handling. When you migrate legacy Java applications that have messaging interfaces like JMS or MQ, or that use the fire-and-forget, Pub/Sub, or topic subscription patterns, the code must be re-architected to migrate to Azure Service Bus, because it has to be rewritten to suit AMQP.

Traditionally, queue-based enterprise integration is valued for handling high volumes of messages, which most standard Pub/Sub queue patterns support. The simplest queue-processing pattern is fire-and-forget: the producer publishes a message to the queue, and once the message is consumed it is not traceable, which saves the resources otherwise spent persisting messages. This pattern is best for non-critical, short-lived messages.

Alternatively, we can use the fire-and-remember pattern, which is commonly used when messages are important (e.g., banking transactions) and must not be lost if a consumer fails while processing them. This requires a storage mechanism that records the messages so they can be recovered after a failure, hence the name fire-and-remember.
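The difference between the two patterns shows up when a consumer fails. In the minimal in-memory sketch below (hypothetical classes, with a dictionary standing in for durable message storage), the fire-and-forget queue loses a message whose processing fails, while the fire-and-remember queue keeps it until processing succeeds and can redeliver it.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Set

Handler = Callable[[str], None]


class FireAndForgetQueue:
    """Messages are not persisted; a failed delivery is simply lost."""

    def __init__(self) -> None:
        self._queue: Deque[str] = deque()

    def publish(self, message: str) -> None:
        self._queue.append(message)

    def consume(self, handler: Handler) -> None:
        while self._queue:
            message = self._queue.popleft()
            try:
                handler(message)
            except Exception:
                pass  # no record is kept, so the message cannot be recovered


class FireAndRememberQueue:
    """Each message is recorded before delivery and removed only on success."""

    def __init__(self) -> None:
        self._queue: Deque[int] = deque()
        self.store: Dict[int, str] = {}  # stand-in for durable message storage
        self._next_id = 0

    def publish(self, message: str) -> int:
        msg_id = self._next_id
        self._next_id += 1
        self.store[msg_id] = message     # remember first, then enqueue
        self._queue.append(msg_id)
        return msg_id

    def consume(self, handler: Handler) -> None:
        while self._queue:
            msg_id = self._queue.popleft()
            try:
                handler(self.store[msg_id])
            except Exception:
                continue                 # keep the record for redelivery
            del self.store[msg_id]       # acknowledge successful processing

    def redeliver(self) -> int:
        """Re-queue every message that is still unacknowledged."""
        pending = sorted(self.store)
        self._queue.extend(pending)
        return len(pending)


def make_flaky_handler(processed: List[str]) -> Handler:
    """Hypothetical consumer that fails the first time it sees 'txn-2'."""
    failed_once: Set[str] = set()

    def handler(message: str) -> None:
        if message == "txn-2" and message not in failed_once:
            failed_once.add(message)
            raise RuntimeError("simulated consumer failure")
        processed.append(message)

    return handler


forgotten: List[str] = []
faf = FireAndForgetQueue()
for m in ["txn-1", "txn-2", "txn-3"]:
    faf.publish(m)
faf.consume(make_flaky_handler(forgotten))
# forgotten == ["txn-1", "txn-3"]: txn-2 is lost

remembered: List[str] = []
far = FireAndRememberQueue()
for m in ["txn-1", "txn-2", "txn-3"]:
    far.publish(m)
handler = make_flaky_handler(remembered)
far.consume(handler)
far.redeliver()
far.consume(handler)
# remembered == ["txn-1", "txn-3", "txn-2"]: txn-2 survives the failure
```

The extra cost of fire-and-remember is the write to storage before delivery and the bookkeeping on acknowledgment, which is the trade-off the text describes.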

Middleware

In an enterprise cloud application that uses managed SaaS applications like Salesforce, there is typically overhead in enabling communication with cloud-native services (e.g., cloud data storage) through a middleware service. Middleware integration plays a major role in cloud application integration development, particularly for SaaS, hybrid, or multi-cloud service integration. For low-volume interactions, we can use API integration with synchronous request handling. For high-volume, dynamic data processing requests, we can rely on an event-bus mechanism.

For example, an ESB can connect an application or service to another application, a SaaS integration, or a downstream service in an on-premises or hybrid cloud environment. ESBs like MuleSoft or Apache Camel work on an event-driven architecture, in which you configure rules for how and where events are delivered, for example to a web application acting as the event consumer and processor, while queue processors can forward messages to external targets such as SaaS consumers.
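The rule-based delivery described above resembles a content-based router. The sketch below is a generic, hypothetical Python version, not the MuleSoft or Apache Camel API: each rule pairs a condition (how an event is matched) with a destination (where it is delivered), and the destination names are made up.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Event = Dict[str, object]


@dataclass
class Rule:
    name: str
    matches: Callable[[Event], bool]  # "how": the condition an event must meet
    destination: str                  # "where": the target endpoint or queue


class EventRouter:
    def __init__(self, rules: List[Rule]) -> None:
        self.rules = rules
        self.outbox: Dict[str, List[Event]] = {}  # stand-in for real endpoints

    def route(self, event: Event) -> List[str]:
        """Deliver the event to every destination whose rule matches."""
        targets = [rule.destination for rule in self.rules if rule.matches(event)]
        for target in targets:
            self.outbox.setdefault(target, []).append(event)
        return targets


router = EventRouter([
    Rule("web", lambda e: e.get("type") == "order.created", "web-app-processor"),
    Rule("saas", lambda e: e.get("type") == "order.created" and e.get("crm") is True,
         "saas-crm-queue"),
    Rule("audit", lambda e: True, "audit-queue"),
])

created_targets = router.route({"type": "order.created", "crm": True})
cancelled_targets = router.route({"type": "order.cancelled"})
```

Because the rules live in configuration rather than in the producer, new consumers such as another SaaS target can be added without changing the code that emits the events.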

Conclusion

When an enterprise embarks on its cloud journey, the first two problems it wants to address are handling its legacy application architecture and managing its various integration approaches. Because a cloud platform has more isolated services, choosing the right integration pattern is key to effective inter-service communication. Enterprises that developed applications more than a decade ago will have most of their legacy applications in a monolithic architecture, in which a single large application component and a single bulky back-end database cover all business functions. Their integration services therefore also tend to be heavyweight (e.g., MQ, SOAP services).

To build a scalable architecture that is well suited to cloud adoption, the best approach is to migrate monolithic applications to microservices so that each application is cloud-optimized and deployable to the target cloud platform, and then to create asynchronous or synchronous integration services for inter-service communication.

