Comparing Apache Hive and Spark

Big data analytics that work for you.

Introduction

Hive and Spark are two very popular and successful products for processing large-scale data sets; in other words, both are used for big data analytics. This article describes the history and main features of each product, then compares their capabilities to show which complex data processing problems each one can address.

What is Hive?

Hive is an open-source, distributed data warehousing system that runs on top of the Hadoop Distributed File System (HDFS). Hive was built for querying and analyzing big data. Data is stored in tables (much like in an RDBMS), and operations on it are performed through a SQL-like interface called HiveQL. By bringing SQL capability to Hadoop, Hive provides a horizontally scalable database that is a strong choice for data warehouse (DWH) environments.

A Bit of Hive’s History

Hive (which later became Apache Hive) was initially developed at Facebook when the company found its data growing exponentially, from gigabytes to terabytes in a matter of days. At the time, Facebook loaded its data into relational databases using Python. Performance and scalability quickly became problems, because a traditional RDBMS scales mainly vertically. Facebook needed a database that could scale horizontally and handle very large volumes of data. Hadoop was already popular by then, and shortly afterward Hive, built on top of Hadoop, came along. Hive is similar to an RDBMS, but it is not a complete RDBMS.

Why Hive?

The core reason for choosing Hive is that it provides a SQL interface on top of Hadoop. It also hides much of the complexity of writing MapReduce jobs by hand. Hive lets businesses perform large-scale data analysis on data stored in HDFS, which makes it horizontally scalable. Its SQL interface, HiveQL, makes it easier for developers with RDBMS backgrounds to build scalable, better-performing data warehousing frameworks more quickly.
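To make the point about MapReduce complexity concrete, here is a minimal Python sketch (not Hive code) that computes the same per-key totals twice: once as hand-written map, shuffle, and reduce phases in the style of a MapReduce job, and once as a single SQL statement. Python's built-in `sqlite3` is used only as a local stand-in for a SQL engine; the `sales` table and its rows are hypothetical, although the `GROUP BY` query itself would also be valid HiveQL.

```python
import sqlite3
from collections import defaultdict

# Hypothetical sample rows: (region, amount)
ROWS = [("east", 10), ("west", 5), ("east", 7), ("north", 3), ("west", 1)]


def mapreduce_totals(rows):
    """Per-region totals written as explicit map, shuffle, and reduce phases."""
    # Map phase: emit (key, value) pairs.
    mapped = [(region, amount) for region, amount in rows]
    # Shuffle phase: group all values by key.
    groups = defaultdict(list)
    for key, value in mapped:
        groups[key].append(value)
    # Reduce phase: combine each key's values into one result.
    return {key: sum(values) for key, values in groups.items()}


def sql_totals(rows):
    """The same result expressed as one declarative GROUP BY query."""
    conn = sqlite3.connect(":memory:")  # local stand-in for a SQL engine such as Hive
    conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    query = "SELECT region, SUM(amount) FROM sales GROUP BY region"
    result = dict(conn.execute(query).fetchall())
    conn.close()
    return result


print(mapreduce_totals(ROWS))
print(sql_totals(ROWS))
```

Both functions return the same totals; the SQL version states *what* to compute and leaves the map, shuffle, and reduce steps to the engine, which is essentially what Hive does when it compiles HiveQL into distributed jobs.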

Hive Features and Capabilities

Hive comes with enterprise-grade features and capabilities that can help organizations build efficient, high-end data warehousing solutions.

Some of these features include:

- Hive uses Hadoop as its storage layer and runs on HDFS (or another Hadoop-compatible file system).

- It is built specifically for batch-oriented data warehousing operations. It is not an option for OLTP, and its query latency makes it a poor fit for interactive, low-latency OLAP.

- HiveQL is a SQL-like query language for building complex queries for data warehousing operations. Hive can also be integrated with other distributed NoSQL databases, such as HBase and Cassandra.

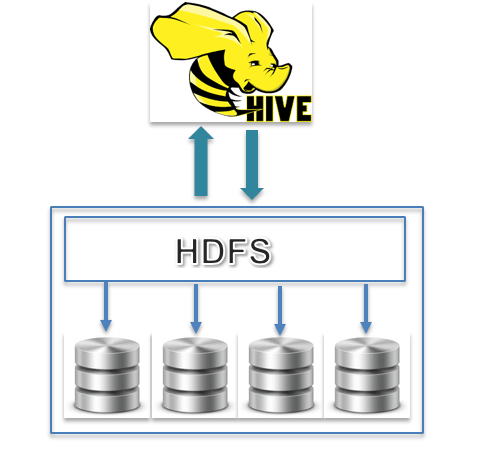

Hive Architecture

Hive’s architecture is fairly simple: it provides a Hive interface for submitting queries and uses HDFS to store the data across multiple servers for distributed processing.
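The following simplified Python sketch shows what storing data "across multiple servers" means in HDFS: a file is split into fixed-size blocks, and each block is copied to several nodes. The 128 MB block size and replication factor of 3 mirror common HDFS defaults, but the node names and the round-robin placement are hypothetical simplifications; real HDFS uses a rack-aware placement policy managed by the NameNode.

```python
import math

BLOCK_SIZE_MB = 128   # common HDFS default block size
REPLICATION = 3       # common HDFS default replication factor


def place_blocks(file_size_mb, nodes, block_size_mb=BLOCK_SIZE_MB, replication=REPLICATION):
    """Split a file into blocks and assign each block to `replication` distinct nodes.

    Round-robin placement is a hypothetical simplification of HDFS's
    rack-aware placement policy.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    num_blocks = math.ceil(file_size_mb / block_size_mb)
    placement = {}
    for block_id in range(num_blocks):
        # Start each block on a different node so storage and reads spread across the cluster.
        placement[block_id] = [nodes[(block_id + r) % len(nodes)] for r in range(replication)]
    return placement


layout = place_blocks(500, ["node1", "node2", "node3", "node4"])
for block_id, replicas in layout.items():
    print(block_id, replicas)
```

A 500 MB file becomes four blocks, each stored on three different nodes. Because every block lives on several machines, a query engine can scan different blocks on different nodes in parallel, and losing a single node does not lose data.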

Hive for Data Warehousing Systems

Hive is built specifically for data warehousing operations, especially those that process terabytes or petabytes of data. It is an RDBMS-like database, but not a full RDBMS. As mentioned earlier, it scales horizontally and leverages Hadoop’s capabilities, which makes it a fast, high-scale database. It can run on thousands of nodes built from commodity hardware, making Hive a cost-effective product that delivers high performance and scalability.

Hive Integration Capabilities

Because HiveQL is close to standard SQL, Hive can be integrated with databases like HBase and Cassandra, which have limited SQL support of their own, and can help applications run analytics and reports on larger data sets. Hive can also be integrated with data processing and streaming tools such as Spark, Kafka, and Flume.

Hive’s Limitations

Hive is a data warehousing database that stores data in tables. As a result, it is designed to process structured data that is read and written with SQL queries, and it is not an option for unstructured data. In addition, Hive is not suited to OLTP workloads or to low-latency, interactive OLAP queries.
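Hive applies a table schema to the underlying files at read time ("schema on read"). The Python sketch below illustrates the idea, assuming a hypothetical comma-delimited table with columns `id INT, name STRING, score DOUBLE`: each line is split into fields and converted to the declared column types, and a field that is missing or does not fit its type becomes NULL (`None`), roughly how Hive's default text format treats such values. Data with no consistent row-and-column shape has no sensible mapping to a schema like this, which is why Hive is a poor fit for unstructured data.

```python
# Hypothetical table schema: column name and the type each value must convert to.
SCHEMA = [("id", int), ("name", str), ("score", float)]


def read_row(line, schema=SCHEMA, delimiter=","):
    """Apply the table schema to one delimited line at read time.

    Missing or unparseable fields become None (SQL NULL); extra fields are ignored.
    """
    fields = line.rstrip("\n").split(delimiter)
    row = {}
    for i, (column, cast) in enumerate(schema):
        if i >= len(fields):
            row[column] = None          # field missing from this line
            continue
        try:
            row[column] = cast(fields[i])
        except ValueError:
            row[column] = None          # value does not fit the column type
    return row


lines = ["1,alice,9.5", "2,bob,n/a", "oops"]
for line in lines:
    print(read_row(line))
```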

What is Spark?

Spark is a distributed big data framework for extracting and processing large volumes of data as RDDs (Resilient Distributed Datasets) for analytical purposes. In short, it is not a database but a framework that can access external distributed data sets from data stores like Hive, HDFS, and HBase. Spark is fast because it performs complex analytics in memory.
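An RDD is a partitioned collection whose transformations (such as map and filter) are recorded lazily and executed only when an action (such as collect or reduce) asks for a result. The Python sketch below is a toy, single-process model of that idea. The `MiniRDD` class and `parallelize` helper are hypothetical and are not Spark's API, although the method names echo PySpark's `map`, `filter`, `collect`, and `reduce`.

```python
from functools import reduce as fold


class MiniRDD:
    """Toy model of an RDD: partitions plus a lazily recorded chain of transformations."""

    def __init__(self, partitions, transforms=()):
        self.partitions = partitions          # list of lists, one per partition
        self.transforms = tuple(transforms)   # recorded lineage, not yet executed

    def map(self, fn):
        # Record the transformation; nothing runs yet.
        return MiniRDD(self.partitions, self.transforms + (("map", fn),))

    def filter(self, pred):
        # Record the transformation; nothing runs yet.
        return MiniRDD(self.partitions, self.transforms + (("filter", pred),))

    def _compute_partition(self, part):
        # Replay the recorded lineage over one partition.
        items = part
        for kind, fn in self.transforms:
            if kind == "map":
                items = [fn(x) for x in items]
            else:
                items = [x for x in items if fn(x)]
        return items

    def collect(self):
        # Action: execute every partition and gather the results in partition order.
        return [x for part in self.partitions for x in self._compute_partition(part)]

    def reduce(self, fn):
        # Action: reduce within each non-empty partition, then combine the partial results.
        computed = (self._compute_partition(part) for part in self.partitions)
        partials = [fold(fn, items) for items in computed if items]
        return fold(fn, partials)  # raises TypeError if every partition is empty


def parallelize(data, num_partitions=2):
    """Split a list into roughly equal partitions (hypothetical helper)."""
    return MiniRDD([data[i::num_partitions] for i in range(num_partitions)])


rdd = parallelize(list(range(1, 11)), num_partitions=3)
evens_squared = rdd.filter(lambda x: x % 2 == 0).map(lambda x: x * x)
print(evens_squared.collect())
print(evens_squared.reduce(lambda a, b: a + b))
```

Because each `MiniRDD` keeps its lineage, a lost partition could be rebuilt by replaying the recorded transformations on the source data, which is the sense in which real RDDs are "resilient".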

What Is Spark Streaming?

Spark Streaming is an extension of Spark that processes live data from web sources in near real time to produce various analytics. Although other tools, such as Kafka and Flume, also handle streaming data, Spark is a good option when really complex data analytics are necessary. Spark has its own SQL engine and works well when integrated with Kafka and Flume.
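Spark Streaming's original (DStream) model treats a live stream as a sequence of small batches, one per batch interval, and runs ordinary batch logic on each. The Python sketch below illustrates that micro-batch model only and does not use Spark's API; the timestamped page-view events and the 2-second interval are hypothetical.

```python
from collections import Counter

# Hypothetical events: (timestamp in seconds, page viewed)
EVENTS = [(0.5, "home"), (1.2, "cart"), (1.9, "home"), (2.1, "home"), (3.7, "checkout"), (4.0, "cart")]


def micro_batches(events, interval):
    """Group events into consecutive batches of `interval` seconds.

    Intervals with no events are skipped in this sketch.
    """
    batches = {}
    for ts, payload in events:
        batch_id = int(ts // interval)   # which interval this event falls into
        batches.setdefault(batch_id, []).append(payload)
    return [batches[b] for b in sorted(batches)]


def process_stream(events, interval=2.0):
    """Run the same batch job (a page-view count) on every micro-batch."""
    return [Counter(batch) for batch in micro_batches(events, interval)]


for i, counts in enumerate(process_stream(EVENTS)):
    print(f"batch {i}: {dict(counts)}")
```

The design choice this illustrates is reuse: the per-batch computation is the same code you would run on static data, which is how Spark can apply its full analytics engine to streams.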

A Bit of Spark’s History

Spark was introduced as an alternative to MapReduce, a slow and resource-intensive programming model. Because Spark keeps data in memory between processing steps, it relies much less on disk reads and writes than MapReduce, which writes intermediate results to disk after every job.
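The in-memory advantage is easiest to see in a multi-step job. In classic MapReduce, each job writes its output to the distributed file system and the next job reads it back, whereas Spark can keep intermediate results in memory. The simplified Python sketch below runs the same three hypothetical steps twice: once writing each intermediate result to a temporary file (a stand-in for HDFS) and reading it back, and once passing results directly in memory, counting the disk round trips in each case.

```python
import json
import os
import tempfile

# Three hypothetical processing steps applied in sequence.
STEPS = [
    lambda xs: [x * 2 for x in xs],
    lambda xs: [x for x in xs if x % 3 != 0],
    lambda xs: [x + 1 for x in xs],
]


def run_disk_based(data, steps):
    """MapReduce-style: persist every intermediate result, then read it back."""
    disk_writes = 0
    with tempfile.TemporaryDirectory() as workdir:
        current = data
        for i, step in enumerate(steps):
            path = os.path.join(workdir, f"stage_{i}.json")
            with open(path, "w") as f:
                json.dump(step(current), f)   # write stage output to "disk"
            disk_writes += 1
            with open(path) as f:
                current = json.load(f)        # next stage reads it back
    return current, disk_writes


def run_in_memory(data, steps):
    """Spark-style: chain the steps and keep intermediate results in memory."""
    current = data
    for step in steps:
        current = step(current)
    return current, 0


data = list(range(10))
print(run_disk_based(data, STEPS))
print(run_in_memory(data, STEPS))
```

Both runs produce the same result, but the disk-based run pays one write and one read per step. Real Spark still writes shuffle files and spills to disk when memory runs short; the sketch models only the common case.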

Why Spark?

The core strength of Spark is its ability to perform complex in-memory analytics on data volumes of up to petabytes, including streaming data, which makes it more efficient and faster than MapReduce. Spark can pull data from any data store running on Hadoop and perform complex analytics in memory and in parallel. This reduces disk I/O and network contention, making it up to ten or even a hundred times faster. Data analytics frameworks in Spark can also be built using Java, Scala, Python, R, or even SQL.

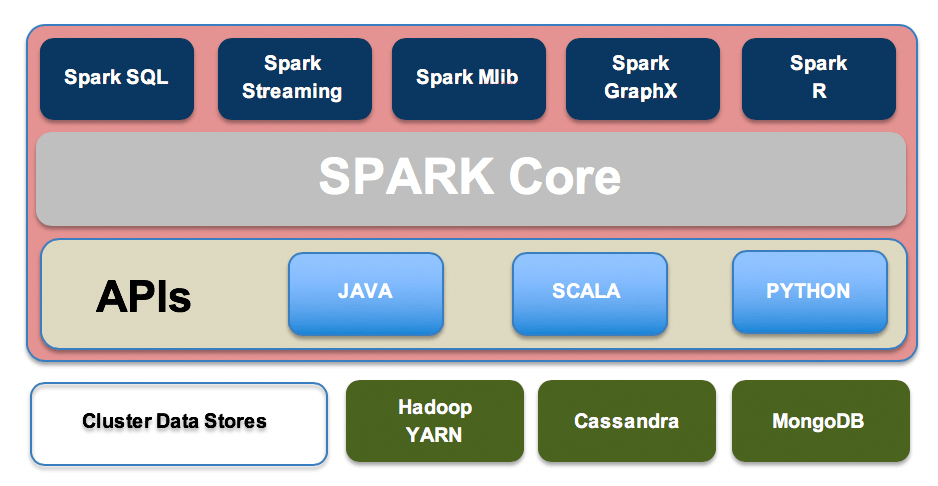

Spark Architecture

Spark’s architecture can vary depending on the requirements. Typically, it includes the Spark core engine, Spark Streaming, Spark SQL, a machine learning library (MLlib), graph processing (GraphX), and data stores like HDFS, MongoDB, and Cassandra.

Spark Features and Capabilities

Lightning-fast Analytics

Spark extracts data from Hadoop and performs analytics in memory. The data is loaded into memory in parallel, in chunks (partitions). The resulting data sets are then pushed to their destination, or they can stay in memory until they are consumed.
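The paragraph above combines three ideas: data is split into chunks, the chunks are processed in parallel, and results can stay in memory until they are consumed. A minimal Python sketch of that flow follows. The partition contents and the `load_partition` function (a stand-in for reading one chunk from Hadoop) are hypothetical, a thread pool plays the role of parallel workers, and a plain dictionary plays the role of the in-memory store; Spark's actual scheduler, executors, and `cache()`/`persist()` machinery are far more sophisticated.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical source data already split into chunks (partitions).
SOURCE_PARTITIONS = {0: [3, 1, 4], 1: [1, 5, 9], 2: [2, 6, 5]}

_cache = {}          # in-memory store for results awaiting consumers
load_calls = []      # records which partitions were read from the source


def load_partition(pid):
    """Stand-in for reading one chunk of data from Hadoop."""
    load_calls.append(pid)
    return SOURCE_PARTITIONS[pid]


def process_partition(pid):
    """Load one partition and compute a partial result (here, a sum)."""
    return sum(load_partition(pid))


def total(cache_key="total"):
    """Compute the total from all partitions in parallel once, then serve it from memory."""
    if cache_key not in _cache:
        with ThreadPoolExecutor(max_workers=3) as pool:
            partials = list(pool.map(process_partition, SOURCE_PARTITIONS))
        _cache[cache_key] = sum(partials)
    return _cache[cache_key]


print(total())   # computed in parallel from the partitions
print(total())   # served from the in-memory cache; no partition is reloaded
```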

Spark Streaming

Spark Streaming is an extension of Spark that can process large volumes of live data from heavily used web sources. Because it can perform advanced analytics, Spark stands out when compared with data streaming tools like Kafka and Flume.

Support for Various APIs

Spark supports programming languages such as Java, Python, Scala, and R that are immensely popular in the big data and data analytics spaces, so data analytics frameworks can be written in any of them.

Massive Data Processing Capacity

As mentioned earlier, advanced data analytics often need to be performed on massive data sets. Before Spark came along, such analytics were performed with the MapReduce methodology. Spark supports not only the MapReduce programming model (map and reduce operations) but also SQL-based data extraction, so applications that need to extract data from huge data sets can use Spark for faster analytics.

Integration with Data Stores and Tools

Spark can be integrated with various data stores running on Hadoop, such as Hive and HBase, and it can also extract data from NoSQL databases like MongoDB. Spark pulls data from the data stores once and then performs analytics on the extracted data set in memory, unlike applications that run their analytics inside the database.
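Because Spark pulls data out of each store and works on it in memory, data from different systems can be combined in a single job. The Python sketch below assumes two hypothetical sources: delimited text standing in for a Hive table of orders, and JSON documents standing in for a MongoDB collection of customers. It loads each source once and joins them in memory by customer id. Only the standard library is used; in Spark itself this would typically be a DataFrame join.

```python
import csv
import io
import json

# Hypothetical rows from a Hive-like table (delimited text).
ORDERS_CSV = "order_id,customer_id,amount\n1,c1,20\n2,c2,35\n3,c1,25\n"

# Hypothetical documents from a MongoDB-like collection.
CUSTOMERS_JSON = '[{"_id": "c1", "name": "Ada"}, {"_id": "c2", "name": "Lin"}]'


def load_orders(text):
    """Read the delimited source once into memory."""
    return [
        {"customer_id": r["customer_id"], "amount": int(r["amount"])}
        for r in csv.DictReader(io.StringIO(text))
    ]


def load_customers(text):
    """Read the document source once and index it by id."""
    return {doc["_id"]: doc["name"] for doc in json.loads(text)}


def revenue_by_customer(orders, customers):
    """Join the two in-memory data sets and aggregate revenue per customer name."""
    totals = {}
    for order in orders:
        name = customers.get(order["customer_id"], "unknown")
        totals[name] = totals.get(name, 0) + order["amount"]
    return totals


orders = load_orders(ORDERS_CSV)
customers = load_customers(CUSTOMERS_JSON)
print(revenue_by_customer(orders, customers))
```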

Spark’s extension, Spark Streaming, can integrate smoothly with Kafka and Flume to build efficient and high-performing data pipelines.

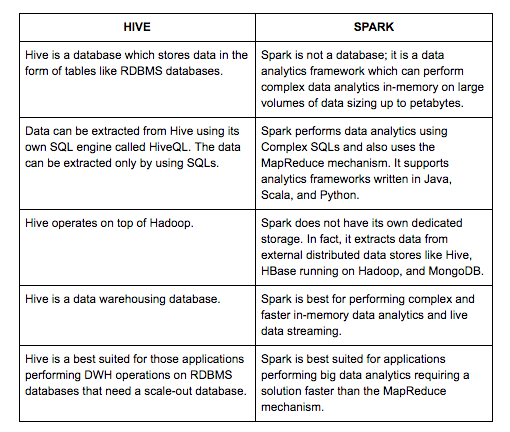

Differences Between Hive and Spark

Hive and Spark are different products built for different purposes in the big data space. Hive is a distributed database, and Spark is a framework for data analytics.

Differences in Features and Capabilities

Conclusion

Hive and Spark are both immensely popular tools in the big data world. Hive is the best option for performing data analytics on large volumes of data using SQL. Spark, on the other hand, is the best option for complex, in-memory analytics and stream processing, and it provides a faster, more modern alternative to MapReduce.

Published at DZone with permission of Daniel Berman, DZone MVB. See the original article here.

