Compliance Automated Standard Solution (COMPASS), Part 4: Topologies of Compliance Policy Administration Centers

In this post, we analyze the Compliance Policy Administration Center (CPAC), which provides several governance and operational functions.

(Note: A list of links for all articles in this series can be found at the conclusion of this article.)

In the last post (part 3) of this multi-part blog series, we introduced methodologies and technologies that let the various compliance personas collaboratively author compliance artifacts such as regulation catalogs, baselines, profiles, and system security plans. These artifacts are automatically translated into code to support enterprise-wide continuous compliance readiness processes in regulated environments, in an automated and scalable manner. They aim to connect the regulatory and standards controls with the product vendors and service providers whose products are expected to adhere to those regulations and standards. The compliance as code data model we used is the NIST Open Security Controls Assessment Language (OSCAL) compliance standard framework.

Our compliance context here refers to the full spectrum of conformance from official regulatory compliance standards and laws, to internal enterprise policies and best practices for security, resiliency, and software engineering aspects.

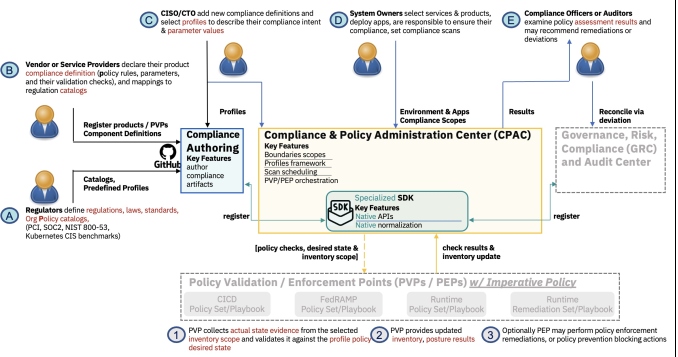

In this post, we analyze the Compliance Policy Administration Center (CPAC), which provides several governance and operational functions. First, a CPAC allows the user to register, select, and configure their required regulation compliance artifacts (e.g., catalogs, profiles, component mappings to controls and policies, systems policy desired state, assessment plans, etc.), such as those described in our past post (part 3). It also allows the user to deploy, schedule, and run the corresponding technical policy checks on policy validation or enforcement points (PVPs or PEPs). The PVPs/PEPs measure and optionally enforce the desired compliance in the regulated enterprise and produce posture results. A CPAC may also allow the user to demarcate the inventory scopes or boundaries to which particular regulations apply and thus trigger specific checks in the PVPs/PEPs for those selected systems. Finally, a CPAC may allow the user to produce audit reports that aggregate the systems' evidence or the PVPs/PEPs posture results into the overall enterprise-wide regulation posture for further risk analysis.

We explore below various requirements and approaches to the CPAC design. The need for different designs reflects how general or specialized the systems are whose compliance posture the CPAC is expected to handle. The more specialized the center (e.g., a center dedicated to managing compliance for IaaS or for Kubernetes clusters), the more tailored the center can be to accommodate the native interfaces and artifacts handled by that environment (e.g., well-known configuration APIs, existing support for policy templates and distribution). On the other hand, the more diverse the products for which the CPAC is expected to manage compliance (e.g., a cloud environment with its full stack of IaaS, CaaS, PaaS, and SaaS services), the more difficult it becomes to accommodate heterogeneous native interfaces and the more evident the need for a standardized approach to represent and handle compliance information. Obtaining the compliance posture across all layers of the software stack in a continuous manner, however, is a fundamental requirement to achieve continuous security and audit readiness.

We start by introducing a couple of tailored CPAC topologies that support specialized forms of policy. In one case, we deal with declarative policies which list the artifacts of a system’s desired state, as in the case of Open Cluster Management policy management for the Kubernetes PVP/PEP policy engines. In the second case, we deal with imperative policies which consist of a step-by-step sequence of commands to validate or enforce the system’s desired state, as in the case of the Auditree Python checks or Ansible Python policy modules.

Finally, we present how to standardize a CPAC interface to suit the requirements for cloud environments and how such a CPAC can orchestrate diverse PVPs/PEPs formats across heterogeneous cloud services and products.

1. Compliance as Reference in the Technical Policy as Code: Lesson Learned

There is an important lesson for us to learn from the Compliance as Code projects. A few years back, when the first attempts at digitizing compliance started, the pioneering Compliance as Code approach was to label technical policies with compliance regulation metadata. As illustrated below, the metadata for a couple of regulations is added as references to the Kubernetes OAuth inactivity timeout policy.

    prodtype: ocp4

    title: "Configure OAuth server so that tokens expire after a set period of inactivity"

    description: |-
        <p>
        You can configure OAuth tokens to expire after a set period of
        inactivity. By default, no token inactivity timeout is set.
        </p>
        [...]
        For more information on configuring the OAuth server, consult the
        OpenShift documentation:
        {{{ weblink(link="https://docs.openshift.com/container-platform/4.7/authentication/configuring-oauth-clients.html") }}}
        </p>

    [...]

    references:
        nerc-cip: CIP-004-6 R2.2.3,CIP-007-3 R5.1,CIP-007-3 R5.2,CIP-007-3 R5.3.1,CIP-007-3 R5.3.2,CIP-007-3 R5.3.3
        nist: AC-2(5),SC-10

    identifiers:
        cce@ocp4: CCE-83511-6

    ocil_clause: 'OAuth server inactivity timeout is not configured'

    [...]

    template:
        name: yamlfile_value
        vars:
            ocp_data: 'true'
            filepath: /apis/config.openshift.io/v1/oauths/cluster
            yamlpath: ".spec.tokenConfig.accessTokenInactivityTimeout"
            check_existence: "only_one_exists"
            xccdf_variable: var_oauth_inactivity_timeout

Figure 1: OpenShift Kubernetes Policy with embedded Compliance Metadata

As regulations are frequently re-interpreted and their mappings to technical controls accumulate hundreds of changes, it became obvious that maintaining the compliance metadata as part of the technical policy code body was not practical. Moreover, when an organization grows from following one or two regulations to tens of regulations, this approach does not scale either: it becomes hard to maintain the thousands of regulation controls across the Kubernetes technical policies. A CPAC would struggle to track compliance that is embedded in policy this way.

2. Compliance as Code for Declarative Technical Policies

More recently, the industry moved to an approach where compliance regulation controls are managed outside the body of technical policies. This allows extensive compliance changes to be managed without impacting the technical policies, which change much less frequently. Figure 2 below illustrates a NIST compliance standard (FedRAMP) represented as code in its own dedicated file, where the policy exemplified in the previous section, the "OAuth inactivity timeout policy," is mapped as a supporting technical control to its relevant NIST controls.

For small to medium businesses, which are required to comply with a reduced number of controls and regulations and may have only a couple of people dedicated to managing compliance, a CPAC can support compliance authoring with all the compliance items (catalog, profiles, mapping to rules, and metadata) in one artifact (Figure 2). However, to scale to the numerous and highly customized regulation parameterizations found in large enterprises, compliance authoring can be outsourced (Figure 3) to a tool specialized in collaborative compliance authoring like Trestle, introduced and detailed in part 3 of this series. The CPAC can then focus on providing technical policy governance support, PVP/PEP management, and posture aggregation and reporting. In this way, the CPAC leverages compliance authoring that is kept separate from the coding of technical policy and focuses on governing the customization and assessment of the regulation's baseline.

Note that the example in Figure 2, from the Compliance as Code open-source project, names "NIST" a Policy, meaning the large body of NIST 800-53 capital "P" Policy available as text in PDF files, as opposed to the small "p" technical policies, which this example calls rules and which are implemented as "policy as code."

    policy: NIST
    title: Configuration Recommendations for the OpenShift Container Platform
    id: nist_ocp4
    version: Revision 4
    source: https://www.fedramp.gov/assets/resources/documents/FedRAMP_Security_Controls_Baseline.xlsx

    [...]

      - id: AC-2(5)
        status: automated
        notes: |-
          To meet the requirements, OpenShift must be configured
          to use centralized authentication via an IDP. The account
          inactivity timeout can be either configured per OAuth client
          as described here:
          https://docs.openshift.com/container-platform/latest/authentication/configuring-oauth-clients.html#oauth-token-inactivity-timeout_configuring-oauth-clients
          [...]
        rules:
          - idp_is_configured
          - oauth_or_oauthclient_inactivity_timeout
        description: |-
          The organization requires that users log out when [Assignment: organization-defined time-period of expected inactivity or description of when to log out].
          Supplemental Guidance: Related control: SC-23.
          AC-2 (5) [inactivity is anticipated to exceed Fifteen (15) minutes]
          AC-2 (5) Guidance: Should use a shorter timeframe than AC-12.
        title: >-
          AC-2(5) - ACCOUNT MANAGEMENT | INACTIVITY LOGOUT
        levels:
          - high
          - moderate
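
Keeping the control-to-rule mapping in its own file, as in the listing above, means the question "which controls does this rule support?" can be answered by inverting that mapping rather than by editing every rule. The following is a minimal Python sketch of that inversion. It assumes the control file has already been parsed into a list of dictionaries with `id` and `rules` keys; the sample data (including the `SC-10` entry) and the function name `rules_to_controls` are illustrative assumptions, not part of the Compliance as Code project.

```python
from collections import defaultdict

# Hypothetical, already-parsed control entries. Only the `id` and `rules`
# keys mirror fields shown in the listing; the SC-10 mapping is illustrative.
controls = [
    {"id": "AC-2(5)", "rules": ["idp_is_configured",
                                "oauth_or_oauthclient_inactivity_timeout"]},
    {"id": "SC-10", "rules": ["oauth_or_oauthclient_inactivity_timeout"]},
]


def rules_to_controls(control_entries):
    """Invert control -> rules into rule -> sorted list of control ids."""
    index = defaultdict(set)
    for entry in control_entries:
        for rule in entry.get("rules", []):
            index[rule].add(entry["id"])
    return {rule: sorted(ids) for rule, ids in index.items()}


index = rules_to_controls(controls)
print(index["oauth_or_oauthclient_inactivity_timeout"])  # ['AC-2(5)', 'SC-10']
```

With this layout, adding a regulation only adds entries to the mapping file; the technical rule itself stays unchanged.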

Figure 3: Compliance Policy Administration Center Specialized for Kubernetes Policies

A CPAC solution for the specialized Kubernetes policies in our example above is Red Hat Advanced Cluster Management (ACM), which is detailed in this blog. ACM has taken the first step to separate compliance controls and desired state metadata from the technical policy rules and provides a tool called PolicyGenerator, which combines the two to configure the policies deployed to the Kubernetes PEPs.

ACM conveys the desired state to the PVP/PEP engines using declarative policies generated in CRD format, based on engine-specific policy templates, with the configuration of those policies embedded in the policy itself. ACM covers multiple Kubernetes policy engines such as Gatekeeper/OPA, Kyverno, Kube-bench, etc. The posture results from the Kubernetes PVPs/PEPs are translated back into the standard Policy Report Custom Resource Definition (CRD) for policy violation reports. The Kubernetes Policy Report CRD is the first attempt to abstract diverse policy reports into an aggregated, normalized report, including the OSCAL formatting.
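
The idea of translating engine-specific results into one normalized report can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two input shapes (a list of violating resources, and a pass/fail flag per resource) and all names such as `NormalizedResult`, `engine-a`, and `engine-b` are hypothetical and do not reproduce the actual output of any policy engine or the Policy Report CRD schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NormalizedResult:
    policy: str
    resource: str
    result: str  # "pass" or "fail"
    source: str  # which engine produced the result


def from_violation_list(engine, policy, resources, violations):
    """Hypothetical engine style A: reports only the violating resources."""
    failing = set(violations)
    return [
        NormalizedResult(policy, r, "fail" if r in failing else "pass", engine)
        for r in resources
    ]


def from_status_map(engine, policy, statuses):
    """Hypothetical engine style B: reports a boolean per resource."""
    return [
        NormalizedResult(policy, r, "pass" if ok else "fail", engine)
        for r, ok in statuses.items()
    ]


def summarize(results):
    """Count normalized results by outcome."""
    summary = {"pass": 0, "fail": 0}
    for r in results:
        summary[r.result] += 1
    return summary


report = (
    from_violation_list("engine-a", "oauth-timeout",
                        ["cluster-1", "cluster-2"], ["cluster-2"])
    + from_status_map("engine-b", "oauth-timeout", {"cluster-3": True})
)
print(summarize(report))  # {'pass': 2, 'fail': 1}
```

Once every engine's output is reduced to the same record type, aggregation no longer needs to know which engine produced a result.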

The SIG-Security Policy Management White Paper provides a comprehensive overview of concepts and best practices architecture for Kubernetes Policy Management. The Open Cluster Management CNCF project includes building blocks needed to implement this architecture, including templatized policies for edge scalability, secure distribution of secrets to clusters, GitOps-based deployment fostering collaboration among multiple IT personas, PolicyGenerator to auto import existing Kubernetes configuration and policies for policy engines, and PolicySets for grouping of policies to facilitate policy management and governance for declarative policies.

3. Compliance as Code for Imperative Policies

Let us have a look at the management of compliance in the case of imperative policies, such as those developed for Auditree Python checks or Ansible Python playbooks. As in the case of the declarative policies, the compliance management can be outsourced as illustrated in Figure 4 to a tool specialized in collaborative compliance authoring like Trestle, while the CPAC can focus on the policy and PVP/PEP management, and the posture aggregation and reporting.

The Auditree PVP approach is detailed in "Compliance Automation via Auditree," while the Ansible documentation is available here. In both cases, the policy validation is done by tests implemented in Python (imperative language). The tests compare a desired state to the systems’ actual state (aka, evidence) collected by “fetchers” and the resulting posture results are stored locally in the tools’ repositories.

PVPs like Auditree and Ansible handle the desired state implicitly through collections of checks/actions, or explicitly through configuration files governed locally in their repositories. They can cover any type of policy validation for which actual state evidence can be programmatically collected (e.g., protecting network boundaries, matching inventory, users' RBAC profiles, standard documentation). This is possible because these types of PVPs hold both the means to collect any actual state evidence (e.g., the set of public gateways attached to subnets, inventory, lists of users, document templates) and the means to compare it to the policy desired state. The policy engines in the previous section, by contrast, are limited to collecting and evaluating only the configuration policies supported by their engine-specific policy capabilities.
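
The fetcher-plus-check pattern described above can be sketched in plain Python. This is not the Auditree or Ansible API: the function names, the evidence shape, and the allow-list are hypothetical, and a real fetcher would call a cloud provider API instead of returning canned data. The example uses the "public gateways attached to subnets" evidence mentioned in the text.

```python
def fetch_subnets():
    """Hypothetical fetcher: returns canned evidence for illustration only."""
    return [
        {"name": "app-subnet", "public_gateway": None},
        {"name": "dmz-subnet", "public_gateway": "pgw-1"},
        {"name": "db-subnet", "public_gateway": "pgw-2"},
    ]


# Desired state, governed here as a simple configuration value (assumed).
ALLOWED_PUBLIC_SUBNETS = {"dmz-subnet"}


def check_public_gateways(evidence, allowed):
    """Return the subnets that have a public gateway but are not allowed one."""
    return sorted(
        s["name"]
        for s in evidence
        if s["public_gateway"] is not None and s["name"] not in allowed
    )


violations = check_public_gateways(fetch_subnets(), ALLOWED_PUBLIC_SUBNETS)
posture = "fail" if violations else "pass"
print(posture, violations)  # fail ['db-subnet']
```

Because the check is ordinary code, the same structure works for any evidence that can be collected programmatically, which is the flexibility the imperative approach offers over engine-specific configuration policies.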

In the case of imperative policies, in addition to the configuration of the policy desired state intended for a given regulation, the role of a CPAC is to convey the selection of any relevant checks or actions in playbooks associated with that regulation beyond configuration. This holistic assessment plan is conveyed to the specialized PVP to validate the compliance of the environment's systems and capabilities at each scan. The scan results sent back to the CPAC represent the individual compliance postures of systems and processes. To be meaningfully aggregated into a consolidated policy status report, these postures must follow a well-defined template. Since we are still in the case of a CPAC for a specialized PVP, i.e., Auditree or Ansible, the CPAC can adopt the formats exposed by those tools.

Figure 4: Compliance Policy Administration Center Specialized for Imperative Policy Platforms, e.g., Ansible Automation Platform or Auditree

4. Compliance as Code for Cloud Heterogeneous Policies and Policy Validation Tools

As we reach the evaluation of a complex environment (such as in the case of cloud with its full-stack IaaS, PaaS, and SaaS, involving a diverse set of declarative policy engines, imperative PVPs/PEPs, custom policies and checks, all with their individual native interfaces which the CPAC needs to accommodate), the only workable approach is to introduce a standard. And that is what the NIST Open Security Controls Assessment Language (OSCAL) targets with its compliance as code standard artifact schemas.

Figure 5: Compliance Policy Administration Center for Cloud and its Standard Exchange Protocol for Policy Validation/Enforcement Points Orchestration

The role of a CPAC for the cloud is to provide an aggregated assessment of enterprise-wide compliance through the orchestration of various PVPs/PEPs. It governs the regulation profiles and their associated checks, which those PVPs/PEPs run against the regulated environment's actual state to produce the scan results needed for aggregation into the overall compliance posture.

To facilitate the PVPs/PEPs orchestration and policy governance, Trestle enables the authoring of PVP/PEP-related artifacts in the compliance context, such as regulation catalogs (e.g., CIS-benchmarks, NIST 800-53), profiles of desired controls and states (e.g., CIS Kubernetes node profile, FedRAMP High, Moderate and Low), component definitions, which map those controls to technical policy rules and PVP checks (e.g., OpenShift CIS-benchmarks rules and their Compliance Operator policy checks, or Organization Boundary Network Protection rules and their Auditree policy checks), and assessment plans, all in the NIST standard compliance as code OSCAL language.

Once the compliance and security personas have completed the setup of the above pre-defined compliance artifacts in Trestle and developed the associated policy checks in their respective PVPs/PEPs, they use the CPAC to register these artifacts and make them available to the cloud customers. The CPAC would enable those cloud customers, owners of systems and services, to fine-tune the registered profiles and assessment plans for their particular regulatory requirements, environment scopes, and scan schedules. We illustrate in Figure 5 a set of steps (EP1-EP4) that a CPAC may leverage as a standard Exchange Protocol to achieve (EP1) policy artifacts registration, (EP2) assessment plan distribution to PVPs/PEPs, (EP3) compliance scan result retrieval from the various PVPs/PEPs after the system owners have completed the setup, and (EP4) registration of enterprise-wide regulation posture reports into GRC (Governance, Risk, and Compliance) Centers or Audit Agencies Centers for further business risk analysis or Authorization to Operate procedures.
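
To make the four steps more concrete, here is a minimal Python sketch of how an orchestrator might walk through EP1 to EP4. It is an illustration under stated assumptions, not the Exchange Protocol itself: the classes `Cpac` and `StaticPvp`, their method names, and the check identifiers are all hypothetical, and plain dictionaries stand in for the OSCAL artifacts a real implementation would exchange.

```python
class StaticPvp:
    """Hypothetical PVP stub that returns canned results for the checks it knows."""

    def __init__(self, name, canned):
        self.name = name
        self._canned = canned
        self._plan = []

    def load_plan(self, checks):
        # Receiving side of EP2: keep only the checks this PVP can run.
        self._plan = [c for c in checks if c in self._canned]

    def scan(self):
        # Answering side of EP3: return results for the loaded plan.
        return {c: self._canned[c] for c in self._plan}


class Cpac:
    def __init__(self, pvps):
        self.pvps = list(pvps)
        self.plans = {}  # profile name -> list of check ids

    def register_plan(self, profile, checks):  # EP1: artifact registration
        self.plans[profile] = list(checks)

    def distribute(self, profile):  # EP2: plan distribution
        for pvp in self.pvps:
            pvp.load_plan(self.plans[profile])

    def collect(self):  # EP3: scan result retrieval
        results = {}
        for pvp in self.pvps:
            results.update(pvp.scan())
        return results

    def report(self, profile):  # EP4: posture report (here, just a dict)
        results = self.collect()
        missing = [c for c in self.plans[profile] if c not in results]
        return {"profile": profile, "results": results, "not_assessed": missing}


cpac = Cpac([
    StaticPvp("k8s-engine", {"oauth_timeout": "pass"}),
    StaticPvp("python-checks", {"network_boundary": "fail"}),
])
cpac.register_plan("moderate",
                   ["oauth_timeout", "network_boundary", "encrypt_at_rest"])
cpac.distribute("moderate")
summary = cpac.report("moderate")
print(summary)
```

The `not_assessed` list is a design choice of this sketch: it makes visible which planned checks no PVP reported on, so a gap in coverage is not mistaken for a pass.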

By taking the explicit step to represent what controls each PVP/PEP assesses, we can map back the policy assessment results to the compliance standard context. With all the results in a standard format such as OSCAL, it is now possible to render the overall compliance posture end-to-end for all layers of the software stack, at least for the controls being monitored. This in turn helps to maintain continuous security and audit readiness.
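
Mapping assessment results back to the compliance context can be sketched as a simple roll-up from checks to controls. The Python below is an illustration only: the aggregation rule (a control fails if any of its checks fails, and passes only if all of them pass) is an assumption of this sketch, and the control-to-check mapping is hypothetical sample data rather than an official mapping.

```python
def control_posture(control_to_checks, check_results):
    """Roll check results up to controls.

    Assumed rule: any failing check fails the control; the control passes
    only when every mapped check passed; otherwise it is not assessed.
    """
    posture = {}
    for control, checks in control_to_checks.items():
        outcomes = [check_results.get(c) for c in checks]
        if any(o == "fail" for o in outcomes):
            posture[control] = "fail"
        elif outcomes and all(o == "pass" for o in outcomes):
            posture[control] = "pass"
        else:
            posture[control] = "not-assessed"
    return posture


# Hypothetical mapping and results, for illustration only.
mapping = {
    "AC-2(5)": ["idp_is_configured", "oauth_or_oauthclient_inactivity_timeout"],
    "SC-7": ["network_boundary"],
    "SC-28": ["encrypt_at_rest"],
}
results = {
    "idp_is_configured": "pass",
    "oauth_or_oauthclient_inactivity_timeout": "pass",
    "network_boundary": "fail",
}
posture = control_posture(mapping, results)
print(posture)
# {'AC-2(5)': 'pass', 'SC-7': 'fail', 'SC-28': 'not-assessed'}
```

The explicit "not-assessed" state reflects the caveat in the text that the posture is only known for the controls being monitored.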

One last comment on the integration of CPAC with the software lifecycle, particularly on the aim of providing posture assessment pre- as well as post-deployment: the agreed position in the industry for writing policies is to "write once and use multiple times." This means writing one single implementation of the policy check and using it both in the CI/CD pipeline run and at runtime, whether periodically or upon a UI or CLI-based configuration change. In the CI/CD pipeline, pre-deployment, we assess the posture against deployment artifacts, with the option to block a deployment if it is not compliant. At runtime and upon UI or CLI-based configuration changes, we assess the posture of the systems and services already deployed in the regulated environment. Reusing the same check implementation logic, "written once," across the software lifecycle ensures that the various posture results for one check are semantically equivalent and that no ambiguity clouds the interpretation of a "pass" or "fail" in the pre- or post-deployment context. The evidence available for the checks may differ pre- and post-deployment, in which case an evidence abstraction needs to be provided to truly have the same check logic.
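
The evidence abstraction mentioned above can be sketched as two small adapters feeding one check. In this Python illustration, the check logic is written once and receives a normalized evidence dictionary; the adapter functions, the shapes of the pre-deployment manifest and the runtime payload, and the 15-minute threshold are all hypothetical assumptions chosen for the example.

```python
MAX_TIMEOUT_SECONDS = 15 * 60  # illustrative threshold for this sketch


def check_inactivity_timeout(evidence, max_seconds=MAX_TIMEOUT_SECONDS):
    """The single check implementation, used pre- and post-deployment."""
    timeout = evidence.get("inactivity_timeout_seconds")
    if timeout is None or timeout <= 0:
        return "fail"
    return "pass" if timeout <= max_seconds else "fail"


def evidence_from_manifest(manifest):
    """Pre-deployment adapter: hypothetical rendered deployment artifact."""
    value = manifest.get("oauth", {}).get("inactivity_timeout_seconds")
    return {"inactivity_timeout_seconds": value}


def evidence_from_runtime(payload):
    """Post-deployment adapter: hypothetical API payload from a live system."""
    for item in payload.get("items", []):
        if item.get("setting") == "inactivity_timeout_seconds":
            return {"inactivity_timeout_seconds": item.get("value")}
    return {"inactivity_timeout_seconds": None}


pre_result = check_inactivity_timeout(
    evidence_from_manifest({"oauth": {"inactivity_timeout_seconds": 600}}))
post_result = check_inactivity_timeout(
    evidence_from_runtime(
        {"items": [{"setting": "inactivity_timeout_seconds", "value": 1800}]}))
print(pre_result, post_result)  # pass fail
```

Only the adapters differ between the pipeline and runtime, so a "pass" or "fail" means the same thing in both contexts.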

We are referring here to regulation controls and technical policy checks which are applicable both pre-deployment, to deployment artifacts like Infrastructure as Code Terraform plans or application deployment Helm charts, and post-deployment, to runtime evidence like API payloads and pod configuration. Of course, for controls that are applicable to CI/CD pipelines only, like "use unit tests" or "run static scans," the checks are implemented and used specifically for the CI/CD pipeline. Similarly, for controls that are applicable at runtime only, like "database sample entries are encrypted," the checks are run only against the deployed systems and services.

In the case a CPAC covers the full software lifecycle, providing posture for pre- and post-deployment, the CPAC interlock with the PVPs/PEPs covering the various stages follows the same topology patterns as presented in the previous sections. An additional capability such a CPAC may provide is the correlation between the pre- and post-deployment assessment results for each policy. This capability is critical in case of policy failure to determine if a runtime remediation can proceed based on the original pipeline or if the pipeline itself was at fault.
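
The correlation capability described above could look like the following Python sketch. It assumes, hypothetically, that the CPAC holds one pre-deployment and one post-deployment result per policy as plain dictionaries; the function name `correlate`, the policy names, and the wording of the findings are illustrative.

```python
def correlate(pre, post):
    """Classify runtime failures by comparing them with pipeline results."""
    findings = {}
    for policy in sorted(set(pre) | set(post)):
        before, after = pre.get(policy), post.get(policy)
        if after != "fail":
            continue  # only runtime failures need a remediation decision
        if before == "pass":
            findings[policy] = "runtime drift: remediate from original pipeline"
        elif before == "fail":
            findings[policy] = "pipeline at fault: fix deployment artifacts"
        else:
            findings[policy] = "no pre-deployment result: investigate"
    return findings


pre_deployment = {"oauth_timeout": "pass", "network_boundary": "fail"}
post_deployment = {"oauth_timeout": "fail", "network_boundary": "fail",
                   "encrypt_at_rest": "fail", "idp_is_configured": "pass"}
findings = correlate(pre_deployment, post_deployment)
for policy, finding in findings.items():
    print(policy, "->", finding)
```

A policy that passed in the pipeline but fails at runtime suggests drift that a redeployment can repair, whereas a policy that failed in both places points back at the pipeline itself.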

Conclusion

In this blog post, we analyzed three approaches to Compliance Policy Administration Centers. Two were tailored CPAC topologies that support specialized forms of policy, as in the case of Open Cluster Management policy management for the Kubernetes PVP/PEP policy engines, and as in the case of Auditree and Ansible Python policy modules. The third CPAC topology was for cloud environments and the attempt to accommodate the generic case of PVPs/PEPs with diverse native formats across heterogeneous cloud services and products. The solution to orchestrate those various PVPs/PEPs was an Exchange Protocol based on the NIST Open Security Controls Assessment Language (OSCAL), which provides compliance as code standard schemas.

The CPAC approaches presented here do not have to be exclusive: they can be used in hybrid ways in your compliance automation solution. For instance, a hierarchical approach will allow a CPAC PVPs/PEPs orchestrator of type cloud to work together via standard interfaces (Exchange Protocol-like) with dedicated CPACs which in turn natively interface with their specialized PVPs/PEPs.

What’s Coming Next

In our next blog posts, we plan to provide you with detailed coverage on authoring compliance policies that go deeper than configuration management. These complex policies are the subject of the PVPs/PEPs presented in our second type of Compliance Policy Administration Center.

We also plan to dedicate a future blog post to the Exchange Protocol introduced in our third type of Compliance Policy Administration Center to address the cloud standardization of heterogeneous PVPs/PEPs.

Learn More

If you would like to learn about Kubernetes policy management presented in our first type of Compliance Policy Administration Center, why policy management is necessary for security and automation of Kubernetes clusters and workloads, and how Kubernetes policies are implemented, check out our Kubernetes Policy Management White Paper.

Below are the links to other articles in this series:

- Compliance Automated Standard Solution (COMPASS), Part 1: Personas and Roles

- Compliance Automated Standard Solution (COMPASS), Part 2: Trestle SDK

- Compliance Automated Standard Solution (COMPASS), Part 3: Artifacts and Personas

- Compliance Automated Standard Solution (COMPASS), Part 5: A Lack of Network Boundaries Invites a Lack of Compliance

- Compliance Automated Standard Solution (COMPASS), Part 6: Compliance to Policy for Multiple Kubernetes Clusters

- Compliance Automated Standard Solution (COMPASS), Part 7: Compliance-to-Policy for IT Operation Policies Using Auditree
