Ensuring Configuration Consistency Across Global Data Centers

Global data centers need consistent configurations, but engineering teams in different regions often fall out of alignment. This paper explores the problem, existing solutions, and a proposed approach.

Abstract

Today, nearly every large company operates several data centers distributed across the globe. Each of these data centers hosts a variety of infrastructure services such as MySQL, Redis, Hive, and message queues.

For scalability, and in keeping with the DRY (Don't Repeat Yourself) principle in system design, the same codebase should be used across all regions. However, the engineers who write and maintain this code are often spread across different geographies, so teams are not always aligned on system configurations, even within the same region. This misalignment leads to configuration discrepancies across data centers.

Such inconsistencies can cause serious issues, from failed API calls to legal compliance violations. This paper introduces the problem, discusses current solutions and their limitations, and proposes a unified approach to ensure configuration consistency across regions.

Introduction

Let’s begin with what counts as system configuration. The following list is not exhaustive, but it includes some of the most critical parameters:

- SQL/Hive table schemas

- Redis database TTL values

- Message queue capacity

- Timeout values for HTTP REST APIs and RPC requests

- Dynamic configurations

- Code versions

Currently, engineers maintain these configurations manually, which leaves room for human error: teams may forget to apply certain configurations or apply inconsistent values across regions. In the industry, such mistakes have led to critical system failures, legal issues, sanctions, and penalties.

Real-World Scenarios of Misaligned Configurations

Let’s explore a few real-world scenarios where misaligned configurations across data centers have led to critical issues:

1. Inconsistent Table Schema

-- USA Data Center

column_name STRING

-- Europe Data Center

column_name VARCHAR(8)

If an SQL table in the USA declares a column as STRING but the same table in Europe declares it as VARCHAR(8), any value longer than 8 characters will be rejected in Europe (or, on engines such as Hive, silently truncated). Rejected writes cascade: dependent INSERT and UPDATE operations break, and downstream HTTP or RPC requests ultimately fail. Mismatches like this arise easily when the USA and Europe teams are not aligned.
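A check for this kind of mismatch can be sketched as a column-by-column comparison between two regions. The sketch below assumes each region's schema has already been fetched as a mapping from column name to declared type (the fetching step is not shown), and `diff_schemas` and `normalize_type` are hypothetical helper names, not part of any existing tool.

```python
"""Sketch: report column-level differences between two regional schemas.

Assumption: each schema is a dict mapping column name -> declared type string,
already fetched from the region (the fetching step is not shown).
"""


def normalize_type(declared: str) -> str:
    # Treat "VARCHAR (8)" and "varchar(8)" as the same declaration.
    return declared.replace(" ", "").upper()


def diff_schemas(schema_a: dict[str, str], schema_b: dict[str, str]) -> dict[str, tuple]:
    """Return {column: (type_in_a, type_in_b)} for every column that differs.

    A column missing from one side is reported with None for that side.
    """
    diffs = {}
    for column in sorted(schema_a.keys() | schema_b.keys()):
        type_a = schema_a.get(column)
        type_b = schema_b.get(column)
        norm_a = normalize_type(type_a) if type_a is not None else None
        norm_b = normalize_type(type_b) if type_b is not None else None
        if norm_a != norm_b:
            diffs[column] = (type_a, type_b)
    return diffs


if __name__ == "__main__":
    usa = {"column_name": "STRING"}
    europe = {"column_name": "VARCHAR (8)"}
    print(diff_schemas(usa, europe))  # {'column_name': ('STRING', 'VARCHAR (8)')}
```

Normalizing whitespace and case first avoids flagging purely cosmetic differences, so only real type mismatches reach the owner.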

2. Version Mismatch

USA Data Center: Code Version X (latest)

Europe Data Center: Code Version Y (outdated)

If different code versions are deployed in separate regions, features missing in Europe can hurt the business. Worse, if the missing changes include compliance updates, the company could be in violation of local laws, resulting in penalties, sanctions, or legal complications.
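Version drift can be flagged by comparing each region's deployed version against the newest one. This is a minimal sketch that assumes versions follow a dotted numeric scheme such as `2.4.1` (the article only names them X and Y) and that the deployed version per region is already known; `lagging_regions` is a hypothetical helper.

```python
"""Sketch: find regions running an older code version than the newest region.

Assumption: versions are dotted numeric strings with the same number of
components, e.g. "2.4.1".
"""


def parse_version(version: str) -> tuple[int, ...]:
    # "2.4.1" -> (2, 4, 1); tuples compare element by element, so "1.10.0" > "1.9.0".
    return tuple(int(part) for part in version.split("."))


def lagging_regions(deployed: dict[str, str]) -> dict[str, str]:
    """Return {region: version} for every region behind the newest version."""
    if not deployed:
        return {}
    newest = max(deployed.values(), key=parse_version)
    return {
        region: version
        for region, version in deployed.items()
        if parse_version(version) < parse_version(newest)
    }


if __name__ == "__main__":
    deployed = {"usa": "2.5.0", "europe": "2.3.7", "asia": "2.5.0"}
    print(lagging_regions(deployed))  # {'europe': '2.3.7'}
```

Comparing parsed tuples rather than raw strings matters: as strings, "1.10.0" would sort before "1.9.0".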

3. Timeout Discrepancies

Service A → Service B RPC Call

Timeout Settings:

USA: 500ms

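Europe: 100ms

An RPC or HTTP API call between two services can consistently fail in Europe because of the much lower timeout: if Service B usually needs more than 100 ms to respond, calls that succeed in the USA will time out in Europe. This leads to unreliable service behavior and business disruption in that region.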
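The effect can be reproduced with a small simulation. In the sketch below, Service B is a stand-in coroutine that takes about 200 ms (an assumed latency, not a figure from the article), and each region's timeout is applied with `asyncio.wait_for`; `call_service_b` and `call_with_timeout` are hypothetical names.

```python
"""Sketch: the same call succeeds under a 500 ms timeout and fails under 100 ms.

Assumption: Service B takes ~200 ms to respond; it is simulated with asyncio.sleep.
"""
import asyncio

REGION_TIMEOUTS_MS = {"usa": 500, "europe": 100}
SERVICE_B_LATENCY_S = 0.2  # assumed latency of Service B


async def call_service_b() -> str:
    # Stand-in for a real RPC/HTTP call to Service B.
    await asyncio.sleep(SERVICE_B_LATENCY_S)
    return "ok"


async def call_with_timeout(timeout_ms: int) -> str:
    # wait_for cancels the call and raises TimeoutError once the deadline passes.
    try:
        return await asyncio.wait_for(call_service_b(), timeout=timeout_ms / 1000)
    except asyncio.TimeoutError:
        return "timeout"


async def main() -> dict[str, str]:
    results = {}
    for region, timeout_ms in REGION_TIMEOUTS_MS.items():
        results[region] = await call_with_timeout(timeout_ms)
    return results


if __name__ == "__main__":
    print(asyncio.run(main()))  # {'usa': 'ok', 'europe': 'timeout'}
```

The code and the service are identical in both runs; only the per-region timeout value differs, which is exactly the kind of drift a consistency check should catch.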

4. Missing Redis Dependencies

Europe: Redis DB present

USA: Redis DB missing

Suppose a code commit requires a Redis database that is deployed only in Europe, not in the USA. At runtime in the USA, API calls that need this database will start failing and affect the business.
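A pre-deployment guard for this case can compare the dependencies a commit requires with what each region actually provides. The sketch assumes both are available as sets of resource names; the names (`redis:session-cache`, `mysql:orders`) and the `missing_dependencies` helper are hypothetical.

```python
"""Sketch: detect regions missing a dependency that a code commit requires.

Assumption: required and deployed dependencies are known as sets of names.
"""


def missing_dependencies(
    required: set[str], deployed_by_region: dict[str, set[str]]
) -> dict[str, set[str]]:
    """Return {region: missing names} for regions lacking any required dependency."""
    report = {}
    for region, deployed in deployed_by_region.items():
        missing = required - deployed  # set difference: required but not deployed
        if missing:
            report[region] = missing
    return report


if __name__ == "__main__":
    required = {"redis:session-cache", "mysql:orders"}
    deployed = {
        "europe": {"redis:session-cache", "mysql:orders"},
        "usa": {"mysql:orders"},
    }
    print(missing_dependencies(required, deployed))  # {'usa': {'redis:session-cache'}}
```

Running such a check before rollout turns a runtime failure in the USA into a blocked deployment with a clear reason.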

As these scenarios show, misalignment can have severe consequences. Keeping these configurations identical across regions has several benefits:

- We don’t have to maintain multiple code versions for each region.

- We don’t have to hire redundant engineers in each region to develop region-specific code; development hiring can be done in cost-effective locations.

- We can avoid critical issues that impact business revenue.

- We can avoid unwanted sanctions, penalties, and legal issues.

Existing Solutions and Their Gaps

Several tools and services in the industry attempt to solve the configuration consistency problem, but most address only part of the challenge. Below is a summary of current solutions and their limitations:

1. Configuration Management Tools

These tools help enforce consistent configurations across distributed systems:

- HashiCorp Consul – Provides service discovery, distributed key-value storage, and configuration consistency.

- Apache ZooKeeper – A centralized service for maintaining configuration information and synchronization.

- etcd – A distributed key-value store used for configuration management in cloud environments.

- Ansible, Chef, Puppet, SaltStack – Automate infrastructure provisioning and configuration management.

2. Infrastructure-as-Code (IaC) Solutions

IaC ensures that configurations are defined as code and version-controlled:

- Terraform – Manages infrastructure as code, enabling identical configurations across data centers.

- AWS CloudFormation / Google Deployment Manager – Cloud-native IaC solutions for managing configuration consistency.

3. Centralized Configuration Management Services

These services manage external application configurations across environments:

- Spring Cloud Config – Provides centralized configuration management for Spring-based applications.

- Netflix Archaius – A dynamic configuration management library used at scale.

4. Database Schema and Versioning Tools

Ensure schema changes are versioned and consistent:

- Liquibase / Flyway – Help manage and track database schema changes across environments.

5. Feature Flag Management

Allow deployment of the same code with region-specific behavior:

- LaunchDarkly – Enables teams to dynamically control feature rollout per environment or user group.

- Unleash / Flipper – Lightweight tools for managing feature toggles across deployments.

6. Service Mesh Solutions

Control networking-related configurations and service-to-service communication:

- Istio / Linkerd / Consul Connect – Ensure consistent application of policies like timeouts, retries, and circuit breaking.

While each of these tools addresses part of the problem, there is no unified platform that ensures full configuration consistency (database schemas, timeouts, dependencies, code versions, etc.) across all regions and systems automatically.

Proposed Solution

We propose an industry-standard, unified platform that ensures end-to-end configuration consistency across global data centers. Our solution will focus on the following components:

- Centralized Policy Enforcement – A system that automatically detects and corrects misaligned configurations in real time.

- Automated Drift Detection & Remediation – Continuous monitoring and syncing of configurations across regions to prevent divergence.

- Unified UI & Governance Layer – A central platform for managing all types of configurations—database, application, and infrastructure—across teams and regions, with audit trails and access controls.

Implementation Process

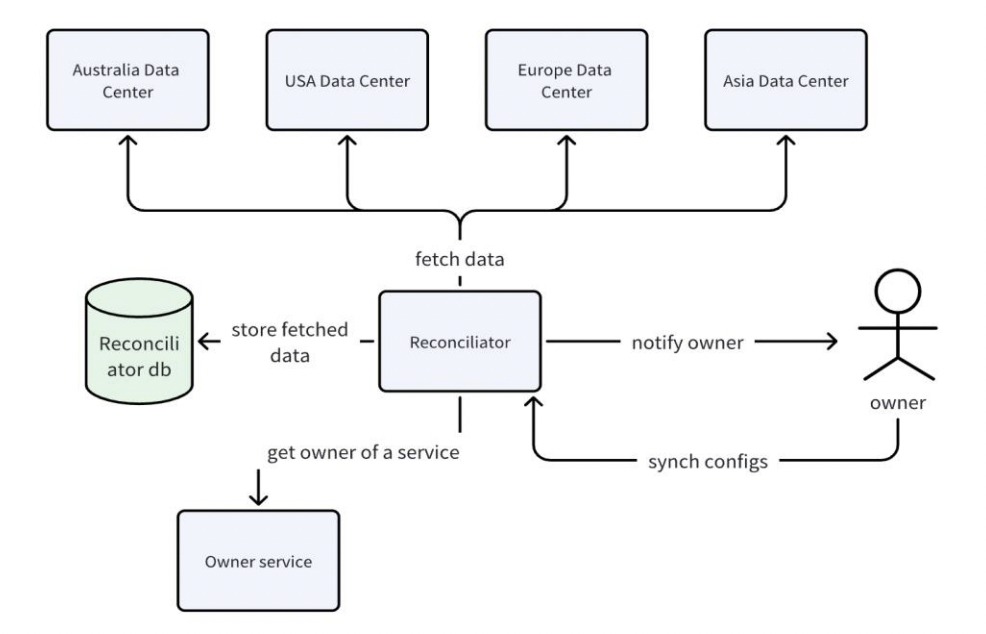

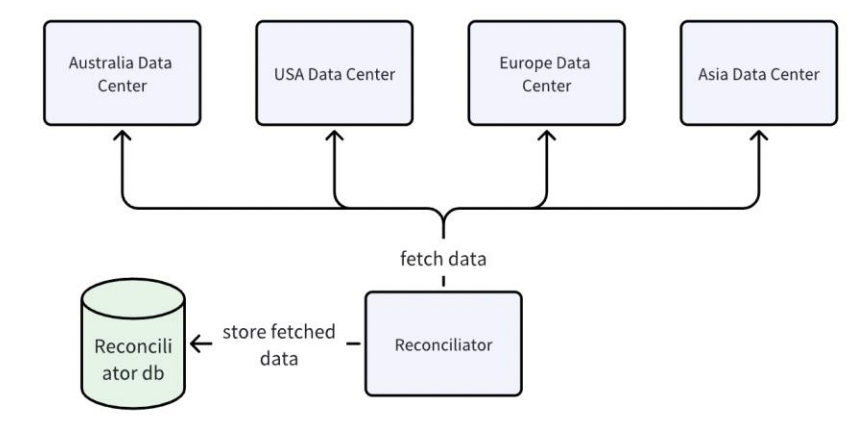

Major cloud environments such as Azure, AWS, and GCP provide cloud APIs that can be used to fetch data center configurations for infrastructure services like SQL databases, message queues, and Redis, as well as service-level timeouts. These APIs allow us to identify configuration differences across regions. The following process outlines how to automate the detection and resolution of such differences across teams and regions:

1. Fetch Configurations – Make cross-regional API calls to the data centers to retrieve configuration data for infrastructure services.

2. Store in a Document-Based Database – Store these configurations at both the service and team levels in a document-based database.

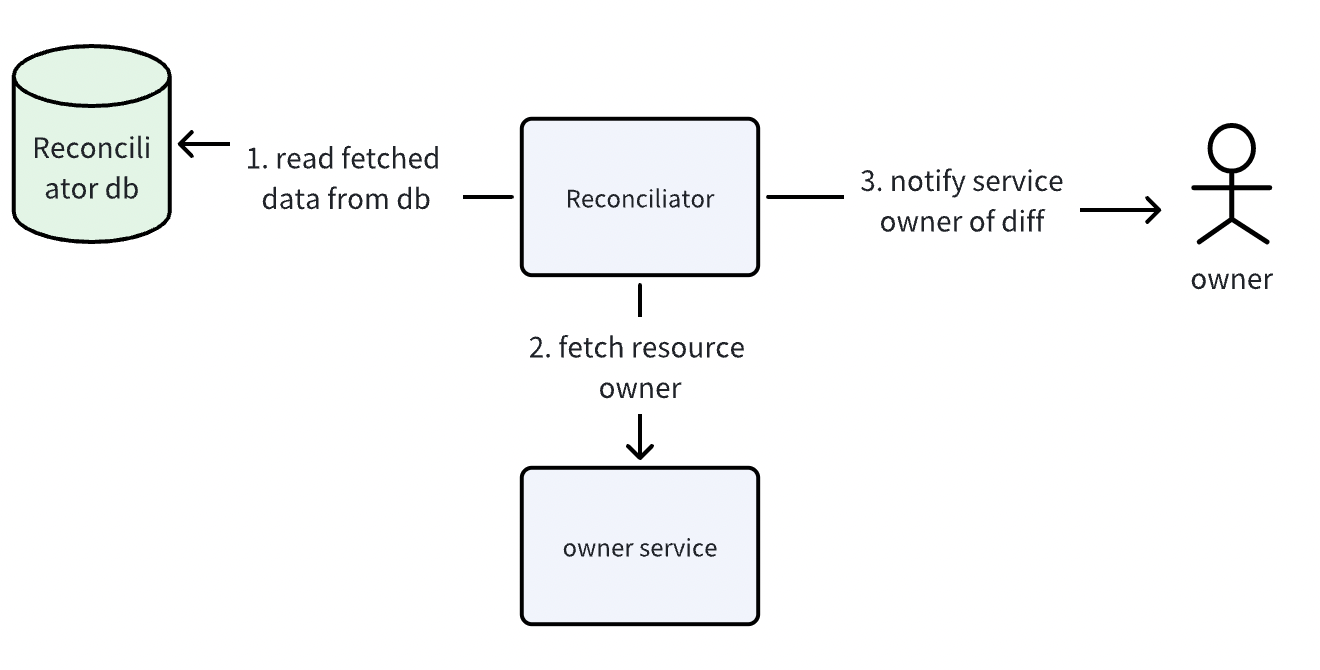

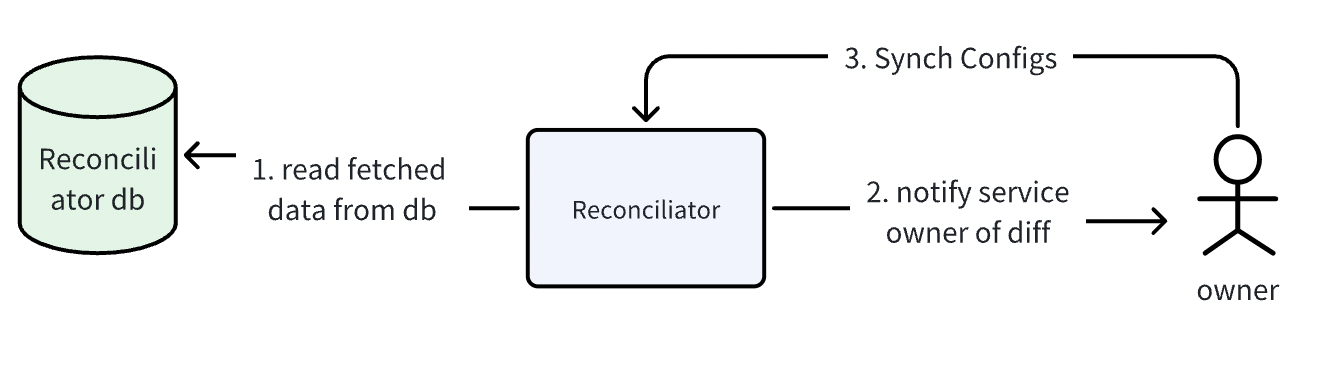

3. Retrieve and Compare – Periodically fetch the configurations from the database and compare them, two at a time.

4. Detect and Notify – If any discrepancies are found, automatically send a notification to the owner of the affected infrastructure service instance.

5. Continuous Monitoring – Repeat steps 1–4 every X seconds (where X is determined by business requirements).

6. Owner Resolution – The service owner reviews the configuration differences, determines the correct values, and syncs them across all regions.

7. Notification Management – Notifications continue until the discrepancy is resolved. If the owner is already working on it, they can mute notifications for a specified period (e.g., 1 hour).

Mute Duration: 1 hour

Condition: Notifications resume if the issue remains unresolved after the mute period.

This solution ensures organization-wide consistency by extending coverage across all teams and infrastructure components, minimizing the risk of critical misconfigurations.
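The Retrieve and Compare, Detect and Notify, and Notification Management steps can be sketched as follows. This is an illustration under stated assumptions, not the deployed system: each region's configuration for one service instance is assumed to be loaded from the document store as a flat dictionary, regions are compared two at a time as described above, and mutes are kept in memory. `find_mismatches`, `MuteTable`, and `should_notify` are hypothetical names, and the actual notification channel is left out.

```python
"""Sketch of pairwise comparison plus notification muting.

Assumptions: each region's config is a flat dict loaded from the document
store; mutes are kept in memory; sending the notification is not shown.
"""
import time
from itertools import combinations

MUTE_SECONDS = 3600  # 1 hour, matching the example mute duration


def find_mismatches(configs: dict[str, dict]) -> list[tuple[str, str, str]]:
    """Compare regions two at a time; return (region_a, region_b, key) triples."""
    mismatches = []
    for region_a, region_b in combinations(sorted(configs), 2):
        cfg_a, cfg_b = configs[region_a], configs[region_b]
        for key in sorted(cfg_a.keys() | cfg_b.keys()):
            if cfg_a.get(key) != cfg_b.get(key):
                mismatches.append((region_a, region_b, key))
    return mismatches


class MuteTable:
    """Tracks until when notifications for a service instance are muted."""

    def __init__(self) -> None:
        self._muted_until: dict[str, float] = {}

    def mute(self, instance_id: str, now: float, seconds: int = MUTE_SECONDS) -> None:
        self._muted_until[instance_id] = now + seconds

    def is_muted(self, instance_id: str, now: float) -> bool:
        return now < self._muted_until.get(instance_id, 0.0)


def should_notify(configs: dict[str, dict], instance_id: str,
                  mutes: MuteTable, now: float) -> bool:
    # Notify while a discrepancy exists, unless the owner muted it recently.
    return bool(find_mismatches(configs)) and not mutes.is_muted(instance_id, now)


if __name__ == "__main__":
    configs = {
        "usa": {"ttl_seconds": 3600, "timeout_ms": 500},
        "europe": {"ttl_seconds": 3600, "timeout_ms": 100},
    }
    mutes = MuteTable()
    now = time.time()
    print(find_mismatches(configs))  # [('europe', 'usa', 'timeout_ms')]
    print(should_notify(configs, "redis-12", mutes, now))  # True
    mutes.mute("redis-12", now)
    print(should_notify(configs, "redis-12", mutes, now + 60))  # False
    print(should_notify(configs, "redis-12", mutes, now + MUTE_SECONDS + 1))  # True
```

Passing `now` explicitly keeps the mute logic deterministic and easy to test; a scheduler running every X seconds would simply call `should_notify` with the current time.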

High-Level Design

- Fetch Configuration Data

GET /v1/fetch/<infra-service>/<team-id>: Fetches the infrastructure configuration for a given service and team.

- Notify Owners of Configuration Mismatches

POST /v1/notify: Notifies the service owners about the configuration differences across regions.

{

"infra-service": 12,

"team-id": "abc",

"configs": {

"usa": "<json-config>",

"europe": "<json-config>",

"asia": "<json-config>",

"australia": "<json-config>"

}

}

- Synchronize Configurations Across Regions

POST /v1/synch: Lets owners decide the final configuration and sync it to all regions.

{

"infra-service": 12,

"team-id": "abc",

"final_configs": "<json-config>"

}
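The request bodies above can be produced and consumed with plain JSON handling. The sketch below builds a `/v1/notify` body with the field names shown and applies a `/v1/synch` body to an in-memory map of regional configs. It does not call any real cloud API, `build_notify_body` and `apply_sync` are hypothetical names, and where the examples use the placeholder `"<json-config>"`, the sketch assumes each config is a JSON object.

```python
"""Sketch: build a /v1/notify body and apply a /v1/synch body locally.

Assumptions: configs are JSON objects (dicts); the regional store is an
in-memory dict standing in for the real per-region cloud API calls.
"""
import json


def build_notify_body(infra_service: int, team_id: str,
                      configs_by_region: dict[str, dict]) -> str:
    # Mirrors the field names of the POST /v1/notify example.
    return json.dumps({
        "infra-service": infra_service,
        "team-id": team_id,
        "configs": configs_by_region,
    })


def apply_sync(body: str, store: dict[str, dict]) -> dict[str, dict]:
    """Return a new store where every region holds final_configs from a /v1/synch body."""
    request = json.loads(body)
    final = request["final_configs"]
    # A real implementation would push `final` to each region via its cloud API.
    return {region: dict(final) for region in store}


if __name__ == "__main__":
    store = {"usa": {"timeout_ms": 500}, "europe": {"timeout_ms": 100}}
    print(build_notify_body(12, "abc", store))
    sync_body = json.dumps(
        {"infra-service": 12, "team-id": "abc", "final_configs": {"timeout_ms": 500}}
    )
    print(apply_sync(sync_body, store))
    # {'usa': {'timeout_ms': 500}, 'europe': {'timeout_ms': 500}}
```

Returning a new store instead of mutating the old one keeps the previous state available, which is useful for the audit trail the governance layer calls for.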

Conclusion

Ensuring system configuration consistency across multiple data centers is critical for business continuity, regulatory compliance, and operational efficiency. While existing solutions address specific aspects of configuration management, they lack a holistic approach to enforcing global uniformity for cloud services. Our proposed solution offers an automated, industry-standard approach to maintaining configuration alignment across distributed systems, reducing manual intervention and minimizing the risk of system failures across all cloud services. It serves as a centralized platform for syncing system configurations across all data centers, cloud services, and teams within an organization. I deployed this solution at a large company in the industry while working there.

Published at DZone with permission of Shubham Jindal.

