Data Federation With CockroachDB and Presto

In this tutorial, I will demonstrate how to access federated CockroachDB data using an external project like Presto.

Join the DZone community and get the full member experience.

Join For FreeMotivation

A customer inquired whether data federation is possible natively in CockroachDB. Unfortunately, CockroachDB does not support features like foreign data wrappers and such. A quick search returned a slew of possibilities and Presto being a prominent choice, sparked my interest.

High-Level Steps

- Install Presto

- Configure Postgresql catalog

- Configure TPCH catalog

- Verify

- Wrap up

Step by Step Instructions

Install Presto

I'm using a Mac and luckily there's a homebrew package available.

brew install presto

Add connectors to /usr/local/opt/prestodb/libexec/etc/catalog/. See:

https://prestodb.io/docs/current/connector.html

To restart prestodb after an upgrade:

brew services restart prestodb

Or, if you don't want/need a background service you can just run:

/usr/local/opt/prestodb/bin/presto-server run

==> Summary

/usr/local/Cellar/prestodb/0.268: 1,967 files, 1.3GB

Remember the location of the connector catalog, we will use it shortly.

Before we can launch Presto, we need to configure JAVA_HOME. I'm on OSX and I used the following directions to set it up.

Configure Postgresql Connector

I am using CockroachDB Serverless for my data source. You can spin a free forever instance using this link

Being Postgresql wire compatible database, we can leverage the Postgresql connector to connect to CockroachDB with Presto, fill in your details and place the postgresql.properties file into /usr/local/opt/prestodb/libexec/etc/catalog/ directory.

connector.name=postgresql

connection-url=jdbc:postgresql://free-tier.gcp-us-central1.cockroachlabs.cloud:26257/yourclustername-5521.tpch?sslmode=verify-full&sslrootcert=/Users/username/.postgresql/root.crt

connection-user=yourusername

connection-password=yourpasswordA few things here, first, variable expansion does not work, you must specify the full path to the root certificate. In CockroachDB Serverless, you also need to prepend the database name tpch with the cluster name. So in other words, you need clustername.tpch and in my case artem-serverless-5521.tpch. Otherwise, you get the following error if you attempt to access the database connector.

Query 20220119_202320_00000_es5a4 failed: FATAL: codeParamsRoutingFailed: missing cluster name in connection string

Configure the Built-In TPCH Connector

Presto comes with a few built-in datasets, TPCH being one of them. I figured for time's sake to leverage what's out there.

Create tpch.properties file and place it in the same location as the postgresql.properties file.

connector.name=tpch

At this point, we can start the Presto server:

presto-server run --server localhost:8088

The port selected 8088 is in case you're using an instance of CockroachDB with DB Console using the default port 8080. We're trying to avoid port-used errors here.

Once the server is up, we can connect to the Presto CLI and pass the catalogs we're going to use during our session:

presto --server localhost:8088 --catalog postgresql,tpch

Let's browse the tables from within Presto:

show schemas from tpch;

Schema

--------------------

information_schema

sf1

sf100

sf1000

sf10000

sf100000

sf300

sf3000

sf30000

tiny

For some reason, I was unable to show tables in the TPCH workload from within Presto but knowing the workload, we know the table names are below:

Table

----------

customer

lineitem

nation

orders

part

partsupp

region

supplierUsing the fully qualified table names, we can fetch the table information:

describe tpch.sf100.part;

Column | Type | Extra | Comment

-------------+-------------+-------+---------

partkey | bigint | |

name | varchar(55) | |

mfgr | varchar(25) | |

brand | varchar(10) | |

type | varchar(25) | |

size | integer | |

container | varchar(10) | |

retailprice | double | |

comment | varchar(23) | |

We do not package TPCH workload with our binary and have to find it elsewhere. I found the dataset at the following site. Exporting the part table and importing into CockroachDB is out of the scope of this article. I may follow up with a tutorial on that at a later time.

Once I have TPCH data loaded into my CockroachDB cluster, we can view it, since we're in Presto CLI, let's use that to inspect the data.

Verify

The hierarchy in Presto is based on the catalog first.

SHOW SCHEMAS FROM postgresql;

Schema

--------------------

crdb_internal

information_schema

pg_catalog

pg_extension

publicWe know our data is in the default public schema. We have to reference both hierarchies to view the tables.

USE postgresql.public;

SHOW TABLES;Table

-------

part

(1 row)

Query 20220119_220205_00013_z5ufp, FINISHED, 1 node

Splits: 19 total, 19 done (100.00%)

0:03 [1 rows, 20B] [0 rows/s, 6B/s] We can now query CockroachDB and Presto TPCH datasets simultaneously:

SELECT partkey, name, mfgr, brand, type, size, container, retailprice, comment FROM tpch.sf100.part A INNER JOIN postgresql.public.part B ON A.partkey = B.p_partkey WHERE A.partkey > 10000 AND A.partkey <= 10010;

While the query runs, let's take a look at the Presto UI, recall we passed --server localhost:8088 the argument. The server exposes a UI we can access at the following address.

presto:public> SELECT partkey, name, mfgr, brand, type, size, container, retailprice, comment FROM tpch.sf100.part A INNER JOIN postgresql.public.part B ON A.partkey = B.p_partkey WHERE A.partkey > 10000 AND A.partkey <= 10010;

partkey | name | mfgr | brand | type | size | contai

---------+----------------------------------------+----------------+----------+-------------------------+------+-------

10001 | saddle honeydew red plum indian | Manufacturer#4 | Brand#42 | SMALL ANODIZED STEEL | 34 | SM BAG

10002 | cyan grey saddle olive pale | Manufacturer#5 | Brand#51 | STANDARD BRUSHED BRASS | 3 | SM PAC

10003 | spring sky moccasin orchid forest | Manufacturer#2 | Brand#23 | STANDARD PLATED COPPER | 30 | WRAP C

10004 | burlywood dim moccasin forest yellow | Manufacturer#1 | Brand#11 | ECONOMY ANODIZED COPPER | 4 | MED JA

10005 | sky mint magenta black misty | Manufacturer#3 | Brand#35 | MEDIUM POLISHED COPPER | 4 | WRAP P

10006 | azure metallic violet puff saddle | Manufacturer#2 | Brand#25 | LARGE ANODIZED TIN | 28 | JUMBO

10007 | burlywood ivory pink yellow forest | Manufacturer#2 | Brand#25 | PROMO POLISHED BRASS | 2 | SM CAN

10008 | light lavender lime spring burnished | Manufacturer#2 | Brand#24 | ECONOMY PLATED TIN | 16 | MED CA

10009 | indian smoke lime magenta tan | Manufacturer#4 | Brand#44 | SMALL ANODIZED BRASS | 47 | MED CA

10010 | sienna saddle chartreuse rose honeydew | Manufacturer#3 | Brand#32 | MEDIUM ANODIZED NICKEL | 10 | MED DR

(10 rows)

Query 20220119_220404_00014_z5ufp, FINISHED, 1 node

Splits: 81 total, 81 done (100.00%)

14:10 [20M rows, 0B] [23.5K rows/s, 0B/s]

We are accessing tpch.sf100.part dataset A that comes standard with Presto on the left and CockroachDB table on the right postgresql.public.part B simultaneously. This is a very simplistic scenario but at least it provides ideas on how to leverage Presto in federating data out of CockroachDB.

There's more to learn about Presto and I'm only scratching the surface with this article. The query takes a bit of time given my Presto environment is not optimized. I defer to the Presto documentation for further tuning.

Update

My colleague suggested leveraging the follower reads pattern for this use case. I think it's a brilliant idea and it makes follower reads a very suitable choice considering you'd use it in a live system. Alas, the CockroachDB specific AS OF SYSTEM TIME syntax is not compatible with Presto:

In cases where AS OF SYSTEM TIME syntax is not supported by third-party tooling, our engineering team introduced a session variable default_transaction_use_follower_reads=on we can leverage and force all read queries through follower reads. All we have to do is stop Presto, configure the postgresql.properties file with the session variable appended to the connection string and restart.

connection-url=jdbc:postgresql://free-tier.gcp-us-central1.cockroachlabs.cloud:26257/yourclustername-5521.tpch?sslmode=verify-full&sslrootcert=/Users/username/.postgresql/root.crt&default_transaction_use_follower_reads=on

Let's run our query again but without AS OF SYSTEM TIME syntax

presto> SELECT p_partkey, p_name, p_mfgr, p_brand, p_type, p_size, p_container, p_retailprice, p_comment FROM postgresql.public.part limit 5;

p_partkey | p_name | p_mfgr | p_brand | p_type | p_size |

-----------+------------------------------------+---------------------------+------------+--------------------------+--------+-

1 | burlywood plum powder puff mint | Manufacturer#4 | Brand#43 | LARGE PLATED TIN | 31 |

2 | hot spring dodger dim light | Manufacturer#5 | Brand#55 | LARGE POLISHED STEEL | 4 |

3 | dark slate grey steel misty | Manufacturer#5 | Brand#53 | STANDARD PLATED COPPER | 30 |

4 | cream turquoise dark thistle light | Manufacturer#1 | Brand#13 | STANDARD BURNISHED BRASS | 3 |

5 | drab papaya lemon orange yellow | Manufacturer#1 | Brand#14 | ECONOMY BRUSHED BRASS | 7 |

(5 rows)At this point let's navigate to the Statements page and observe whether queries are indeed read via follower reads.

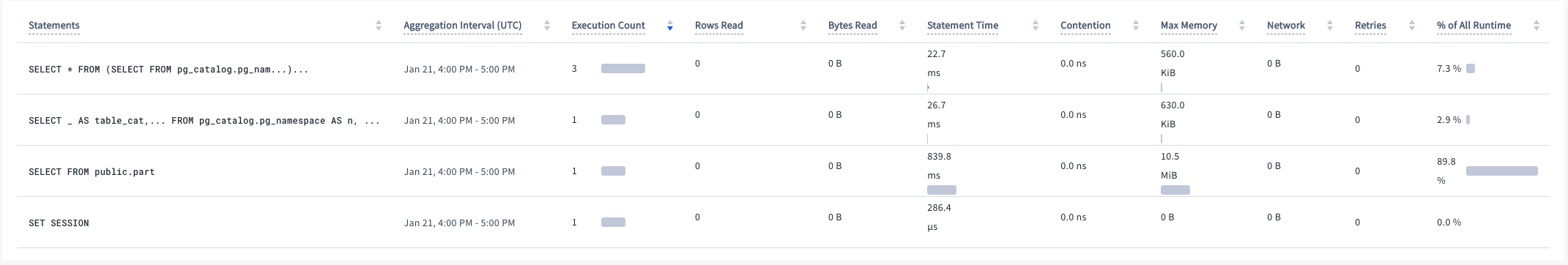

Here are all of the queries on this cluster:

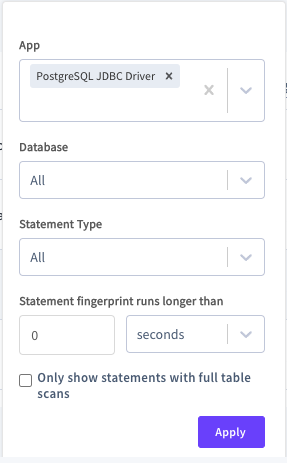

Let's filter by the JDBC Driver:

The following list is all of the statements executed via our connection:

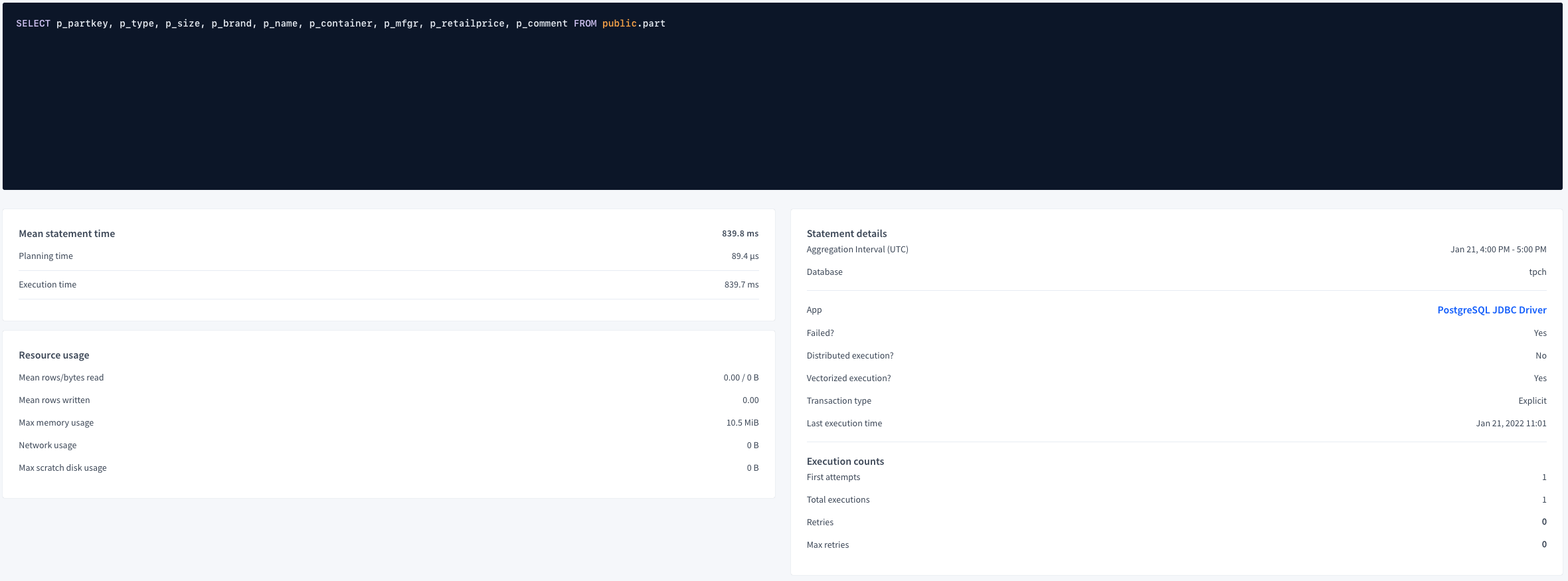

The two statements we need to focus on are our query and SET SESSION statement. Let's look at the query first:

Let's look at the SET SESSION

Conclusion

This tutorial only scratches the surface of federating data using an external project like Presto. The world of possibilities is ahead and I hope I was able to rouse some excitement about this new application.

Published at DZone with permission of Artem Ervits. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments