End to End Distributed Logging Traceability With Custom Context

This article walks through a simple end-to-end logging traceability setup using open source technologies such as Spring Boot, Sleuth, and more.

Introduction

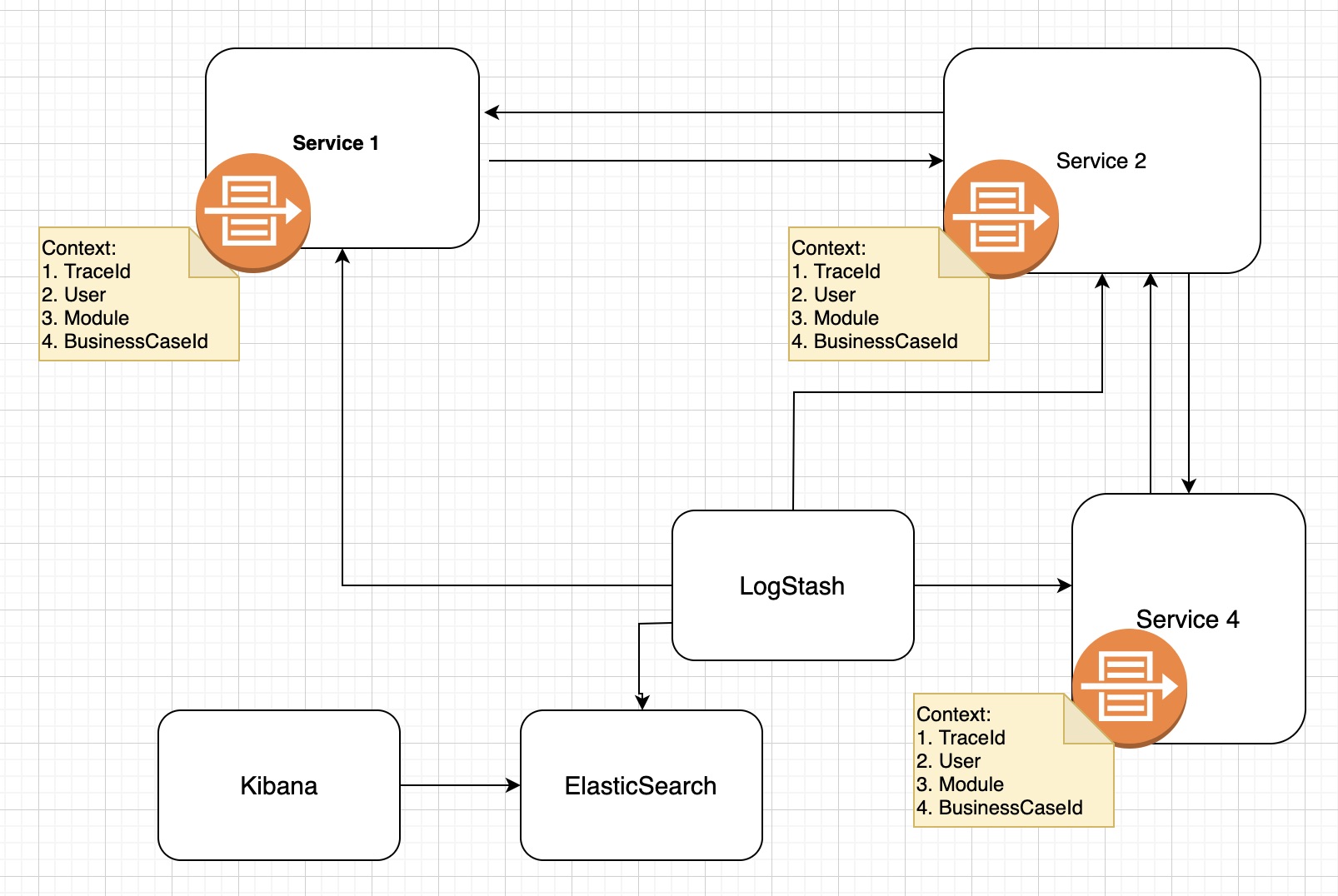

The stack used here is Spring Boot, Sleuth, MDC, Logstash, Elasticsearch, and Kibana.

Problem Statement

In the distributed application world, many applications participate in a single customer business process. When a problem occurs, it is difficult to trace the log details across these distributed applications. Each development team uses its own logging pattern and its own methods or APIs (Apache Logger, SLF4J, Log4j or Log4j2, Java Util Logging, Logback, ...).

In a real production or integration environment, the developers of each module struggle to debug a problem because there is no unique TraceId shared across the applications. They may also struggle to identify the functional context, since that information is not shared across the applications either.

Solution Approach

Option 1: You can use Spring Cloud Sleuth with Spring Boot, which is a simple and efficient way to create a traceId and spanId. The traceId is propagated to all the microservices, REST components, and servlet applications involved in a request.
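To make the relationship between traceId and spanId concrete, here is a minimal sketch in plain Java. It is a simplified model and not Sleuth's actual classes: the class name `TraceContextSketch` and its methods are hypothetical, and the choice to reuse the trace ID as the root span ID is an assumption of this sketch. The idea it shows is that every hop keeps the same traceId while each unit of work gets its own spanId.

```java
import java.util.concurrent.ThreadLocalRandom;

// Illustrative only: a tiny model of trace context, not Sleuth's actual classes.
final class TraceContextSketch {
    final String traceId;      // shared by every hop of one request
    final String spanId;       // unique per unit of work
    final String parentSpanId; // null for the first (root) span

    private TraceContextSketch(String traceId, String spanId, String parentSpanId) {
        this.traceId = traceId;
        this.spanId = spanId;
        this.parentSpanId = parentSpanId;
    }

    // 64-bit random ID rendered as 16 lowercase hex characters.
    static String newId() {
        return String.format("%016x", ThreadLocalRandom.current().nextLong());
    }

    // Root span: the trace starts here; in this sketch trace ID and span ID share one value.
    static TraceContextSketch newRoot() {
        String id = newId();
        return new TraceContextSketch(id, id, null);
    }

    // Child span: same trace ID, fresh span ID, parent points at the caller's span.
    TraceContextSketch newChild() {
        return new TraceContextSketch(traceId, newId(), spanId);
    }

    public static void main(String[] args) {
        TraceContextSketch one = newRoot();        // e.g. the first service
        TraceContextSketch two = one.newChild();   // e.g. the second service
        TraceContextSketch three = two.newChild(); // e.g. the third service
        for (TraceContextSketch c : new TraceContextSketch[] {one, two, three}) {
            System.out.println("trace=" + c.traceId + " span=" + c.spanId + " parent=" + c.parentSpanId);
        }
    }
}
```

Searching the centralized logs for one traceId should then return the log lines of every service that handled the request.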

To view the distributed logs in a centralized dashboard, you can use Zipkin, Kibana, or any other visualization tool.

I chose Kibana because it is easy to use, performs well on top of Elasticsearch, and is fed by a reliable Logstash pipeline.

Let's start with our solution

Prerequisites

Java 1.8 or 11 or 13

Any Java IDE (IntelliJ / Eclipse / ..)

Install LogStash: https://www.elastic.co/guide/en/logstash/current/installing-logstash.html

Install Elastic Search: https://www.elastic.co/guide/en/elasticsearch/reference/current/install-elasticsearch.html

Install Kibana: https://www.elastic.co/guide/en/kibana/current/install.html

Create three Spring Boot projects using https://start.spring.io

Project 1: CoreAppOneService

src/main/resources/application.properties

server.port=8080

src/main/resources/bootstrap.yml

spring:

application:

name: coreappone

src/main/resources/logback-spring.xml

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<include resource="org/springframework/boot/logging/logback/defaults.xml"/>

<springProperty scope="context" name="springAppName" source="spring.application.name"/>

<!-- Example for logging into the build folder of your project -->

<property name="LOG_FILE" value="${BUILD_FOLDER:-build}/${springAppName}"/>

<!-- You can override this to have a custom pattern -->

<property name="CONSOLE_LOG_PATTERN"

value="%clr(%d{yyyy-MM-dd HH:mm:ss.SSS}){faint} %clr(${LOG_LEVEL_PATTERN:-%5p}) %X{context.userId} %X{context.moduleId} %X{context.caseId} %clr(${PID:- }){magenta} %clr(---){faint} %clr([%15.15t]){faint} %clr(%-40.40logger{39}){cyan} %clr(:){faint} %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}"/>

<!-- Appender to log to console -->

<appender name="console" class="ch.qos.logback.core.ConsoleAppender">

<filter class="ch.qos.logback.classic.filter.ThresholdFilter">

<!-- Minimum logging level to be presented in the console logs-->

<level>DEBUG</level>

</filter>

<encoder>

<pattern>${CONSOLE_LOG_PATTERN}</pattern>

<charset>utf8</charset>

</encoder>

</appender>

<!-- Appender to log to file -->

<appender name="flatfile" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${LOG_FILE}</file>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>${LOG_FILE}.%d{yyyy-MM-dd}.gz</fileNamePattern>

<maxHistory>7</maxHistory>

</rollingPolicy>

<encoder>

<pattern>${CONSOLE_LOG_PATTERN}</pattern>

<charset>utf8</charset>

</encoder>

</appender>

<!-- Appender to log to file in a JSON format -->

<appender name="logstash" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${LOG_FILE}.json</file>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>${LOG_FILE}.json.%d{yyyy-MM-dd}.gz</fileNamePattern>

<maxHistory>7</maxHistory>

</rollingPolicy>

<encoder class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">

<providers>

<timestamp>

<timeZone>UTC</timeZone>

</timestamp>

<pattern>

<pattern>

{

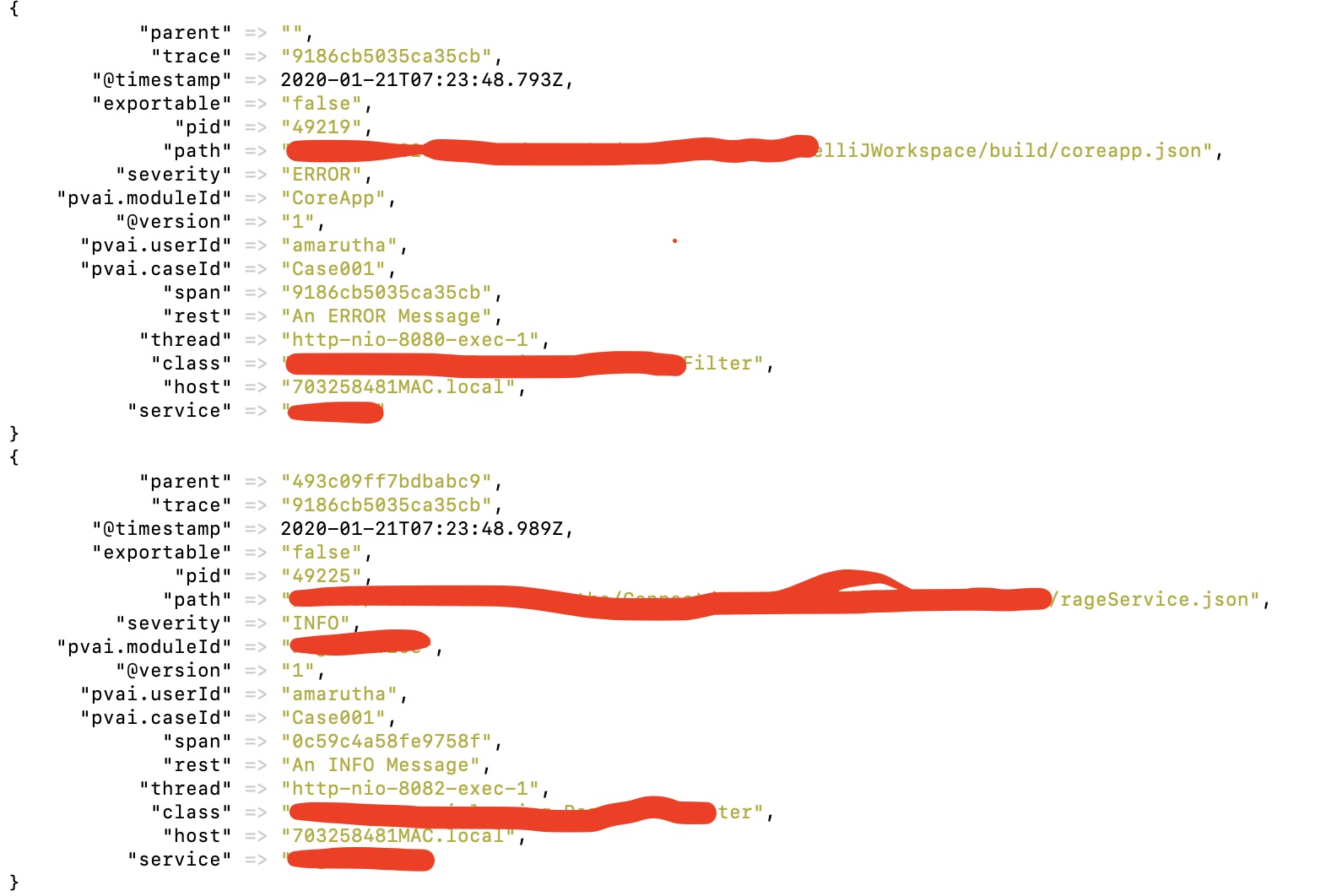

"severity": "%level",

"service": "${springAppName:-}",

"trace": "%X{X-B3-TraceId:-}",

"span": "%X{X-B3-SpanId:-}",

"parent": "%X{X-B3-ParentSpanId:-}",

"exportable": "%X{X-Span-Export:-}",

"pid": "${PID:-}",

"thread": "%thread",

"pvai.userId": "%X{context.userId:-}",

"pvai.moduleId": "%X{context.moduleId:-}",

"pvai.caseId": "%X{context.caseId:-}",

"class": "%logger{40}",

"rest": "%message"

}

</pattern>

</pattern>

</providers>

</encoder>

</appender>

<root level="INFO">

<appender-ref ref="console"/>

<!-- uncomment this to have also JSON logs -->

<appender-ref ref="logstash"/>

<appender-ref ref="flatfile"/>

</root>

</configuration>
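With the `logstash` appender above, each log event should end up as one JSON object per line, using the keys listed in the pattern. The sketch below only illustrates that shape in plain Java. The class `JsonLogLineSketch` is hypothetical, every value is an invented placeholder, and the real encoder also emits a timestamp field and handles escaping more thoroughly.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative only: builds a one-line JSON object similar in shape to the appender's output.
final class JsonLogLineSketch {
    // Minimal escaping for backslashes and quotes; a real encoder covers more cases.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // Renders the fields as a flat JSON object, keeping insertion order.
    static String toJson(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("{");
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (sb.length() > 1) {
                sb.append(',');
            }
            sb.append('"').append(escape(e.getKey())).append("\":\"")
              .append(escape(e.getValue())).append('"');
        }
        return sb.append('}').toString();
    }

    public static void main(String[] args) {
        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("severity", "INFO");
        fields.put("service", "coreappone");
        fields.put("trace", "5f1d2c3b4a596877");   // placeholder trace ID
        fields.put("span", "5f1d2c3b4a596877");    // placeholder span ID
        fields.put("pvai.userId", "amarutha");
        fields.put("pvai.moduleId", "CoreApp1");
        fields.put("pvai.caseId", "Case001");
        fields.put("class", "c.d.s.l.LoggingCoreApplicationOne");
        fields.put("rest", "An INFO Message");
        System.out.println(toJson(fields));
    }
}
```

Because the custom context values are separate keys, they can be searched and filtered individually once the lines reach Elasticsearch.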

src/main/java/com.dzone.sample.logging

package com.dzone.sample.logging;

import java.io.IOException;

import javax.servlet.FilterChain;

import javax.servlet.ServletException;

import javax.servlet.ServletRequest;

import javax.servlet.ServletResponse;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.slf4j.MDC;

import org.springframework.stereotype.Component;

import org.springframework.web.filter.GenericFilterBean;

@Component

public class CoreApp1MDCFilter extends GenericFilterBean {

private static final Logger logger = LoggerFactory.getLogger(CoreApp1MDCFilter.class);

public void destroy() {

}

public void doFilter(ServletRequest servletRequest, ServletResponse servletResponse,

FilterChain filterChain) throws IOException, ServletException {

MDC.put("context.userId", "amarutha");

MDC.put("context.moduleId", "CoreApp1");

MDC.put("context.caseId", "Case001");

logger.trace("A TRACE Message");

logger.debug("A DEBUG Message");

logger.info("An INFO Message");

logger.warn("A WARN Message");

logger.error("An ERROR Message");

filterChain.doFilter(servletRequest, servletResponse);

}

}
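One thing to keep in mind with the filter above: it puts values into the MDC but never removes them. Servlet containers typically reuse worker threads, so values set for one request may still be visible to the next request handled by the same thread. The sketch below shows the usual set, run, clean-up pattern in plain Java. `ContextCleanupSketch` and its `CONTEXT` map are hypothetical stand-ins for `org.slf4j.MDC`; with SLF4J itself, the clean-up step would be a call to `MDC.remove(...)` or `MDC.clear()` in a `finally` block around `filterChain.doFilter(...)`.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Illustrative only: a ThreadLocal map standing in for org.slf4j.MDC.
final class ContextCleanupSketch {
    static final ThreadLocal<Map<String, String>> CONTEXT = ThreadLocal.withInitial(HashMap::new);

    // Mirrors the filter: set the context, run the rest of the chain, always clean up.
    static void handle(String userId, Runnable restOfChain) {
        CONTEXT.get().put("context.userId", userId);
        try {
            restOfChain.run();
        } finally {
            // Without this, the next request on the same thread would see stale values.
            CONTEXT.get().clear();
        }
    }

    public static void main(String[] args) throws Exception {
        // A single worker thread, reused for both tasks, like a pooled request thread.
        ExecutorService pool = Executors.newSingleThreadExecutor();
        pool.submit(() -> handle("amarutha", () ->
                System.out.println("during request: " + CONTEXT.get()))).get();
        pool.submit(() ->
                System.out.println("next task on same thread: " + CONTEXT.get())).get();
        pool.shutdown();
    }
}
```

The first task prints the map containing `context.userId`, and the second task, running on the same thread, prints an empty map because the context was cleared.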

src/main/java/com.dzone.sample.logging

package com.dzone.sample.logging;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.slf4j.MDC;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.boot.devtools.remote.client.HttpHeaderInterceptor;

import org.springframework.context.annotation.Bean;

import org.springframework.http.MediaType;

import org.springframework.http.client.ClientHttpRequestInterceptor;

import org.springframework.util.CollectionUtils;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

import org.springframework.web.client.RestTemplate;

import java.util.ArrayList;

import java.util.List;

@SpringBootApplication

@RestController

public class LoggingCoreApplicationOne {

private static final Logger logger = LoggerFactory.getLogger(LoggingCoreApplicationOne.class);

public static void main(String[] args) {

SpringApplication.run(LoggingCoreApplicationOne.class, args);

}

@Bean

public RestTemplate getRestTemplate() {

return new RestTemplate();

}

@RequestMapping("/coreapp1")

public String callCoreApp2Service() throws Exception {

logger.trace("A TRACE Message");

logger.debug("A DEBUG Message");

logger.info("An INFO Message");

logger.warn("A WARN Message");

logger.error("An ERROR Message");

final RestTemplate restTemplate = getRestTemplate();

List<ClientHttpRequestInterceptor> interceptors = restTemplate.getInterceptors();

if (CollectionUtils.isEmpty(interceptors)) {

interceptors = new ArrayList<>();

}

interceptors.add(new HttpHeaderInterceptor("context.userId", MDC.get("context.userId")));

interceptors.add(new HttpHeaderInterceptor("context.caseId", MDC.get("context.caseId")));

restTemplate.setInterceptors(interceptors);

return "This is CoreAppOne Service " + restTemplate

.getForObject("http://localhost:8081/coreapp2", String.class);

}

}
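For the custom context to reach the next service, the header names sent here have to match the names the receiving filter reads (`context.userId` and `context.caseId` in CoreAppTwoService), and a context value that was never set should not be sent at all. A minimal sketch of that selection step in plain Java follows. `OutboundContextHeadersSketch` and `PROPAGATED_KEYS` are hypothetical names, and the plain `Map` stands in for the MDC.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative only: picks which context entries become outgoing HTTP headers.
final class OutboundContextHeadersSketch {
    // Keys copied from the current request context onto the outgoing call.
    static final String[] PROPAGATED_KEYS = {"context.userId", "context.caseId"};

    // Returns header name -> value for every propagated key that has a non-empty value.
    static Map<String, String> headersFrom(Map<String, String> context) {
        Map<String, String> headers = new LinkedHashMap<>();
        for (String key : PROPAGATED_KEYS) {
            String value = context.get(key);
            if (value != null && !value.isEmpty()) {
                headers.put(key, value);
            }
        }
        return headers;
    }

    public static void main(String[] args) {
        Map<String, String> context = new LinkedHashMap<>();
        context.put("context.userId", "amarutha");
        context.put("context.moduleId", "CoreApp1"); // not propagated: each service sets its own
        // context.caseId is deliberately missing, so no header is produced for it.
        System.out.println(headersFrom(context));
    }
}
```

Each entry of the returned map could then be turned into one interceptor on the `RestTemplate`, in the same way the listing above adds them by hand.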

pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.2.3.RELEASE</version>

<relativePath/> <!-- lookup parent from repository -->

</parent>

<groupId>com.dzone.sample.logging</groupId>

<artifactId>Logging_CoreAppOne</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>Logging_CoreAppOne</name>

<description>Demo project for Spring Boot</description>

<properties>

<java.version>1.8</java.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-sleuth</artifactId>

<version>2.2.1.RELEASE</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>net.logstash.logback</groupId>

<artifactId>logstash-logback-encoder</artifactId>

<version>6.3</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>log4j-over-slf4j</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-devtools</artifactId>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

</plugin>

</plugins>

</build>

</project>

Project 2: CoreAppTwoService

src/main/resources/application.properties

server.port=8081

src/main/resources/bootstrap.yml

spring:

application:

name: coreapptwo

src/main/resources/logback-spring.xml

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<include resource="org/springframework/boot/logging/logback/defaults.xml"/>

<springProperty scope="context" name="springAppName" source="spring.application.name"/>

<!-- Example for logging into the build folder of your project -->

<property name="LOG_FILE" value="${BUILD_FOLDER:-build}/${springAppName}"/>

<!-- You can override this to have a custom pattern -->

<property name="CONSOLE_LOG_PATTERN"

value="%clr(%d{yyyy-MM-dd HH:mm:ss.SSS}){faint} %clr(${LOG_LEVEL_PATTERN:-%5p}) %X{context.userId} %X{context.moduleId} %X{context.caseId} %clr(${PID:- }){magenta} %clr(---){faint} %clr([%15.15t]){faint} %clr(%-40.40logger{39}){cyan} %clr(:){faint} %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}"/>

<!-- Appender to log to console -->

<appender name="console" class="ch.qos.logback.core.ConsoleAppender">

<filter class="ch.qos.logback.classic.filter.ThresholdFilter">

<!-- Minimum logging level to be presented in the console logs-->

<level>DEBUG</level>

</filter>

<encoder>

<pattern>${CONSOLE_LOG_PATTERN}</pattern>

<charset>utf8</charset>

</encoder>

</appender>

<!-- Appender to log to file -->

<appender name="flatfile" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${LOG_FILE}</file>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>${LOG_FILE}.%d{yyyy-MM-dd}.gz</fileNamePattern>

<maxHistory>7</maxHistory>

</rollingPolicy>

<encoder>

<pattern>${CONSOLE_LOG_PATTERN}</pattern>

<charset>utf8</charset>

</encoder>

</appender>

<!-- Appender to log to file in a JSON format -->

<appender name="logstash" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${LOG_FILE}.json</file>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>${LOG_FILE}.json.%d{yyyy-MM-dd}.gz</fileNamePattern>

<maxHistory>7</maxHistory>

</rollingPolicy>

<encoder class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">

<providers>

<timestamp>

<timeZone>UTC</timeZone>

</timestamp>

<pattern>

<pattern>

{

"severity": "%level",

"service": "${springAppName:-}",

"trace": "%X{X-B3-TraceId:-}",

"span": "%X{X-B3-SpanId:-}",

"parent": "%X{X-B3-ParentSpanId:-}",

"exportable": "%X{X-Span-Export:-}",

"pid": "${PID:-}",

"thread": "%thread",

"pvai.userId": "%X{context.userId:-}",

"pvai.moduleId": "%X{context.moduleId:-}",

"pvai.caseId": "%X{context.caseId:-}",

"class": "%logger{40}",

"rest": "%message"

}

</pattern>

</pattern>

</providers>

</encoder>

</appender>

<root level="INFO">

<appender-ref ref="console"/>

<!-- uncomment this to have also JSON logs -->

<appender-ref ref="logstash"/>

<appender-ref ref="flatfile"/>

</root>

</configuration>

src/main/java/com.dzone.sample.logging

package com.dzone.sample.logging;

import java.io.IOException;

import javax.servlet.FilterChain;

import javax.servlet.ServletException;

import javax.servlet.ServletRequest;

import javax.servlet.ServletResponse;

import javax.servlet.http.HttpServletRequest;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.slf4j.MDC;

import org.springframework.stereotype.Component;

import org.springframework.web.filter.GenericFilterBean;

@Component

public class CoreAppTwoMDCFilter extends GenericFilterBean {

private static final Logger logger = LoggerFactory.getLogger(CoreAppTwoMDCFilter.class);

public void destroy() {

}

public void doFilter(ServletRequest servletRequest, ServletResponse servletResponse,

FilterChain filterChain) throws IOException, ServletException {

MDC.put("context.userId", ((HttpServletRequest) servletRequest).getHeader("context.userId"));

MDC.put("context.moduleId", "CoreAppTwoService");

MDC.put("context.caseId", ((HttpServletRequest) servletRequest).getHeader("context.caseId"));

logger.trace("A TRACE Message");

logger.debug("A DEBUG Message");

logger.info("An INFO Message" );

logger.warn("A WARN Message" );

logger.error("An ERROR Message");

filterChain.doFilter(servletRequest, servletResponse);

}

}

src/main/java/com.dzone.sample.logging

package com.dzone.sample.logging;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.slf4j.MDC;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.boot.devtools.remote.client.HttpHeaderInterceptor;

import org.springframework.context.annotation.Bean;

import org.springframework.http.MediaType;

import org.springframework.http.client.ClientHttpRequestInterceptor;

import org.springframework.util.CollectionUtils;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

import org.springframework.web.client.RestTemplate;

import java.util.ArrayList;

import java.util.List;

@SpringBootApplication

@RestController

public class LoggingCoreApplicationTwo {

private static final Logger logger = LoggerFactory.getLogger(LoggingCoreApplicationTwo.class);

public static void main(String[] args) {

SpringApplication.run(LoggingCoreApplicationTwo.class, args);

}

@Bean

public RestTemplate getRestTemplate() {

return new RestTemplate();

}

@RequestMapping("/coreapp2")

public String callCoreAppThreeService() throws Exception {

logger.trace("A TRACE Message");

logger.debug("A DEBUG Message");

logger.info("An INFO Message");

logger.warn("A WARN Message");

logger.error("An ERROR Message");

final RestTemplate restTemplate = getRestTemplate();

List<ClientHttpRequestInterceptor> interceptors = restTemplate.getInterceptors();

if (CollectionUtils.isEmpty(interceptors)) {

interceptors = new ArrayList<>();

}

interceptors.add(new HttpHeaderInterceptor("context.userId", MDC.get("context.userId")));

interceptors.add(new HttpHeaderInterceptor("context.caseId", MDC.get("context.caseId")));

restTemplate.setInterceptors(interceptors);

return "This is CoreAppTwo Service " + restTemplate

.getForObject("http://localhost:8082/coreapp3", String.class);

}

}

Project 3: CoreAppThreeService

src/main/resources/application.properties

server.port=8082

src/main/resources/bootstrap.yml

spring:

application:

name: coreappthree

src/main/resources/logback-spring.xml

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<include resource="org/springframework/boot/logging/logback/defaults.xml"/>

<springProperty scope="context" name="springAppName" source="spring.application.name"/>

<!-- Example for logging into the build folder of your project -->

<property name="LOG_FILE" value="${BUILD_FOLDER:-build}/${springAppName}"/>

<!-- You can override this to have a custom pattern -->

<property name="CONSOLE_LOG_PATTERN"

value="%clr(%d{yyyy-MM-dd HH:mm:ss.SSS}){faint} %clr(${LOG_LEVEL_PATTERN:-%5p}) %X{context.userId} %X{context.moduleId} %X{context.caseId} %clr(${PID:- }){magenta} %clr(---){faint} %clr([%15.15t]){faint} %clr(%-40.40logger{39}){cyan} %clr(:){faint} %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}"/>

<!-- Appender to log to console -->

<appender name="console" class="ch.qos.logback.core.ConsoleAppender">

<filter class="ch.qos.logback.classic.filter.ThresholdFilter">

<!-- Minimum logging level to be presented in the console logs-->

<level>DEBUG</level>

</filter>

<encoder>

<pattern>${CONSOLE_LOG_PATTERN}</pattern>

<charset>utf8</charset>

</encoder>

</appender>

<!-- Appender to log to file -->

<appender name="flatfile" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${LOG_FILE}</file>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>${LOG_FILE}.%d{yyyy-MM-dd}.gz</fileNamePattern>

<maxHistory>7</maxHistory>

</rollingPolicy>

<encoder>

<pattern>${CONSOLE_LOG_PATTERN}</pattern>

<charset>utf8</charset>

</encoder>

</appender>

<!-- Appender to log to file in a JSON format -->

<appender name="logstash" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${LOG_FILE}.json</file>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>${LOG_FILE}.json.%d{yyyy-MM-dd}.gz</fileNamePattern>

<maxHistory>7</maxHistory>

</rollingPolicy>

<encoder class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">

<providers>

<timestamp>

<timeZone>UTC</timeZone>

</timestamp>

<pattern>

<pattern>

{

"severity": "%level",

"service": "${springAppName:-}",

"trace": "%X{X-B3-TraceId:-}",

"span": "%X{X-B3-SpanId:-}",

"parent": "%X{X-B3-ParentSpanId:-}",

"exportable": "%X{X-Span-Export:-}",

"pid": "${PID:-}",

"thread": "%thread",

"pvai.userId": "%X{context.userId:-}",

"pvai.moduleId": "%X{context.moduleId:-}",

"pvai.caseId": "%X{context.caseId:-}",

"class": "%logger{40}",

"rest": "%message"

}

</pattern>

</pattern>

</providers>

</encoder>

</appender>

<root level="INFO">

<appender-ref ref="console"/>

<!-- uncomment this to have also JSON logs -->

<appender-ref ref="logstash"/>

<appender-ref ref="flatfile"/>

</root>

</configuration>

src/main/java/com.dzone.sample.logging

package com.dzone.sample.logging;

import java.io.IOException;

import javax.servlet.FilterChain;

import javax.servlet.ServletException;

import javax.servlet.ServletRequest;

import javax.servlet.ServletResponse;

import javax.servlet.http.HttpServletRequest;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.slf4j.MDC;

import org.springframework.stereotype.Component;

import org.springframework.web.filter.GenericFilterBean;

@Component

public class CoreAppThreeMDCFilter extends GenericFilterBean {

private static final Logger logger = LoggerFactory.getLogger(CoreAppThreeMDCFilter.class);

public void destroy() {

}

public void doFilter(ServletRequest servletRequest, ServletResponse servletResponse,

FilterChain filterChain) throws IOException, ServletException {

MDC.put("context.userId", ((HttpServletRequest) servletRequest).getHeader("context.userId"));

MDC.put("context.moduleId", "CoreAppThreeService");

MDC.put("context.caseId", ((HttpServletRequest) servletRequest).getHeader("context.caseId"));

logger.trace("A TRACE Message");

logger.debug("A DEBUG Message");

logger.info("An INFO Message");

logger.warn("A WARN Message");

logger.error("An ERROR Message");

filterChain.doFilter(servletRequest, servletResponse);

}

}

src/main/java/com.dzone.sample.logging

package com.dzone.sample.logging;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.context.annotation.Bean;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

import org.springframework.web.client.RestTemplate;

@SpringBootApplication

@RestController

public class LoggingCoreApplicationThree {

private static final Logger logger = LoggerFactory.getLogger(LoggingCoreApplicationThree.class);

public static void main(String[] args) {

SpringApplication.run(LoggingCoreApplicationThree.class, args);

}

@Bean

public RestTemplate getRestTemplate() {

return new RestTemplate();

}

@RequestMapping("/coreapp3")

public String rageService() throws Exception {

logger.trace("A TRACE Message");

logger.debug("A DEBUG Message");

logger.info("An INFO Message");

logger.warn("A WARN Message");

logger.error("An ERROR Message");

return "This is CoreAppThree Service ";

}

}

Configure the Logstash Pipeline

/usr/local/logstash-7.5.1/config/logstash.conf

input {

file {

path => [ "/Users/703258481mac/Marutha/Genpact/PVAI/IntelliJWorkspace/build/*.json" ]

codec => json {

charset => "UTF-8"

}

}

}

filter {

if [message] =~ "timestamp" {

grok {

match => ["message", "^(timestamp)"]

add_tag => ["stacktrace"]

}

}

}

output {

stdout {

codec => rubydebug

}

elasticsearch {

hosts => ["localhost:9200"]

}

}

Start LogStash:

/usr/local/logstash-7.5.1/bin

./logstash -f ../config/logstash.conf

Sample Logstash Pipeline execution log

Start Kibana

/usr/local/var/homebrew/linked/kibana-full/bin

./kibana

Start ElasticSearch

/usr/local/var/homebrew/linked/elasticsearch-full/bin

./elasticsearch

Once all the servers are started, open the CoreAppOne service in a browser:

http://localhost:8080/coreapp1

The CoreAppOne service calls the CoreAppTwo service, which in turn calls the CoreAppThree service.

You can now see both the console logs and the JSON logs under the build folder of your workspace.

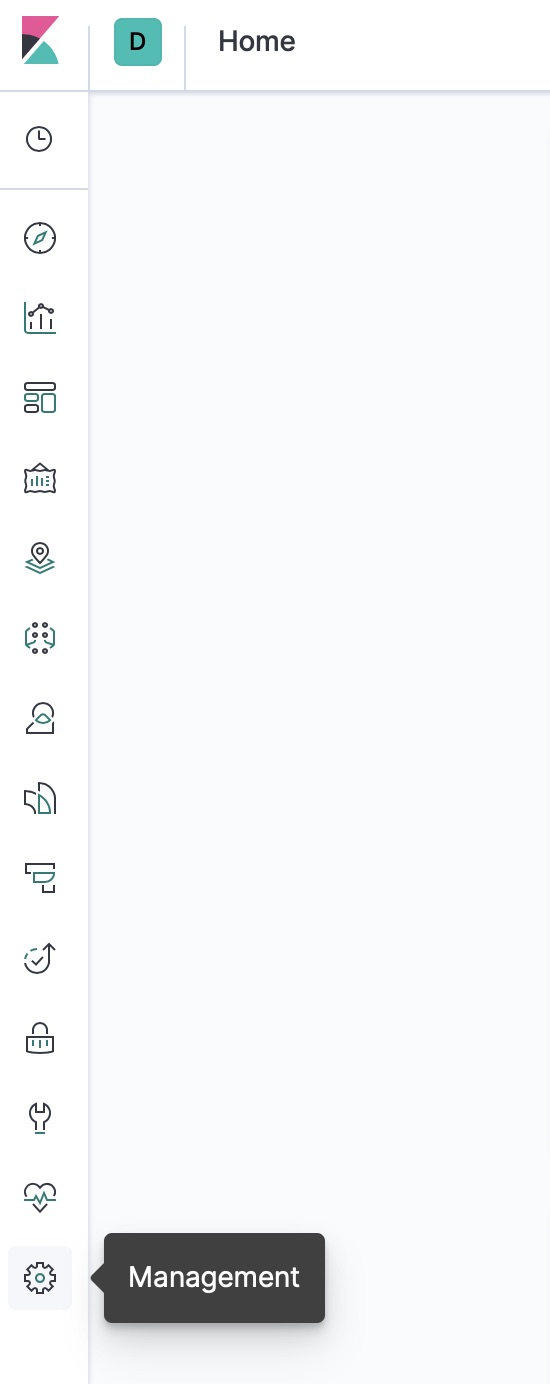

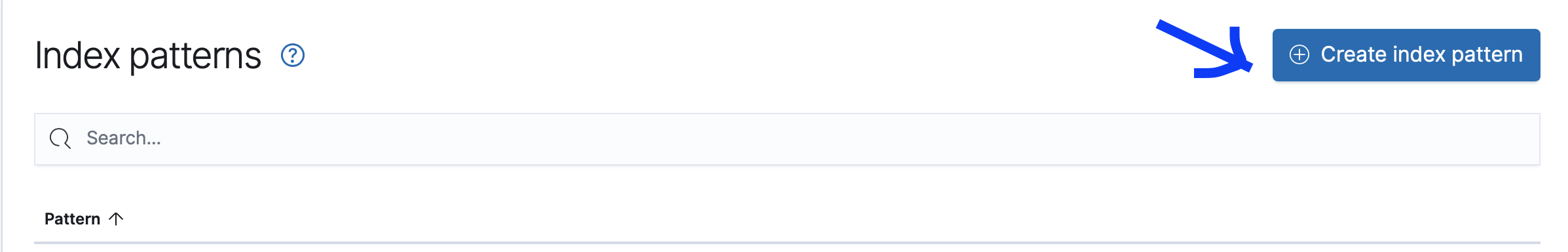

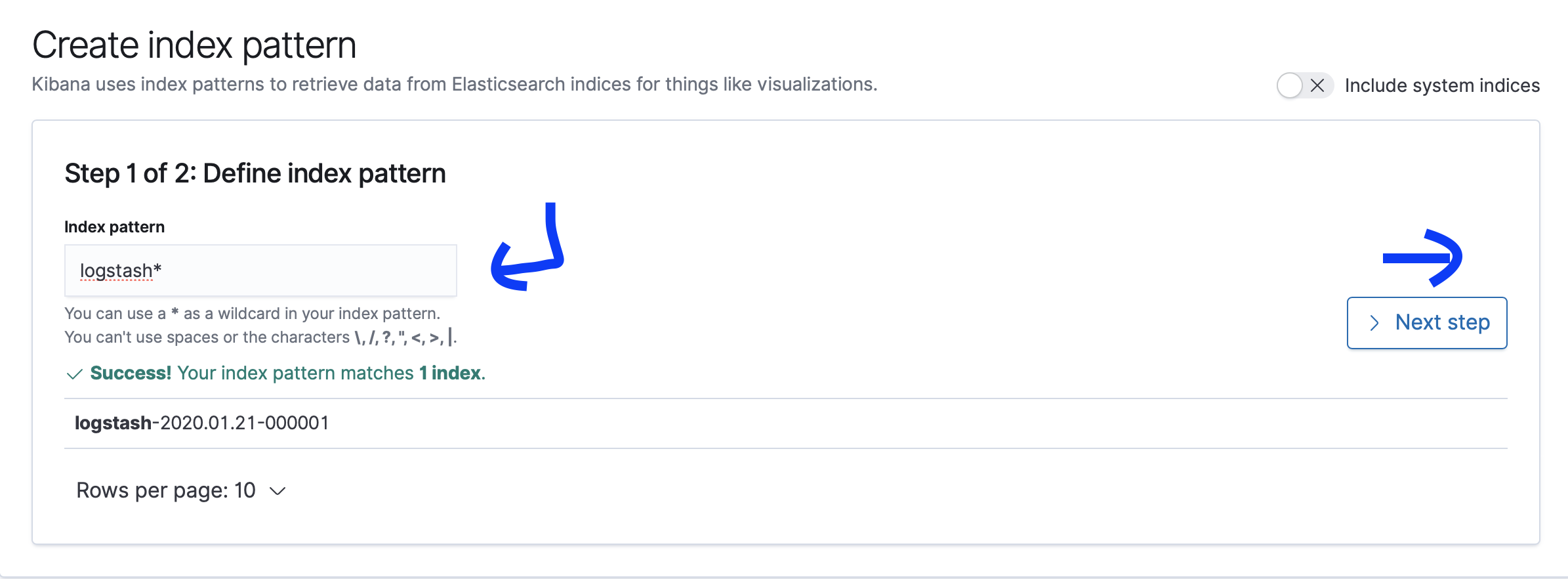

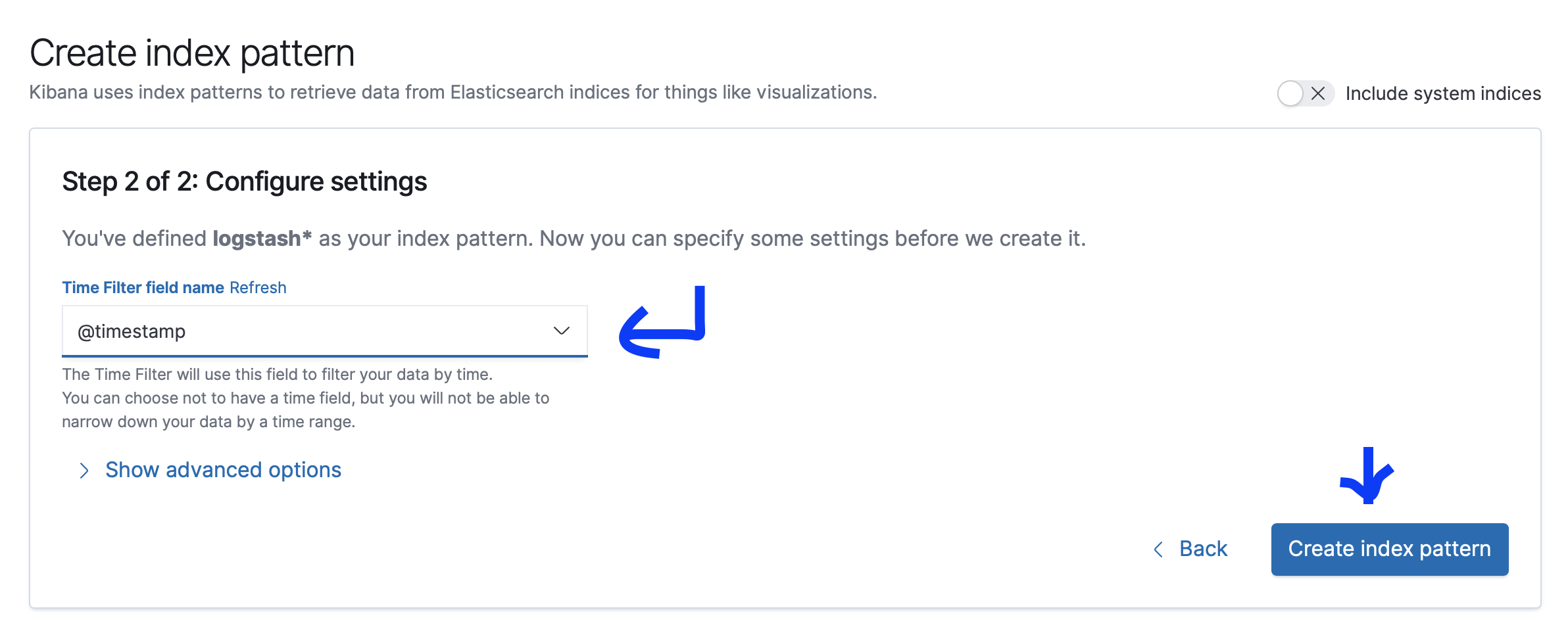

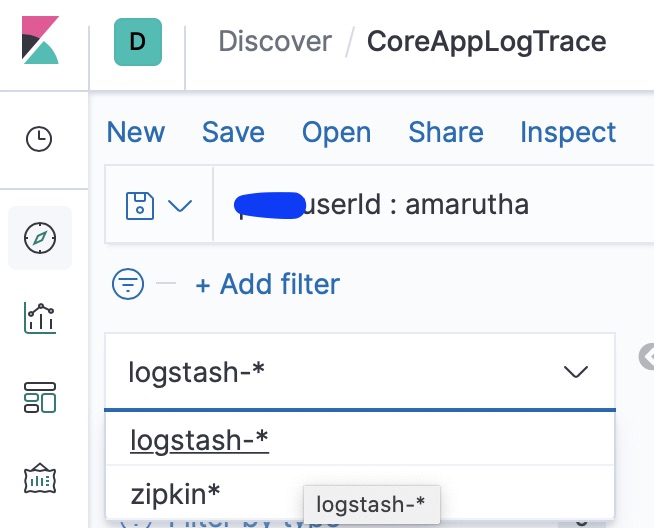

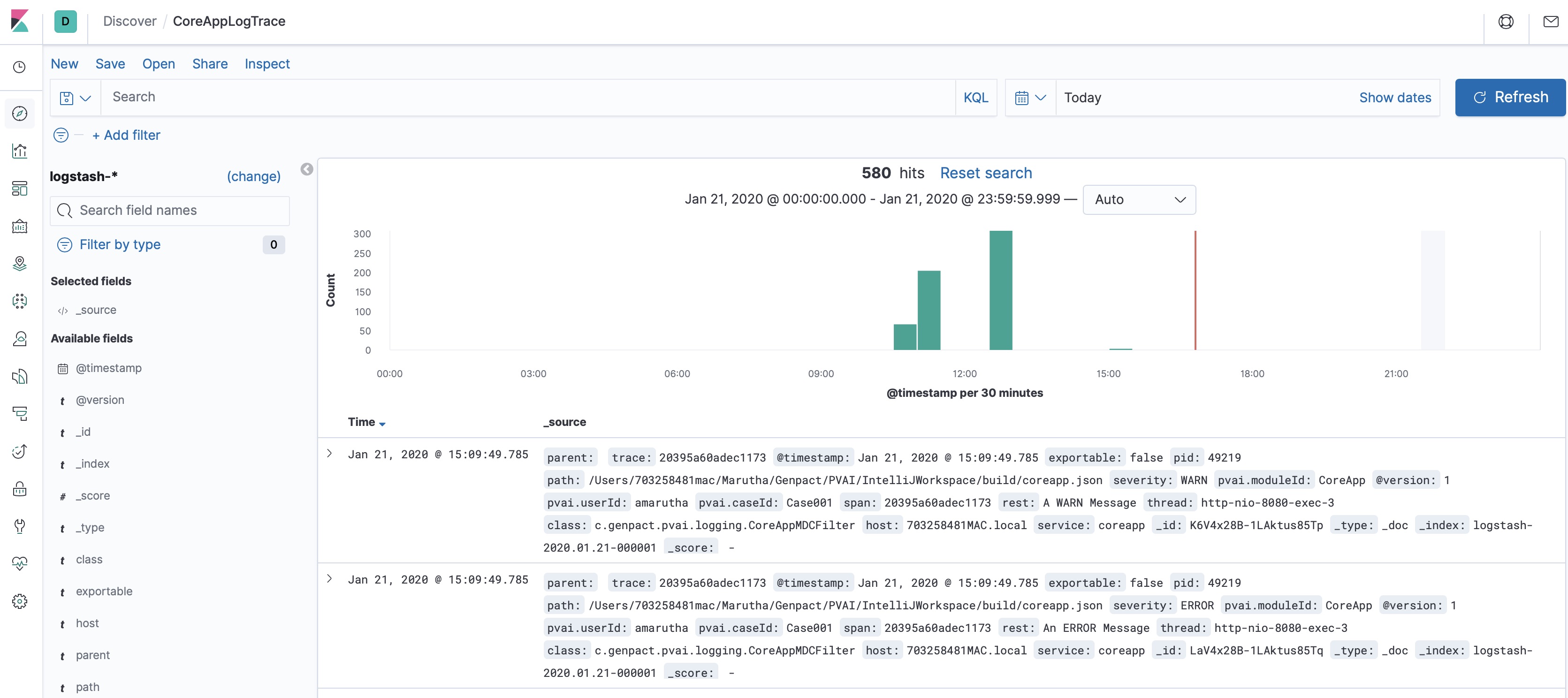

Let's Connect to Kibana to configure the Logstash index
