Extract Insights From Text Data Inside Databases

Apply the power of OpenAI's GPT-3 to the text data in your database in just a few SQL lines.


Imagine you have a lot of text data inside your database and want to extract insights from it or perform various AI tasks on it. In this article, you will learn how to integrate your database with OpenAI GPT-3 using MindsDB, an open-source AI platform, to get insights from all your text data at once with a few SQL commands instead of making multiple individual API calls, running ETL jobs, and moving massive amounts of data. We'll walk you through the process using three practical examples.

What Is OpenAI GPT-3?

OpenAI GPT-3 is a powerful language model developed by OpenAI, a research lab focused on artificial general intelligence. It has earned its place in the world of machine learning by being one of the most powerful and accurate natural language models ever created.

What Is MindsDB?

MindsDB is an open-source machine-learning platform that makes it easy for developers to deploy machine-learning models into production by abstracting them as virtual database "AI tables". It supports a wide range of popular ML platforms, including OpenAI, Hugging Face, TensorFlow, PyTorch, XGBoost, LightGBM, and more. MindsDB integrates ML frameworks with the majority of available databases and data platforms, including MySQL, MongoDB, PostgreSQL, ClickHouse, and others, allowing developers to build and deploy AI projects using SQL with minimal setup time and no ML coding required.

Leverage the NLP Capabilities for Text Data

By integrating databases and OpenAI using MindsDB, you can easily extract insights from text data with just a few SQL commands, for example:

- Classify and label rich text, for instance, sentiment analysis, detecting hate speech, or spam;

- Extract meaning for labeling text even when you don't have any training data - so-called zero-shot classification;

- Answer questions or comments;

- Automatically summarize long texts and translate them;

- Convert rich text into JSON objects, and more!

Ultimately, this provides developers with an easy way to incorporate powerful NLP capabilities into their applications while saving time and resources compared to traditional ML development pipelines and methods.

Read on to see how to use OpenAI GPT-3 within MindsDB and explore the three different operation modes available.

Integrate SQL With OpenAI Using MindsDB

It has become easier than ever for developers to leverage large language models provided by OpenAI. With MindsDB, developers can now easily integrate their databases and OpenAI, allowing them to answer questions with or without context and complete general prompts with single queries. Let’s take a look at how this integration works.

MindsDB has implemented three operation modes to leverage large pre-trained language models provided by the OpenAI API.

- Answering questions without context

- Answering questions with context

- General prompt completion

The first operation mode - answering questions without context - requires only a question as input. The model answers from what it learned during pre-training, with no supporting text supplied.

The second mode - answering questions with context - allows users to input a question along with additional contextual information, such as previous conversations or documents related to the topic under discussion.

The last mode - general prompt completion - enables users to input a prompt in order for the model to generate additional sentences based on its understanding of the prompt.

The choice of the operation mode depends on the use case. However, all three modes are slightly different formulations of the prompt completion task for which most OpenAI models are trained. In that task, the objective is to predict, as well as possible, the words that follow a given chunk of input text.

Let’s find out how to create MindsDB models powered by OpenAI technology.

Apply OpenAI GPT-3 to Your Text Data

Let’s go through all the available operation modes one by one.

Operation Mode 1: Answering Questions Without Context

Here is how to create a model that answers questions without any additional context:

CREATE MODEL questions_without_context_model

PREDICT answer

USING

engine = 'openai',

question_column = 'question';

We create a model named questions_without_context_model in the current project. To learn more about the MindsDB project structure, check out our docs here.

We use the OpenAI engine to create a model in MindsDB. Its input data is stored in the question column, and the output data is saved in the answer column.

Please note that the api_key parameter is optional on cloud.mindsdb.com but mandatory for local/on-premise usage. You can obtain an OpenAI API key by signing up for OpenAI's API services on their website. Once you have signed up, you can find your API key in the API Key section of the OpenAI dashboard. You can then pass this API key to the MindsDB platform when creating models.

To use your own OpenAI API key, the above query would be:

CREATE MODEL questions_without_context_model

PREDICT answer

USING

engine = 'openai',

question_column = 'question',

api_key = 'YOUR_OPENAI_API_KEY';

Alternatively, you can create a MindsDB ML engine that includes the API key, so you don't have to enter it each time:

CREATE ML_ENGINE openai

FROM openai

USING

api_key = 'YOUR_OPENAI_API_KEY';

Once the model completes its training process, we can query it for answers.

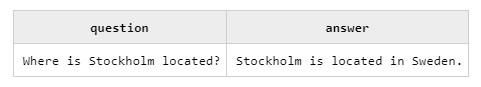

SELECT question, answer

FROM questions_without_context_model

WHERE question = 'Where is Stockholm located?';On execution, we get:
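The query above answers a single question supplied in the WHERE clause. To answer every question stored in a table in one statement, MindsDB lets you join a data table with the model. The sketch below is illustrative only: the example_db data source, its connection parameters, and the support_questions table with its question column are hypothetical names that do not come from the original example.

```sql
-- Hypothetical data source: replace the engine and parameters with your own.
CREATE DATABASE example_db
WITH ENGINE = 'postgres',
PARAMETERS = {
  "host": "127.0.0.1",
  "port": 5432,
  "user": "demo_user",
  "password": "demo_password",
  "database": "demo"
};

-- Hypothetical table support_questions with a text column named question.
-- Each input row is passed to the model and the answer is returned next to it.
SELECT input.question, output.answer
FROM example_db.support_questions AS input
JOIN questions_without_context_model AS output
LIMIT 5;
```

The join takes no ON clause because the model reads the question column from each input row. The LIMIT keeps the number of OpenAI calls small while you experiment.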

Operation Mode 2: Answering Questions With Context

Here is how to create a model that answers questions with additional context:

CREATE MODEL questions_with_context_model

PREDICT answer

USING

engine = 'openai',

question_column = 'question',

context_column = 'context';

There is one additional parameter - the context_column parameter. It names the column that holds the context the model should consider when answering the question.

Once the model completes its training process, we can query it for answers.
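One way to check whether a model is ready is to look it up in the project's models table. The sketch below assumes the model was created in the default project, taken here to be named mindsdb. Adjust the project name if yours differs.

```sql
-- Assumption: the model lives in the default project, named mindsdb here.
-- The status column should read 'complete' before the model is queried.
SELECT *
FROM mindsdb.models
WHERE name = 'questions_with_context_model';
```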

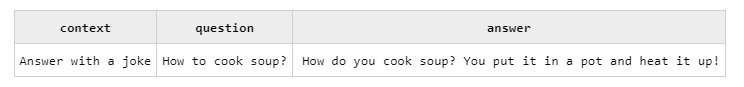

SELECT context, question, answer

FROM questions_with_context_model

WHERE context = 'Answer with a joke'

AND question = 'How to cook soup?';On execution, we get:

Operation Mode 3: Prompt Completion

Here is how to create a model that offers the most flexible mode of operation. It completes any query provided in the prompt_template parameter, which can involve multiple input columns. In contrast to the other two modes, templates can be used to do interesting things other than question answering, like summarization, translation, or automated text formatting.

Please note that good prompts are the key to getting great completions out of large language models like the ones that OpenAI offers. For best performance, we recommend you read their prompting guide before trying your hand at prompt templating.

CREATE MODEL prompt_completion_model

PREDICT answer

USING

engine = 'openai',

prompt_template = 'Context: {{context}}. Question: {{question}}. Answer:',

max_tokens = 100,

temperature = 0.3;

Now we have three new parameters.

- The prompt_template parameter defines the input prompt to the model for each row in the data source. Multiple input columns can be referenced as {{column}} placeholders, in any order.

- The max_tokens parameter defines the maximum number of tokens the model can generate for each prediction.

- The temperature parameter defines how creative or risky the answers are.

Please note that all three parameters can be overridden at prediction time.

Here is an example that uses parameters provided at model creation time:

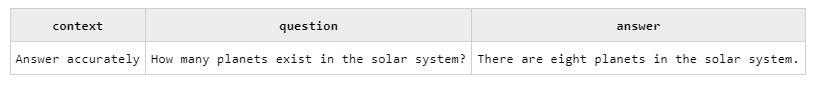

SELECT context, question, answer

FROM prompt_completion_model

WHERE context = 'Answer accurately'

AND question = 'How many planets exist in the solar system?';On execution, we get:

Now let's look at an example that overrides parameters at prediction time:

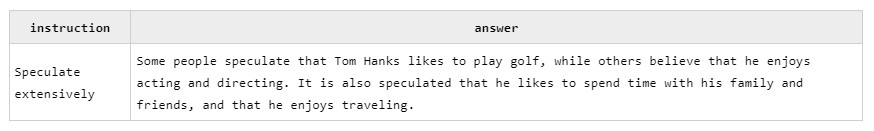

SELECT instruction, answer

FROM prompt_completion_model

WHERE instruction = 'Speculate extensively'

USING

prompt_template = '{{instruction}}. What does Tom Hanks like?',

max_tokens = 100,

temperature = 0.5;On execution, we get:
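The use cases listed earlier, such as sentiment analysis, can be expressed in the same mode, since only the prompt template changes. The following is a minimal sketch under assumptions: the model name, the example_db.product_reviews table, and its review column are hypothetical, a data source named example_db is assumed to be connected to MindsDB already, and the prompt wording is just one possible phrasing.

```sql
-- Hypothetical model: classifies each review as positive, neutral, or negative.
CREATE MODEL sentiment_classifier_model
PREDICT sentiment
USING
  engine = 'openai',
  prompt_template = 'Describe the sentiment of the review strictly as "positive", "neutral", or "negative". Review: {{review}}. Sentiment:',
  max_tokens = 10,
  temperature = 0.1;

-- Hypothetical table product_reviews with a text column named review.
SELECT input.review, output.sentiment
FROM example_db.product_reviews AS input
JOIN sentiment_classifier_model AS output
LIMIT 5;
```

A short max_tokens and a low temperature suit a labeling task, where the expected output is a single word and should vary as little as possible between runs.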

Conclusion

In this tutorial, you have learned how to use MindsDB and OpenAI GPT-3 to extract insights from text data inside databases with just a few SQL commands.

You can now run many NLP tasks on your own data, so check the MindsDB docs for a library of helpful examples and code samples you can copy and execute.

Get started with NLP today!

Published at DZone with permission of Jorge Torres. See the original article here.
