Foundational Building Blocks for AI Applications

This article explores how industries can build, utilize, and implement AI solutions, and provides an in-depth look at technical implementation strategies.

Startups are revolutionizing their growth trajectory by harnessing AI technologies. From intelligent chatbots handling customer inquiries to sophisticated recommendation systems personalizing user experiences, AI tools are enabling small teams to achieve outsized impact. Modern startups can now automate complex operations, derive actionable insights from data, and scale their services efficiently, capabilities once reserved for large enterprises. Let's explore the practical implementation of AI in startups, examining both the essential technology stack and real-world applications through the lens of system architecture.

This article covers two ways to use AI models in your organization's solution stack:

- AI Chat Applications

- AI Personalization

AI Chat Applications

AI chat applications have revolutionized how businesses and individuals interact with technology, offering intelligent, real-time responses to queries and tasks. These applications leverage advanced natural language processing to understand user intent, provide contextually relevant information, and automate routine interactions that would traditionally require human intervention. From customer service and technical support to personal assistance and language learning, AI chat applications are transforming digital communication by delivering 24/7 availability, consistent responses, and scalable solutions that adapt to user needs.

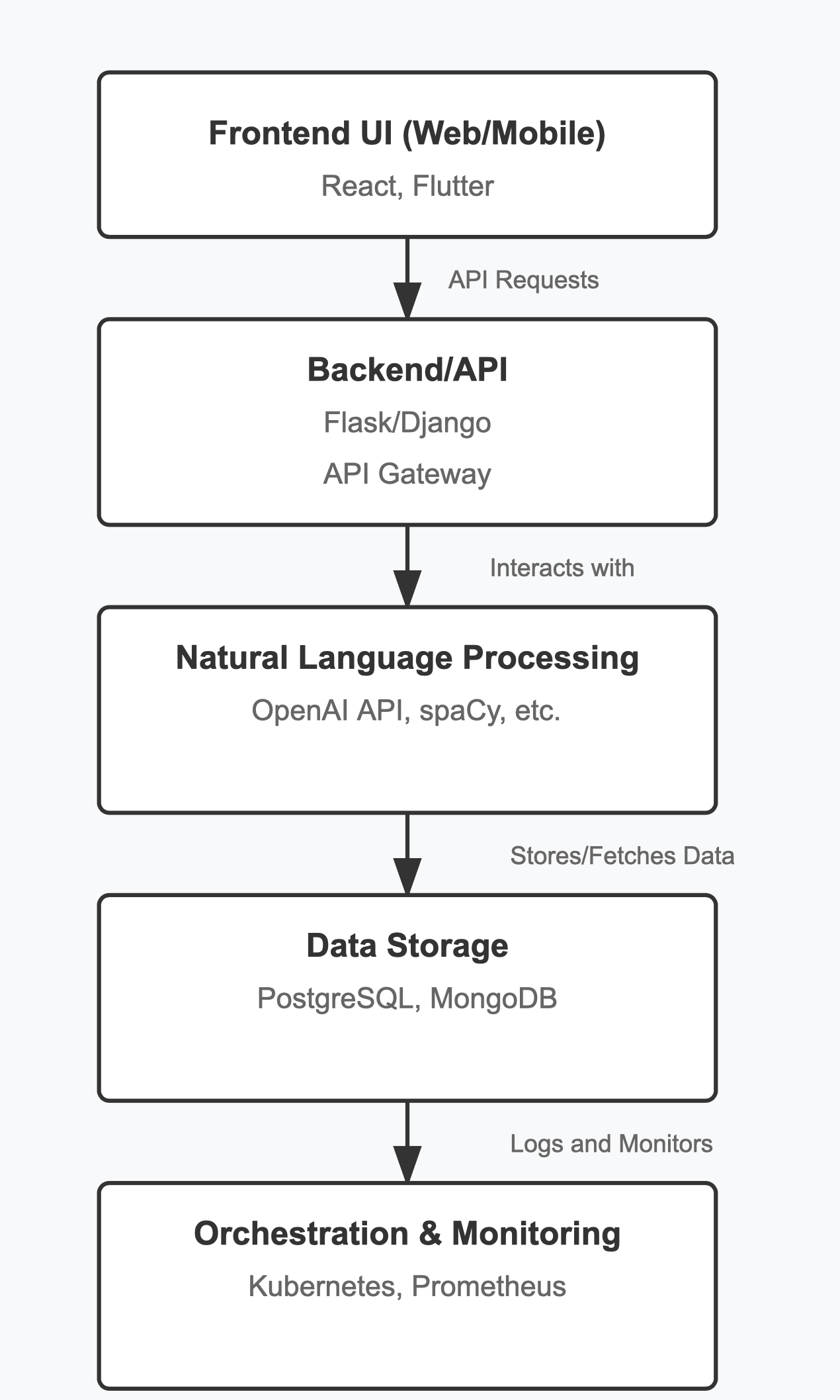

Building Blocks for AI Chat Applications

AI chat applications are built using key components like user interfaces, backend systems, AI processing, data management, and orchestration tools to create smooth and responsive experiences.

User Interface (UI)

- Frontend: React (for web) or Flutter (for cross-platform mobile).

- Messaging Platform Integration: Channels like WhatsApp, Facebook Messenger, or a custom web chat UI.

Backend

- Server Framework: Flask, Django, or Node.js to handle requests.

- API Gateway: Use a managed gateway (such as Amazon API Gateway) or NGINX for request routing and security.

AI Processing

- AI Model APIs: OpenAI API for NLP (for chatbots) or a framework like TensorFlow or PyTorch if training custom models.

- Language Understanding Layer: Incorporate NLP libraries, such as spaCy or NLTK, or use conversational AI platforms like Dialogflow or Rasa.

Data Layer

- Databases: PostgreSQL or MongoDB for structured and unstructured data.

- Data Pipelines: Use Apache Kafka or RabbitMQ to manage message streams.

- Data Warehouse: Amazon Redshift or Snowflake for storing and analyzing historical data.

Orchestration and Monitoring

- Model Orchestration: Use Kubernetes or Docker Swarm to manage containers.

- Monitoring Tools: Prometheus and Grafana are used to monitor API performance, model drift, and other metrics.

Here’s a basic code snippet for building a simple backend in Python using Flask and OpenAI’s API to handle chatbot responses.

Step 1: Install Dependencies

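    pip install flask "openai>=1.0"

Step 2: Create the Flask Backend

    import os

    from flask import Flask, request, jsonify
    from openai import OpenAI

    # Create the OpenAI client; the API key is read from the environment
    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

    app = Flask(__name__)

    @app.route('/chat', methods=['POST'])
    def chat():
        # Parse the JSON body and reject requests without a message
        payload = request.get_json(silent=True) or {}
        user_message = payload.get("message")
        if not user_message:
            return jsonify({"error": "Missing 'message' field"}), 400

        # Generate a response from the AI model
        response = client.chat.completions.create(
            model="gpt-4o-mini",  # example model name; use one available to your account
            messages=[{"role": "user", "content": user_message}],
            max_tokens=100,
            temperature=0.7
        )

        # The reply text lives on the first choice's message
        bot_reply = (response.choices[0].message.content or "").strip()
        return jsonify({"reply": bot_reply})

    if __name__ == "__main__":
        # debug=True is intended for local development only
        app.run(debug=True)

Explanation of Code

- Endpoint: The /chat endpoint receives user messages as JSON in a POST request and returns a 400 error if the message field is missing.

- AI Model Integration: The client.chat.completions.create() method generates responses using the OpenAI model.

- Response: The bot's reply is sent back in JSON format.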
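
To try the endpoint, a small client can post a message and read the reply. The following is a minimal sketch using only the standard library. It assumes the Flask development server is running locally on its default port (5000); the helper names `build_chat_request` and `send_chat_message` are illustrative and not part of the original snippet.

```python
import json
import urllib.request


def build_chat_request(message, base_url="http://127.0.0.1:5000"):
    """Build a POST request carrying {"message": ...} for the /chat endpoint."""
    payload = json.dumps({"message": message}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/chat",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_message(message, base_url="http://127.0.0.1:5000"):
    """Send the message and return the "reply" field from the JSON response."""
    request = build_chat_request(message, base_url)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))["reply"]
```

With the server running, `send_chat_message("What are your opening hours?")` returns the bot's reply as a string.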

Considerations for Deployment

- Containerization: Use Docker to containerize the application for easy deployment.

- Scaling: For higher traffic, deploy using Kubernetes or a cloud provider like AWS, Azure, or Google Cloud.

- Data Security: Implement secure handling of user data, especially if handling sensitive information.
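
As one small piece of the data security point above, chat messages can be masked before they are written to logs or analytics stores. The sketch below is illustrative only: the function name `redact_pii` and both regular expressions are assumptions, and they catch only simple email and phone formats, so they are no substitute for a dedicated PII detection tool.

```python
import re

# Illustrative patterns only; real-world PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text):
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    print(redact_pii("Reach me at jane@example.com or +1 415-555-0100."))
```

Calling this in the request handler before logging keeps raw identifiers out of log files while leaving the rest of the message readable.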

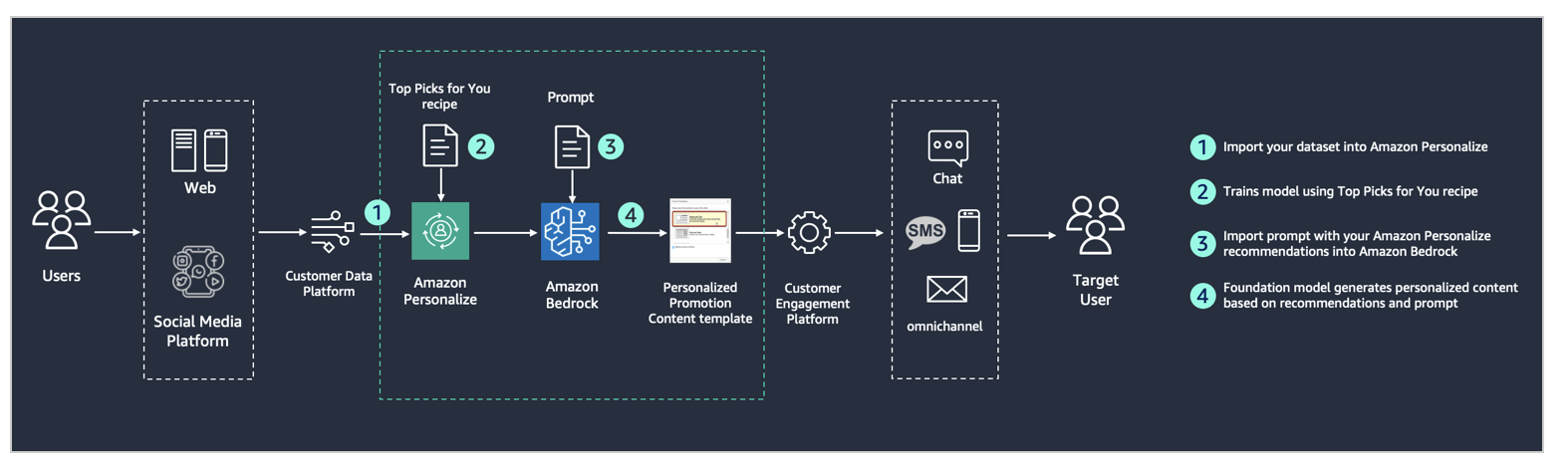

AI Applications for Personalization

To create personalized content for an end user, you can use AI models to analyze user data (such as preferences or past interactions) and generate content from those insights. This section shows how to use an OpenAI language model to generate personalized content, such as tailored recommendations or content summaries, from a user's profile.

Building Blocks for AI Personalization

Personalization using AI requires several core building blocks. These components work together to understand user preferences, predict their needs, and deliver tailored recommendations or content.

Collect and Process Data

Collect and organize user data, such as browsing behavior, preferences, demographic data, and interaction history.

- Data Storage: SQL or NoSQL databases (e.g., PostgreSQL, MongoDB).

- Data Pipeline: Tools like Apache Kafka, Apache Spark, or ETL pipelines to manage the flow of data from collection to processing.
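
The processing step can be pictured as turning a stream of raw interaction events into per-user counts. The following is a minimal in-memory sketch of that idea; the event fields (`user_id`, `category`, `action`) and the function name are hypothetical, and a production pipeline would read from a tool such as Kafka or Spark instead of a Python list.

```python
from collections import Counter, defaultdict


def build_interaction_profiles(events):
    """Group raw events by user and count interactions per content category."""
    profiles = defaultdict(Counter)
    for event in events:
        profiles[event["user_id"]][event["category"]] += 1
    # Convert to plain dicts so the result is easy to store or serialize.
    return {user: dict(counts) for user, counts in profiles.items()}


if __name__ == "__main__":
    sample_events = [
        {"user_id": "u1", "category": "ai", "action": "view"},
        {"user_id": "u1", "category": "ai", "action": "click"},
        {"user_id": "u1", "category": "cloud", "action": "view"},
        {"user_id": "u2", "category": "ai", "action": "view"},
    ]
    print(build_interaction_profiles(sample_events))
```

The resulting counts can serve as simple features for the profiling and recommendation steps that follow.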

Build User Profiles

Create detailed profiles and segment users based on similar behaviors, preferences, or demographic data to enable more targeted recommendations.

- Clustering Models: Use k-means clustering or DBSCAN to group similar users.

- Feature Engineering: Tools like pandas in Python for data manipulation.
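
To make the clustering step concrete, here is a small pure-Python sketch of k-means (Lloyd's algorithm) that groups users by numeric features. It is written without external libraries for illustration; in practice a library implementation such as scikit-learn's would normally be used. The feature choice in the example (visits per week, average session minutes) is an assumption.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Cluster points (tuples of floats) into k groups with Lloyd's algorithm."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # pick k distinct points as starting centroids
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                new_centroids.append(
                    tuple(sum(dim) / len(members) for dim in zip(*members))
                )
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster in place
        if new_centroids == centroids:
            break  # converged: centroids no longer move
        centroids = new_centroids
    return assignments, centroids


if __name__ == "__main__":
    # Hypothetical features: (visits per week, average session minutes)
    users = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
    labels, centers = kmeans(users, k=2)
    print(labels, centers)
```

Each label identifies a user segment, which can then be used to target recommendations. Features on very different scales should be normalized first, since k-means relies on Euclidean distance.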

Recommend and Generate Content

Suggest relevant content or products to users by understanding their preferences or similarities with other users.

- Collaborative Filtering: Uses past interactions between users and items (like products, songs, or articles) to make recommendations.

- Content-Based Filtering: Uses user profile data and item characteristics for personalized suggestions.

- Hybrid Models: Combine collaborative and content-based approaches, often implemented with frameworks such as TensorFlow or PyTorch.
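
As an illustration of collaborative filtering, the sketch below implements a simple user-based variant: it finds users with similar rating patterns using cosine similarity, then ranks items the target user has not yet rated. The data layout (a dict of user to item ratings) and the function names are assumptions made for this example, not a prescribed design.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two sparse rating dicts (item -> rating)."""
    shared = set(a) & set(b)
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(target_user, ratings, top_n=3):
    """Rank items the target user has not rated by similarity-weighted ratings."""
    target = ratings[target_user]
    scores = {}
    for other, other_ratings in ratings.items():
        if other == target_user:
            continue
        similarity = cosine_similarity(target, other_ratings)
        if similarity <= 0:
            continue  # ignore users with nothing in common
        for item, rating in other_ratings.items():
            if item not in target:
                scores[item] = scores.get(item, 0.0) + similarity * rating
    ranked = sorted(scores.items(), key=lambda pair: pair[1], reverse=True)
    return [item for item, _ in ranked[:top_n]]


if __name__ == "__main__":
    sample_ratings = {
        "alice": {"laptop": 5, "headphones": 4},
        "bob": {"laptop": 5, "headphones": 5, "keyboard": 4},
        "carol": {"novel": 5, "headphones": 1, "bookmark": 3},
    }
    print(recommend("alice", sample_ratings))
```

In this sample, bob's tastes are closest to alice's, so the item he rated that she has not seen ranks first. A content-based or hybrid approach would add item attributes to the scoring.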

Analyze and Adapt With Feedback

Continuously improve personalization models by incorporating user feedback, such as click-through rates or explicit ratings, to refine recommendations.

- Model Retraining Pipelines: Use MLOps tools like Kubeflow, MLflow, or Amazon SageMaker to update models based on feedback.

- Monitoring Tools: Prometheus and Grafana are used to monitor model performance and behavior in real time.
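
The feedback loop can start from something as simple as tracking click-through rate (CTR) per recommended item and using it as a retraining signal. The following sketch assumes a hypothetical event format (`item_id`, `clicked`) and an arbitrary threshold; a real pipeline would feed this kind of signal into a tool such as Kubeflow or MLflow instead of a standalone function.

```python
from collections import defaultdict


def click_through_rates(events):
    """Return clicks divided by impressions for each recommended item."""
    impressions = defaultdict(int)
    clicks = defaultdict(int)
    for event in events:
        impressions[event["item_id"]] += 1
        if event["clicked"]:
            clicks[event["item_id"]] += 1
    return {item: clicks[item] / count for item, count in impressions.items()}


def needs_retraining(ctr_by_item, min_ctr=0.05):
    """Flag a retrain when the average CTR drops below a chosen threshold."""
    if not ctr_by_item:
        return False
    average = sum(ctr_by_item.values()) / len(ctr_by_item)
    return average < min_ctr


if __name__ == "__main__":
    sample_events = [
        {"item_id": "a", "clicked": True},
        {"item_id": "a", "clicked": False},
        {"item_id": "a", "clicked": False},
        {"item_id": "a", "clicked": False},
        {"item_id": "b", "clicked": False},
        {"item_id": "b", "clicked": False},
    ]
    rates = click_through_rates(sample_events)
    print(rates, needs_retraining(rates, min_ctr=0.2))
```

The threshold and averaging method are design choices; weighting by impressions or comparing against a historical baseline are common alternatives.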

Here is a sample snippet to leverage OpenAI models for personalization:

    import os

    # Import OpenAI's client library (version 1.0 or later)
    from openai import OpenAI

    # Create the client; the API key is read from the environment
    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

    def generate_personalized_content(user_profile):
        """Create personalized content from a user profile dictionary."""
        # User profile information can include preferences, interests, or recent activity
        preferences = user_profile.get("preferences", "technology and science")
        recent_activity = user_profile.get("recent_activity", "AI")

        # Customize the prompt for content generation
        prompt = (
            f"Generate a personalized article summary for a user interested in {preferences}. "
            f"The user recently engaged with content about {recent_activity}. "
            "Create a summary that includes recent trends, news, and interesting topics "
            "to keep them engaged and informed."
        )

        # Generate content based on the prompt and user profile
        response = client.chat.completions.create(
            model="gpt-4o-mini",  # example model name; use one available to your account
            messages=[{"role": "user", "content": prompt}],
            max_tokens=150,
            temperature=0.7,
            n=1  # Generate one response
        )

        # Extract and return the generated content
        personalized_content = (response.choices[0].message.content or "").strip()
        return personalized_content

    # Example user profile data
    user_profile = {
        "preferences": "technology and science",
        "recent_activity": "AI and machine learning",
    }

    # Generate personalized content for the user
    content = generate_personalized_content(user_profile)
    print("Personalized Content:\n", content)

Explanation of Code

- User Profile Input: This snippet uses a dictionary to store user preferences and recent activity. These details allow the AI to create more relevant content.

- Customized Prompt: The prompt includes specific details from the user's profile, guiding the AI to generate personalized content that reflects their interests.

- AI Model Request: The client.chat.completions.create() method sends the prompt to the AI model, which then returns a response based on the input data.

- Output: The function returns a personalized summary or content recommendation based on the user profile.

Industry Examples of Startups Leveraging AI

- E-commerce: Startups like Stitch Fix use AI to provide personalized shopping experiences through recommendation engines and chatbots that assist users in finding items based on preferences.

- Healthcare: Babylon Health and Ada Health use AI chatbots to provide symptom checks and virtual health consultations, allowing users to get medical advice instantly and securely.

- Finance: Digit and Trim use AI to analyze users’ spending habits, recommend savings strategies, and even automate budget management through a user-friendly app interface.

Conclusion

By implementing a large language model in their technical stack, startups can offer personalized, scalable, and cost-effective solutions. Whether through customer service chatbots, recommendation engines, or predictive analytics, utilizing AI helps startups stand out, scale quickly, and meet growing demands. The sample architecture and code here are a foundation, but there’s flexibility for customization based on specific business needs and growth ambitions.
