HDFS Architecture and Functionality

In this article, we take a deep dive into the file system used by Hadoop called HDFS (Hadoop Distributed File System).


First of all, thank you for the overwhelming response to my previous article (Big Data and Hadoop: An Introduction). In that article, I gave a brief overview of Hadoop and its benefits. If you have not read it yet, please take some time to get a glimpse into this rapidly growing technology. In this article, we will take a deep dive into the file system used by Hadoop, called HDFS (Hadoop Distributed File System).

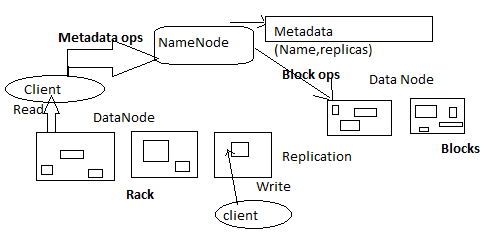

HDFS is the storage layer of the Hadoop system. It is a block-structured file system where each file is divided into blocks of a predetermined size. These blocks are stored across a cluster of one or more machines. HDFS works with two types of nodes: the NameNode (master) and DataNodes (slaves). So let's dive in.
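To make the block-structured idea concrete, here is a minimal sketch of how a file's byte range can be cut into fixed-size blocks. It is a toy model, not Hadoop code: the function name `split_into_blocks` is hypothetical, and the 128 MB block size is an assumption (it matches the default in recent Hadoop releases, but the value is configurable).

```python
BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size in bytes (128 MB)


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return (offset, length) pairs that cover a file of file_size bytes."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    blocks = []
    offset = 0
    while offset < file_size:
        # Every block is full-sized except possibly the last one.
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


if __name__ == "__main__":
    mb = 1024 * 1024
    for offset, length in split_into_blocks(300 * mb):
        print(f"block at offset {offset // mb} MB, length {length // mb} MB")
```

Note that the last block only occupies as much space as the remaining data needs; it is not padded up to the full block size.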

Hadoop NameNodes

The NameNode is the centerpiece of HDFS. It keeps the directory tree of all files in the file system and tracks where in the cluster the data for each file is kept. It does not store the file data itself. Because every client depends on it, the NameNode should run on a highly available machine. Client applications talk to the NameNode whenever they wish to locate a file, or when they want to add/copy/move/delete a file. The NameNode also executes file system namespace operations, such as opening, closing, and renaming files and directories. All DataNodes in the Hadoop cluster send heartbeats and block reports to the NameNode. Heartbeats let the NameNode confirm that the DataNodes are alive. A block report contains a list of all blocks on a DataNode. The major files associated with the cluster metadata in the NameNode are:

EditLogs: contain all the recent modifications made to the file system since the most recent FsImage. When the NameNode receives a create/update/delete request from the client, the request is first recorded in the edits file.

FsImage: stands for file system image. It contains the complete file system namespace since the creation of the NameNode. Files and directories are represented in the NameNode by inodes. Inodes record attributes such as permissions, modification and access times, and namespace and disk space quotas.

Hadoop DataNodes

DataNodes are the commodity hardware where the actual data resides. They are also known as slave nodes. Multiple DataNodes are grouped into racks. DataNodes perform block replica creation, deletion, and replication according to instructions from the NameNode. They also send heartbeats to the NameNode to report the health of HDFS. By default, the heartbeat interval is 3 seconds.
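The heartbeat and block report bookkeeping can be sketched as a small tracker on the NameNode side. This is a toy model under stated assumptions: the class `HeartbeatMonitor` and its methods are hypothetical names, and the 30-second `DEAD_AFTER` threshold is purely illustrative (it is not the value HDFS uses to declare a DataNode dead).

```python
HEARTBEAT_INTERVAL = 3.0  # seconds, the default interval mentioned above
DEAD_AFTER = 30.0         # seconds; illustrative threshold, NOT the real HDFS value


class HeartbeatMonitor:
    """Toy NameNode-side record of when each DataNode last reported in."""

    def __init__(self, dead_after=DEAD_AFTER):
        self.dead_after = dead_after
        self.last_seen = {}      # DataNode id -> timestamp of last heartbeat
        self.block_reports = {}  # DataNode id -> set of block ids it holds

    def heartbeat(self, node_id, now):
        self.last_seen[node_id] = now

    def block_report(self, node_id, block_ids):
        self.block_reports[node_id] = set(block_ids)

    def live_nodes(self, now):
        return sorted(n for n, t in self.last_seen.items()
                      if now - t <= self.dead_after)

    def dead_nodes(self, now):
        return sorted(n for n, t in self.last_seen.items()
                      if now - t > self.dead_after)


if __name__ == "__main__":
    monitor = HeartbeatMonitor()
    monitor.heartbeat("dn1", now=0.0)
    monitor.heartbeat("dn2", now=0.0)
    monitor.block_report("dn1", ["blk_A", "blk_B"])
    monitor.heartbeat("dn1", now=33.0)  # dn2 stays silent
    print("live:", monitor.live_nodes(now=35.0))
    print("dead:", monitor.dead_nodes(now=35.0))
```

Timestamps are passed in explicitly rather than read from the clock, which keeps the sketch deterministic and easy to test.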

Secondary NameNodes

In HDFS, when a NameNode starts, it first reads the HDFS state from the FsImage file. After that, it applies the edits from the edits log file. The NameNode then writes the new HDFS state to the FsImage and starts normal operations with an empty edits file. Because the NameNode merges the FsImage and edits files only at startup, the edit log can grow very large over time. A side effect of a larger edits file is that the next restart of the NameNode takes longer. The secondary NameNode solves this issue. It downloads the FsImage and EditLogs from the NameNode and then merges the edit logs with the FsImage (File System Image), which keeps the edit log's size within a limit. It stores the modified FsImage in persistent storage so we can use it in the case of a NameNode failure. In short, the secondary NameNode performs regular checkpoints in HDFS.
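The checkpoint idea described above, replaying the edit log on top of the last image and then starting over with an empty log, can be sketched as follows. This is a simplified model and not the on-disk format: the namespace is a plain dictionary, each edit is a hypothetical `(op, path, value)` tuple, and `apply_edits` and `checkpoint` are illustrative names.

```python
def apply_edits(fsimage, edits):
    """Return a new namespace dict with each (op, path, value) edit applied in order."""
    namespace = dict(fsimage)  # copy, so the old image stays untouched
    for op, path, value in edits:
        if op in ("create", "update"):
            namespace[path] = value
        elif op == "delete":
            namespace.pop(path, None)
        else:
            raise ValueError(f"unknown edit operation: {op}")
    return namespace


def checkpoint(fsimage, edits):
    """Merge the edit log into the image; return (new_fsimage, empty_edit_log)."""
    return apply_edits(fsimage, edits), []


if __name__ == "__main__":
    fsimage = {"/a.txt": 1}
    edits = [
        ("create", "/b.txt", 2),
        ("update", "/a.txt", 3),
        ("delete", "/b.txt", None),
    ]
    fsimage, edits = checkpoint(fsimage, edits)
    print(fsimage, edits)
```

The longer the edit list, the more work `apply_edits` has to do, which is why merging periodically keeps a later restart short.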

How HDFS Works

HDFS works under the following assumption: write once and read often.

Write in HDFS

When a client wants to write a file to HDFS, it communicates with the NameNode to obtain metadata. The NameNode responds with the number of blocks, their locations, replicas, and other details. Based on this information, the client splits the file into multiple blocks. After that, it starts sending them to the first DataNode.

The client first sends Block A to DataNode 1, along with the details of the other two DataNodes. When DataNode 1 receives Block A from the client, it copies the block to DataNode 2 in the same rack. Because both DataNodes are in the same rack, the block is transferred via the rack switch. DataNode 2 then copies the block to DataNode 3. Because these two DataNodes are in different racks, the block is transferred via an out-of-rack switch. When a DataNode receives a block, it sends a write confirmation to the NameNode. The same process is repeated for each block in the file.
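The pipeline in this example can be sketched as a chain in which the client talks only to the first DataNode and each node forwards the block to the next. This is a toy in-memory model: the `DataNode` class, `write_block` function, and rack labels are hypothetical, and the write confirmations to the NameNode are left out for brevity.

```python
class DataNode:
    """Toy DataNode that stores blocks in memory and forwards them downstream."""

    def __init__(self, name, rack):
        self.name = name
        self.rack = rack
        self.blocks = {}  # block id -> bytes

    def receive(self, block_id, data, downstream):
        """Store the block, then forward it to the next node in the pipeline."""
        self.blocks[block_id] = data
        hops = []
        if downstream:
            nxt = downstream[0]
            link = "rack switch" if nxt.rack == self.rack else "out-of-rack switch"
            hops.append((self.name, nxt.name, link))
            hops.extend(nxt.receive(block_id, data, downstream[1:]))
        return hops


def write_block(block_id, data, pipeline):
    """The client sends the block to the first DataNode only; returns the hops taken."""
    first, rest = pipeline[0], pipeline[1:]
    return first.receive(block_id, data, rest)


if __name__ == "__main__":
    dn1 = DataNode("dn1", rack="rack1")
    dn2 = DataNode("dn2", rack="rack1")
    dn3 = DataNode("dn3", rack="rack2")
    for src, dst, link in write_block("A", b"block A bytes", [dn1, dn2, dn3]):
        print(f"{src} -> {dst} via {link}")
```

The design point is that the client uploads each block once; replication bandwidth is spent between DataNodes rather than on the client's link.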

Create a File in HDFS

If the client has to create a file inside HDFS, it needs to interact with the NameNode. The NameNode provides the addresses of all the slaves where the client can write its data. The client also gets a security token from the NameNode, which it needs to present to the slaves for authentication before writing the block.

To create a file, the client executes the create() method on the DistributedFileSystem. Now the DistributedFileSystem interacts with the NameNode by making an RPC (Remote Procedure Call) for creating a new file that has no blocks associated with it in the file system namespace. Various checks are executed by the NameNode in order to make sure that there is no such file already present and that the client is authorized to create a new file.

If all this goes smoothly, then a record of the new file is created by the NameNode; otherwise, file creation fails and an IOException is thrown to the client. The DistributedFileSystem returns an FSDataOutputStream to the client so that it can start writing data to the DataNodes. Communication between the client and the DataNodes is handled by the DFSOutputStream, which the FSDataOutputStream wraps.
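The NameNode-side checks during file creation can be sketched as below. This is a toy model rather than the Hadoop API: `ToyNameNode`, its `writers` set, and the use of Python's `PermissionError` and `FileExistsError` (both subclasses of `OSError`) in place of Java's IOException are all assumptions made for illustration.

```python
class ToyNameNode:
    """Toy namespace that only tracks which files exist and who may create them."""

    def __init__(self, writers):
        self.writers = set(writers)  # users allowed to create files (assumption)
        self.namespace = {}          # path -> list of block ids

    def create(self, path, user):
        # Check 1: the client must be authorized to create a new file.
        if user not in self.writers:
            raise PermissionError(f"{user} may not create {path}")
        # Check 2: the file must not already exist.
        if path in self.namespace:
            raise FileExistsError(f"{path} already exists")
        # Record the new file with no blocks associated with it yet.
        self.namespace[path] = []
        return path


if __name__ == "__main__":
    namenode = ToyNameNode(writers=["alice"])
    namenode.create("/data/log.txt", user="alice")
    try:
        namenode.create("/data/log.txt", user="alice")
    except OSError as err:
        print("create failed:", err)
```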

Read in HDFS

This is a comparatively easier operation. This operation takes place in two steps:

Client interaction with the NameNode.

Client interaction with the DataNode.

The NameNode holds all the information about which blocks make up a specific file and which slave in HDFS stores each block. Hence, the client needs to interact with the NameNode in order to get the addresses of the slaves where the blocks are actually stored. The NameNode will provide the details of the slaves that contain the required blocks.

Let’s understand the client and NameNode interaction in more detail. To access the blocks stored in HDFS, a client calls the open() method on the FileSystem object, which for HDFS is an instance of DistributedFileSystem. The FileSystem then interacts with the NameNode using RPC (Remote Procedure Call) to get the locations of the slaves that contain the blocks of the file requested by the client. At this stage, the NameNode checks whether the client is authorized to access that file. If it is, the NameNode sends the information about the blocks' locations. It also gives the client a security token, which the client needs to show to the slaves for authentication.

Now, after receiving the addresses of the slaves containing the blocks, the client interacts directly with the slaves to read the blocks. The data flows directly from the slaves to the client (it does not flow via the NameNode). The client reads the data blocks from multiple slaves in parallel.

Let’s understand the client and DataNode interaction in more detail. The client sends a read request to the slaves via the FSDataInputStream object. All the interactions between the client and the DataNodes are managed by the DFSInputStream, which is a part of the client APIs. The client shows the slaves the authentication token that was provided by the NameNode. The client then starts reading the blocks using the InputStream API, and data is transmitted continuously from the DataNode to the client. After reaching the end of a block, the DFSInputStream closes the connection to the DataNode.

A read operation is highly optimized because it does not involve the NameNode in the actual data read; otherwise, the NameNode would become a bottleneck. Due to this distributed parallel read mechanism, thousands of clients can read data directly from DataNodes very efficiently.
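The two-step read, first metadata from the NameNode and then data straight from the DataNodes, can be sketched as follows. This is a toy in-memory model: `BlockLocator` stands in for the NameNode, `read_file` for the client, each DataNode is a plain dictionary, and the security token exchange is left out. All names are hypothetical.

```python
class BlockLocator:
    """Toy stand-in for the NameNode: holds metadata only, never file contents."""

    def __init__(self, file_blocks, block_locations, readers):
        self.file_blocks = file_blocks          # path -> ordered list of block ids
        self.block_locations = block_locations  # block id -> list of DataNode names
        self.readers = set(readers)             # users allowed to read (assumption)

    def get_block_locations(self, path, user):
        if user not in self.readers:
            raise PermissionError(f"{user} may not read {path}")
        if path not in self.file_blocks:
            raise FileNotFoundError(path)
        return [(b, self.block_locations[b]) for b in self.file_blocks[path]]


def read_file(path, user, locator, datanodes):
    """Step 1: ask the locator where blocks live. Step 2: fetch them from DataNodes."""
    data = b""
    for block_id, holders in locator.get_block_locations(path, user):
        for name in holders:
            store = datanodes[name]  # dict: block id -> bytes
            if block_id in store:    # use the first replica that is available
                data += store[block_id]
                break
        else:
            raise IOError(f"no replica available for block {block_id}")
    return data


if __name__ == "__main__":
    locator = BlockLocator(
        file_blocks={"/log": ["A", "B"]},
        block_locations={"A": ["dn1", "dn2"], "B": ["dn2", "dn3"]},
        readers=["alice"],
    )
    datanodes = {
        "dn1": {},  # dn1 has lost its copy of block A
        "dn2": {"A": b"hello ", "B": b"world"},
        "dn3": {"B": b"world"},
    }
    print(read_file("/log", "alice", locator, datanodes))
```

Note that `BlockLocator` never touches the block contents, which mirrors why the NameNode does not become a bottleneck for reads.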

So that's a lot of stuff to remember. Stay tuned for the next article on the processing mechanics of Hadoop. Till then, happy learning!
