The Importance of Persistent Storage in Kubernetes- OpenEBS

Join the DZone community and get the full member experience.

Join For FreeContainers are not built to persist data. When a container is created, it only runs the process it hosts and terminates. Any data that it creates or processes are also discarded when the container exits. Containers are built to be ephemeral as this makes them lightweight and helps to keep containerized workloads independent of a host’s filesystem which results in flexible, portable and platform-agnostic applications. These benefits, however, creates a few challenges as well when it comes to orchestrating storage for containerized workloads:

- The need for appropriate tools that enable data sharing across immutable containers

- Options for backup and discovery in the event of application failure

- Means to get rid of stored data once it is no longer needed so that hosts can efficiently handle newer workloads

In Kubernetes, PODs are also ephemeral. Kubernetes supports various options to persist data for containerized workloads in different formats. This article explores various tools and strategies that facilitate persistent data storage in Kubernetes.

How Kubernetes handles Persistent Storage

Kubernetes supports multiple options for the requisition and consumption of storage resources. The basic building block of Kubernetes storage architecture is the volume. This section explores central Kubernetes storage concepts and other integrations that allow for the provisioning of highly available storage for containerized applications.

Kubernetes Storage Primitives:

Volume

A volume is a directory that contains data that can be consumed by containers running in a POD. To attach a volume to a specific POD, it is specified in .spec.volumes and is mounted to containers by specifying in .spec.containers[*].volumeMounts. Kubernetes supports different types of volumes depending on the medium hosting them and their contents. There are two main classes of volumes:

- Ephemeral volumes - These share the life of a POD and are destroyed as soon as the POD ceases to exist.

- Persistent Volumes - Exist beyond a POD’s lifetime.

Persistent Volume and Persistent Volume Claims

A Persistent Volume (PV) is a Kubernetes resource that represents a unit of storage available to the cluster. The lifecycle of a PV is independent of the POD consuming it, so data stored in the PV is available even after containers restart. A PV is an actual Kubernetes object that captures details of how a volume implements storage and is configured using a YAML file with specifications similar to:

apiVersion: v1

kind: PersistentVolume

metadata:

name: darwin-volume

labels:

type: local

spec:

storageClassName: dev

capacity:

storage: 10Gi

accessModes:

- ReadWriteMany

hostPath:

path: "/mnt/data"A Persistent Volume Claim (PVC) is the Kubernetes object that PODs use to request a specific portion of storage. The PVC is also a Kubernetes resource and can have specifications similar to:

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: darwin-claim

spec:

storageClassName: dev

accessModes:

- ReadWriteMany

resources:

requests:

storage: 3GiA PVC can be attached to a pod with a specification similar to:

spec:

volumes:

- name: darwin-storage

persistentVolumeClaim:

claimName: darwin-claim

containers:

- name: darwin-container

image: nginx

ports:

- containerPort: 80Once the PVC is created in the cluster via the kubectl apply command, the Kubernetes Master Node searches for a PV that meets the requirements listed by the claim. If an appropriate PV exists with the same storageClassName specification, the PV is bound to the volume.

Supported Persistent Volumes in Kubernetes

Kubernetes supports different kinds of PVs for containerized applications. These include:

- awsElasticBlockStore (EBS)

- azureDisk

- azureFile

- cephfs

- Cinder

- gcePersistentDisk

- glusterfs

- hostPath

- Iscsi

- portworxVolume

- storageOS

- vsphereVolume

Storage Classes

Every application typically requires storage with different properties to run different workloads. PVCs allow for the static provision of abstracted storage, which restricts the volume’s properties of size and access modes.

The StorageClass resource allows cluster administrators to offer volumes with different properties such as performance, access modes or size without exposing the implementation of abstracted storage to users. Using the storageClass resource, cluster administrators can describe the different flavors of storage on offer, mapping to different quality-of-service levels or security policies. The storageClass resource is defined using three main specifications:

- Provisioner- determines the type of volume plugin used to avail Persistent Volumes

- Reclaim Policy- tells the cluster how to handle a volume after a PVC releases the PV it is attached to.

- Parameters- properties of the volumes accepted by a storage class

Since the StorageClass lets PVCs access volume resources with minimal human intervention, it enables dynamic provisioning of storage resources.

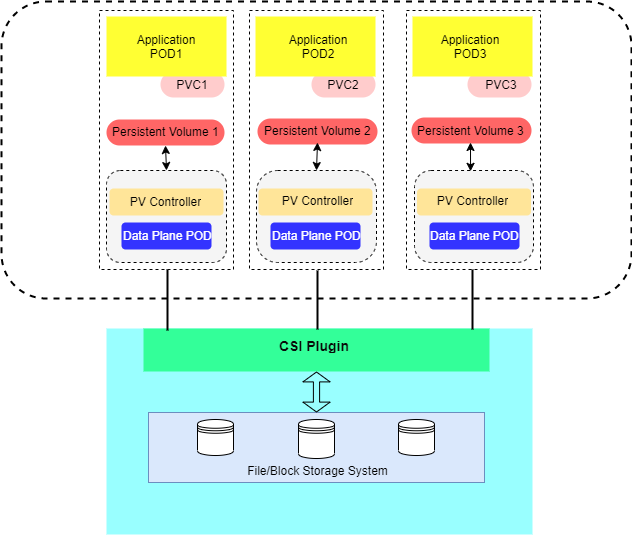

Storage Architecture

Kubernetes applications run in containers hosted in PODs. Each POD uses a PVC to request a specific portion of PV. PVs are managed by a control plane, which calls volume plugin API actions using logic used to implement storage. The volume plugins, therefore, allow for access to physical storage. The architecture of Kubernetes storage in clusters:

A typical Kubernetes Storage Cluster

Storage Plugins

Kubernetes supports different storage plugins- managed solutions built with a focus on enabling persistent storage for Kubernetes applications. With third-party plugins, Kubernetes developers and administrators can focus on an enhanced user experience, while vendors configure storage systems. Kubernetes supports all three types of file systems:

- File Storage Systems - Stores data as a single piece of information organized in a folder accessible through paths. It uses a logical hierarchy to store large arrays of files, making access and navigation simpler.

- Block Storage - Data is segregated into distinct clusters (blocks). Each block has a unique identifier so that storage drivers can store information in convenient chunks without needing file structures. Block storage offers granular control which is desirable for such use-cases as Mail Servers, Virtual Machines and Databases.

- Object Storage - Isolates data in encapsulated containers known as objects. Each object gets a unique ID which is stored in a flat memory model. This makes data easier to find in a large pool and also allows for the storage of data in different deployment environments. Object storage is most appropriate for highly flexible and scalable workloads such as Big Data, web applications and backup archives.

Container Storage Interface

Container Storage Interface (CSI) standardizes the management of persistent storage for containerized applications so that storage plugins can be developed for any container runtime or orchestrator. The CSI is a standard interface that allows storage systems to be exposed to containerized workloads. With CSI, storage product vendors can develop plugins and drivers that work across different orchestration platforms.

CSI also defines Remote Procedure Calls (RPCs) that enable various storage-related tasks. These tasks include:

- Dynamic volume provisioning

- Attaching and detaching nodes to volumes

- Mounting and unmounting volumes from nodes

- Consumption of volumes

- Identification of local storage providers Creating and deleting volume snapshots

With the CSI providing a standard approach to the implementation and consumption of storage by containerized applications, a number of solutions have been developed to enable persistent storage. Some top cloud-native storage solutions include:

Developed by Mayadata, OpenEBS is an open-source storage solution that entirely runs within the user space as Container Attached Storage. It allows for automated provisioning and high availability through replicated and dynamic Persistent Volumes. Some key features of OpenEBS include:

- Open-source and vendor-agnostic

- Utilizes hyperconverged infrastructure

- Supports both local and replicated volumes

- Built using microservices-based CAS architecture

Portworx

Portworx is an end-to-end storage solution for Kubernetes that offers granular storage, data security, migration across multiple cloud platforms and disaster recovery options. Portworx is built for containers from the ground up, making it a popular choice for cloud-native storage. Some features include:

- Elastic scaling with container-optimized volumes

- Uses multi-writer shared volumes

- Storage-aware and application-aware I/O tuning

- Enables data encryption at volume, storage class and cluster levels

Ceph

Ceph is founded on the Reliable Autonomic Distributed Object Store (RADOS) to provide pooled storage in a single unified cluster that is highly available, flexible and easy to manage. Ceph relies on the RADOS block storage system to decouple the namespace from underlying hardware to enable the creation of extensive, flexible storage clusters. Ceph features include:

- Uses the CRUSH algorithm for High Availability

- Supports file, block and object-storage systems

- Open-source

StorageOS

StorageOS is a complete cloud-native software-defined storage platform for running stateful Kubernetes applications. The solution is orchestrated as a cluster of containers that monitor and maintain the state of volumes and cluster nodes. Some features of StorageOS include:

- Reduces latency by enforcing data locality

- Uses in-memory caching to speed up volume access

- Enforces high availability using synchronous replication

- Utilizes standard AES encryption

LongHorn

Longhorn is a distributed, lightweight and reliable block storage solution for Kubernetes. It is built using container constructs and orchestrated using Kubernetes, making it a popular cloud native storage solution. Features of LongHorn include:

- Distributed, enterprise-grade storage with no single point of failure

- Change block detection for backup

- Automated, non-disruptive upgrades

- Incremental snapshots of storage for recovery

Directory Mounts

Kubernetes uses a hostPath volume to mount a directory from the host’s file system directly to a POD. This is mostly applicable for development and testing on single-node clusters. HostPath volumes are referenced via static provisioning. While not useful in a production environment, this method of persistence is beneficial for several use-cases, including:

- Running the container advisor (cAdvisor) inside a container

- When running a container that requires access to Docker internals

- Allowing a POD to specify whether a given volume path should exist before the POD starts running

- Containers are immutable, necessitating orchestration mechanisms that allow for the persistence of data they generate and process. Kubernetes uses volume primitives to enable the storage of cluster data. These include Volumes, Persistent Volumes and Persistent Volume Claims. Kubernetes also supports third-party storage vendors through the CSI. For single-node clusters, the hostPath volume attaches PODs directly to a node’s file system, facilitating development and testing.

This article has already been published on https://blog.mayadata.io/the-importance-of-persistent-storage-in-kubernetes-mayadata-openebs and has been authorized by MayaData for a republish.

Opinions expressed by DZone contributors are their own.

Comments