Easy and Accurate Performance Testing With JUnit/Maven

Learn how you can utilize JUnit and Maven for performance and load testing in a variety of scenarios, like reusing test code and testing in parallel.

1. Introduction

This article will demonstrate how easily we can do performance testing with the help of JUnit, Zerocode, and an IDE (IntelliJ, Eclipse, etc.).

We will cover the following aspects.

- The problem and the solution

- (JUnit + Zerocode-TDD + Maven) Open-Source Frameworks

- Usage with examples

- Reports and failure test logs

You can find the demo performance test project on GitHub.

2. The Problem and The Solution

We can do performance testing as easily as we write unit tests with JUnit.

Too often, the performance-testing ticket is pushed toward the end of the development sprints, i.e., close to the production release date. The developers or performance testers (sometimes a specialized team) are then asked to pick a standalone tool from the market that produces fancy, impressive reports for the sake of it, without discovering the actual capacity boundaries of the target systems.

Because this testing happens in isolation from the regular builds, the reactive approach misses critical aspects of API service or DB performance and leaves little time, or room, to fix the issues it does find.

The solution is to make this kind of testing part of the CI build pipeline. Running it on every commit or merge may be too resource-intensive, but a nightly build should be good enough.

3. Mocking or Stubbing Boundary APIs

It is very important to mock or stub the boundary APIs that are external to the application under test. Otherwise, we unnecessarily generate load on other applications and produce misleading analytics reports.

The clip below shows how easily you can virtualize external services via WireMock without writing Java code, i.e., simply by providing JSON payloads for the mock APIs.
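WireMock is the tool used in the clip. As a dependency-free illustration of the same idea (this is not WireMock's API), here is a minimal sketch that stubs a boundary API with the JDK's built-in `com.sun.net.httpserver.HttpServer`. The endpoint path `/api/v1/customers/C001` and its JSON payload are hypothetical; in a real setup you would configure the application under test to call the stub's URL instead of the real external service.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

public class BoundaryStub {
    // Starts a stub server on the loopback interface; port 0 lets the OS pick a free port.
    // Every request under `path` receives the same canned JSON body with status 200.
    public static HttpServer start(int port, String path, String json) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(InetAddress.getLoopbackAddress(), port), 0);
        byte[] body = json.getBytes(StandardCharsets.UTF_8);
        server.createContext(path, exchange -> {
            exchange.getResponseHeaders().add("Content-Type", "application/json");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical downstream endpoint and payload; point the application
        // under test at http://127.0.0.1:8089 instead of the real service.
        HttpServer stub = start(8089, "/api/v1/customers/C001",
                "{\"id\":\"C001\",\"status\":\"ACTIVE\"}");
        System.out.println("Stub listening on port " + stub.getAddress().getPort());
    }
}
```

Passing `0` as the port lets the OS choose a free one, which can help avoid clashes on shared CI agents; `getAddress().getPort()` then reports the port actually bound.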

4. How Can We Integrate Performance Testing With the CI Build?

In the traditional approach, we spend too much time learning a tool and making it work, since some tools are not IDE-friendly or even Maven/JUnit-based.

At times, it is not easy or straightforward to point a performance testing tool at an arbitrary part of the tech stack, for example, a REST endpoint, a DB server, a SOAP endpoint, a Kafka topic, or an SSL host reached via a corporate proxy.

This makes it difficult to isolate an issue: we cannot tell whether our application APIs are underperforming or only the downstream systems are. Let's explain what that means.

For instance, suppose we test our GET API's performance by pointing a standalone tool at the URL "/api/v1/loans/LD1001" and find that the response delay is very high. We (the development team) then tend to blame the DB, reasoning that the Oracle DB server is pretty slow when handling parallel loads.

5. How Can You Prove Your APIs Aren’t Slowing Down Performance?

At this point, we need a mechanism or tool that isolates the issue from our application by pointing the performance test directly at the DB, Kafka topics, etc.

This is possible because, as developers, we know which SQL queries are fired at the DB to fetch the result, or which topic/partition names we can read data from directly, bypassing the application's API processing layer.

Measuring those paths directly lets us prove our point meaningfully and back it with evidence.
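One way to produce that evidence is to time the same lookup through both paths and compare the averages. The sketch below is a minimal, tool-agnostic timing harness; `viaApi` and `directFromDb` are hypothetical placeholders (simulated here with `Thread.sleep`) that you would replace with the real GET on "/api/v1/loans/LD1001" and the SQL query that endpoint fires.

```java
import java.util.concurrent.Callable;

public class PathTimer {
    // Runs the task `iterations` times sequentially and returns the mean latency in milliseconds.
    public static double meanMillis(Callable<?> task, int iterations) throws Exception {
        long totalNanos = 0;
        for (int i = 0; i < iterations; i++) {
            long start = System.nanoTime();
            task.call();
            totalNanos += System.nanoTime() - start;
        }
        return totalNanos / (iterations * 1_000_000.0);
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical stand-ins: replace with the real HTTP call and the real SQL query.
        Callable<Object> viaApi = () -> { Thread.sleep(50); return null; };
        Callable<Object> directFromDb = () -> { Thread.sleep(10); return null; };

        double api = meanMillis(viaApi, 20);
        double db = meanMillis(directFromDb, 20);
        System.out.printf("API: %.1f ms, DB: %.1f ms, difference: %.1f ms%n", api, db, api - db);
    }
}
```

If the direct query is about as slow as the API call, the DB is the likely bottleneck; if it is much faster, the API processing layer deserves a closer look first. A sequential average like this is only a first indication, not a load test.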

6. How Do You Prove That Your Database Isn't Slow?

To truly understand our database’s performance, we need a mechanism to simulate various loads and stress-test it. Ideally, we’d overwhelm the database by firing a specific number of queries, simulating both sequential and parallel load patterns.

Since, as developers (or SDETs), we typically know which SQL queries are executed for different purposes, we can easily bundle them into a "barrel" and fire them off, simulating a minimum load. But to discover the actual capacity boundaries of the database instance (whether it is hosted on RDS, a VPS, or EC2), you should multiply the load (2x, 3x, 5x, or 10x) and observe how it handles the pressure.
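The "barrel" idea can be sketched with plain `java.util.concurrent`: each `Callable` stands for one of the SQL queries you already know (for example, a hypothetical JDBC call), and `multiplier` is the 2x/3x/5x/10x load factor. This is an illustration under those assumptions, not the Zerocode DB executor.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.LongAdder;

public class QueryBarrel {
    /** Outcome of one barrel run. */
    public static final class Result {
        public final int passed;
        public final int failed;
        public final double meanMillis;

        Result(int passed, int failed, double meanMillis) {
            this.passed = passed;
            this.failed = failed;
            this.meanMillis = meanMillis;
        }
    }

    // Fires every query in the barrel `multiplier` times, spread over `threads` parallel workers.
    public static Result fire(List<Callable<?>> barrel, int multiplier, int threads) throws InterruptedException {
        AtomicInteger passed = new AtomicInteger();
        AtomicInteger failed = new AtomicInteger();
        LongAdder totalNanos = new LongAdder();
        List<Callable<Void>> tasks = new ArrayList<>();
        for (int m = 0; m < multiplier; m++) {
            for (Callable<?> query : barrel) {
                tasks.add(() -> {
                    long start = System.nanoTime();
                    try {
                        query.call();                // e.g. a hypothetical JDBC executeQuery
                        passed.incrementAndGet();
                    } catch (Exception e) {
                        failed.incrementAndGet();    // timeouts, connection errors, etc.
                    } finally {
                        totalNanos.add(System.nanoTime() - start);
                    }
                    return null;
                });
            }
        }
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            pool.invokeAll(tasks);                   // blocks until every task has completed
        } finally {
            pool.shutdown();
        }
        int total = passed.get() + failed.get();
        double mean = total == 0 ? 0 : totalNanos.sum() / (total * 1_000_000.0);
        return new Result(passed.get(), failed.get(), mean);
    }
}
```

Running it with multipliers of 1, 2, 5, and 10 while keeping `threads` fixed, and watching where `failed` or `meanMillis` starts to climb, gives a rough picture of the capacity boundary.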

Testing should cover two key scenarios:

- Dedicated DB connections for each request, simulating a real-world environment where each user has their own session.

- Cached DB connections, reusing existing ones to optimize performance for repeated queries.

Each approach addresses different use cases. For instance, if a single website or API talks to a database, cached connections can save resources. On the other hand, if multiple subdomains, applications, or APIs access the same database, dedicated connections may be more representative of real-world usage.

The critical factor is flexibility. Our testing framework must let us simulate various business requirements easily (or declaratively), so that we can confirm the database handles real-world demands without slowing down, failing to respond, or throwing unexpected errors.

For this purpose, you can plug a DB executor straight into JUnit and run it via Maven. See the Sample-DB-SQL-Executor example. It uses Postgres inside a Docker container for demo purposes only, but you can point it at any of your environments and run it to simulate load on the target DB.
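The difference between the two connection strategies listed earlier in this section can be sketched without any database driver. `Conn` is a hypothetical stand-in for something like `java.sql.Connection`, and `opener` is whatever creates a real connection in your environment (for example, a `DriverManager.getConnection(...)` call). Dedicated mode opens one connection per request; cached mode opens a fixed set once and shares it through a `BlockingQueue`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Supplier;

public class ConnectionStrategies {
    // Hypothetical stand-in for a real connection such as java.sql.Connection.
    public interface Conn extends AutoCloseable {
        void execute(String sql) throws Exception;

        @Override
        void close();
    }

    // Dedicated: every request opens its own connection and closes it afterwards.
    public static void runDedicated(Supplier<Conn> opener, String sql, int requests, int threads)
            throws InterruptedException {
        List<Callable<Void>> tasks = new ArrayList<>();
        for (int i = 0; i < requests; i++) {
            tasks.add(() -> {
                try (Conn c = opener.get()) {
                    c.execute(sql);
                }
                return null;
            });
        }
        runAll(tasks, threads);
    }

    // Cached: a fixed set of connections is opened once and shared by all requests.
    public static void runCached(Supplier<Conn> opener, String sql, int requests, int poolSize)
            throws InterruptedException {
        BlockingQueue<Conn> cache = new ArrayBlockingQueue<>(poolSize);
        for (int i = 0; i < poolSize; i++) {
            cache.add(opener.get());
        }
        List<Callable<Void>> tasks = new ArrayList<>();
        for (int i = 0; i < requests; i++) {
            tasks.add(() -> {
                Conn c = cache.take();          // wait for a free cached connection
                try {
                    c.execute(sql);
                } finally {
                    cache.put(c);               // hand it back for the next request
                }
                return null;
            });
        }
        runAll(tasks, poolSize);
        for (Conn c : cache) {
            c.close();
        }
    }

    // Runs all tasks on a fixed pool and waits; failures stay inside the Futures invokeAll returns.
    private static void runAll(List<Callable<Void>> tasks, int threads) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            pool.invokeAll(tasks);
        } finally {
            pool.shutdown();
        }
    }
}
```

With 1,000 requests, dedicated mode opens 1,000 connections while cached mode with a pool of 10 opens only 10, which is why the two modes stress a database so differently.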

7. Reusing the Existing JUnit Tests for Load/Stress Generation

Ideally, we need a custom JUnit load runner that reuses our existing JUnit tests (e2e integration tests, feature tests, or component tests, as most of them use JUnit behind the scenes) to generate load or stress on the target application.

Luckily, such a ready-made runner is available, and we will look at the library behind it later in this article.

The load runner would look like the following:

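```java
import org.jsmart.zerocode.core.domain.LoadWith;
import org.jsmart.zerocode.core.domain.TestMapping;
import org.jsmart.zerocode.core.runner.parallel.ZeroCodeLoadRunner;
import org.junit.runner.RunWith;

// Reuses AnyTest#testXyz to generate the load described in load_config.properties
@LoadWith("load_config.properties")
@TestMapping(testClass = AnyTest.class, testMethod = "testXyz")
@RunWith(ZeroCodeLoadRunner.class)
public class LoadTest {
}
```

The file `load_config.properties` should hold the following properties:

```properties
number.of.threads=80
ramp.up.period.in.seconds=80
loop.count=2
```

Here, 80 users (threads) are ramped up over 80 seconds, so a new user starts firing roughly every second, and the whole run is repeated twice (`loop.count=2`), for a total of 160 requests fired approximately one second apart.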
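The arithmetic above can be checked with plain `java.util.Properties`. This sketch only derives the numbers from the three keys; it assumes the semantics described in this section and is not taken from Zerocode's runner code.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

public class LoadConfigMath {
    // Each thread runs the mapped test once per loop.
    public static int totalRequests(Properties p) {
        return intProp(p, "number.of.threads") * intProp(p, "loop.count");
    }

    // Threads are started evenly across the ramp-up period.
    public static double gapSeconds(Properties p) {
        return (double) intProp(p, "ramp.up.period.in.seconds") / intProp(p, "number.of.threads");
    }

    private static int intProp(Properties p, String key) {
        return Integer.parseInt(p.getProperty(key).trim());
    }

    public static void main(String[] args) throws IOException {
        Properties p = new Properties();
        p.load(new StringReader("number.of.threads=80\nramp.up.period.in.seconds=80\nloop.count=2\n"));
        // prints "total requests: 160, gap: 1.0 s"
        System.out.println("total requests: " + totalRequests(p) + ", gap: " + gapSeconds(p) + " s");
    }
}
```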

`@TestMapping` tells the load runner which existing test to fire:

```java
@TestMapping(testClass = AnyTest.class, testMethod = "testXyz")
```

Here, the `testXyz` method of `AnyTest` is an existing test that already contains the required assertions.

Once the load run is complete, we should be able to derive statistics like the following:

| Metric | Value |
|---|---|
| Total number of tests fired | 160 |
| Total number of tests passed | 140 |
| Total number of tests failed | 20 |
| Average delay between requests (in sec) | 1 |
| Average response time delay (in sec) | 5 |

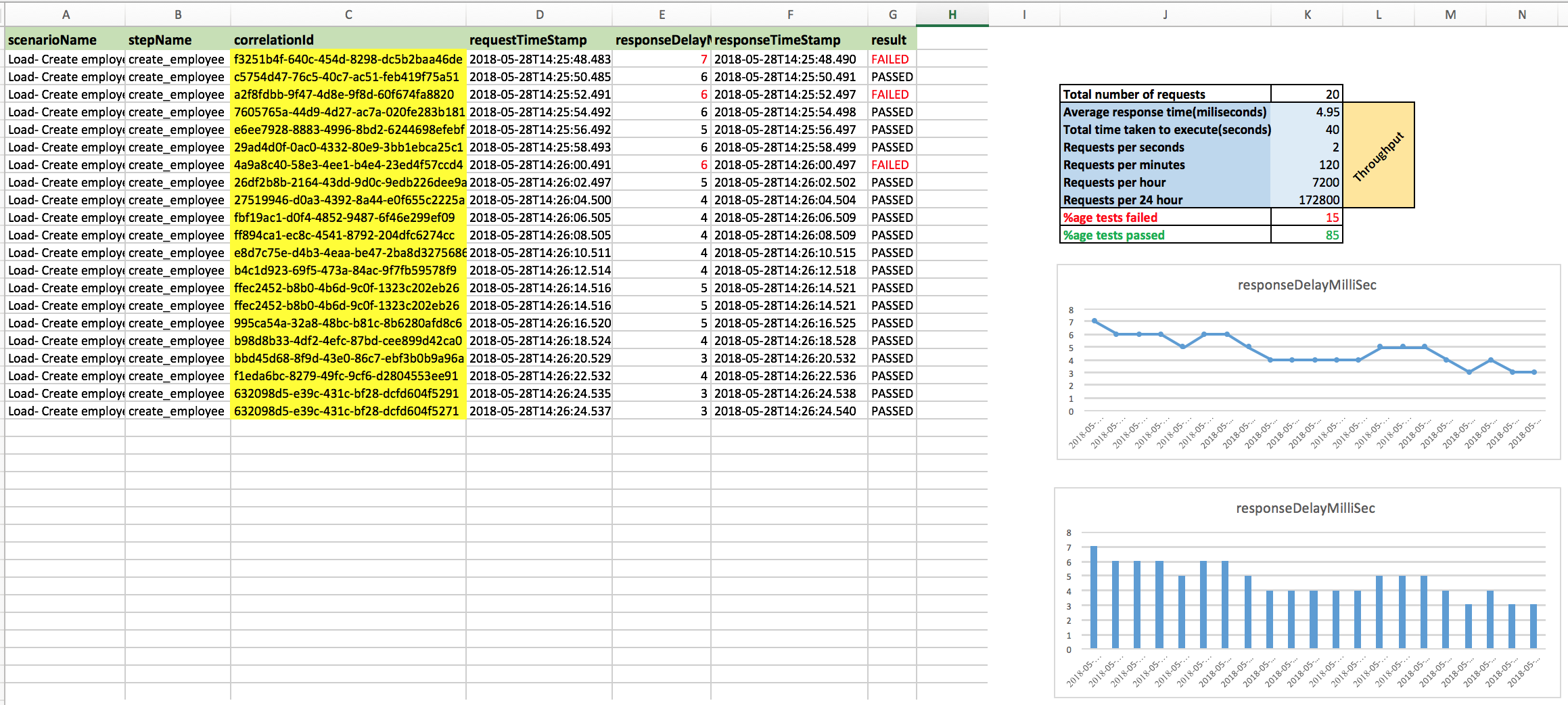

More statistics can be drawn on demand, because the load runner produces a CSV/spreadsheet with data like the following (or more):

| TestClassName | TestMethod | UniqueTestId | RequestTimeStamp | ResponseDelay (ms) | ResponseTimeStamp | Result |
|---|---|---|---|---|---|---|
| YourExistingTest | aTest | test-id-001 | 2018-05-09T21:31:38.695 | 165 | 2018-05-09T21:31:38.860 | PASSED |
| YourExistingTest | aTest | test-id-002 | 2018-05-09T21:31:39.695 | 169 | 2018-05-09T21:31:39.864 | FAILED |
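Deriving extra statistics from such a CSV needs only a few lines of Java. This sketch assumes the column order shown in the table above, a header-free list of rows, and no quoted or comma-containing fields; check the actual header of the CSV in your `target` folder, as it may differ between Zerocode versions.

```java
import java.util.List;
import java.util.Locale;

public class CsvStats {
    // Column positions assumed from the sample table:
    // TestClassName, TestMethod, UniqueTestId, RequestTimeStamp, ResponseDelay, ResponseTimeStamp, Result
    private static final int DELAY_COLUMN = 4;
    private static final int RESULT_COLUMN = 6;

    // Summarizes data rows (no header line, no quoted fields) into totals and mean delay.
    public static String summarize(List<String> rows) {
        int passed = 0;
        int failed = 0;
        long delaySumMs = 0;
        for (String row : rows) {
            String[] cols = row.split(",");
            delaySumMs += Long.parseLong(cols[DELAY_COLUMN].trim());
            if ("PASSED".equals(cols[RESULT_COLUMN].trim())) {
                passed++;
            } else {
                failed++;
            }
        }
        int fired = passed + failed;
        double meanDelayMs = fired == 0 ? 0 : (double) delaySumMs / fired;
        return String.format(Locale.ROOT, "fired=%d passed=%d failed=%d meanDelayMs=%.1f",
                fired, passed, failed, meanDelayMs);
    }
}
```

For the two sample rows in the table, `summarize` reports `fired=2 passed=1 failed=1 meanDelayMs=167.0`.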

Of course, these are the basics, and you should be able to do all of this with most load-testing tools.

8. Firing Different Kinds of Requests for Each User Concurrently

- What if we want to gradually increase or decrease the load on the application under test?

- What if one of our users wants to fire POST, then GET?

- What if another user is dynamically changing the payload every time they fire a request?

- What if another user keeps firing POST, then GET, then PUT, then GET to verify that all CRUD operations work? And so on, with every scenario asserting its own expected outcome.

Now we definitely need a mechanism to reuse our existing tests, as we might already have test cases doing exactly this in our regular e2e testing (sequentially and independently, but not in parallel).
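Conceptually, such a mechanism releases several different user journeys at the same moment and records whether each journey's assertions held. The JDK-only sketch below illustrates that idea with a `CountDownLatch` start gate; the scenario names are hypothetical, each `Runnable` stands in for an existing test method, and this is not how Zerocode implements its runner.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class MixedScenarios {
    // Starts every scenario at the same moment and records PASSED/FAILED per scenario name.
    public static Map<String, String> runTogether(Map<String, Runnable> scenarios) throws InterruptedException {
        Map<String, String> outcome = new ConcurrentHashMap<>();
        CountDownLatch startGate = new CountDownLatch(1);
        ExecutorService pool = Executors.newFixedThreadPool(scenarios.size());
        scenarios.forEach((name, scenario) -> pool.execute(() -> {
            try {
                startGate.await();           // wait for the common start signal
                scenario.run();              // a failing scenario throws, e.g. AssertionError
                outcome.put(name, "PASSED");
            } catch (Throwable t) {
                outcome.put(name, "FAILED");
            }
        }));
        startGate.countDown();               // release every user at once
        pool.shutdown();
        pool.awaitTermination(1, TimeUnit.MINUTES);
        return outcome;
    }
}
```

A scenario reports failure simply by throwing, just as a JUnit assertion throws `AssertionError`.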

You might need a JUnit runner like the one below, which creates a production-like load, with parallel users firing different types of requests and asserting the outcome of each call. Go to the demo repo to see this in action.

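```java
import org.jsmart.zerocode.core.domain.LoadWith;
import org.jsmart.zerocode.core.domain.TestMapping;
import org.jsmart.zerocode.core.domain.TestMappings;
import org.jsmart.zerocode.core.runner.parallel.ZeroCodeMultiLoadRunner;
import org.junit.runner.RunWith;

// Each mapping is an existing test (a user journey) that the runner fires under load
@LoadWith("load_generation.properties")
@TestMappings({
        @TestMapping(testClass = GetServiceTest.class, testMethod = "testGet"),
        @TestMapping(testClass = PostServiceTest.class, testMethod = "testPostGet"),
        @TestMapping(testClass = PutServiceTest.class, testMethod = "testPostGetPutGet"),
        @TestMapping(testClass = PostServiceTest.class, testMethod = "testStress")
})
@RunWith(ZeroCodeMultiLoadRunner.class)
public class LoadMultipleGroupAnnotationTest {
}
```

9. JUnit + Zerocode TDD Open-Source Testing Library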

```xml
<dependency>
    <groupId>org.jsmart</groupId>
    <artifactId>zerocode-tdd</artifactId> <!-- <artifactId>zerocode-rest-bdd</artifactId> --> <!-- OLD -->
    <version>1.3.x</version> <!-- Pick the latest from maven repo -->
    <scope>test</scope>
</dependency>
<dependency>
    <groupId>junit</groupId>
    <artifactId>junit</artifactId>
    <version>4.12</version> <!-- or 4.13 -->
    <scope>test</scope>
</dependency>
```

Basically, you combine these two libraries, JUnit and Zerocode, to generate load/stress:

- JUnit (very popular, open-source, and commonly used in the Java community)

- zerocode-tdd (similar to JMeter in headless mode; enables easy assertions for BDD/TDD automation)

Recently, our team used the open-source Maven library Zerocode (see the README on GitHub) with JUnit, which made performance testing effortless.

We quickly set up multiple load test scenarios and had everything ready for execution in no time. Simultaneously, we added these tests to our load regression pack, ensuring they were always available for the CI build pipeline.

One significant advantage was the ability to reuse subsets of these tests in JMeter to generate load. This was particularly beneficial for our business users (non-developers), who preferred a UI-based tool: they could easily select specific business scenarios and execute the tests without deep technical knowledge.

10. Usage With Examples

Browse the sample performance-testing repo, the performance-test project.

We can find the working examples here.

- A single scenario load runner to generate load/stress

- A multi-user scenario load runner to generate load/stress (production-like scenario)

11. Reports and Reading Failed Test Logs

Once the test-run statistics are generated in a CSV file, you can draw charts or graphs from the datasets the framework produces.

This framework generates two kinds of reports (see sample load test reports here):

- CSV report (in the `target` folder)

- Interactive fuzzy-search and filter HTML report (in the `target` folder; make sure you disable this when generating high load volumes, to save resources locally or on a Jenkins slave)

You can trace a failed test by many parameters, but most easily by its unique step correlation ID.

Most importantly, there will be times when tests fail, and we need to know why that particular request instance failed. In the CSV report (as well as the HTML report), you will find a `correlationId` column holding a unique ID for every test step in every run. Just pick this ID and search for it in the `target/zerocode_rest_bdd_logs.log` file; you will get the full details for the matching TEST-STEP-CORRELATION-ID, as below:

```text
2018-06-23 21:55:39,865 [main] INFO org.jsmart.zerocode.core.runner.ZeroCodeMultiStepsScenarioRunnerImpl -
--------- TEST-STEP-CORRELATION-ID: b3ce510c-cafb-4fc5-81dd-17901c7e2393 ---------
*requestTimeStamp:2018-06-23T21:55:39.071
step:get_user_details
url:https://api.github.com:443/users/octocat
method:GET
request:
{ }
--------- TEST-STEP-CORRELATION-ID: b3ce510c-cafb-4fc5-81dd-17901c7e2393 ---------
Response:
{
  "status" : 200,
  "headers" : {
    "Server" : [ [ "GitHub.com" ] ],
    "Status" : [ [ "200 OK" ] ]
  },
  "body" : {
    "login" : "octocat",
    "id" : 583231,
    "updated_at" : "2018-05-23T04:11:18Z"
  }
}
*responseTimeStamp:2018-06-23T21:55:39.749
*Response delay:678.0 milli-secs
---------> Assertion: <----------
{
  "status" : 200,
  "body" : {
    "login" : "octocat-REALLY",
    "id" : 583231,
    "type" : "User"
  }
}
-done-

java.lang.RuntimeException: Assertion failed for :-

[GIVEN-the GitHub REST end point, WHEN-I invoke GET, THEN-I will receive the 200 status with body]
|
|
+---Step --> [get_user_details]

Failures:
---------
Assertion path '$.body.login' with actual value 'octocat' did not match the expected value 'octocat-REALLY'
```
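When many steps fail, searching the log by hand gets tedious. The sketch below pulls every log line belonging to one correlation ID, assuming the `TEST-STEP-CORRELATION-ID:` marker format shown in the sample log: it starts collecting at a marker carrying the wanted ID and stops at a marker carrying any other ID. Lines logged just before the next step's marker (such as its timestamp header) may still be included.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

public class CorrelationLookup {
    private static final String MARKER = "TEST-STEP-CORRELATION-ID:";

    // Collects lines from each marker carrying `correlationId` up to the next marker with a different ID.
    public static List<String> stepBlock(List<String> lines, String correlationId) {
        List<String> block = new ArrayList<>();
        boolean inside = false;
        for (String line : lines) {
            if (line.contains(MARKER)) {
                inside = line.contains(correlationId);
            }
            if (inside) {
                block.add(line);
            }
        }
        return block;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java CorrelationLookup b3ce510c-cafb-4fc5-81dd-17901c7e2393
        List<String> log = Files.readAllLines(Paths.get("target", "zerocode_rest_bdd_logs.log"));
        stepBlock(log, args[0]).forEach(System.out::println);
    }
}
```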

Throughput results can be drawn as below:

Of course, you can draw line graphs, pie charts, and 3D charts using Excel or any other handy tool.

Good luck and happy testing!

DON'T FORGET to support our OSS by leaving a star on our GitHub repo (top right corner)!

The article was originally posted on August 28, 2018.
