Introduction and History of Unit Testing [Part 1]

We all know we should be incorporating unit testing into our development processes, but do you know the history of this concept? We look into the history of test automation to see where it all got started.

Developers are Artists

We developers often think that we write better code than many others do. As software becomes bigger and more complex, the question arises as to whether we are growing and scaling accordingly. It is almost impossible for any developer to know everything there is to know. There is simply too much! And don’t get me started on successful deployments that have to be changed just because the client decides so at the last minute.

A developer faces many challenges in order to keep up. That’s why good practices are essential, and unit testing ought to be an integral part of everyone’s daily work routine. Unit testing may not be everyone’s favorite task, but testing is an essential part of many professions, where it is used to ensure quality, save costs, and check whether the work functions as intended:

Writers employ editors and proofreaders to check their texts. Fashion designers first make up their patterns in a fabric called toile to see whether each part (or shall I say “unit”) of the design combines well with the others, so that the design can be tested and perfected. On a film shoot, a script supervisor ensures each department’s (department = unit) continuity on set (check out these fun Continuity Errors in Movies though…).

The Birth of QA

As you can see, most professions acknowledge testing as an integral part of their work routine, and in this series, you’ll be able to learn the context, history, and background to unit testing.

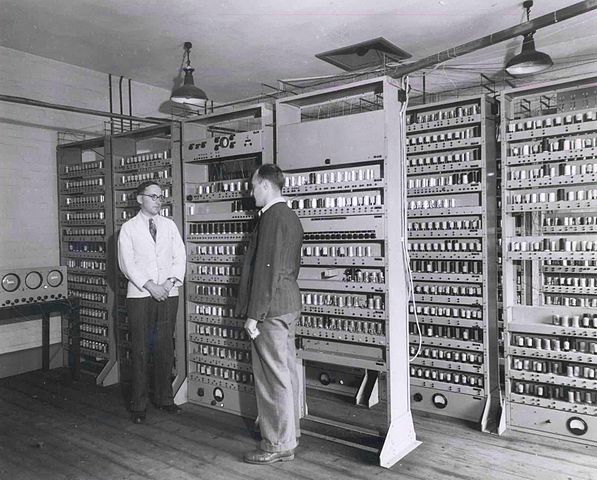

Without further ado, let’s go back in time to the history of programming! People had already begun developing software in the 30s, 40s, and 50s. However, there were no QA departments or dedicated testers. There were very few developers, and they had to test their own work.

Back then, computers were very large (the size of houses) and painstakingly slow, which made computer time extremely expensive. The few developers available therefore put considerable effort into every bug fix.

It was crucial for the developers to produce working software, and they came through. This continued until the 60s and 70s, when businesses started to expand. By around the 80s, it was apparent to everyone that software was going to be of prime importance in the world, which made it very lucrative for the people working with it. At the same time, computers became smaller and PCs were becoming widespread.

The Market for Developers

As a result, two things were happening concurrently: the market for developers was growing due to business demands, while computers were shrinking in size. Together, these led to a reality in which more and more inexperienced people started writing software, and this acceleration resulted in many bugs.

In fact, this is where our story actually begins. Because software was of paramount importance and we were now contending with a multitude of bugs, the business people involved were intent on solving the bug problem. It became much more critical in the 90s and towards the 2000s: with the arrival of the internet, the number of people writing software grew rapidly, unfortunately resulting in substandard code. Hence the exacerbation of the problem.

The idea in the 90s was to separate the responsibility, because developers were in even higher demand than before. The goal was to keep developers writing software while positioning gatekeepers, known as testers, in front of each release. These testers, or QA (quality assurance), would ensure that what was released was of higher quality. This is where the term quality assurance comes from: assuring the quality of the product.

In hindsight, this was an incorrect assumption, and it became even more exaggerated once we started showing off testing capabilities. The idea was that if testing were separated from development, anyone would be able to test: since anyone could operate the product and confirm that it worked, testing would become more cost-efficient. In reality, it doesn’t work at that scale. More code is being written than there is manpower to test it, which results in demand for more professional testers.

With this thought in mind, I’ll conclude this post for the time being. I hope this look at the history of test automation has given you some useful background. The second part will follow shortly.

Published at DZone with permission of Leah Grantz, DZone MVB. See the original article here.
