Java High Availability With WildFly on Kubernetes


Legacy Java Applications

With the advance of application containerization, Kubernetes has become a market standard. We must remember, however, that thousands of legacy applications still depend on features provided by application servers. If you need session replication, for example, your WildFly instances must be clustered. To solve this smoothly, you can use the “Kubernetes discovery protocol for JGroups”, also known as KUBE_PING. KUBE_PING is a discovery protocol for JGroups cluster nodes managed by Kubernetes. Multicast is often unavailable on Kubernetes networks, so instead of relying on it, KUBE_PING asks the Kubernetes API for the pods that should form the cluster (see the jgroups-kubernetes project).
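To make the discovery step concrete, here is a rough, hand-made equivalent of the kind of request KUBE_PING depends on. It lists the pods in a namespace that match a label selector and authenticates with the pod's own service account. This is a sketch, not KUBE_PING's code. The helper names `pods_url` and `list_peers` are hypothetical, and the namespace `labs` and the selector `app=wildfly` are the values used later in this walkthrough.

```shell
#!/usr/bin/env bash
# Hypothetical helpers approximating the pod listing KUBE_PING relies on.
# Run list_peers from inside a pod that uses the service account defined later.

# Standard mount point of the service account token and CA certificate.
SA_DIR=/var/run/secrets/kubernetes.io/serviceaccount

# Build the API URL that lists pods in a namespace filtered by a label
# selector; "=" is percent-encoded for the query string.
pods_url() {
  local namespace="$1" selector="$2"
  printf 'https://kubernetes.default.svc:443/api/v1/namespaces/%s/pods?labelSelector=%s\n' \
    "$namespace" "${selector//=/%3D}"
}

# Query the API server with the pod's credentials (assumes curl is in the image).
# The JSON response lists the pods, and their IPs, that would become members.
list_peers() {
  curl --silent --fail \
    --cacert "$SA_DIR/ca.crt" \
    --header "Authorization: Bearer $(cat "$SA_DIR/token")" \
    "$(pods_url "$1" "$2")"
}
```

From inside a WildFly pod, `list_peers labs app=wildfly` should return the same pods that end up in the cluster view.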

Walkthrough

I assume you already have a Kubernetes cluster, so let’s focus on the WildFly settings.

The first step is to create a directory that will hold all our files:


[root@workstation ~]# mkdir wildfly20-kubeping
[root@workstation ~]# mkdir -p wildfly20-kubeping/application
[root@workstation ~]# mkdir -p wildfly20-kubeping/configuration
[root@workstation ~]# mkdir -p wildfly20-kubeping/kubernetes

Then create a Dockerfile with the steps needed to customize the image:


[root@workstation ~]# vi wildfly20-kubeping/Dockerfile
FROM jboss/wildfly:20.0.0.Final
LABEL maintainer="Mauricio Magnani <msmagnanijr@gmail.com>"
# Management user for the admin console / CLI
RUN /opt/jboss/wildfly/bin/add-user.sh admin redhat --silent
# Apply the KUBE_PING configuration at build time (WORKDIR is /opt/jboss)
ADD configuration/config-server.cli /opt/jboss/
RUN /opt/jboss/wildfly/bin/jboss-cli.sh --file=config-server.cli
RUN rm -rf /opt/jboss/wildfly/standalone/configuration/standalone_xml_history/*
ADD application/cluster.war /opt/jboss/wildfly/standalone/deployments/
EXPOSE 8080 9990 7600 8888

In the configuration directory, create the file “config-server.cli”, which contains the steps to configure KUBE_PING:


[root@workstation ~]# vi wildfly20-kubeping/configuration/config-server.cli
### Start the server in admin-only mode, using/modifying standalone-full-ha.xml
embed-server --server-config=standalone-full-ha.xml --std-out=echo
### Apply all configuration to the server
batch
# Uncomment the next three lines to get DEBUG logs from KUBE_PING
#/subsystem=logging/logger=org.jgroups.protocols.kubernetes:add()
#/subsystem=logging/logger=org.jgroups.protocols.kubernetes:write-attribute(name=level, value=DEBUG)
#/subsystem=logging/logger=org.jgroups.protocols.kubernetes:add-handler(name=CONSOLE)
/subsystem=messaging-activemq/server=default/cluster-connection=my-cluster:write-attribute(name=reconnect-attempts,value=10)
/interface=kubernetes:add(nic=eth0)
/socket-binding-group=standard-sockets/socket-binding=jgroups-tcp:write-attribute(name=interface,value=kubernetes)
/socket-binding-group=standard-sockets/socket-binding=jgroups-tcp-fd:write-attribute(name=interface,value=kubernetes)
/subsystem=jgroups/channel=ee:write-attribute(name=stack,value=tcp)
/subsystem=jgroups/channel=ee:write-attribute(name=cluster,value=kubernetes)
# Replace multicast discovery (MPING) with KUBE_PING
/subsystem=jgroups/stack=tcp/protocol=MPING:remove()
/subsystem=jgroups/stack=tcp/protocol=kubernetes.KUBE_PING:add(add-index=0,properties={namespace=${env.KUBERNETES_NAMESPACE},labels=${env.KUBERNETES_LABELS},port_range=0,masterHost=kubernetes.default.svc,masterPort=443})
run-batch
### Stop the embedded server
stop-embedded-server

Now copy your application package into the “application” directory:


[root@workstation ~]# cp cluster.war wildfly20-kubeping/application

The next step is to create all the Kubernetes objects needed for the tests. I’m using the namespace “labs”, so create it first if it does not exist (kubectl create namespace labs):


[root@workstation ~]# vi wildfly20-kubeping/kubernetes/01-wildfly-sa-role.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: jgroups-kubeping-service-account
  namespace: labs
---
# ClusterRoles are cluster-scoped, so no namespace is set here
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: jgroups-kubeping-pod-reader
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: jgroups-kubeping-api-access
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: jgroups-kubeping-pod-reader
subjects:
- kind: ServiceAccount
  name: jgroups-kubeping-service-account
  namespace: labs
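After these objects exist (they are created with kubectl create later in this walkthrough), you can ask the API server whether the service account really has the two permissions KUBE_PING needs. The sketch below wraps `kubectl auth can-i` with impersonation, which requires a caller allowed to impersonate service accounts (cluster-admin is). The function name is hypothetical. The `KUBECTL` variable exists only so that the commands can be printed instead of executed (for example, `KUBECTL=echo`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify the RBAC rules from 01-wildfly-sa-role.yaml.
KUBECTL=${KUBECTL:-kubectl}   # set KUBECTL=echo for a dry run
NAMESPACE=labs
SA_USER="system:serviceaccount:${NAMESPACE}:jgroups-kubeping-service-account"

check_kubeping_rbac() {
  local verb
  for verb in get list; do
    # Against a real cluster, each call prints "yes" or "no".
    "$KUBECTL" auth can-i "$verb" pods --namespace "$NAMESPACE" --as "$SA_USER"
  done
}
```

Running `check_kubeping_rbac` should print `yes` twice. A `no` usually points at the binding's subject (name or namespace) rather than at WildFly.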

[root@workstation ~]# vi wildfly20-kubeping/kubernetes/02-wildfly-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wildfly
  namespace: labs
  labels:
    app: wildfly
    tier: devops
spec:
  selector:
    matchLabels:
      app: wildfly
      tier: devops
  replicas: 2
  template:
    metadata:
      labels:
        app: wildfly
        tier: devops
    spec:
      serviceAccountName: jgroups-kubeping-service-account
      containers:
      - name: kube-ping
        image: mmagnani/wildfly20-kubeping:latest
        command: ["/opt/jboss/wildfly/bin/standalone.sh"]
        # $(POD_IP) is expanded by Kubernetes from the env entry below
        args: ["--server-config", "standalone-full-ha.xml", "-b", "$(POD_IP)", "-bmanagement", "$(POD_IP)", "-bprivate", "$(POD_IP)"]
        resources:
          requests:
            memory: 256Mi
          limits:
            memory: 512Mi
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
        - containerPort: 9990
        - containerPort: 7600
        - containerPort: 8888
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.podIP
        # Read by ${env.KUBERNETES_NAMESPACE} / ${env.KUBERNETES_LABELS} in config-server.cli
        - name: KUBERNETES_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        - name: KUBERNETES_LABELS
          value: app=wildfly
        - name: JAVA_OPTS
          value: -Djdk.tls.client.protocols=TLSv1.2

[root@workstation ~]# vi wildfly20-kubeping/kubernetes/03-wildfly-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: wildfly
  namespace: labs
  labels:
    app: wildfly
    tier: devops
spec:
  type: ClusterIP
  ports:
  - targetPort: 8080
    port: 8080
  selector:
    app: wildfly
    tier: devops

[root@workstation ~]# vi wildfly20-kubeping/kubernetes/04-wildfly-ingress.yaml
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: wildfly-ingress
  namespace: labs
spec:
  rules:
  - host: wildfly.mmagnani.lab
    http:
      paths:
      - path: /
        backend:
          serviceName: wildfly
          servicePort: 8080
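The extensions/v1beta1 Ingress API works on the 1.18 cluster used here, but it was removed in Kubernetes 1.22. On clusters from 1.19 onward, the same rule can be written with networking.k8s.io/v1. In that version the backend moves under `service` and every path needs a `pathType`. The sketch below writes that variant to a file. The file name `04-wildfly-ingress-v1.yaml` is an assumption, and the file should replace the original rather than sit next to it, because both declare `wildfly-ingress`.

```shell
#!/usr/bin/env bash
# Write a networking.k8s.io/v1 version of 04-wildfly-ingress.yaml.
# Hypothetical file name; applying it requires Kubernetes 1.19 or later.
cat > 04-wildfly-ingress-v1.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: wildfly-ingress
  namespace: labs
spec:
  rules:
  - host: wildfly.mmagnani.lab
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: wildfly
            port:
              number: 8080
EOF
```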

Now build the image and push it to Docker Hub or any other registry:


[root@workstation ~]# cd wildfly20-kubeping
[root@workstation wildfly20-kubeping]# docker build -t mmagnani/wildfly20-kubeping:latest .
[root@workstation wildfly20-kubeping]# docker push mmagnani/wildfly20-kubeping:latest
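Before deploying, it can be worth confirming that config-server.cli really changed the configuration baked into the image. One way is to copy standalone-full-ha.xml out of the image and check two things: the configuration should reference kubernetes.KUBE_PING, and it should no longer contain MPING. The function `check_kubeping_config` is hypothetical. It assumes WildFly persists each JGroups protocol with a `type="..."` attribute in the XML, which is how the subsystem is normally written.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check a standalone-full-ha.xml produced by config-server.cli.
check_kubeping_config() {
  local xml="$1"
  # KUBE_PING must have been added to the stack...
  if ! grep -q 'type="kubernetes.KUBE_PING"' "$xml"; then
    echo "KUBE_PING missing" >&2
    return 1
  fi
  # ...and the multicast-based MPING must have been removed.
  if grep -q 'type="MPING"' "$xml"; then
    echo "MPING still present" >&2
    return 1
  fi
  echo "KUBE_PING configured"
}

# Usage (needs Docker and the image built above):
#   docker run --rm --entrypoint cat mmagnani/wildfly20-kubeping:latest \
#     /opt/jboss/wildfly/standalone/configuration/standalone-full-ha.xml > standalone-full-ha.xml
#   check_kubeping_config standalone-full-ha.xml
```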

In your Kubernetes cluster, create those objects:


[root@k8s-master kubernetes]# kubelet --version
Kubernetes v1.18.3
[root@k8s-master kubernetes]# pwd
/root/wildfly20-kubeping/kubernetes
[root@k8s-master kubernetes]# kubectl create -f . -n labs
[root@k8s-master kubernetes]# kubectl get pods -n labs
NAME                      READY   STATUS    RESTARTS   AGE
wildfly-cc8b9546f-df9sw   1/1     Running   0          48s
wildfly-cc8b9546f-scfdp   1/1     Running   0          48s
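With the pods running, you can read the JGroups configuration back from a live server. The management interface is bound to the pod IP (-bmanagement $(POD_IP)), so the CLI has to target that address rather than localhost. The credentials are the admin user created in the Dockerfile. The function name and the `KUBECTL` override are hypothetical conveniences.

```shell
#!/usr/bin/env bash
# Hypothetical helper: dump the "tcp" JGroups stack of a running WildFly pod.
KUBECTL=${KUBECTL:-kubectl}   # set KUBECTL=echo for a dry run

show_jgroups_stack() {
  local pod="$1"
  # Single quotes: $POD_IP is expanded inside the container, not locally.
  "$KUBECTL" exec --namespace labs "$pod" -- sh -c \
    '/opt/jboss/wildfly/bin/jboss-cli.sh --connect --controller="$POD_IP:9990" --user=admin --password=redhat --command="/subsystem=jgroups/stack=tcp:read-resource(recursive=true)"'
}
```

For example, `show_jgroups_stack wildfly-cc8b9546f-df9sw` should list kubernetes.KUBE_PING among the protocols of the tcp stack.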

Check the logs. You should see two members, since the deployment defines only two replicas:


[root@k8s-master kubernetes]# kubectl logs -f wildfly-cc8b9546f-df9sw -n labs
14:23:33,253 INFO  [org.infinispan.CLUSTER] (MSC service thread 1-2) ISPN000078: Starting JGroups channel kubernetes
14:23:33,253 INFO  [org.infinispan.CLUSTER] (MSC service thread 1-2) ISPN000094: Received new cluster view for channel kubernetes: [wildfly-cc8b9546f-scfdp|1] (2) [wildfly-cc8b9546f-scfdp, wildfly-cc8b9546f-df9sw]
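When you run more replicas, counting members by eye gets tedious. The ISPN000094 line carries the member count in parentheses right after the view ID ([coordinator|view-number]). A small filter can therefore report the size of the most recent view. The `cluster_size` function is a hypothetical helper and assumes the log format shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the member count of the latest cluster view
# found in WildFly log lines read from stdin (ISPN000094 format as above).
cluster_size() {
  grep 'ISPN000094' \
    | tail -n 1 \
    | sed -n 's/.*|[0-9][0-9]*] (\([0-9][0-9]*\)).*/\1/p'
}

# Usage (without -f, so the pipeline terminates):
#   kubectl logs wildfly-cc8b9546f-df9sw -n labs | cluster_size
```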

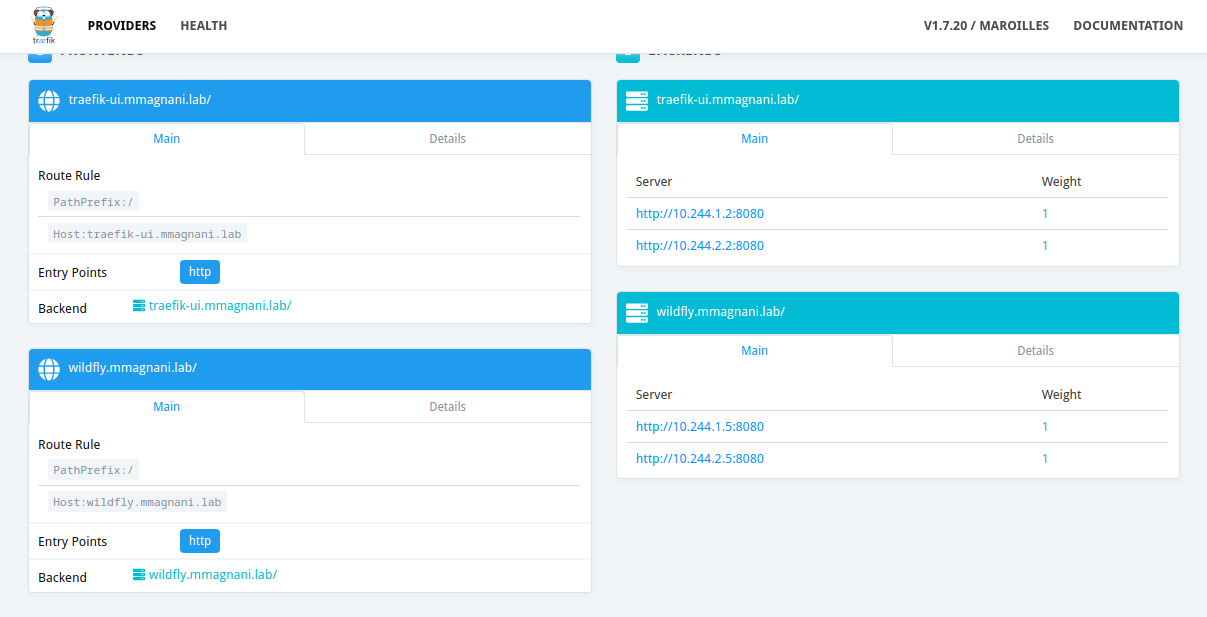

As I am using Traefik as the ingress controller, the application is available through the Ingress:

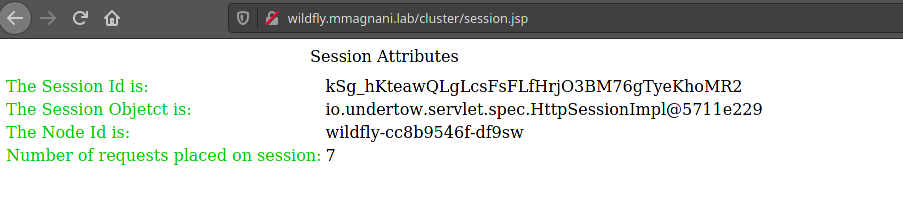
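To see the high availability in action, you can keep one HTTP session alive while a pod is deleted. With replication working, the session ID obtained beforehand should still be accepted by the remaining node. The sketch below makes two assumptions you need to adapt: `INGRESS_ADDR` is where Traefik answers, and `APP_PATH` is a page of cluster.war that creates a session. Both are hypothetical. WildFly may append a ".route" suffix to the session ID, and that suffix can change when another node serves the request. The sketch therefore compares only the part before the first dot.

```shell
#!/usr/bin/env bash
# Hypothetical session-failover check through the Ingress.
HOST=wildfly.mmagnani.lab
INGRESS_ADDR=${INGRESS_ADDR:-127.0.0.1}   # assumption: where Traefik listens
APP_PATH=${APP_PATH:-/cluster/}           # assumption: a page that creates a session
JAR=${JAR:-/tmp/wildfly-cookies.txt}      # curl cookie jar (Netscape format)

# Print the last JSESSIONID value stored in a curl cookie jar.
session_id() {
  awk '$6 == "JSESSIONID" { id = $7 } END { if (id != "") print id }' "$1"
}

# Succeed if two non-empty session IDs match, ignoring any ".route" suffix.
same_session() {
  [ -n "$1" ] && [ "${1%%.*}" = "${2%%.*}" ]
}

# One request via the Ingress; --fail turns HTTP errors (e.g. 502/503) into a non-zero status.
hit() {
  curl --silent --fail --output /dev/null --header "Host: $HOST" \
    --cookie "$JAR" --cookie-jar "$JAR" "http://$INGRESS_ADDR$APP_PATH"
}

# Usage (needs the cluster):
#   hit && before=$(session_id "$JAR")
#   kubectl delete pod wildfly-cc8b9546f-df9sw -n labs
#   hit && after=$(session_id "$JAR")
#   same_session "$before" "$after" && echo "session survived"
```

The deployment has no readiness probe, so the replacement pod may receive traffic before WildFly is up. If the second request fails, retry it rather than reading the failure as lost replication.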

In the application, it is also possible to add objects to the session:

Opinions expressed by DZone contributors are their own.
