Solving Four Kubernetes Networking Challenges

Deploying across multiple clouds, maintaining multiple environments, and ensuring reliable and scalable network policies are significant challenges. We’ll look at how to tackle them.


One of the main responsibilities of Kubernetes is to share nodes between applications. Networking is a fundamental requirement since those applications need to communicate with one another and the outside world.

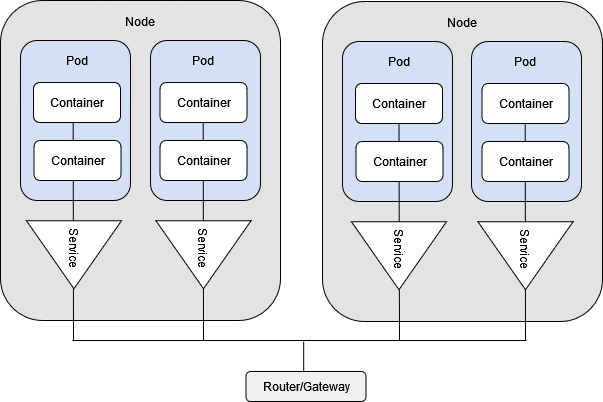

Requests from outside a Kubernetes cluster usually go through a router or API gateway responsible for proxying them to the appropriate services. The responsibility of Kubernetes networking is to provide the underlying communication layer, enabling requests to reach their intended destinations.

Distributed applications are spread across many nodes. When there are multiple replicas of each application, Kubernetes handles service discovery and communication between the service and its Pods. Inside a Pod, containers can communicate easily and transparently. Inside a cluster, Pods can connect to other Pods through a combination of virtual network interfaces, bridges, and routing rules, typically provided by an overlay network.

Despite the transparent handling, however, Kubernetes networking is more complex than it seems. Deploying across multiple clouds, maintaining multiple environments, and ensuring reliable and scalable network policies are significant challenges. Not all of these complexities are natively addressed by Kubernetes. In this article, we’ll look at how to tackle those challenges.

The Basics of Kubernetes Networking

In Kubernetes, Pods are responsible for handling container-to-container communication. Pods leverage network namespaces with their own network resources (interfaces and routing tables). Inside a Pod, containers share these resources, allowing them to communicate via localhost.

Pod-to-Pod communication must meet the following Kubernetes requirements:

- Pods need to communicate without network address translation (NAT).

- Nodes need to be able to communicate with Pods without NAT.

- The IP address that a Pod sees for itself must be the same IP address that other Pods see for it.

The Container Network Interface (CNI) includes a specification for writing network plugins to configure network interfaces. This allows you to create overlay networks that satisfy Pod-to-Pod communication requirements.

A service is a Kubernetes abstraction that allows Pods to expose and receive requests. It provides a service discovery mechanism through Pod labels and basic load balancing capabilities. Applications running inside Pods can easily use services to connect to other applications running in the cluster. Requests from outside the cluster can be routed through Ingress controllers. These controllers will use Ingress resources to configure routing rules, usually leveraging services to facilitate routing to the correct applications.
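To make the label-based discovery concrete, here is a minimal sketch of how a selector can narrow a set of Pods down to the endpoints behind a service, followed by a simple rotation over them. The Pod records, IP addresses, and the `select_pods` helper are hypothetical and only illustrate the idea; they are not Kubernetes code, and the actual balancing behavior in a cluster depends on how kube-proxy is configured.

```python
from itertools import cycle

# Hypothetical Pod records: a name, an IP, and a set of labels.
pods = [
    {"name": "web-1", "ip": "10.0.0.11", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "ip": "10.0.0.12", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "ip": "10.0.0.21", "labels": {"app": "db"}},
]


def select_pods(pods, selector):
    """Return the Pods whose labels contain every key/value pair in the selector."""
    return [
        pod for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


# A service with selector app=web resolves to the two web Pods.
endpoints = select_pods(pods, {"app": "web"})

# Rotate over the matching endpoints for three consecutive requests.
picker = cycle(endpoints)
chosen = [next(picker)["ip"] for _ in range(3)]
print(chosen)  # ['10.0.0.11', '10.0.0.12', '10.0.0.11']
```

Because clients address the service rather than individual Pods, replicas can come and go without callers needing to track their IP addresses.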

Non-Trivial Challenges

While these networking capabilities provide the foundational building blocks for Kubernetes-managed workloads, the dynamic and complex nature of cloud-native systems presents several challenges.

Service-to-Service Communication Reliability

In distributed systems, business functions are divided into multiple, autonomous services running over a cluster of nodes, Pods, and containers. A microservice architecture introduces the need for services to communicate over the network.

The volatility and elastic nature of the cloud require constant monitoring of the Kubernetes cluster and rerouting in case of failures. With ephemeral Pods and continual rerouting of resources, reliable service-to-service communication is not a given.

Efficient load balancing algorithms need to assign traffic to available replicas and isolate the overloaded ones. Similarly, service failure means client requests need to be retried and timed out gracefully. Complex scenarios might need circuit breakers and load-shedding techniques to handle surges in demand and failures.
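The retry and circuit-breaking patterns mentioned here can be sketched in a few lines. The following is an illustrative, simplified version, not the implementation used by any particular gateway or mesh: the class and function names, the thresholds, and the injectable `clock` and `sleep` parameters are all assumptions made for the example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker refuses a call because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # cool-down in seconds
        self.clock = clock                # injectable so the logic is testable
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; shedding request")
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single failure now re-opens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success closes the circuit fully
        return result


def call_with_retries(breaker, func, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Retry func through the breaker with exponential backoff between attempts."""
    for attempt in range(attempts):
        try:
            return breaker.call(func)
        except CircuitOpenError:
            raise  # do not keep retrying while the circuit is open
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
```

Wrapping an outbound request as `call_with_retries(breaker, send_request)` (where `send_request` is whatever function performs the call) retries transient failures, while the breaker stops sending traffic to a backend that keeps failing until the cool-down has passed.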

Elaborate Multi-Cloud Deployments

Complex, large-scale systems are often divided into multiple environments, with different parts deployed to different cloud platforms. These heterogeneous environments need to communicate with one another.

Even within the same cloud tenancy, or on-premises, the same workload can run in different environments (development, staging, and production). Though separated, these environments sometimes need to communicate with one another. For example, a staging environment may need to emulate the production workload and rigorously test the application before it goes live. After successful testing, both code and data may need to migrate from staging to production.

A seamless migration can be challenging in such cases. Also, there may be cases where a team simultaneously supports both VM and Kubernetes-hosted services. Or, perhaps a team designs systems that support multi-cloud—or at least multi-region—deployments for reliability, specifying the complex network configurations and elaborate ingress and egress rules.

Service Discovery

When running Kubernetes in cloud-native environments, it’s easy to scale services by spawning several replicas across multiple nodes. These application replicas are ephemeral—instantiated and destroyed as Kubernetes deems necessary. It’s non-trivial for microservices in the application to keep track of all these changes to IP addresses and ports. Nonetheless, these microservices need an efficient way to find service replicas.

Network Rules Scalability

Security best practices and industry regulations like the Payment Card Industry Data Security Standard (PCI DSS) call for strict networking rules that constrain which services may communicate with one another.

Kubernetes has the concept of Network Policies. These allow you to control traffic at the IP address or port level. You can specify the rules that would enable a Pod to communicate with other services using labels and selectors.
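As an illustration, the sketch below builds a NetworkPolicy manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The policy name, namespace, labels, and port are hypothetical, and the helper function is only a convenience for the example. The fields themselves (`podSelector`, `policyTypes`, `ingress`) come from the `networking.k8s.io/v1` API.

```python
import json


def allow_ingress_policy(name, namespace, target_labels, source_labels, port):
    """Build a NetworkPolicy that lets Pods matching source_labels reach
    Pods matching target_labels on a single TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # The Pods this policy applies to.
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only Pods carrying these labels may connect...
                    "from": [{"podSelector": {"matchLabels": source_labels}}],
                    # ...and only on this port.
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Hypothetical example: only the API Pods may talk to the database Pods.
policy = allow_ingress_policy(
    "allow-api-to-db", "payments", {"app": "db"}, {"app": "api"}, 5432
)
print(json.dumps(policy, indent=2))
```

Once a policy like this selects a Pod for ingress, traffic that is not explicitly allowed by some policy is dropped, provided the cluster's network plugin enforces Network Policies.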

As your system of microservices grows in number, reaching hundreds or thousands of services, network policy management becomes a complex, tedious, and error-prone process.

How Kong Ingress Controller Can Help

The Kubernetes Ingress Controller (KIC) from Kong is an Ingress implementation for Kubernetes. This Ingress controller, powered by Kong Gateway, acts as a cloud native, platform-agnostic, scalable API gateway. It’s built for hybrid and multi-cloud environments and optimized for microservices and distributed architectures.

KIC lets you configure routing rules, health checks, and load balancing, and it supports a variety of plugins that provide advanced functionality. This wide range of capabilities can help address the challenges we’ve discussed.

Reliable Service-to-Service Communication

Kubernetes services provide only simple load balancing capabilities (for example, round robin). One of the core features of KIC is to load balance between replicas of the same application. It can use algorithms like weighted round robin or least connections, or even sophisticated, custom implementations. These algorithms leverage the service registry of KIC to provide efficient routing.
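To show how such algorithms differ from plain rotation, here is a minimal sketch of a least-connections picker and a naive weighted round robin. This is not Kong's implementation; the class, the function, and the target addresses are hypothetical, and a production balancer would also account for health checks and concurrency.

```python
import itertools


class LeastConnectionsBalancer:
    """Send each new request to the target with the fewest active connections."""

    def __init__(self, targets):
        self.active = {target: 0 for target in targets}

    def acquire(self):
        # Ties are broken by insertion order of the targets.
        target = min(self.active, key=self.active.get)
        self.active[target] += 1
        return target

    def release(self, target):
        self.active[target] -= 1


def weighted_round_robin(weights):
    """Yield targets forever, each appearing in proportion to its integer weight."""
    expanded = [target for target, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(expanded)


lb = LeastConnectionsBalancer(["10.0.0.11", "10.0.0.12"])
first = lb.acquire()   # both idle, so the first target wins the tie
second = lb.acquire()  # the other target now has fewer connections
lb.release(first)
third = lb.acquire()   # the released target is least loaded again
print(first, second, third)  # 10.0.0.11 10.0.0.12 10.0.0.11

wrr = weighted_round_robin({"10.0.0.11": 2, "10.0.0.12": 1})
sequence = [next(wrr) for _ in range(6)]
print(sequence)
```

Least connections adapts to slow or overloaded replicas because busy targets accumulate open connections and stop being picked, while weights let larger replicas take a proportionally larger share of traffic.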

With KIC, you can configure retries for when a service is down, sensible timeouts, rerouting of requests to healthy service instances, and error handling. You can also implement failure patterns such as circuit breaking and load-shedding to smooth and throttle traffic.

Simpler Multi-Cloud Environment Deployments

Multi-environment and heterogeneous infrastructure deployments demand complex network policies and routing configurations. Kong Gateway, built into KIC, addresses many of these challenges.

Kong Gateway allows service registration independent of where services are deployed. With a registered service, you’ll be able to add routes, and KIC will be ready to proxy requests to your service. Additionally, since the services in a complex system sometimes communicate over different protocols (for example, REST and gRPC), you can configure KIC to support multiple protocols.

The plugin system allows you to extend the functionality of KIC for more complex scenarios. Kong Plugin Hub contains a strong collection of useful and battle-tested plugins, and KIC also enables you to develop and use whatever plugin best suits your needs.

Enhanced Service Discovery

As mentioned, KIC tracks available instances through its registry of services. As services integrate with KIC, they can self-register and report their availability. This registration can also be done through third-party registration services. By taking advantage of the service registry, KIC can proxy client requests to the proper backends at any time.
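The general idea of self-registration with availability reporting can be sketched as a small in-memory registry in which instances send periodic heartbeats and are dropped from the healthy set once they go quiet. This is a generic illustration and not KIC's registry or API: the class name, the TTL value, and the addresses are all assumptions.

```python
import time


class ServiceRegistry:
    """Track service instances and treat those with a recent heartbeat as healthy."""

    def __init__(self, ttl=15.0, clock=time.monotonic):
        self.ttl = ttl          # seconds an instance stays healthy without a heartbeat
        self.clock = clock      # injectable so the logic is testable
        self._instances = {}    # service name -> {address: last heartbeat time}

    def register(self, service, address):
        self._instances.setdefault(service, {})[address] = self.clock()

    def heartbeat(self, service, address):
        # A heartbeat simply refreshes the instance's timestamp.
        self.register(service, address)

    def deregister(self, service, address):
        self._instances.get(service, {}).pop(address, None)

    def healthy(self, service):
        now = self.clock()
        return sorted(
            address
            for address, seen in self._instances.get(service, {}).items()
            if now - seen <= self.ttl
        )
```

A proxy that consults `healthy("orders")` before forwarding a request only ever sees instances that have reported in within the TTL, so replicas that crash without deregistering age out on their own.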

Scalable Network Rules

Although enforcing network rules through Network Policies can be complicated, KIC can integrate with service mesh implementations like the CNCF’s Kuma or Istio with Kong Istio Gateway, extending the capabilities of Network Policies and adding further security controls.

With authentication and authorization policies, you’ll be able to strengthen network security in a consistent and automated way. Moreover, you can use network policies and service mesh policies together to provide an even better security posture.

An added benefit of the service mesh integration is that it allows for deployment patterns like canary deployments and blue/green deployments. It also enhances observability with reliable metrics and traces.
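A canary deployment comes down to sending a small, controlled share of traffic to the new version. The sketch below shows one common way to do that split deterministically by hashing a request or user identifier, so the same caller consistently lands on the same version. It is an illustration only: the function name and identifiers are hypothetical, and in practice a mesh or gateway performs this split through its own routing configuration.

```python
import hashlib


def pick_version(request_id, canary_percent):
    """Route roughly canary_percent of identifiers to the canary, the rest to stable."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # Map the identifier to a bucket in [0, 100); the slight modulo bias is
    # negligible for this purpose.
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "canary" if bucket < canary_percent else "stable"


# Start with 10% of traffic on the canary and count where 10,000 callers land.
assignments = [pick_version(f"user-{i}", 10) for i in range(10_000)]
canary_share = assignments.count("canary") / len(assignments)
print(f"canary share: {canary_share:.1%}")
```

Raising `canary_percent` step by step moves more traffic to the new version, and setting it to 0 or 100 corresponds to the two sides of a blue/green switch.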

Conclusion

Kubernetes can handle common networking tasks, making it easier for developers and operators to onboard services. However, with large and complex cloud-native systems, networking concerns are rarely simple. Organizations want to split monoliths into microservices, but they need to address unique concerns such as efficient load balancing or fault tolerance. Similarly, enabling seamless service migrations and transitions between different environments is not easy. Kubernetes networking capabilities need to be extended to support a wider range of scenarios.

KIC can efficiently tackle many of these challenges. It offers a broad range of functionality, including advanced routing and load balancing rules, complex ingress and egress rules, and fault tolerance measures. You can greatly improve service discovery with the service registry of KIC, which can track all available instances of each service. The easy integration of KIC with service meshes can help establish strong network security policies and leverage different deployment patterns.

Opinions expressed by DZone contributors are their own.
