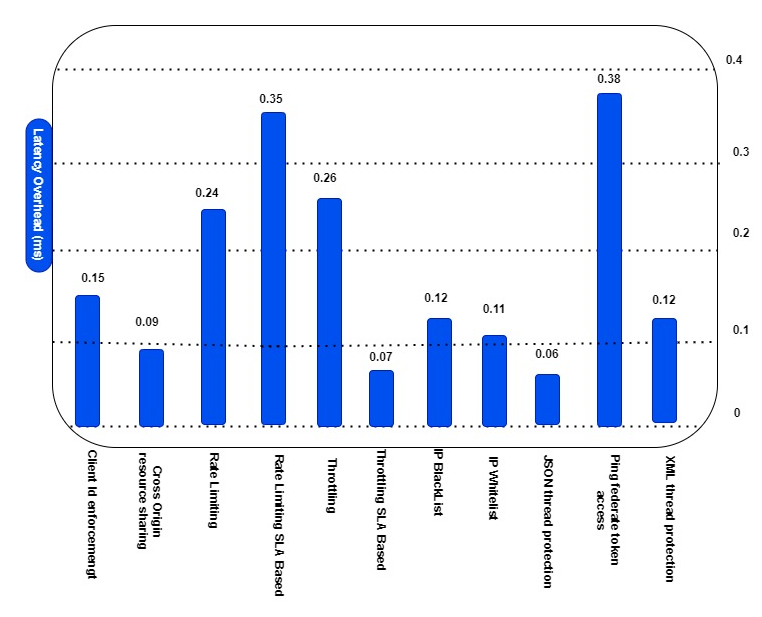

Latency Cost of Implementing API Policies (Anypoint Platform)

A quick analysis of the latency cost associated with implementing API policies. Depending on the type of API policy, that latency is up to ~0.38 milliseconds.

Applying an API policy to API invocations adds processing overhead, which results in increased latency (longer response time) as seen by API clients. Depending on the type of API policy, that added latency is up to approximately 0.38 milliseconds per HTTP request.

Increase in HTTP request-response latency caused by the application of various API policies, which are enforced embedded in the API implementation.

Measurement Setup for the Above Graph

Measurements for the above figure were performed using API Gateway 2.0, 1 kB payloads, and a c3.xlarge instance (4 vCores, 7.5 GB RAM, standard disks, 1 Gbit network).
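A comparable measurement can be approximated by timing the same request with and without a policy applied and comparing the median latencies. The following is a minimal sketch, not the method used for the figure above; the helper names `time_calls` and `policy_overhead_ms` are hypothetical and introduced only for illustration.

```python
import statistics
import time
from typing import Callable, List


def time_calls(call: Callable[[], object], repetitions: int) -> List[float]:
    """Return the wall-clock latency of each call, in milliseconds."""
    samples = []
    for _ in range(repetitions):
        start = time.perf_counter()
        call()  # e.g. one HTTP request against the API under test
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def policy_overhead_ms(baseline_ms: List[float], with_policy_ms: List[float]) -> float:
    """Estimate the added latency as the difference between median latencies.

    The median is used (an assumption of this sketch) because it is less
    sensitive to occasional slow outliers than the mean.
    """
    return statistics.median(with_policy_ms) - statistics.median(baseline_ms)
```

In practice, `call` would issue one request against the API, first with no policy applied and then with the policy enabled, using the same payload size and enough repetitions that sub-millisecond differences are distinguishable from noise.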

The non-functional requirements (NFRs) for the "Integration" product and "Self-Service App" product are a combination of constraints on throughput, response time, security, and reliability.

- Anypoint API Manager and API policies manage APIs and API invocations and can enforce NFRs at that level in multiple areas.

- API policies can be enforced directly in an API implementation that is a Mule application, or in a separately deployed API proxy.

- Client ID-based API policies require API clients to be registered for access to an API instance.

- Registered API clients must pass their client ID/secret with every API invocation, possibly implicitly via an OAuth 2.0 access token.

- The Hotel Management C4E has specified guidelines for the API policies to apply to System APIs, Process APIs, and Experience APIs.

- C4E has created reusable RAML fragments for API policies and published them to Anypoint Exchange.
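To illustrate what passing the client ID/secret with every API invocation can look like from the API client's side, here is a minimal sketch that builds (but does not send) a request. The header names `client_id` and `client_secret` are an assumption; the actual location of the credentials depends on how the policy is configured. The URL and the function names are hypothetical.

```python
import urllib.request


def build_invocation(url: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Build a GET request that carries client credentials explicitly.

    Assumption: the policy reads the credentials from the 'client_id' and
    'client_secret' request headers.
    """
    request = urllib.request.Request(url, method="GET")
    request.add_header("client_id", client_id)
    request.add_header("client_secret", client_secret)
    return request


def build_oauth_invocation(url: str, access_token: str) -> urllib.request.Request:
    """Build a GET request that carries the client identity implicitly,
    via an OAuth 2.0 bearer access token in the Authorization header."""
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", f"Bearer {access_token}")
    return request


# Hypothetical endpoint and placeholder credentials, for illustration only.
explicit = build_invocation("https://api.example.com/hotels", "my-client-id", "my-client-secret")
implicit = build_oauth_invocation("https://api.example.com/hotels", "my-access-token")
```

In both variants the credentials travel with each request, which is why the API client has to be registered before it can invoke the API.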
