Linking Apache Ignite and Apache Kafka for Highly Scalable and Reliable Data Processing

Here's how to link Apache Kafka and Ignite, for maintaining scalability and reliability for data processing. We'll explore injecting data with KafkaStreamer, as well as IgniteSinkConnector.

Join the DZone community and get the full member experience.

Join For FreeApache Ignite is a "high-performance, integrated and distributed in-memory platform for computing and transacting on large-scale data sets in real-time, orders of magnitude faster than possible with traditional disk-based or flash-based technologies." It has a vast, mature and continuously growing arsenal of in-memory components to boost the performance and increase the scalability of applications. In addition, it provides a great number of integration solutions including integrations with Apache Spark and Hadoop, Apache Flume, Apache Camel, and others.

Both Apache Ignite and Apache Kafka are known for their high scalability and reliability, and in this article, I will describe how one can easily link it to Apache Kafka messaging system to achieve a robust data processing pipeline. I will present two solutions available out of the box: KafkaStreamer and IgniteSinkConnector, based on the recently released feature of Apache Kafka -- Kafka Connect.

Injecting Data via KafkaStreamer

Fetching data from Kafka and injecting it into Ignite for further in-memory processing became possible from Ignite 1.3 via its KafkaStreamer, which is an implementation of IgniteDataStreamer using Kafka's consumer to pull data from Kafka brokers and efficiently placing it into Ignite caches.

To use it, first of all, you will have to add KafkaStreamer dependency to the pom.xml

<dependency>

<groupId>org.apache.ignite</groupId>

<artifactId>ignite-kafka</artifactId>

<version>${ignite.version}</version>

</dependency>Assuming you have a cache with String keys and String values, data streaming can be done in a simple manner.

KafkaStreamer<String, String, String> kafkaStreamer = new KafkaStreamer<>();

try (IgniteDataStreamer<String, String> stmr = ignite.dataStreamer(null)) {

// allow overwriting cache data

stmr.allowOverwrite(true);

kafkaStreamer.setIgnite(ignite);

kafkaStreamer.setStreamer(stmr);

// set the topic

kafkaStreamer.setTopic(someKafkaTopic);

// set the number of threads to process Kafka streams

kafkaStreamer.setThreads(4);

// set Kafka consumer configurations

kafkaStreamer.setConsumerConfig(kafkaConsumerConfig);

// set decoders

kafkaStreamer.setKeyDecoder(strDecoder);

kafkaStreamer.setValueDecoder(strDecoder);

kafkaStreamer.start();

}

finally {

kafkaStreamer.stop();

}For consumer configurations, you can refer Apache Kafka docs.

Injecting Data via IgniteSinkConnector

From the upcoming Apache Ignite release 1.6 yet another way to integrate your data processing becomes possible. It is based on Kafka Connect, a new feature introduced in Apache Kafka 0.9 that enables scalable and reliable streaming data between Apache Kafka and other data systems.

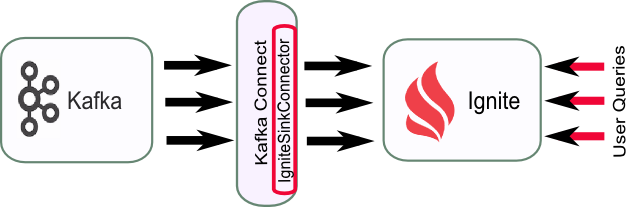

Such integration enables continuous and safe streaming data from Kafka to Ignite for computing and transacting on large-scale data sets in memory.

Fig 1. Data Continuously Injected via IgniteSinkConnector and Queries by Users.

Connector can be found in 'optional/ignite-kafka.' It and its dependencies have to be on the classpath of a Kafka running instance.

Also, you will need to configure your connector, for instance, as follows

# connector

name=my-ignite-connector

connector.class=org.apache.ignite.stream.kafka.connect.IgniteSinkConnector

tasks.max=2

topics=someTopic1,someTopic2

# cache

cacheName=myCache

cacheAllowOverwrite=true

igniteCfg=/some-path/ignite.xmlwhere igniteCfg is set to the path to the Ignite cache configuration file, cacheName is the name of the cache you specify in '/some-path/ignite.xml' and the data from someTopic1,someTopic2 will be streamed to. cacheAllowOverwrite is set to true to enable overwriting existing values in the cache.

Another important configuration is Kafka Connect workers' properties, which can look like this for our simple example

bootstrap.servers=localhost:9092

key.converter=org.apache.kafka.connect.storage.StringConverter

value.converter=org.apache.kafka.connect.storage.StringConverter

key.converter.schemas.enable=false

value.converter.schemas.enable=false

internal.key.converter=org.apache.kafka.connect.storage.StringConverter

internal.value.converter=org.apache.kafka.connect.storage.StringConverter

internal.key.converter.schemas.enable=false

internal.value.converter.schemas.enable=false

offset.storage.file.filename=/tmp/connect.offsets

offset.flush.interval.ms=10000Now you can start your connector, for example, in a standalone mode

bin/connect-standalone.sh myconfig/connect-standalone.properties myconfig/ignite-connector.propertiesNote that for a distributed mode, myconfig/ignite-connector.properties are not passed on the command line. Use REST API to create, modify and destroy the connector.

Checking the Flow

For a very basic verification of the flow, we can perform the following quick check.

Start Zookeeper and Kafka servers

bin/zookeeper-server-start.sh config/zookeeper.properties

bin/kafka-server-start.sh config/server.propertiesSince our sample schema handles string data, we can feed key1,val1 via kafka-console-producer (This is done just for illustration -- normally you will use a Kafka producer or source connectors to feed data)

bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test --property parse.key=true --property key.separator=,

key1,val1Then, start the connector as described.

That's all! The data ends up in the Ignite cache, ready for further in-memory processing or analysis via SQL queries (See Apache Ignite docs).

Opinions expressed by DZone contributors are their own.

Comments