Building a Reactive Event-Driven App With Dead Letter Queue

Learn how to build fault-tolerant, reactive event-driven applications using Spring WebFlux, Apache Kafka, and a Dead Letter Queue to prevent data loss.


Event-driven architecture enables systems to react to real-world events, such as a user updating their profile. This post illustrates how to build reactive event-driven applications that guard against data loss by combining Spring WebFlux, Apache Kafka, and a Dead Letter Queue (DLQ). Together, these provide a foundation for fault-tolerant, resilient, high-performance systems, which large applications need in order to process massive volumes of data efficiently.

Features Used in This Article

- Spring WebFlux: Provides a reactive, non-blocking programming model with backpressure, so many events can be processed concurrently.

- Apache Kafka: Reactive Kafka producers and consumers help build efficient, scalable processing pipelines.

- Reactive Streams: Let Kafka producer and consumer streams run without blocking execution threads.

- Dead Letter Queue (DLQ): A DLQ temporarily stores messages that could not be processed for various reasons. These messages can later be reprocessed, which prevents data loss and makes event processing resilient.

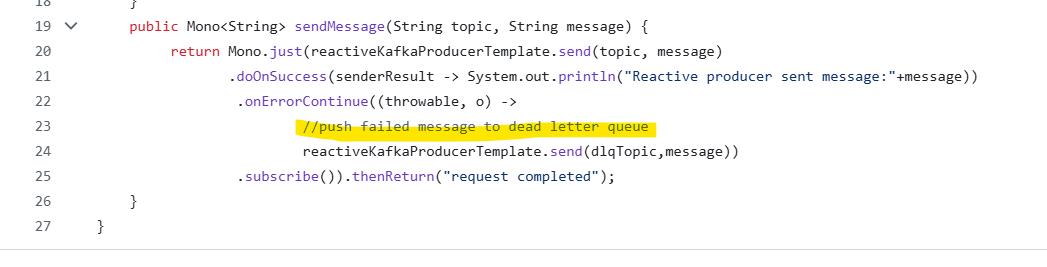

Reactive Kafka Producer

A Reactive Kafka Producer publishes messages concurrently without blocking other threads while publishing, which is beneficial when large volumes of data need to be processed. It integrates well with Spring WebFlux and handles backpressure within microservices architectures. This integration helps not only with processing large message volumes but also with using cloud resources efficiently.

The reactive Kafka producer shown above can be found on GitHub.
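The snippet below is a minimal, JDK-only sketch of the non-blocking publishing idea, not the article's producer. It assumes a hypothetical `sendAsync` method and uses an in-memory queue in place of a Kafka topic; in Reactor Kafka the equivalent role is played by `KafkaSender.send`, which returns a `Flux` of send results. Each send returns a `CompletableFuture` immediately, so the calling thread never waits on an individual message.

```java
import java.util.List;
import java.util.Queue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;
import java.util.stream.Collectors;

// Minimal model of non-blocking, parallel publishing (no real Kafka broker involved).
class NonBlockingProducerSketch {

    // Hypothetical async send: returns immediately; the write runs on `io`.
    // `topic` stands in for a Kafka topic and must be thread-safe.
    static CompletableFuture<String> sendAsync(String message, Queue<String> topic, Executor io) {
        return CompletableFuture.supplyAsync(() -> {
            topic.add(message);
            return "ack:" + message;                  // simulated broker acknowledgment
        }, io);
    }

    // Starts every send at once and returns a future of all acks, in input order.
    static CompletableFuture<List<String>> publishAll(List<String> messages,
                                                      Queue<String> topic, Executor io) {
        List<CompletableFuture<String>> pending = messages.stream()
                .map(m -> sendAsync(m, topic, io))    // none of these calls blocks the caller
                .collect(Collectors.toList());
        return CompletableFuture.allOf(pending.toArray(new CompletableFuture<?>[0]))
                .thenApply(ignored -> pending.stream()
                        .map(CompletableFuture::join) // every future is already complete here
                        .collect(Collectors.toList()));
    }
}
```

The caller only waits if it chooses to call `join()` on the combined future; a reactive application would instead keep composing on it.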

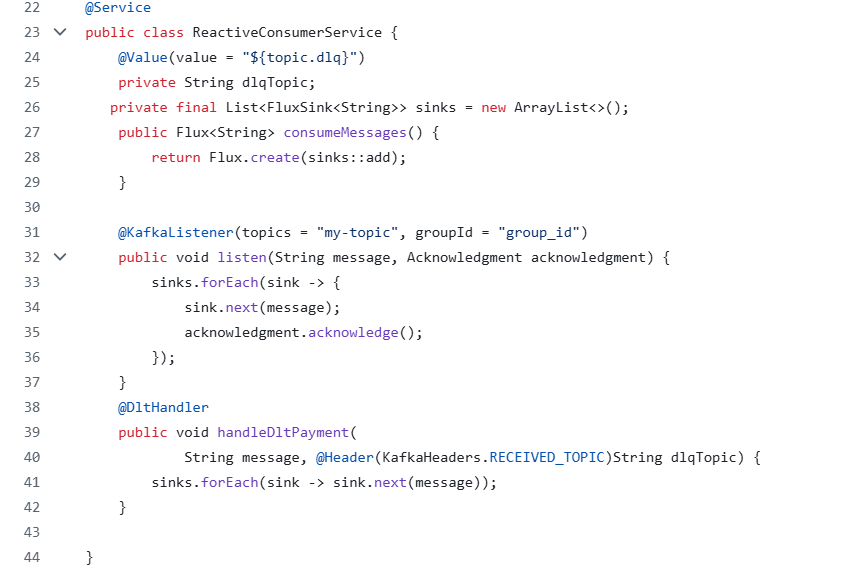

Reactive Kafka Consumer

A Reactive Kafka Consumer pulls messages from Kafka without blocking and maintains high throughput. It also supports backpressure and integrates well with WebFlux for real-time data processing. The reactive consumer pipeline uses resources efficiently, which makes it well suited to applications deployed in the cloud.

The reactive Kafka consumer shown above can be found on GitHub.
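To make backpressure concrete without a broker, the following sketch uses the JDK's `java.util.concurrent.Flow` interfaces, which correspond to the Reactive Streams specification. The hypothetical `BatchingSubscriber` requests records in batches of `batchSize`, so the publisher never pushes more than the consumer has asked for. Reactor Kafka's `KafkaReceiver.receive()` exposes records as a `Flux`, so the same demand-driven model applies there; the names below are illustrative only.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;
import java.util.concurrent.TimeUnit;

// Minimal model of a consumer that controls its own demand (backpressure), no Kafka involved.
class BackpressureConsumerSketch {

    // Hypothetical subscriber that never asks for more than `batchSize` records at a time.
    static final class BatchingSubscriber implements Flow.Subscriber<String> {
        private final int batchSize;
        private final List<String> processed = new CopyOnWriteArrayList<>();
        private final CountDownLatch finished = new CountDownLatch(1);
        private Flow.Subscription subscription;
        private int leftInBatch;

        BatchingSubscriber(int batchSize) {
            if (batchSize < 1) throw new IllegalArgumentException("batchSize must be >= 1");
            this.batchSize = batchSize;
        }

        @Override
        public void onSubscribe(Flow.Subscription s) {
            subscription = s;
            leftInBatch = batchSize;
            s.request(batchSize);                    // initial demand: one batch
        }

        @Override
        public void onNext(String record) {
            processed.add(record.toUpperCase());     // placeholder for real processing
            if (--leftInBatch == 0) {                // batch done: signal demand for the next one
                leftInBatch = batchSize;
                subscription.request(batchSize);
            }
        }

        @Override
        public void onError(Throwable error) {
            finished.countDown();
        }

        @Override
        public void onComplete() {
            finished.countDown();
        }
    }

    // Feeds `records` through the subscriber and waits (demo only) for it to finish.
    static List<String> consume(List<String> records, int batchSize) {
        BatchingSubscriber subscriber = new BatchingSubscriber(batchSize);
        try (SubmissionPublisher<String> publisher = new SubmissionPublisher<>()) {
            publisher.subscribe(subscriber);
            records.forEach(publisher::submit);      // buffered until the subscriber requests them
        }                                            // close() signals completion after buffered items
        try {
            subscriber.finished.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return subscriber.processed;
    }
}
```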

Dead Letter Queue (DLQ)

A DLQ is simply a Kafka topic that stores messages that were sent by producers but failed to be processed. Real-world systems need to keep functioning without blockages or failures, and in an event-driven architecture this can be achieved by redirecting such messages to the Dead Letter Queue.
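As a minimal sketch of the redirect step, the code below processes each message and parks any that throw into a DLQ list, then carries on with the next message. The `DeadLetter` record, the `processAll` method, and the `orders.DLT` topic name are hypothetical, and a plain list stands in for the Kafka DLQ topic. In a Reactor pipeline the same per-message guard is typically written with `onErrorResume` inside `concatMap`, because an error that reaches the outer `Flux` would terminate it.

```java
import java.util.List;
import java.util.function.Consumer;

// Minimal model of DLQ routing: a failing message is parked instead of stopping the stream.
class DlqRoutingSketch {

    // Hypothetical DLQ entry: destination topic, original payload, and failure reason.
    record DeadLetter(String topic, String payload, String error) {}

    static final String DLQ_TOPIC = "orders.DLT";   // hypothetical DLQ topic name

    // Processes every message; failures go to `dlq` and processing continues.
    // Returns the number of messages that were handled successfully.
    static int processAll(List<String> messages, Consumer<String> handler, List<DeadLetter> dlq) {
        int succeeded = 0;
        for (String message : messages) {
            try {
                handler.accept(message);
                succeeded++;
            } catch (RuntimeException e) {
                // Park the failing message with its reason, then move on to the next one.
                dlq.add(new DeadLetter(DLQ_TOPIC, message, e.toString()));
            }
        }
        return succeeded;
    }
}
```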

Benefits of Dead Letter Queue Integration

- It provides a fallback mechanism to prevent interruption in the flow of messages.

- It retains unprocessed data, which helps prevent data loss.

- It stores failure metadata, which aids root-cause analysis.

- It allows unprocessed messages to be retried as many times as needed.

- It decouples error handling and makes the system resilient.
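The retry and metadata benefits can be combined in a small sketch: try a message a bounded number of times and, only if every attempt fails, build a DLQ entry carrying headers that describe the failure. The `processWithRetry` method and the `dlq-*` header names are assumptions made for illustration; Spring for Apache Kafka's `DeadLetterPublishingRecoverer` adds its own failure headers, and Reactor offers `retryWhen` for retrying inside a reactive pipeline.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;
import java.util.function.Consumer;

// Minimal model of "retry a few times, then dead-letter with failure metadata".
class RetryThenDlqSketch {

    // Hypothetical DLQ entry: the original payload plus headers describing the failure.
    record DeadLetter(String payload, Map<String, String> headers) {}

    // Tries the handler up to maxAttempts times; returns a DeadLetter only if every attempt fails.
    static Optional<DeadLetter> processWithRetry(String payload, Consumer<String> handler,
                                                 String sourceTopic, int maxAttempts) {
        if (maxAttempts < 1) throw new IllegalArgumentException("maxAttempts must be >= 1");
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                handler.accept(payload);
                return Optional.empty();            // success: nothing goes to the DLQ
            } catch (RuntimeException e) {
                last = e;                           // remember the most recent failure
            }
        }
        // Failure metadata stored with the message to support root-cause analysis.
        Map<String, String> headers = new LinkedHashMap<>();
        headers.put("dlq-source-topic", sourceTopic);
        headers.put("dlq-exception-class", last.getClass().getName());
        headers.put("dlq-exception-message", String.valueOf(last.getMessage()));
        headers.put("dlq-attempts", Integer.toString(maxAttempts));
        return Optional.of(new DeadLetter(payload, headers));
    }
}
```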

Failed messages can be pushed to the DLQ from the producer code as shown below:

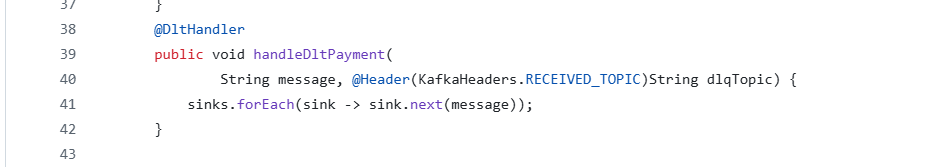
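For readers who want to experiment with the idea, here is a hedged, broker-free sketch of producer-side DLQ routing: when an asynchronous send fails, the payload and the failure reason are written to a DLQ instead of being dropped. The `send` and `sendOrDeadLetter` methods and the simulated `MAX_PAYLOAD_CHARS` limit are hypothetical. With Reactor Kafka, per-record send failures can be observed, for example, through `SenderResult.exception()` when the sender is configured with `stopOnError(false)`.

```java
import java.util.Queue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;

// Minimal model of producer-side DLQ routing when a send fails (no real broker involved).
class ProducerDlqSketch {

    static final int MAX_PAYLOAD_CHARS = 10;   // hypothetical limit, used only to simulate failures

    // Hypothetical async send: fails the future when the payload exceeds the limit.
    static CompletableFuture<Void> send(String payload, Queue<String> mainTopic) {
        return CompletableFuture.runAsync(() -> {
            if (payload.length() > MAX_PAYLOAD_CHARS) {
                throw new IllegalStateException("payload too large: " + payload.length());
            }
            mainTopic.add(payload);
        });
    }

    // Sends a payload; if the send fails, the payload and the reason go to the DLQ instead.
    static CompletableFuture<Void> sendOrDeadLetter(String payload, Queue<String> mainTopic,
                                                   Queue<String> dlq) {
        return send(payload, mainTopic).exceptionally(error -> {
            // runAsync wraps the thrown exception in a CompletionException; unwrap it.
            Throwable cause = error instanceof CompletionException && error.getCause() != null
                    ? error.getCause() : error;
            dlq.add(payload + " | " + cause.getMessage());
            return null;
        });
    }
}
```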

A DLQ handler also needs to be created in the reactive consumer.
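One possible shape for such a handler is sketched below: it replays dead-lettered payloads through the current processing logic (for example, after the underlying bug has been fixed) and separates those that now succeed from those that still fail. The `DlqReplaySketch` class, its `replay` method, and the `ReplayResult` record are hypothetical, and a list stands in for records read from the DLQ topic; in a real service the input would come from a consumer subscribed to that topic.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Minimal model of a DLQ handler that replays parked messages (no real Kafka involved).
class DlqReplaySketch {

    // Outcome of one replay pass over the DLQ.
    record ReplayResult(List<String> recovered, List<String> stillFailing) {}

    // Re-runs `processor` on every dead-lettered payload; a thrown exception means it still fails.
    static ReplayResult replay(List<String> deadLetters, Consumer<String> processor) {
        List<String> recovered = new ArrayList<>();
        List<String> stillFailing = new ArrayList<>();
        for (String payload : deadLetters) {
            try {
                processor.accept(payload);
                recovered.add(payload);       // processed successfully this time
            } catch (RuntimeException e) {
                stillFailing.add(payload);    // keep it parked for a later attempt or manual review
            }
        }
        return new ReplayResult(recovered, stillFailing);
    }
}
```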

Conclusion

Incorporating a DLQ with a reactive producer and consumer helps build resilient, fault-tolerant, and efficient event-driven applications. Reactive producers ensure non-blocking message publication, while reactive consumers process messages with backpressure, improving responsiveness. The DLQ provides a fallback mechanism that prevents disruptions and data loss.

This architecture isolates system failures and aids debugging, so the underlying issues can then be addressed to improve the application.

The reference code above can be found in the GitHub producer and GitHub consumer repositories.

More details on the reactive producer and consumer can be found at ReactiveEventDriven. The Spring for Apache Kafka documentation provides more information on DLQs.

Opinions expressed by DZone contributors are their own.
