Microservice Testing: Coupling and Cohesion (All the Way Down)

This article will walk you through the important points of coupling and cohesion in microservices testing, from the architectural level down.


The last couple of weeks have involved my training co-conspirator Andrew Morgan and me teaching several workshops on microservice testing, most notably at O'Reilly SACON NY and QCon London. This is always great fun: we enjoy sharing our knowledge, we typically learn a bunch, and we also get a glimpse into many of the attendees' approaches to testing.

The "best practices" for testing microservice projects are still very much evolving. My current go-to material includes Toby Clemson's excellent 2014 work, alongside Cindy Sridharan's more modern take, "Testing Microservices, the sane way", and my own attempts too, but I'm starting to see some current challenges and potential antipatterns emerge. This is generally a good thing, as the formation of antipatterns around the edges of a practice can sometimes indicate the overall maturation of an approach. Here are my (still crystallizing) high-level thoughts on the subject of testing microservice-based applications.

tl;dr:

- Think about coupling and cohesion when designing microservices (yeah, yeah, I know, but I mean seriously think about this, and even do some upfront design!)

- Watch for various types of "monolith" creeping into the design and operation of the system - there is more than one monolith!

- Avoid over-reliance on "monolithic" end-to-end testing

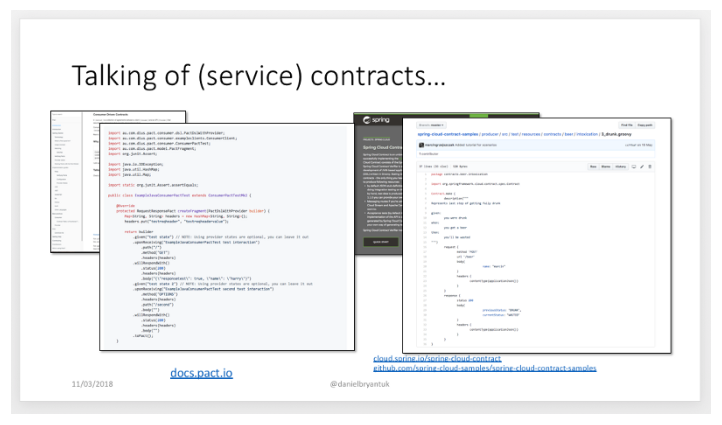

- Use contracts to define and test inter-service integrations, via IDLs like gRPC (Protocol Buffers) and Avro, or consumer-driven contract (CDC) tooling like Pact and Spring Cloud Contract

- Isolate intra-service tests with appropriate use of mocking, stubbing and virtualization/simulation

- Avoid platform/infra over-coupling when testing - if your infrastructure test setup looks too complicated for a local test, then it probably is

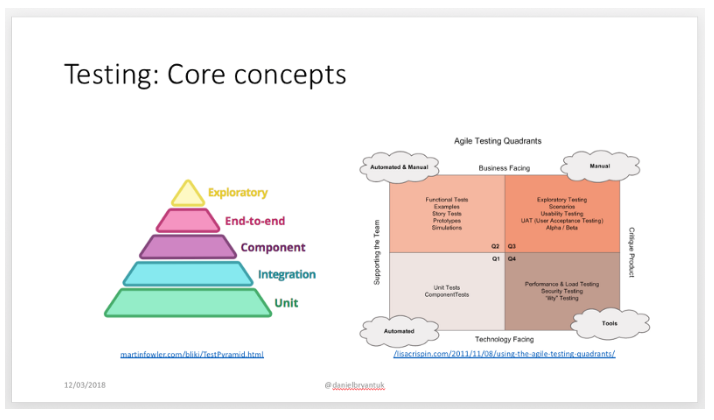

- Categorise tests and define the purpose of each category (unit, integration, component, e2e, etc.), and ensure cohesion (and a single reason to change) not only within the groups, but also within the test implementation

Architectural Coupling and Cohesion

I'm sure you've heard it all before - maybe in college, maybe from a book, or perhaps at a local meetup - but everyone "knows" that in the general case you should strive to build systems that are loosely-coupled and highly-cohesive. I'm not going to talk too much about this, as other people like Martin Fowler, Robert Martin and Simon Brown (and many others) have been talking about this for years. However, a quick reminder of the core concepts won't hurt:

- Coupling: the degree to which components have knowledge of other components. Think well-defined interfaces, inversion of control, etc.

- Cohesion: the degree to which the elements within a component belong together. Think single responsibility principle and single reason to change

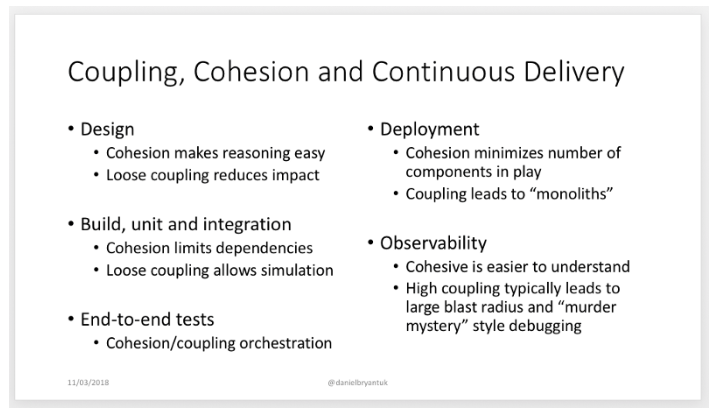
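As a small illustration of the "well-defined interfaces, inversion of control" side of loose coupling, here is a minimal Java sketch. Every name in it (`OrderService`, `Notifier`, `RecordingNotifier`) is hypothetical: the service depends only on a narrow interface that is handed in from outside, so it has no knowledge of which concrete notification component sits behind it, and a test can plug in a trivial implementation.

```java
import java.util.ArrayList;
import java.util.List;

// Entry point is declared first so `java IocSketch.java` (single-file launch) finds main.
class IocSketch {
    public static void main(String[] args) {
        RecordingNotifier notifier = new RecordingNotifier();
        OrderService orders = new OrderService(notifier);
        orders.placeOrder("order-42");
        System.out.println(notifier.sent()); // [Order placed: order-42]
    }
}

// The only knowledge OrderService has of its collaborator: a narrow, well-defined interface.
interface Notifier {
    void send(String message);
}

// Hypothetical service. The Notifier is handed in from outside (inversion of control),
// so OrderService never constructs, or even names, a concrete email/SMS/queue client.
class OrderService {
    private final Notifier notifier;

    OrderService(Notifier notifier) {
        this.notifier = notifier;
    }

    void placeOrder(String orderId) {
        // ...order-handling logic would live here...
        notifier.send("Order placed: " + orderId);
    }
}

// A trivial in-memory Notifier, handy as a test double.
class RecordingNotifier implements Notifier {
    private final List<String> messages = new ArrayList<>();

    @Override
    public void send(String message) {
        messages.add(message);
    }

    List<String> sent() {
        return List.copyOf(messages);
    }
}
```

Swapping the notification mechanism means writing another `Notifier` implementation; `OrderService` itself has no reason to change.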

I talked at O'Reilly SACON NY about how these properties play into every stage of the continuous delivery cycle.

So, for the rest of this post I'm going to take as a given that we all agree these properties are beneficial for creating a good, evolutionary architecture for the software systems we build - after all, this ability to rapidly and safely evolve our applications was core to the acceptance of the microservice architectural style as the current "best practice" style of building many (but not all) modern software systems.

Where I think it gets interesting is that this "turtles all the way down" mindset of loose coupling and high cohesion can be applied to our goals, practices, and tooling, particularly with regard to testing.

Testing Coupling and Cohesion

It's super-easy to create a distributed monolith when designing a system using the microservice pattern - I know, I've done it once - but it's also easy to allow the monolith to sneak in elsewhere. My friend Matthew Skelton has presented a series of excellent talks on the types of "software monoliths" that can creep into a project:

- Application monolith

- Joined at the DB

- Monolithic build (rebuild everything)

- Monolithic releases (coupled)

- Monolithic thinking (standardization)

I'm going to "stand on the shoulders of giants" and suggest that a sixth type of monolith may be the "testing monolith" (which is somewhat similar to the monolithic build and release types Matthew defines). To be honest, it's not just Matthew's shoulders I'm standing on, as fellow Londoner and CD guru Steve Smith has already suggested that he believes "End-to-end Testing [is] Considered Harmful". In essence, what Steve suggests is that (monolithically) spinning everything up in order to verify the system has a bunch of issues, not least the "decomposition fallacy" and the "cheap investment fallacy". I believe that the challenges with testing microservice-based applications can be even more insidious than this, though.

Highly-Coupled Microservice Testing

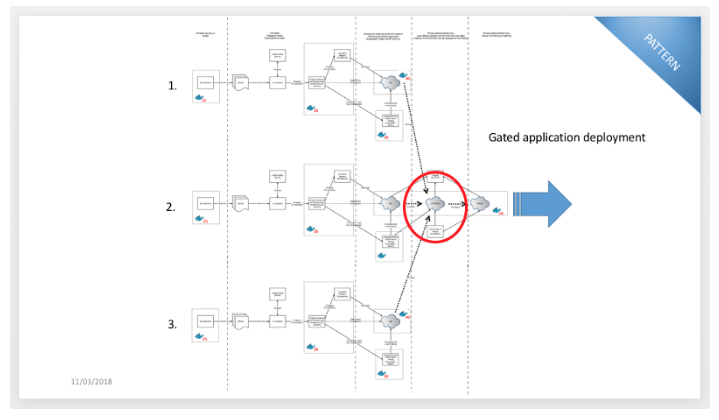

The ultimate goal of many (all?) microservice-based applications is the independent deployability of each service. When done correctly, this enables an increased pace of deployment and, correspondingly, faster evolution of the system. However, many of us have to start somewhere, and so we often begin with gated microservice deployment: designing and building our microservices in isolation (ideally with cross-functional teams) and verifying all of our services together in a staging environment before releasing to production. This isn't a particularly bad pattern, but it doesn't provide much in the way of independent evolution:

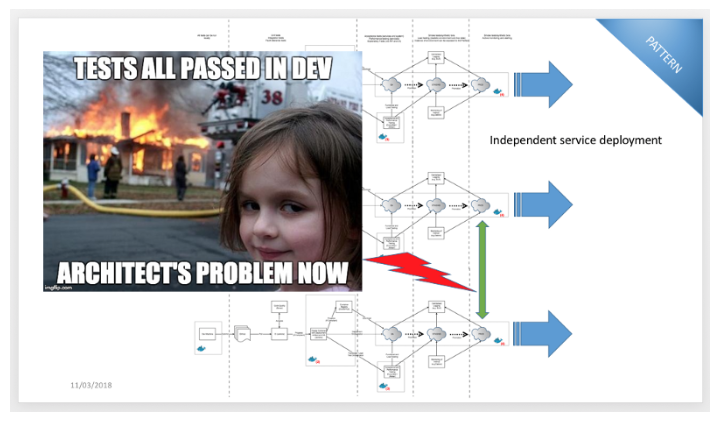

Many teams successfully implement this pattern, and then try to move towards independent deployment. The only snag is that at least some of the services depend on one another, perhaps through an RPC API call or a message payload contract. If teams forget this, they often verify everything successfully during local testing, only for the services (and the application) to fall over in production. I joked about this (and the role I've played in it as a "microservices architect") at SACON.

In my (anecdotal) experience, developers and architects have limited experience with techniques that promote the loose coupling (and isolation) of tests across domain boundaries. My hypothesis is that this is primarily because it wasn't much of an issue with a monolith, or if it was, it was relatively easy to catch and fix, as all of the domains were bundled into one artifact for test and deploy. The original "legacy" approaches to distributed computing, such as CORBA and classical SOA, got around this challenge by embracing Interface Definition Languages (IDLs), such as the somewhat ironically named "OMG IDL", and web service contracts (WSDLs).

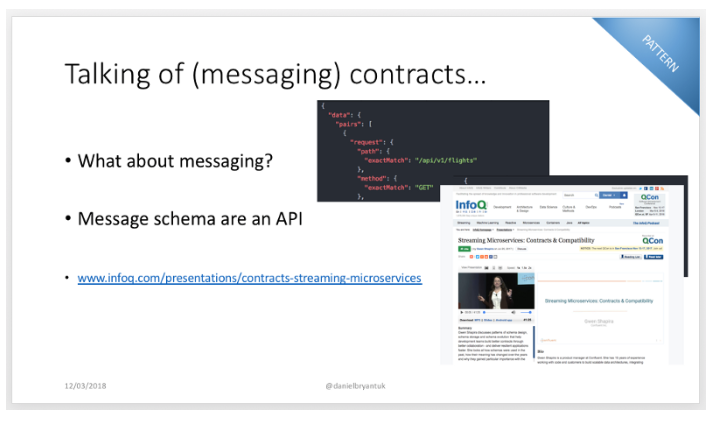

On an unrelated topic, I'm somewhat of a history geek, and a well-accepted saying within this community is that "history doesn't repeat, but it often rhymes". So it is with little innovation on my part that I say the existing solutions of IDLs and contracts are exactly the approaches I recommend when testing microservices. Using IDLs like gRPC's Protocol Buffers for inter-service RPC, and Avro for message payload definitions (perhaps for Kafka), helps greatly with verifying interfaces across service boundaries.
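To make the payload-schema idea concrete, here is a deliberately simplified Java sketch of the kind of check that schema tooling performs when a consumer upgrades its schema. It loosely follows Avro's schema-resolution rules (a reader field absent from the writer's schema must have a default; writer-only fields are ignored) but is stricter, because it ignores Avro's type-promotion rules. The `Schema`/`Field` records and the `OrderPlaced` event are hypothetical; in a real project you would rely on Avro's own compatibility checks (such as its `SchemaCompatibility` utility) or a schema registry rather than hand-rolled code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

class SchemaCompatSketch {
    public static void main(String[] args) {
        // v1 of a hypothetical "OrderPlaced" event, as written by the producer.
        Schema v1 = new Schema(Map.of(
                "orderId", new Field("string", false),
                "amount", new Field("long", false)));
        // v2 adds a field WITH a default: events written with v1 can still be read.
        Schema v2 = new Schema(Map.of(
                "orderId", new Field("string", false),
                "amount", new Field("long", false),
                "currency", new Field("string", true)));
        // v3 adds a field WITHOUT a default: events written with v1 cannot be read.
        Schema v3 = new Schema(Map.of(
                "orderId", new Field("string", false),
                "amount", new Field("long", false),
                "customerId", new Field("string", false)));

        System.out.println(problems(v2, v1)); // []
        System.out.println(problems(v3, v1)); // [reader field 'customerId' missing from writer and has no default]
    }

    /** Returns reasons why `reader` cannot decode data written with `writer` (empty = compatible). */
    static List<String> problems(Schema reader, Schema writer) {
        List<String> issues = new ArrayList<>();
        for (var entry : reader.fields().entrySet()) {
            String name = entry.getKey();
            Field readerField = entry.getValue();
            Field writerField = writer.fields().get(name);
            if (writerField == null) {
                if (!readerField.hasDefault()) {
                    issues.add("reader field '" + name + "' missing from writer and has no default");
                }
            } else if (!readerField.type().equals(writerField.type())) {
                // Stricter than Avro, which also allows promotions such as int -> long.
                issues.add("field '" + name + "' changed type from "
                        + writerField.type() + " to " + readerField.type());
            }
            // Fields present only in the writer's schema are simply skipped by the reader.
        }
        return issues;
    }
}

record Field(String type, boolean hasDefault) {}

record Schema(Map<String, Field> fields) {}
```

Running a check like this in the producer's and consumer's build pipelines is what lets each side evolve independently without spinning the other up.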

For more loosely-coupled approaches to communication, like HTTP and AMQP message payloads, I recommend consumer-driven contract (CDC) testing tools such as Pact and Spring Cloud Contract.
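The core loop of consumer-driven contracts is small enough to sketch in plain Java. The snippet below is not the API of Pact or Spring Cloud Contract; `Contract`, `Interaction` and `verify` are hypothetical names, and the provider is modelled as a simple function rather than a running HTTP endpoint. It shows the essential idea: the consumer records only the fields it actually relies on, and the provider's build replays those interactions against its own implementation.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Function;

class CdcSketch {
    public static void main(String[] args) {
        // Written by the CONSUMER team: only the response fields the consumer actually reads.
        Contract contract = new Contract("checkout-ui", "order-service", List.of(
                new Interaction("GET /orders/42", Map.of("id", "42", "status", "SHIPPED"))));

        // The PROVIDER's handler (a stand-in for a real HTTP endpoint).
        // Extra fields are fine: the consumer never asked for them.
        Function<String, Map<String, Object>> provider = request ->
                Map.of("id", "42", "status", "SHIPPED", "internalNotes", "not used by consumer");
        System.out.println(verify(contract, provider)); // []

        // A provider change that renames a field the consumer depends on.
        Function<String, Map<String, Object>> renamedField = request ->
                Map.of("id", "42", "state", "SHIPPED");
        System.out.println(verify(contract, renamedField));
        // [GET /orders/42: expected status=SHIPPED but got null]
    }

    /** Replays every consumer interaction against the provider and reports mismatches. */
    static List<String> verify(Contract contract, Function<String, Map<String, Object>> provider) {
        return contract.interactions().stream()
                .flatMap(interaction -> {
                    Map<String, Object> actual = provider.apply(interaction.request());
                    return interaction.expectedFields().entrySet().stream()
                            .filter(e -> !e.getValue().equals(actual.get(e.getKey())))
                            .map(e -> interaction.request() + ": expected " + e.getKey() + "="
                                    + e.getValue() + " but got " + actual.get(e.getKey()));
                })
                .toList();
    }
}

record Interaction(String request, Map<String, Object> expectedFields) {}

record Contract(String consumer, String provider, List<Interaction> interactions) {}
```

Because the provider is verified against the consumer's stated expectations, neither team has to spin up the other's service to catch the breaking rename.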

One final warning in this space: be wary of coupling yourself to a testing framework or infrastructure configuration. Most of our job as software developers, testers and architects is about making tradeoffs, but often half of the battle is spotting that you are making a tradeoff in the first place. I have seen many engineers create (with the best intentions) hideously complicated and highly-coupled bespoke testing frameworks. This starts with the language platform itself (in my JVM-biased world I have seen people seriously abuse the awesome Spring Boot application framework by layering on more and more bespoke framework elements and scaffolding in the name of easy testing) and extends into the infra/ops world, for example, requiring the local installation of Docker, Kubernetes, Ansible, Cassandra, MySQL, the Internet (you get the point) just to be able to initialize the tests.

I'm slightly biased in this space (having previously worked as the CTO at SpectoLabs), but I often reach for service virtualization or API simulation tooling to minimize test coupling. Tools like Hoverfly, Mountebank or WireMock allow me to "virtualize" dependent services by running automated tests (perhaps driven by Serenity BDD or Gatling) against the real services, spun up in a production-like environment, and recording the responses. I can then replay these responses in a variety of test categories without needing to spin up the complete service and supporting infrastructure.
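The capture-then-replay workflow described above can be sketched in a few lines of Java. This is a toy in-process proxy, not the API of Hoverfly, Mountebank or WireMock (those run as separate proxies or servers with their own configuration). `Simulator` and the inventory service are hypothetical, and the "real" dependency is modelled as a function so the example runs without any infrastructure.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

class RecordReplaySketch {
    public static void main(String[] args) {
        int[] realCalls = {0};
        // Stand-in for a real downstream service (in practice, an HTTP call to a running instance).
        Function<String, String> realInventory = sku -> {
            realCalls[0]++;
            return "{\"sku\":\"" + sku + "\",\"inStock\":true}";
        };

        Simulator sim = new Simulator(realInventory);
        sim.call("ABC-1");                   // capture mode: hits the real service, records the response
        sim.switchToSimulate();
        String replayed = sim.call("ABC-1"); // simulate mode: served from the recording
        System.out.println(replayed + " (real calls: " + realCalls[0] + ")");
        // {"sku":"ABC-1","inStock":true} (real calls: 1)
    }
}

/** A toy capture/simulate proxy in front of a dependency. */
class Simulator {
    private final Function<String, String> realService;
    private final Map<String, String> recordings = new HashMap<>();
    private boolean simulate = false;

    Simulator(Function<String, String> realService) {
        this.realService = realService;
    }

    void switchToSimulate() {
        simulate = true;
    }

    String call(String request) {
        if (!simulate) {
            // Capture: forward to the real dependency and remember what it said.
            String response = realService.apply(request);
            recordings.put(request, response);
            return response;
        }
        // Simulate: never touch the real dependency; fail loudly on unrecorded requests.
        String recorded = recordings.get(request);
        if (recorded == null) {
            throw new IllegalStateException("No recording for request: " + request);
        }
        return recorded;
    }
}
```

Failing loudly on an unrecorded request, rather than inventing a response, keeps the simulation honest about the limits of what was actually observed from the real service.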

Equally valid tooling in this space includes mocking and stubbing, but watch for blurring or poor development of your mental model of the dependency being doubled: it's all too easy to encode your bias and misunderstanding into a test double, and this will come back to haunt you when the system being tested hits the reality of a production environment.
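One possible guard against a test double drifting from reality (offered here as an illustration, not as a technique from the original talk) is to write the consumer's assumptions about a dependency as a single check and run it against both the stub and the real implementation. In the hypothetical Java sketch below, `PriceLookup` is the dependency's interface and `InMemoryCatalogue` stands in for the real adapter; in practice the same check would run against the real dependency in an integration or contract test.

```java
import java.util.Map;
import java.util.Optional;

class StubContractSketch {
    public static void main(String[] args) {
        PriceLookup real = new InMemoryCatalogue(Map.of("ABC-1", 1299L)); // stand-in for the real adapter
        PriceLookup stub = sku -> sku.equals("ABC-1") ? Optional.of(1299L) : Optional.empty();

        System.out.println(honoursContract(real) + " " + honoursContract(stub)); // true true
    }

    /**
     * The assumptions the consumer makes about ANY PriceLookup. Running the same
     * checks against the stub and the real implementation stops the stub from
     * silently encoding a different mental model of the dependency.
     */
    static boolean honoursContract(PriceLookup lookup) {
        Optional<Long> known = lookup.priceInPence("ABC-1");
        Optional<Long> unknown = lookup.priceInPence("NO-SUCH-SKU");
        return known.isPresent() && known.get() >= 0 && unknown.isEmpty();
    }
}

interface PriceLookup {
    /** Price in pence; empty (rather than an exception) when the SKU is unknown. */
    Optional<Long> priceInPence(String sku);
}

class InMemoryCatalogue implements PriceLookup {
    private final Map<String, Long> prices;

    InMemoryCatalogue(Map<String, Long> prices) {
        this.prices = prices;
    }

    @Override
    public Optional<Long> priceInPence(String sku) {
        return Optional.ofNullable(prices.get(sku));
    }
}
```

A naive stub that returns a price for every SKU would pass a unit test happily, but it fails `honoursContract`, which is exactly the kind of encoded misunderstanding the warning above describes.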

Low Cohesion Microservice Testing

Cohesion is all about things belonging together, and with testing, this starts with the obvious - group unit tests together, group integration tests together etc. - but quickly extends to much more when you think about the "single reason to change" aspects. This may sound obvious, but if you are refactoring the internals of a single microservice, then this probably shouldn't impact the few end-to-end, happy path, business-facing tests that you and your team have created. If you are swapping out data stores within a service then the service's API contract tests probably shouldn't need to be changed. If you are changing scenario data for an integration test then you probably shouldn't need to ensure that the unit tests still work correctly. You get my drift, but I frequently bump into these challenges.
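The data-store example above can be sketched in Java. All names here (`CustomerService`, `CustomerStore`, `HashMapStore`, `AppendLogStore`) are hypothetical. The point is that the API-level test exercises only the service's public behaviour, so replacing the store with one that has a completely different internal layout gives the test no reason to change.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Supplier;

class StoreSwapSketch {
    public static void main(String[] args) {
        // The same API-level test, unchanged, run against two differently built stores.
        System.out.println(apiTest(HashMapStore::new));   // true
        System.out.println(apiTest(AppendLogStore::new)); // true
    }

    /** Exercises only the service's public behaviour; it never inspects the store. */
    static boolean apiTest(Supplier<CustomerStore> storeFactory) {
        CustomerService service = new CustomerService(storeFactory.get());
        String id = service.register("Ada");
        service.rename(id, "Ada Lovelace");
        return service.nameOf(id).equals(Optional.of("Ada Lovelace"))
                && service.nameOf("no-such-id").isEmpty();
    }
}

/** Internal persistence port of a hypothetical customer microservice. */
interface CustomerStore {
    void save(String id, String name);
    Optional<String> load(String id);
}

/** Original store: a simple key/value map. */
class HashMapStore implements CustomerStore {
    private final Map<String, String> rows = new HashMap<>();

    @Override public void save(String id, String name) { rows.put(id, name); }
    @Override public Optional<String> load(String id) { return Optional.ofNullable(rows.get(id)); }
}

/** Replacement store with a different internal layout: an append-only log. */
class AppendLogStore implements CustomerStore {
    private final List<String[]> log = new ArrayList<>();

    @Override public void save(String id, String name) { log.add(new String[] {id, name}); }

    @Override public Optional<String> load(String id) {
        for (int i = log.size() - 1; i >= 0; i--) { // the newest entry for an id wins
            if (log.get(i)[0].equals(id)) {
                return Optional.of(log.get(i)[1]);
            }
        }
        return Optional.empty();
    }
}

/** The service's public API: the only thing the API-level test depends on. */
class CustomerService {
    private final CustomerStore store;
    private int nextId = 1;

    CustomerService(CustomerStore store) { this.store = store; }

    String register(String name) {
        String id = "c" + nextId++;
        store.save(id, name);
        return id;
    }

    void rename(String id, String newName) { store.save(id, newName); }

    Optional<String> nameOf(String id) { return store.load(id); }
}
```

Tests for the store implementations themselves would live in a separate category, so each group of tests has its own single reason to change.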


My current hypothesis in this space is that if engineers spent a little more time upfront specifying clearly what needs to be tested, and what the goals of each testing category are, then we would be in better shape. Lisa Crispin and Janet Gregory have done excellent work in this space with their books "Agile Testing" and "More Agile Testing". Too often, I believe, we start with the best intentions when testing microservices, but quickly migrate to the approach of "all the tests test all the things". This often manifests itself either as the "ice cream cone" testing antipattern, or as a top- and bottom-heavy test "pyramid" with lots of unit tests and lots of end-to-end tests, but not much in the way of integration or component tests. We could get away with this in a monolith, but not so much with the supposedly loosely-coupled architecture that we espouse with microservices.

The full slide deck from my recent SACON talk, "Continuous Delivery Patterns for Contemporary Architecture", goes into these concepts in a bit more detail.

Parting Thoughts

I'll close this article by stating that these observations and thoughts are largely based on my own anecdotal experiences, but I am fortunate, through my consulting, conference attending and teaching activities, to see a wide(ish) cross-section of the industry. Just as the microservice architectural pattern is evolving, so too are the operational and testing patterns, and it's up to all of us to share our learnings and continually chip away at creating "best practice".

Later in the year, I'm planning to write a series of posts on the challenges and good practices of microservice testing, but I had better finish my book, "Continuous Delivery in Java", first!

I hope this article has been helpful, and I would love to hear about your experiences and challenges with testing microservices!

Published at DZone with permission of Daniel Bryant, DZone MVB.

