Monitoring and Profiling Your Spring Boot Application

Learn how about the different tools available to monitor and profile your Spring Boot application. Set up your monitor and more!

Join the DZone community and get the full member experience.

Join For Free

You may also like: Monitoring Using Spring Boot 2.0, Prometheus, and Grafana (Part 1 — REST API)

Monitoring is very essential for modern applications, modern applications are highly distributed in nature and have different dependencies like database, service, caching and many more. It’s more of a like service mesh, tracing and monitoring these services are very essential to adhere to SLA (Service Level Agreement). SLA is an agreement between client and server, It accounts for reliability, responsiveness and other service-level metrics.

We always tend not to violate any SLAs, violating any part of the SLA can have many consequences. If a service fails to meet the terms defined in an SLA, it risks brand reputation damage and revenue losses. Worst of all, a company may lose a customer to a competitor due to its inability to meet a customer’s service-level requirements.

What kind of metrics should be monitored?

- Service availability: the amount of time the service is available for use. This can be measured in terms of response time, for example, X percentile abbreviated as pX e.g p95, p99, p99.999. Not all services would require p99.999, systems that are guaranteed to be highly available like E-Commerce, Search, Payment, etc, should have higher SLA.

- Defect rates: Despite good efforts in system development, no system is 100% perfect. We should counts or percentages of errors in major flows. Production failures such as server error, database query error, connection error, network errors and missed deadlines can be included in this category.

- Security: In these hyper-regulated times, application and network security breaches can be costly. Measuring controllable security measures like system access, unauthorized access to a database, bulk downloading of database records or large data movement can be included in this category.

- Business results: Increasingly, IT customers would like to incorporate business process metrics into their SLAs so that a better business decision can be made. In this category, different data can be collected like the number of users visited the page, login activity, coupon effectiveness, etc.

Choosing a set of metrics could be non-trivial in the beginning, also we can be in the dilemma of how much we should monitor. We can start with a bare minimum and add as many as we need later.

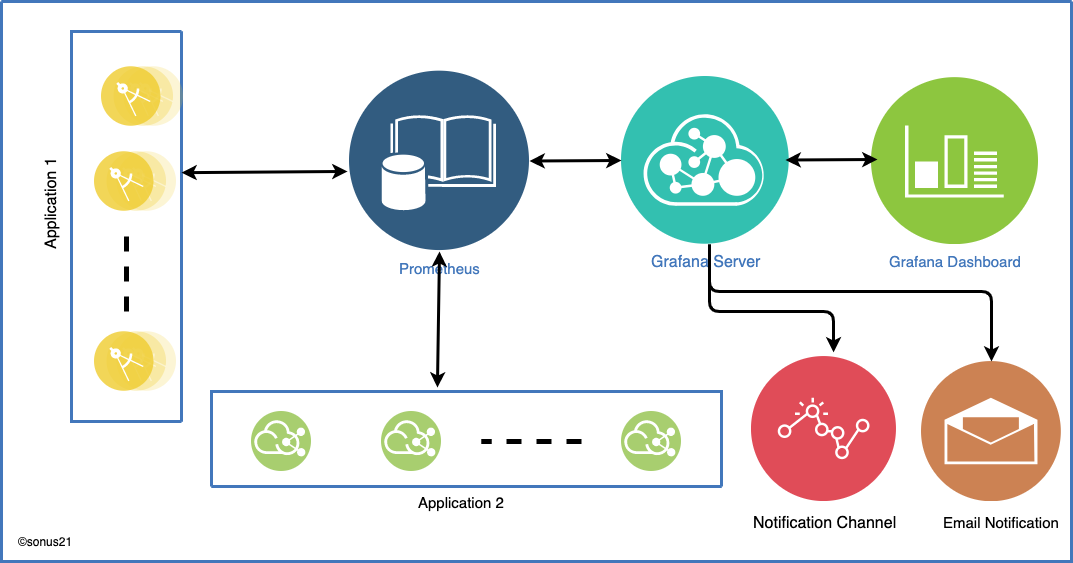

Monitoring Set-Up

A typical monitoring setup system would have three components

- Metrics store (generally a time series database) like InfluxDB, TimescaleDB, Prometheus, etc.

- Dashboard (dashboard would be used to visualize the data stored in the metrics store).

- Applications that would keep pushing metrics to the metrics store or metrics store periodically pull data from the application’s local state.

We can have other components as well for example alerting, where the alert channels could be Email, Slack or any others. Alerting component would be sending alerts to the application owners or subscribers of events. We’re going to use Grafana as a dashboard and alerting system, Prometheus as a metrics store system.

Things we need

1. Any IDE.

2. Java platform.

3. Gradle.

Create a project from Spring Boot initializer, add dependencies as many as we need. We’re going to use the Micrometer library, it is an instrumentation facade that provides bindings for many metric stores like Prometheus, Datadog, and New Relic just name a few.

Out of the box, Micrometer provides

1. HTTP request.

2. JVM.

3. Database.

3. Cache system etc related metrics.

Some of the metrics are enabled by default, whereas others can be enabled, disabled or customized. We’ll use the application.properties file to handle enabling, disabling and customization. We need to also use Spring boot actuator as this is going to expose the Prometheus endpoint.

Add these dependencies in build.gradle file:

- io.micrometer:micrometer-registry-prometheus.

- org.springframework.boot:spring-boot-starter-actuator.

dependencies {

implementation 'org.springframework.boot:spring-boot-starter-data-jpa'

implementation 'org.springframework.boot:spring-boot-starter-web'

compileOnly 'org.projectlombok:lombok'

annotationProcessor 'org.projectlombok:lombok'

providedRuntime 'org.springframework.boot:spring-boot-starter-tomcat'

implementation 'io.micrometer:micrometer-registry-prometheus'

implementation 'org.springframework.boot:spring-boot-starter-actuator'

// https://mvnrepository.com/artifact/com.h2database/h2

compile group: 'com.h2database', name: 'h2', version: '1.4.200'

testImplementation('org.springframework.boot:spring-boot-starter-test') {

exclude group: 'org.junit.vintage', module: 'junit-vintage-engine'

}

}We can enable Prometheus export by adding the following line to the properties file.

management.metrics.export.prometheus.enabled=trueOnce this line is added Micrometer will start accumulating data about the application, and this data can be viewed by visiting actuator/Prometheus endpoint, this endpoint would be used in the Prometheus script to fetch the data from our application servers.

Even though we have added this line in properties, we can’t browse Prometheus endpoint, since this is disabled by default, we can expose that using management endpoint, include Prometheus in the list.

management.endpoints.web.exposure.include=prometheusNOTE: Do not enable all endpoints from actuator as it can lead to a security loophole. We should choose them selectively, especially in the production system, even if we want then do not expose the endpoint to the whole world as it can expose a whole lot of data about the application, use some proxy or some rule to hide from the outside world.

The different parts of HTTP requests are customizable like SLA, percentile histogram should be computed or not, this is done using metrics.distribution properties.

A sample application.properties can have these lines

# Enable prometheus exporter

management.metrics.export.prometheus.enabled=true

# Enable prometheus end point

management.endpoints.web.exposure.include=prometheus

# enable percentile-based histogram for http requests

management.metrics.distribution.percentiles-histogram.http.server.requests=true

# http SLA histogram buckets

management.metrics.distribution.sla.http.server.requests=100ms,150ms,250ms,500ms,1s

# enable JVM metrics

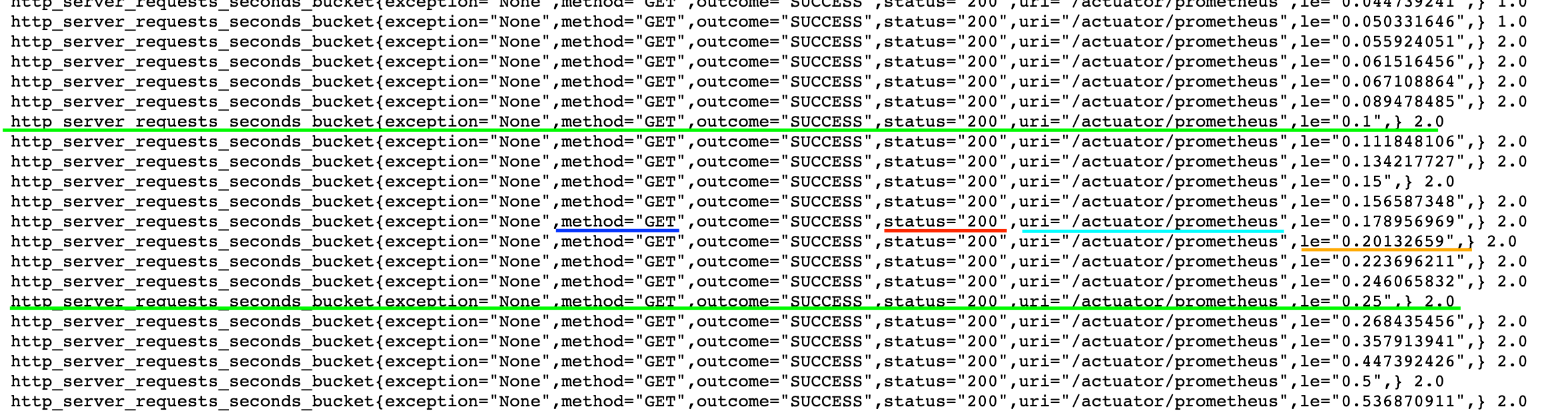

management.metrics.enable.jvm=trueNow, if we run the application and browse to the page http://locahost:8080/actuator/prometheus, this will display hell lot of data.

The above data displays HTTP request detail, exception=None means no exception occurred if any then we can use that to filter how many requests have failed due to that exception, method=GET HTTP method name. status=200 HTTP status code is 200, uri=/actuator/prometheus displays the URL path, le=xyz displays the processing time, N.0 displays the number of times that end-point was called.

This data is a histogram that can be plotted in Grafana, for example to plot p95 over 5 minutes we can use the following query.

histogram_quantile(0.95,sum(rate(http_server_requests_seconds_bucke[5m])) by (le))We can plot other metrics as well in Grafana like Pie-Chart etc.

Custom Metrics

Many times we need custom metrics, some of the use cases are the number of logged-in users, currently available stock details, number of orders in the order queue, etc. Some of the business use cases can be solved using custom metrics, micrometer supports different types of metrics like Timer, Gauge, Counter, Distribution summaries, and Long task timers, etc. We’ll mainly focus on Gauge and Counter. Gauge gives us instantaneous data like the length of a queue, whereas the counter is like a monotonically increasing number starts from one.

For this, we’re going to create a demo stock manager that will store details in memory and would provide two functionalities:

1. Add items.

2. Get items.

In this, we’ve created one counter and one gauge in init method, whenever getItems is called then we increase the counter as well as we measure the stock size whereas when addItems is called then we only update the gauge.

@Component

public class StockManager {

@Autowired private MeterRegistry meterRegistry;

private List<String> orders = new Vector<>();

private Counter counter;

private Gauge gauge;

@PostConstruct

public void init() {

counter =

Counter.builder("order_created")

.description("number of orders created")

.register(meterRegistry);

gauge =

Gauge.builder("stock.size", this, StockManager::getNumberOfItems)

.description("Number of items in stocks")

.register(meterRegistry);

}

public int getNumberOfItems() {

return orders.size();

}

public void addItems(List<String> items) {

orders.addAll(items);

// measure gauge

gauge.measure();

}

public List<String> getItem(int count) {

List<String> items = new ArrayList<>(count);

while (count > 0) {

try {

items.add(orders.remove(0));

} catch (ArrayIndexOutOfBoundsException e) {

break;

}

count -= 1;

}

// increase counter

counter.increment();

// measure gauge

gauge.measure();

return items;

}

}For demonstration purposes, we'll add two endpoints to add items and get items.

@RestController

@RequestMapping(path = "stocks")

@RequiredArgsConstructor(onConstructor = @__(@Autowired))

public class StockController {

@NonNull private StockManager stockManager;

@GetMapping

@ResponseBody

public List<String> getItems(@RequestParam int size) {

return stockManager.getItem(size);

}

@PostMapping

@ResponseBody

public int addItems(@RequestParam List<String> items) {

stockManager.addItems(items);

return stockManager.getNumberOfItems();

}

}Let’s fist add ten items using two API calls.

Curl -X POST http://localhost:8080/stocks?items=1,2,3,4

Curl -X POST http://localhost:8080/stocks?items=5,6,7,8,9,10

Now if we browse to Prometheus endpoints then we can see the following data, which indicates currently we have 10 items in the stock.

# HELP stock_size Number of items in stocks

# TYPE stock_size gauge

stock_size 10.0Now, we’re going to place an order of size three.

http://localhost:8080/stocks?size=3

Again, if we browse Prometheus endpoint then we get the following data that indicates stock size has been changed to seven.

# HELP stock_size Number of items in stocks

# TYPE stock_size gauge

stock_size 7.0Also, we can see the counter has been added with value 1, this indicates one order has been placed.

# HELP order_created_total number of orders created

# TYPE order_created_total counter

order_created_total 1.0Profiling

In software engineering, profiling (“program profiling”, “software profiling”) is a form of dynamic program analysis that measures, for example, the space (memory) or time complexity of a program, the usage of particular instructions, or the frequency and duration of function calls.

Most commonly, profiling information serves to aid program optimization. Profiling is achieved by instrumenting either the program source code or its binary executable form using a tool called a profiler (or code profiler). Profilers may use a number of different techniques, such as event-based, statistical, instrumented, and simulation methods.

— Wikipedia

Profiling is very helpfull in diagnostic system problems like how much time an HTTP call takes, even if it takes N seconds, then where all this time has been spent, what’s the distribution of N seconds among different database queries, downstream service calls, etc. We can use the histogram to plot the distribution in the dashboard, also we can use a counter to measure the DB queries count, etc. For profiling, we need to inject some code in many functions that would be executed as part of the method execution.

The interesting part about the profiling code is that they are the same for similar types of profiler which means we need to copy-paste similar code at thousands of places, if we have to change anything then we need to update the same. Profiler code in every file and likely in every function that requires profiling would increase the complexity and can become a complete mess; though we can avoid this mess using Aspect-Oriented Programming (AOP).

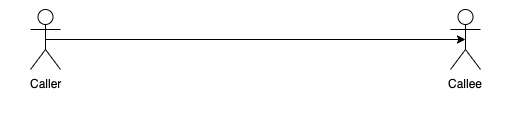

In short, AOP works on proxy design pattern, though it can be implemented using byte code modification as well.

Whenever a method is called then our expectation is that the callee method would be directly called without any intermediate steps, but when AOP is put in place then method call is intercepted by a proxy method and proxy method calls the target method, the proxy method returns the result to the caller, as depicted in below figure.

The system depends on different other systems, so we might be interested in profiling different components differently, for example, database calls, HTTP requests, downstream service calls or some specific methods that are critical or would like to see what’s going in some specific methods. We can use the same Micrometer library for profiling as well but that may not be exactly what we want so we’ll change code.

Micrometer comes with a Timed annotation, this annotation can be placed on any public method, as the name suggests this can measure execution time, this is going to measure the execution time of the corresponding method. Instead of directly using this annotation, we’ll extend this annotation to support other features like logging, retry, etc.

Timed annotation is useless without TimedAspect bean, as we’re redefining the Timed annotation so we’ll also define TimedAspect class as per need like logging, mass profiling (profile all methods in a package without adding any annotation on any method or class), retry, etc. In this story, we’ll see three of the use cases:

Mass profiling.

Logging.

Profile Specific method.

Create a java file MonitoringTimed.java, in this we’ve added a new field called loggingEnabled, this field would be used to check whether logging is enabled or not if it’s enabled then log the method arguments and return values.

@Target({ElementType.ANNOTATION_TYPE, ElementType.TYPE, ElementType.METHOD})

@Retention(RetentionPolicy.RUNTIME)

public @interface MonitoringTimed {

/** All fields are same as in {@link io.micrometer.core.annotation.Timed} */

String value() default "";

String[] extraTags() default {};

boolean longTask() default false;

double[] percentiles() default {};

boolean histogram() default false;

String description() default "";

// NEW fields starts here

boolean loggingEnabled() default false;

}This annotation is not useful without the timed aspect class, so a new class MonitoringTimedAspect will be defined with all required details, this class would have a method to profile any method based on the processing joint object and MonitoringTimed object and another one to profile method based on the MonitoringTimed annotation.

@Around("execution (@com.gitbub.sonus21.monitoring.aop.MonitoringTimed * *.*(..))")

public Object timedMethod(ProceedingJoinPoint pjp) throws Throwable {

Method method = ((MethodSignature) pjp.getSignature()).getMethod();

MonitoringTimed timed = method.getAnnotation(MonitoringTimed.class);

if (timed == null) {

method = pjp.getTarget().getClass().getMethod(method.getName(), method.getParameterTypes());

timed = method.getAnnotation(MonitoringTimed.class);

}

final String metricName = generateMetricName(pjp, timed);

return timeThisMethod(pjp, timed, metricName);

}

public Object timeThisMethod(ProceedingJoinPoint pjp, MonitoringTimed timed) throws Throwable {

final String metricName = generateMetricName(pjp, timed);

return timeThisMethod(pjp, timed, metricName);

}The method TimedMethod having Around annotation is used to filter all the method calls having annotated with MonitoringTimed.

For mass profiling, we’ll define a profiler class that will work on the package level filtering, For example, for HTTP request profiling we can add ControllerProfiler, which would handle the profiling of all public methods available in the controller package.

@Aspect

@Component

public class ControllerProfiler {

private static Map<String, Object> timedAnnotationData = new HashMap<>();

static {

// use percentile data of p90, p95, p99.99

double[] percentiles = {0.90, 0.95, 0.9999};

// set histogram to true

timedAnnotationData.put("histogram", true);

// set percentile

timedAnnotationData.put("percentiles", percentiles);

}

@Autowired private MonitoringTimedAspect timedAspect;

private static final MonitoringTimed timed = Javanna.createAnnotation(MonitoringTimed.class, timedAnnotationData);

private static final Logger logger = LoggerFactory.getLogger(ControllerProfiler.class);

@Pointcut("execution(* com.gitbub.sonus21.monitoring.controller..*.*(..))")

public void controller() {}

@Around("controller()")

public Object profile(ProceedingJoinPoint pjp) throws Throwable {

// here add other logic like error happen then log parameters etc

return timedAspect.timeThisMethod(pjp, timed);

}

}Interesting line in the above code is @Pointcut(“execution(*com.gitbub.sonus21.monitoring.controller..*.*(..))”), this defines a pointcut, a pointcut expression can be defined using boolean operators like not(!), or (||),and(&&). Once a method is qualified as per the pointcut expression then it can call the corresponding method defined using [at]Around annotation. As we have defined a profile method that would get called, we can also define other methods using [at]After, [at]Before, etc annotations.

After adding a few items using the POST method, we can see the following data at Prometheus endpoint.

method_timed_seconds{class="com.gitbub.sonus21.monitoring.controller.StockController",exception="none",method="addItems",quantile="0.9",} 0.0

method_timed_seconds_bucket{class="com.gitbub.sonus21.monitoring.controller.StockController",exception="none",method="addItems",le="0.001",} 3.0

method_timed_seconds_bucket{class="com.gitbub.sonus21.monitoring.controller.StockController",exception="none",method="addItems",le="0.002446676",} 3.0We can directly use MonitoringTimed annotation as well on any method to measure execution time, for example, let’s measure how much time StockManager’smethod addItems takes in adding items.

@MonitoringTimed

public void addItems(List<String> items) {

orders.addAll(items);

// measure gauge

gauge.measure();

}Once we start the application and add a few items then we can see following at the Prometheus endpoint.

method_timed_seconds_count{class="com.gitbub.sonus21.monitoring.service.StockManager",exception="none",method="addItems",} 4.0

method_timed_seconds_sum{class="com.gitbub.sonus21.monitoring.service.StockManager",exception="none",method="addItems",} 0.005457965

method_timed_seconds_max{class="com.gitbub.sonus21.monitoring.service.StockManager",exception="none",method="addItems",} 0.00615316MonitoringTimed can be further customized, like the number of retries can be added to support retry in the case of failure, log function arguments in case of failure so that it can be analyzed later why it’s failed.

Complete code is available at GitHub.

If you found this post helpful please share across and give a thumbs up.

Further Reading

Magic With the Spring Boot Actuator

Spring Boot Actuator in Spring Boot 2.0

Spring Boot Admin Client Configuration Using Basic HTTP Authentication

Opinions expressed by DZone contributors are their own.

Comments