OpenShift vs. Kubernetes: The Unfair Battle

In this article, we are going to be comparing OpenShift and Kubernetes, and let me tell you, the comparison is far from fair.


The most popular container orchestration software alternatives available today are OpenShift and Kubernetes.

Comparing OpenShift and Kubernetes is difficult, as they are two very different solutions altogether. It is a little like comparing a personal computer (OpenShift) with a CPU (Kubernetes).

As Kubernetes is a crucial component of OpenShift, comparing the two platforms can be confusing. Hence, to help you determine which is a better option for you, we will cover the most significant differences between the two, including installation, command-line options, user interface, security, support, and other topics.

Before we look at the differences between OpenShift and Kubernetes, let’s briefly review what each one is.

What Is Kubernetes?

Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications. It’s also commonly referred to as K8s. Kubernetes was the third container-management system developed by Google. The first and second were Borg and Omega, respectively.

What Is OpenShift?

Red Hat OpenShift is an enterprise-ready Kubernetes container platform that enables automation inside and outside your Kubernetes clusters and contains a private container registry installed as part of the Kubernetes cluster.

What Is a Container Orchestration System?

Container orchestration is the automation of the operational tasks needed to run containerized workloads and services, such as container provisioning, deployment, scaling, networking, and load balancing. A system that provides this automation is a container orchestration system. As mentioned earlier, there are various alternatives available on the market. These include Kubernetes, OpenShift, Amazon ECS, Docker Swarm, and Nomad, to name a few. In this blog, we will address K8s vs. OpenShift, where Kubernetes is purely a container orchestration engine, and OpenShift is a platform-as-a-service (PaaS) solution used to make container orchestration easier. Before we go ahead with the comparison, let’s quickly review the architecture of OpenShift and Kubernetes.

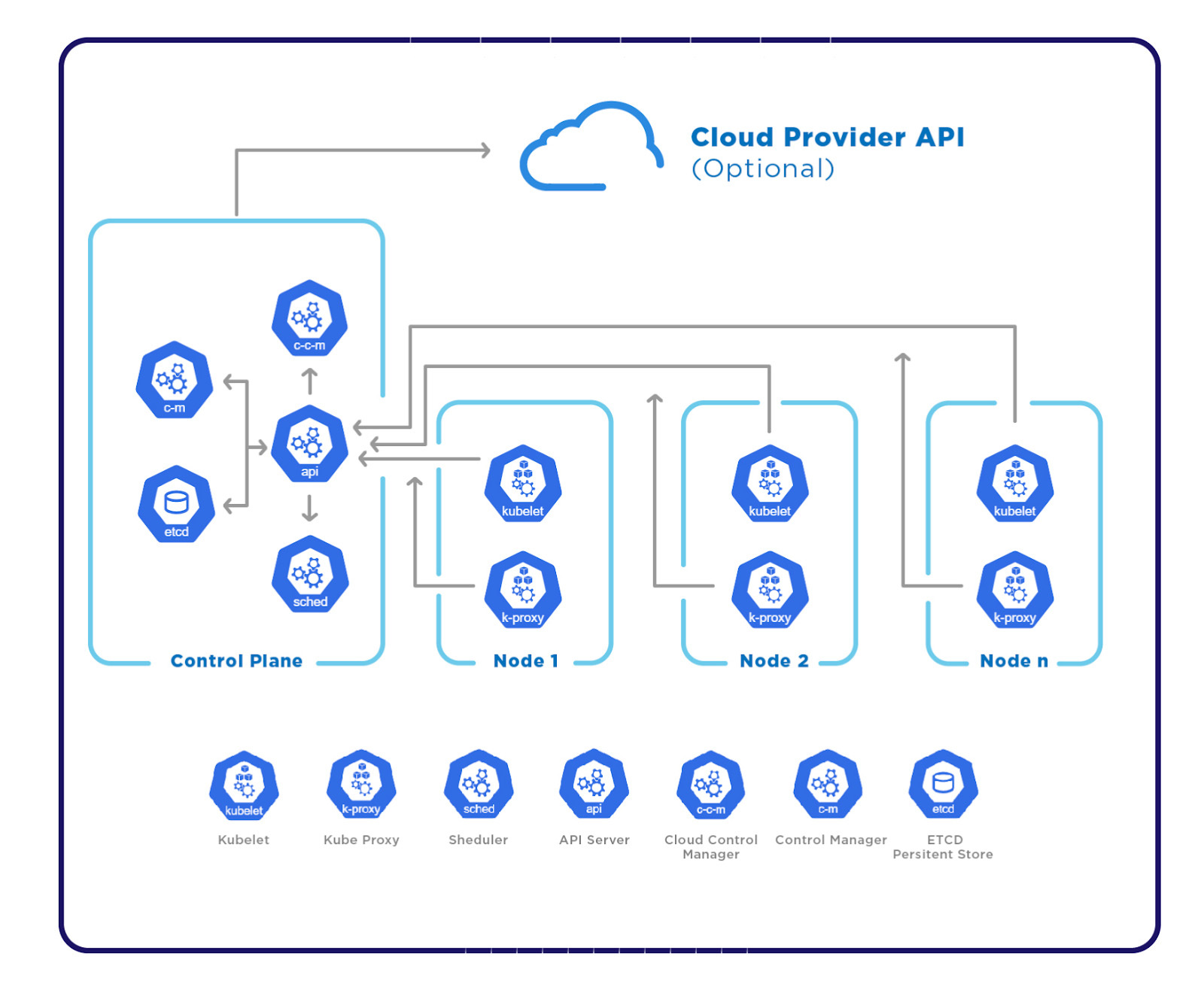

The Quick Architecture of Kubernetes

A Kubernetes cluster is made up of one or more master machines, which form the control plane, and one or more worker machines, called nodes. The components of the application workload, known as Pods, are hosted by the worker nodes, while the control plane, or master nodes, oversees the cluster’s worker nodes and Pods.

For a Kubernetes cluster to be complete and functional, you must have a number of different components. A Kubernetes cluster is made up of the following parts:

Control Plane or Master Node Components

- kube-apiserver: The Kubernetes API is made available through the Kubernetes control plane component known as kube-apiserver. The Kubernetes control plane’s front end is the API server.

- etcd: Kubernetes uses etcd as the backing store for all cluster data seeing as it’s a reliable and highly available key-value store.

- kube-scheduler: The kube-scheduler component of the Control plane is responsible for choosing a node for newly formed Pods with no associated node.

- kube-controller-manager: Running controller processes is the responsibility of the Control plane component kube-controller-manager.

- cloud-controller-manager: The cloud controller manager separates the components that communicate with the cloud platform from those that only interface with your cluster and enables you to connect your cluster to the API of your cloud provider.
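To make the kube-scheduler's role more concrete, here is a minimal toy sketch in Python of "choose a node for a Pod that has none". It is not the real kube-scheduler algorithm: the `Node` and `Pod` classes, the CPU-only fit check, and the "most free CPU wins" rule are all simplifying assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int


@dataclass
class Pod:
    name: str
    cpu_request_millicores: int
    node_name: Optional[str] = None  # None means "not yet scheduled"


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Toy scheduling pass: keep nodes that fit, then pick the one with the most free CPU."""
    feasible = [n for n in nodes if n.free_cpu_millicores >= pod.cpu_request_millicores]
    if not feasible:
        return None  # no node fits, so the Pod stays unscheduled
    best = max(feasible, key=lambda n: n.free_cpu_millicores)
    best.free_cpu_millicores -= pod.cpu_request_millicores
    pod.node_name = best.name
    return best.name


nodes = [Node("worker-1", 500), Node("worker-2", 2000)]
pod = Pod("web", 750)
print(schedule(pod, nodes))  # worker-2 is the only node with enough free CPU
```

The real scheduler weighs many more factors, but the two-phase shape (filter out nodes that cannot host the Pod, then rank the rest) is the idea this sketch tries to convey.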

Worker Machine or Node Components

- kubelet: The responsibility of kubelet is to make sure that containers are running in a Pod.

- kube-proxy: Every node in your cluster runs kube-proxy, a network proxy that implements part of the Kubernetes Service concept. On nodes, kube-proxy maintains network rules. These network rules allow network communication to your Pods from sessions both inside and outside of your cluster.

- Container runtime: The container runtime is the software responsible for running containers. containerd and CRI-O are two of the container runtimes that Kubernetes supports, along with any other implementation of the Kubernetes CRI (Container Runtime Interface).

The Quick Architecture of OpenShift

It’s possible to create and run containerized apps on the OpenShift Container Platform. The technology that powers containerized apps is incorporated into the OpenShift Container Platform, which has its roots in Kubernetes.

Control Plane or Master Node Components

1. OpenShift Service:

- OpenShift API server: For OpenShift resources such as projects, routes, and templates, the OpenShift API server validates and configures the data.

- OpenShift controller manager: The project, route, and template controller objects are examples of OpenShift objects that the OpenShift controller manager monitors for changes in etcd before using the API to enforce the desired state.

- OpenShift OAuth API server: The OpenShift OAuth API server validates and configures the data used to authenticate to the OpenShift Container Platform, such as users, groups, and OAuth tokens.

- OpenShift OAuth server: In order to authenticate themselves to the API, users must ask the OpenShift OAuth server for tokens.

2. Networking Components: A unified cluster network is provided by the OpenShift Container Platform using a software-defined networking (SDN) strategy, allowing for communication across pods throughout the cluster.

3. Kubernetes Service:

- Kubernetes API server: The data for pods, services, and replication controllers is verified and set up by the Kubernetes API server. Additionally, it serves as a focal point for the cluster’s shared state.

- Kubernetes controller manager: When items like replication, namespace, and service account controller objects change, the Kubernetes controller manager monitors etcd for those changes and then utilizes the API to enforce the desired state.

- Kubernetes scheduler: The ideal node for hosting the pod is chosen by the Kubernetes scheduler when it notices newly produced pods without a designated node.

4. Cluster Version Operator: Many cluster operators are deployed by default in the OpenShift Container Platform, and the Cluster Version Operator (CVO) handles their lifecycle. The CVO also consults the OpenShift Update Service to determine which updates and update paths are valid, based on the current component versions.

5. etcd: etcd stores the persistent master state, while other components watch etcd for changes so they can bring themselves into the desired state.

Control Plane also includes CRI-O and Kubelet, where CRI-O provides facilities for running, stopping, and restarting containers. Kubelet acts as a primary node agent for Kubernetes responsible for launching and monitoring containers.
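The controller managers described above all follow the same pattern: compare the desired state with what is actually running, then act to close the gap. The following Python sketch illustrates that reconcile idea only. The `reconcile` function, the replica-count dictionaries, and the action strings are hypothetical and are not part of any Kubernetes or OpenShift API.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], observed: Dict[str, int]) -> List[str]:
    """Return the actions needed to move observed replica counts to the desired ones."""
    actions = []
    for name, want in sorted(desired.items()):
        have = observed.get(name, 0)
        if have < want:
            actions.append(f"scale up {name}: {have} -> {want}")
        elif have > want:
            actions.append(f"scale down {name}: {have} -> {want}")
    # Anything running that is no longer declared should be removed.
    for name in sorted(set(observed) - set(desired)):
        actions.append(f"delete {name}")
    return actions


desired_state = {"web": 3, "api": 2}
observed_state = {"web": 1, "api": 2, "old-job": 1}
for action in reconcile(desired_state, observed_state):
    print(action)
```

A real controller runs this comparison continuously against the state stored in etcd (through the API server) rather than once against in-memory dictionaries.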

Worker Machine or Node Components

- Observability: The key platform components of the OpenShift Container Platform are monitored via a pre-set, preinstalled, and self-updating monitoring stack. Cluster administrators have the option to enable monitoring for user-defined projects following the installation of the OpenShift Container Platform.

- Networking: There are various options available to cluster administrators for opening up cluster applications to outside traffic and securing network connections.

- Operator Lifecycle Manager: Operator Lifecycle Manager (OLM) gives those creating and deploying apps the facilities to distribute and store Operators.

- Integrated Image Repository: From your source code, the OpenShift Container Platform can create images, deploy them, and manage their lifespan. In order to manage images locally, it offers an internal, integrated container image registry that can be installed in your OpenShift Container Platform environment.

- Machine Management: To administer the OpenShift Container Platform cluster, you can leverage machine management to flexibly interact with underlying infrastructures such as AWS, Azure, GCP, OpenStack, Red Hat Virtualization, and vSphere.

Like the control plane, the worker node also contains CRI-O and Kubelet.

Advantages of Using Kubernetes

- Kubernetes can be used for free on any platform, as it’s open-source.

- It has a sizable, active developer and engineering community which helps with the regular release of new features.

- Several installation tools, such as kubeadm, kops, and Kubespray, can be used to install Kubernetes on the majority of systems.

Advantages of Using OpenShift

- Almost every Kubernetes task can be built, deployed, scaled, monitored, and implemented using OpenShift’s default feature-rich graphical interface, which is available to administrators and developers.

- Different cloud service providers offer various Kubernetes-managed services, each with its own set of add-ons, plugins, and usage guidelines. Hence, you need to relearn how things work when you move from one cloud provider to another using Kubernetes. With OpenShift, by contrast, the same web interface can be used to build, deploy, and manage your application on all cloud service platforms.

- In order to prevent the problem of account compromise, OpenShift provides role-based access control (RBAC) by default, which helps to make sure that each developer only receives permission to access the features they require.

- Red Hat OpenShift offers commercial support, updates, patches, and better security for Kubernetes and Kubernetes-native apps.

- Red Hat OpenShift provides control, visibility, and management to deploy, maintain, and create code pipelines with ease by integrating platform monitoring and including automated maintenance operations and upgrades.

1. OpenShift vs. Kubernetes: Product vs. Project

The first and most important distinction between OpenShift and Kubernetes is that OpenShift is a commercial product that requires a subscription. In contrast, Kubernetes is an open-source project available for free. Therefore, if you run into issues or bugs, OpenShift offers paid support for troubleshooting them. With Kubernetes, on the other hand, you need to turn to the Kubernetes community, which is made up of many professionals, including developers, administrators, and architects, to troubleshoot issues or bugs found in the tool.

Question for you: Are you ready to pay for the subscription for OpenShift, or are you good with Kubernetes, which is free of cost?

2. OpenShift vs. Kubernetes: Installation

Installation is the first thing that you really need to do to get your Cluster up and running, and one of the most important points to consider when discussing the OpenShift vs. Kubernetes topic.

In the case of OpenShift, you must use one of the platforms listed below to install it. It cannot be installed on any other Linux distribution.

- Red Hat Enterprise Linux CoreOS (RHCOS) (for master nodes)

- Red Hat Enterprise Linux (RHEL) (for worker nodes)

In contrast, Kubernetes can be set up on the majority of systems and is installable via a variety of tools, including kubeadm, Kubespray, kops, and Bootkube.

Question for you: Do you want a restriction on the operating system, or are you comfortable using any of the available and supported systems?

3. OpenShift vs. Kubernetes: Command line

Once you’ve set up your cluster, you need a way to interact with it. Hence, “Command line” is our next point of discussion in this OpenShift vs. Kubernetes article.

Kubernetes offers a command-line tool, kubectl, for interacting with the control plane of a Kubernetes cluster. With kubectl, you can issue commands to Kubernetes clusters: deploy applications, inspect and manage cluster resources, and view logs.

With OpenShift, the oc command provides similar functionality, as it is built on top of kubectl. It also extends kubectl to natively support more OpenShift Container Platform features, such as:

- Full support for OpenShift Container Platform resources: DeploymentConfig, BuildConfig, Route, ImageStream, and ImageStreamTag objects are examples of resources that are exclusive to the OpenShift Container Platform and can be managed using the oc command.

- Authentication: The built-in login command provided by the oc binary provides authentication and allows you to interact with the OpenShift Container Platform.

- Additional commands like oc new-app and oc new-project: The oc new-app command makes it simpler to launch new apps using pre-built images or existing source code. Similarly, the oc new-project command makes it simpler to start a project that you can use as your default.
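To illustrate how the two clients overlap, the Python sketch below builds (but does not run) a few command lines. The `build_command` helper, the `demo` namespace and project name, and the `nginx` image name are hypothetical placeholders. The subcommands themselves (`get pods`, `new-project`, `new-app`) are the ones discussed above.

```python
import shlex
from typing import List

SUPPORTED_TOOLS = ("kubectl", "oc")


def build_command(tool: str, *args: str) -> List[str]:
    """Build an argument list for kubectl or oc without executing it."""
    if tool not in SUPPORTED_TOOLS:
        raise ValueError(f"unsupported tool: {tool}")
    return [tool, *args]


# The same verb works with both clients.
k8s_pods = build_command("kubectl", "get", "pods", "--namespace", "demo")
ocp_pods = build_command("oc", "get", "pods", "--namespace", "demo")

# These subcommands exist only in oc.
new_project = build_command("oc", "new-project", "demo")
new_app = build_command("oc", "new-app", "nginx")

for command in (k8s_pods, ocp_pods, new_project, new_app):
    print(shlex.join(command))
```

Passing such a list to `subprocess.run` would execute it against whichever cluster your local configuration points to, so the sketch deliberately stops at printing.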

Question for you: Is kubectl enough for issuing commands to your Kubernetes cluster, or do you have resources that require the oc command?

4. OpenShift vs. Kubernetes: User Interface

The command line is not the only way to interact with your cluster; a user interface is another. An efficient web-based user interface (UI) is important for cluster administration and therefore cannot be skipped when talking about Kubernetes vs. OpenShift.

The Kubernetes dashboard must be installed separately, and you must use kubectl proxy to forward a port on your local machine to the cluster’s API server. Additionally, seeing as the dashboard has no username-and-password login, you must manually create a bearer token to serve as authorization and authentication.

The web console for OpenShift contains a login page. The console is easily accessible, and most resources can be created or modified via a form. Servers, projects, and cluster roles can all be seen.

Question for you: Can you afford to invest efforts to install a Dashboard on your own, or do you want a fancy User Interface to access your cluster?

5. OpenShift vs. Kubernetes: Project vs. Namespace

A way to separate Kubernetes cluster resources within a single cluster is through the use of namespaces in Kubernetes. Namespaces are designed for environments with a large user base spread across numerous teams or projects. Namespaces are a technique used to allocate cluster resources to different users.

OpenShift has projects, which are essentially enhanced Kubernetes namespaces. A project is used in the same way as a Kubernetes namespace when deploying software on OpenShift, with the exception that administrators control which users are allowed to create projects and access them.
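As a rough illustration of using a namespace to carve out resources for a team, the Python sketch below builds a Namespace manifest and a ResourceQuota manifest and prints them as JSON. The names `team-a` and `team-quota` and the limit values are made up for the example. The `apiVersion`, `kind`, and `spec.hard` fields follow the standard Kubernetes object layout.

```python
import json
from typing import Any, Dict


def namespace_manifest(name: str) -> Dict[str, Any]:
    """Manifest for a plain Kubernetes namespace."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


def quota_manifest(namespace: str, cpu: str, memory: str, pods: int) -> Dict[str, Any]:
    """Manifest that caps the total resources requested inside one namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "team-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "pods": str(pods),
            }
        },
    }


namespace = namespace_manifest("team-a")
quota = quota_manifest("team-a", cpu="4", memory="8Gi", pods=20)
print(json.dumps(namespace, indent=2))
print(json.dumps(quota, indent=2))
```

Each printed document could be saved to a file and applied with kubectl or oc, since both accept JSON as well as YAML manifests.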

Question for you: Does Namespace in Kubernetes meet your requirement to isolate resources in your Cluster, or do you explicitly need Projects in OpenShift?

6. OpenShift vs. Kubernetes: Templates vs. Helm

Helm charts are widely used with Kubernetes and are flexible and simple to use. Helm is the package management tool, and charts are its packages. When talking about Kubernetes vs. OpenShift, this point should definitely be considered.

In the context of OpenShift, a template defines a collection of objects that can be processed and parameterized to generate a list of objects for generation by the OpenShift Container Platform. Anything you are authorized to produce within a project can be created using a template.

OpenShift templates lack the advanced templating and package versioning found in Helm charts. As a result, deployment with OpenShift templates becomes more difficult, and, in most cases, external wrappers are required.
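The idea of a parameterized template can be sketched in a few lines of Python. This is only a toy imitation of what template processing does, not OpenShift's implementation: the `process` function and the `APP_NAME` parameter are hypothetical, and the sketch handles only simple `${NAME}` string substitution.

```python
from string import Template
from typing import Any, Dict, List

template = {
    "apiVersion": "template.openshift.io/v1",
    "kind": "Template",
    "metadata": {"name": "demo-template"},
    "parameters": [{"name": "APP_NAME", "value": "demo"}],
    "objects": [
        {
            "apiVersion": "v1",
            "kind": "Service",
            "metadata": {"name": "${APP_NAME}"},
            "spec": {"selector": {"app": "${APP_NAME}"}, "ports": [{"port": 8080}]},
        }
    ],
}


def process(tmpl: Dict[str, Any], overrides: Dict[str, str]) -> List[Any]:
    """Substitute parameter values into every string of the template's objects."""
    params = {p["name"]: p.get("value", "") for p in tmpl["parameters"]}
    params.update(overrides)

    def render(obj: Any) -> Any:
        if isinstance(obj, str):
            return Template(obj).substitute(params)
        if isinstance(obj, list):
            return [render(item) for item in obj]
        if isinstance(obj, dict):
            return {key: render(value) for key, value in obj.items()}
        return obj  # numbers, booleans, and None pass through unchanged

    return [render(obj) for obj in tmpl["objects"]]


objects = process(template, {"APP_NAME": "shop"})
print(objects[0]["metadata"]["name"])  # shop
```

Helm charts go further than this kind of substitution, adding conditionals, loops, and versioned packaging, which is the gap the paragraph above describes.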

Question for you: If you are already familiar with Helm, do you still want to learn OpenShift Templates?

7. OpenShift vs. Kubernetes: Image Registry

You can use your own Docker registry with Kubernetes. However, Kubernetes doesn’t have an integrated image registry. In contrast, the built-in container image registry offered by the OpenShift Container Platform runs as a regular workload on the cluster. It works on top of the existing cluster infrastructure while offering users an out-of-the-box solution for managing the images that run their workloads. This registry doesn’t need special infrastructure configuration, and it can be scaled up or down like any other cluster workload. Because the registry is linked to the cluster’s user authentication and authorization system, the ability to push and pull images is controlled by setting user permissions on the image resources. This is one of the OpenShift features that differentiate it from Kubernetes. Note that you can also integrate your OpenShift cluster with several major image registries such as, but not limited to, Docker Hub, Amazon Elastic Container Registry (ECR), Google Container Registry (GCR), and Microsoft Azure Container Registry (ACR).

Question for you: Do you require an integrated image registry within your cluster, or do you have no issues using your own image registry?

8. OpenShift vs. Kubernetes: Security

OpenShift has stricter security guidelines than Kubernetes. Indeed, in OpenShift, you aren’t allowed to run basic container images or many official images due to security requirements.

For instance, seeing as OpenShift restricts running a container as root and many official images don’t comply, the majority of container images available on Docker Hub don’t work on the platform.
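The root restriction can be pictured as a check on a Pod's security settings. The Python sketch below is a deliberately simplified toy, not OpenShift's actual admission logic: the `may_run_as_root` function is hypothetical, and it only looks at the standard `runAsUser` and `runAsNonRoot` fields of `securityContext`, treating an unset user as "might be root" because the image's default user would apply.

```python
from typing import Any, Dict


def may_run_as_root(pod_spec: Dict[str, Any]) -> bool:
    """Return True if any container in the Pod spec could end up running as UID 0."""
    pod_context = pod_spec.get("securityContext", {})
    for container in pod_spec.get("containers", []):
        # Container-level settings take precedence over Pod-level settings.
        context = {**pod_context, **container.get("securityContext", {})}
        uid = context.get("runAsUser")
        if uid == 0:
            return True
        if uid is None and not context.get("runAsNonRoot", False):
            return True
    return False


default_image = {"containers": [{"name": "web", "image": "nginx"}]}
hardened = {
    "securityContext": {"runAsNonRoot": True, "runAsUser": 1001},
    "containers": [{"name": "web", "image": "nginx"}],
}
print(may_run_as_root(default_image))  # True
print(may_run_as_root(hardened))  # False
```

An image that assumes it starts as root typically has to be rebuilt or reconfigured to pass a check of this kind, which is why many off-the-shelf images need changes before they run on OpenShift.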

Role-based access control (RBAC), a feature that OpenShift offers by default, helps to ensure that each developer only has access to the capabilities they require, which limits the damage a compromised account can do. Kubernetes also supports RBAC, but it has no built-in user management or login flow, so its security features require a more complicated setup.
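As a small illustration of what an RBAC rule expresses, the Python sketch below builds a Role manifest and runs a toy permission check against it. The `role_manifest` and `is_allowed` helpers and the `pod-reader` name are hypothetical. The check ignores API groups and wildcards, so it is far simpler than real RBAC evaluation.

```python
from typing import Any, Dict, List


def role_manifest(namespace: str, name: str, resources: List[str], verbs: List[str]) -> Dict[str, Any]:
    """Manifest for a namespaced Role granting the given verbs on core-group resources."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": name},
        "rules": [{"apiGroups": [""], "resources": resources, "verbs": verbs}],
    }


def is_allowed(role: Dict[str, Any], resource: str, verb: str) -> bool:
    """Toy check: does any rule in the role list both the resource and the verb?"""
    return any(
        resource in rule["resources"] and verb in rule["verbs"]
        for rule in role["rules"]
    )


pod_reader = role_manifest("team-a", "pod-reader", ["pods"], ["get", "list", "watch"])
print(is_allowed(pod_reader, "pods", "list"))  # True
print(is_allowed(pod_reader, "pods", "delete"))  # False
```

A Role only takes effect once it is bound to a user, group, or service account, which is the part of the setup where the two platforms differ in how much is provided out of the box.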

Other security rules, such as IAM and OAuth, are set by default when you create a project with OpenShift. User permissions only need to be added if required. This speeds up the setup process for your application environment and therefore saves you time.

With respect to security, the comparison between these two options simply isn’t fair, seeing as OpenShift’s security is, in fact, quite strict.

Question for you: Do you want security by default in your Cluster, or can you manage it on your own?

9. OpenShift vs. Kubernetes: CI/CD

Organizations can use the OpenShift Container Platform to automate the delivery of their applications using DevOps techniques such as continuous integration (CI) and continuous delivery (CD). The OpenShift Container Platform offers the following CI/CD options to fulfill organizational needs:

- OpenShift Builds: Using a declarative build process, OpenShift Builds enables you to build cloud-native apps.

- OpenShift Pipelines: OpenShift Pipelines offers a Kubernetes-native CI/CD platform for designing and running each stage of the pipeline in its own container.

- OpenShift GitOps: With OpenShift GitOps, administrators can deploy and configure Kubernetes-based infrastructure and apps reliably across clusters and development lifecycles.

- Jenkins: Jenkins automates the development, testing, and deployment of projects and applications. A Jenkins image that is directly integrated with the OpenShift Container Platform is offered via the OpenShift Developer Tools.

This is one of the features that differentiates OpenShift from Kubernetes. Indeed, OpenShift provides built-in CI/CD integration. On the other hand, Kubernetes doesn’t have an official CI/CD integration option. Therefore, in order to create a CI/CD pipeline using Kubernetes, you must integrate external tools.
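To give a feel for how a container-per-stage pipeline is ordered, here is a minimal Python sketch. It assumes a Tekton-style task list, in which each task may name the tasks it must run after in a `runAfter` field (OpenShift Pipelines is based on the upstream Tekton project). The `run_order` helper and the task names are hypothetical, and the sketch computes an order without running anything.

```python
from graphlib import TopologicalSorter
from typing import Any, Dict, List

# Hypothetical pipeline tasks, deliberately listed out of order.
tasks = [
    {"name": "deploy", "runAfter": ["test"]},
    {"name": "build"},
    {"name": "test", "runAfter": ["build"]},
]


def run_order(pipeline_tasks: List[Dict[str, Any]]) -> List[str]:
    """Order tasks so that each one runs after the tasks it depends on."""
    graph = {task["name"]: set(task.get("runAfter", [])) for task in pipeline_tasks}
    return list(TopologicalSorter(graph).static_order())


print(run_order(tasks))  # ['build', 'test', 'deploy']
```

With plain Kubernetes, you would need to install a tool that provides this kind of orchestration yourself, which is the trade-off described above.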

Question for you: Do you want an integrated CI/CD solution within your cluster, or can you take care of the tools and their installation on your own?

10. OpenShift vs. Kubernetes: Support

Since Kubernetes is an open-source project, a sizable and engaged developer community constantly works together to improve the platform. When it comes to OpenShift, the support group is substantially smaller and consists mainly of Red Hat developers.

OpenShift provides committed customer service, support, and advice as a commercial offering. As an open-source, community-based, free project, Kubernetes doesn’t provide specialized customer support.

In light of the above, when developers run into Kubernetes problems, they must wait for their questions to be answered, relying on the experience of other developers on discussion forums. Red Hat engineers are available to support OpenShift users around the clock.

Question for you: Do you want a paid, dedicated support team to help you with your issues, or can you rely on the community and search for solutions free of cost?

11. OpenShift vs. Kubernetes: Cloud Agnostic

Ideally, to increase productivity, you want the flexibility to move your application between different cloud service providers without having to modify or replace your application infrastructure.

Different cloud providers, including AWS, GCP, and Azure, offer various Kubernetes-managed services, each with its own set of add-ons, plugins, and usage guidelines. Before switching between cloud services, you need to become familiar with the new provider’s managed Kubernetes service in order to grasp how things work. This is why Kubernetes is not as cloud-agnostic as OpenShift, whose user experience and features remain the same whether it is hosted or managed on any of these providers.

Question for you: Do you plan to move from one cloud provider to another, or would you rather always use the same one?

12. OpenShift vs. Kubernetes: Pricing

Since Kubernetes is an open-source project, it’s free and doesn’t require any licensing. Therefore, you aren’t required to pay anyone if you manage Kubernetes on your own. However, you will be charged if you utilize a managed service offered by any provider, such as AWS, GCP, or Azure. The cost will be determined by the platform you choose and the number of resources you use.

OpenShift provides two types of services – Red Hat OpenShift cloud services editions and Self-managed Red Hat OpenShift editions. If you are using cloud services, Red Hat OpenShift reserved instances can be purchased for as little as $0.076/hour as of November 20, 2022, and the cost of self-managed Red Hat OpenShift depends on your subscription and sizing choices.
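For a rough sense of scale, the quoted hourly rate can be turned into monthly and yearly figures with simple arithmetic. The Python sketch below assumes the rate applies continuously, a 30-day month, and a 365-day year. The article does not say what unit of capacity the $0.076/hour covers, so treat the results as per-unit estimates only.

```python
HOURLY_RATE_USD = 0.076  # rate quoted above, as of November 20, 2022


def cost(hours: int, hourly_rate: float = HOURLY_RATE_USD) -> float:
    """Cost in USD of running one billed unit for the given number of hours."""
    return round(hours * hourly_rate, 2)


monthly = cost(24 * 30)  # 720 hours
yearly = cost(24 * 365)  # 8,760 hours
print(f"30-day month: ${monthly:.2f}")
print(f"365-day year: ${yearly:.2f}")
```

Under these assumptions, the rate works out to roughly $54.72 per month and $665.76 per year for each billed unit, before any cloud infrastructure charges.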

Question for you: Would you rather use a self-managed Kubernetes cluster and save money or spend on a managed service?

Conclusion

Both OpenShift and Kubernetes allow you to deploy and manage containerized applications quickly. However, they do differ in certain ways, which is why we have this blog on OpenShift vs. Kubernetes for you. Kubernetes is available free of cost, whereas OpenShift has different plans to match your needs. So, OpenShift asks you to pay; however, it provides customer support that Kubernetes doesn’t. This doesn’t mean you won’t get help if you face issues while using Kubernetes. Indeed, Kubernetes has a huge community available to support you. Another thing to note is that Helm charts are great to work with on Kubernetes, while OpenShift has a polished web console. The list of differences is long.

Now that you’ve read this article, you should better understand the key distinctions between OpenShift and Kubernetes. You should consider your skill set, requirements, and specifications when selecting a platform. It’s also important to explore and test the solution before integrating the tool into your workflow, seeing as you want to develop the pipeline that works best for you.

Published at DZone with permission of Rahul Shivalkar. See the original article here.
