Snowflake Data Encryption and Data Masking Policies

This post will describe data encryption and data masking functionalities.

Introduction

Snowflake's architecture was built with security in mind from the very beginning. It offers a wide range of security features, from data encryption and advanced key management to versatile security policies and role-based data access, at no additional cost. This post describes its data encryption and data masking functionality.

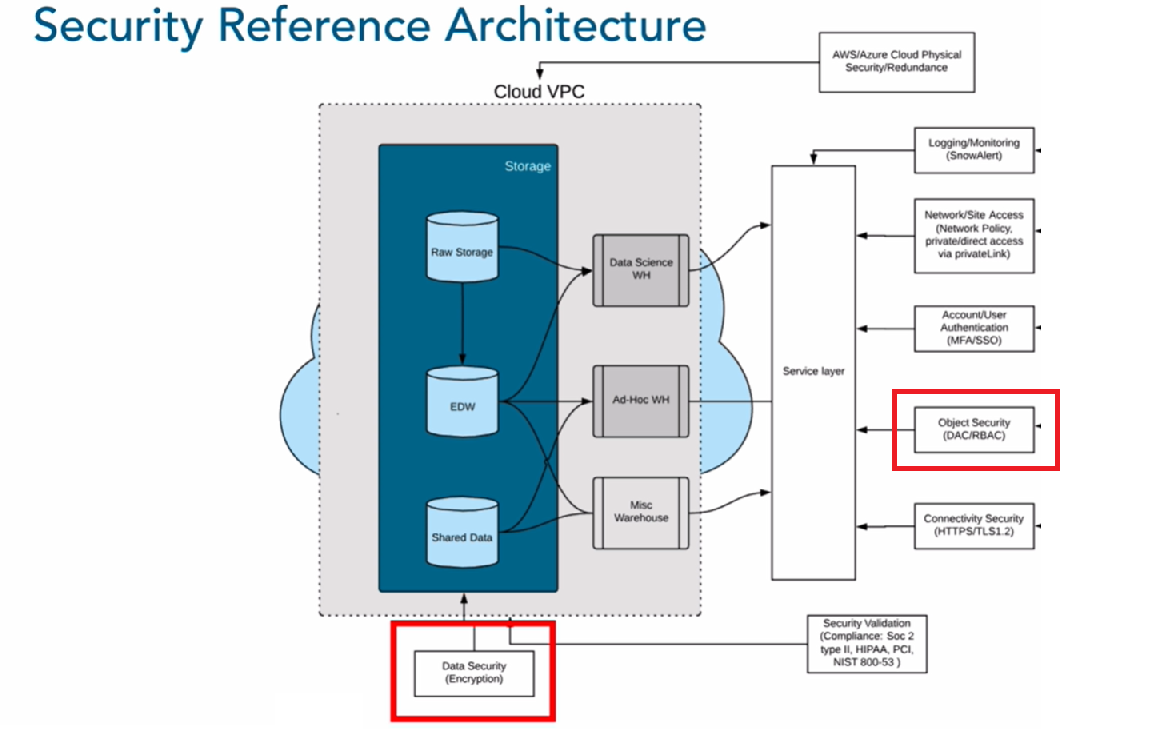

Snowflake Security Reference Architecture

The Snowflake Security Reference Architecture combines a range of cloud security capabilities: encryption of data at rest, secure transfer of data in transit, role-based table access, column- and row-level access control within a table, network access control through IP range filtering, multi-factor authentication, federated single sign-on, and more.

In this article, we are going to illustrate the data encryption and data masking solutions available in Snowflake.

Data encryption and data masking are two distinct functionalities. Data encryption is a cryptographic process that transforms data into unreadable ciphertext, whereas data masking anonymizes data so that sensitive personally identifiable information (PII) cannot be read or identified by unauthorized users. As such, data masking does not involve encryption.

Data Encryption in Snowflake

Snowflake automatically encrypts all stored data using strong AES-256 encryption. In addition, all files stored in stages for data loading and unloading are automatically encrypted, using either AES-128 (standard) or AES-256 (strong) encryption.

For data loading, there are two types of supported staging areas:

1. Snowflake-provided staging area

With a Snowflake-provided staging area (a.k.a. an internal Snowflake stage), uploaded files are automatically encrypted with 128-bit or 256-bit keys, depending on the CLIENT_ENCRYPTION_KEY_SIZE account parameter. See the Snowflake documentation for more details about the PUT command, which uploads files from a local computer to an internal staging area.
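As a rough sketch of what this can look like in practice, the statements below set the key size and upload a file with PUT. The stage name my_internal_stage and the local file path are made up for illustration. Changing an account parameter typically requires the ACCOUNTADMIN role, and PUT is meant to be run from a client such as SnowSQL.

```sql
-- Use 256-bit keys for files uploaded to internal stages (the parameter accepts 128 or 256)
alter account set client_encryption_key_size = 256;

-- Hypothetical internal stage; files placed here are encrypted automatically
create stage if not exists my_internal_stage;

-- Upload a local file (run from SnowSQL; the path is a placeholder)
put file:///tmp/data/employees.csv @my_internal_stage;

-- Check what landed in the stage
list @my_internal_stage;
```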

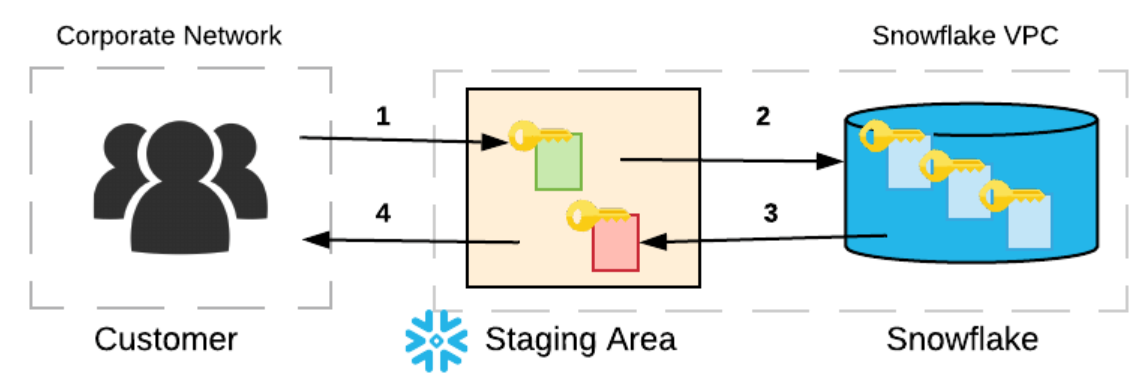

2. Customer-provided staging area

With a customer-provided staging area, encryption is optional but highly recommended. If the customer does not encrypt the data in the staging area, Snowflake still encrypts it immediately when it is loaded into a table. Customers can choose how to encrypt their staged files; in an AWS environment, for instance, it is fairly common to use Amazon S3 client-side encryption.

If the files in a customer-provided staging area are encrypted client-side, the customer must provide the master key in the CREATE STAGE command so that Snowflake can process the uploaded files.

    -- The master key must be a Base64-encoded 128-bit or 256-bit key; all values below are placeholders.
    create stage encrypted_staging_area
      url='s3://mybucket/datafiles/'
      credentials=(aws_key_id='ABCDEFGHIJKL' aws_secret_key='xxxxxxxxxxxxxxxxxxxxxxxxx')
      encryption=(master_key='asiIOIsaskjh%$asjlk+gsTHhs8197=');

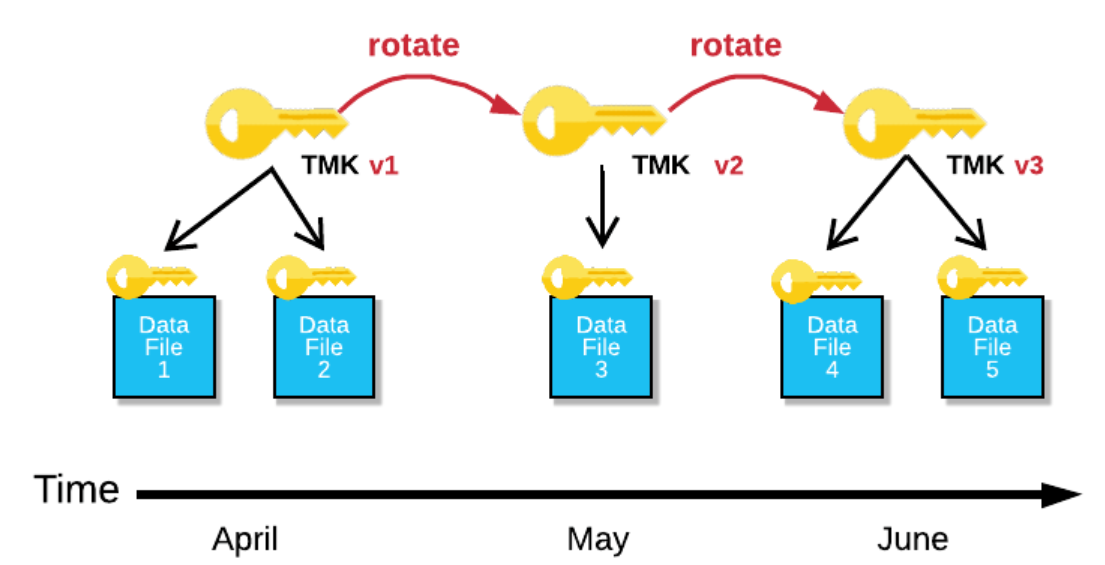

On top of encryption, Snowflake provides encryption key rotation and automatic rekeying of data files. Keys are rotated automatically on a regular basis (according to Snowflake's documentation, once an active key is more than 30 days old). The entire process, data encryption and key management alike, is transparent and requires no configuration.

Snowflake Enterprise Edition additionally supports periodic rekeying. When this feature is enabled and the retired encryption key for a table is older than one year, Snowflake automatically re-encrypts the data files using a newly generated key.
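Assuming an Enterprise Edition account and the ACCOUNTADMIN role, periodic rekeying can be switched on with an account parameter. This is a minimal sketch, not a full key-management procedure:

```sql
-- Enable periodic rekeying for the whole account
alter account set periodic_data_rekeying = true;

-- Confirm the current value of the parameter
show parameters like 'PERIODIC_DATA_REKEYING' in account;
```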

Data Masking - Column Level Security

This year Snowflake announced support for column-level security through data masking policies. Column-level security protects sensitive data from unauthorized access while still letting authorized users read it at query time. The feature is dynamic: fields are not masked statically when records are stored in the table. Instead, the policy conditions determine on the fly, when a query runs, whether the user sees the original value or a masked one. As a result, authorized users can see the content of a particular field (e.g. an email address or social security number), while unauthorized users see only the masked value.

The database administrator can define a data masking policy with SQL statements from a Snowflake worksheet (or from any supported client, such as SnowSQL):

    create masking policy employee_ssn_data_mask as (val string) returns string ->
      case
        when current_role() in ('HR') then val
        else '********'
      end;

Once the masking policy is defined, you can apply it to a particular table or view using the following SQL statements:

    -- table
    alter table if exists employee modify column ssn_number set masking policy employee_ssn_data_mask;

    -- view (employee_ssn_data_mask_view is a separate policy that must already exist)
    alter view employee_view modify column ssn_number set masking policy employee_ssn_data_mask_view;
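One way to check that the policy behaves as intended is to run the same query under different roles. The sketch below assumes the employee table and HR role from the statements above, plus a hypothetical ANALYST role that has been granted to the current user and can select from the table; role setup and grants are not shown.

```sql
use role HR;
select ssn_number from employee limit 5;   -- HR matches the policy condition and sees the stored values

use role ANALYST;                          -- hypothetical role that is not listed in the policy
select ssn_number from employee limit 5;   -- the policy's else branch applies, so '********' is returned

-- To detach the policy again, run this with a role that is allowed to manage it
alter table employee modify column ssn_number unset masking policy;
```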

A data masking policy can be applied to internal and external tables as well as to views. It also takes effect when the COPY INTO command unloads a table into a staging area, so unauthorized users get masked data in the files they unload.
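To illustrate the unloading case, here is a hedged sketch that unloads the employee table into a hypothetical internal stage named my_unload_stage. Run under a role other than HR, the ssn_number column in the resulting files should contain the masked value rather than the real one.

```sql
-- Hypothetical internal stage used as the unload target
create stage if not exists my_unload_stage;

-- Unload the table; masking policies are evaluated for the role running the command
copy into @my_unload_stage/employee_
  from employee
  file_format = (type = csv compression = gzip)
  overwrite = true;

-- Inspect the generated files
list @my_unload_stage;
```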

Data Masking Policy for Row Level Security

During the latest Data Cloud Summit in November 2020, Snowflake announced support for row-level security policies as well. The feature is not publicly available yet but is expected soon. The idea is that the database administrator defines a lookup table that determines which role can see which rows of a table, and then enforces it with a row access policy:

    -- Create a SalesRegion lookup table for geographical regions
    -- Then create a row access policy
    create or replace row access policy row_level_access_policy as (sales_region varchar) returns boolean ->
      'sales_executive_role' = current_role()
      or exists (
        select 1 from SalesRegion
        where sales_role = current_role()
          and region = sales_region
      )
    ;

    -- Assign row level access policy to a table
    alter table sales add row access policy row_level_access_policy on (region);
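The lookup table itself is only mentioned in a comment above. A possible shape for it, using the sales_role and region columns that the policy refers to, is sketched here. The role names and region values are invented for illustration, the table would need to exist before the policy is created, and because the feature was not yet publicly available at the time of writing, the final syntax may differ.

```sql
-- Hypothetical lookup table: one row per (role, region) pair that should be visible
create table if not exists SalesRegion (
  sales_role varchar,
  region     varchar
);

insert into SalesRegion (sales_role, region) values
  ('SALES_EU_ROLE', 'EU'),
  ('SALES_US_ROLE', 'US');

-- With the policy attached to sales, a session using SALES_EU_ROLE
-- should only get rows where region = 'EU'
use role SALES_EU_ROLE;
select * from sales;
```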

I cannot wait to start testing this new row-level access functionality.

Opinions expressed by DZone contributors are their own.
