Supercharged LLMs: Combining Retrieval Augmented Generation and AI Agents to Transform Business Operations

In this article, see how RAG and AI agents improve enterprise AI by enhancing accuracy, automation, and decision-making beyond LLM limitations.


Editor's Note: The following is an article written for and published in DZone's 2025 Trend Report, Generative AI: The Democratization of Intelligent Systems.

Enterprise AI is evolving rapidly. Large language models (LLMs) promise intelligent automation and seamless workflows, moving enterprises beyond mere data synthesis toward a more immersive experience. Despite the initial enthusiasm surrounding LLM adoption, practical limitations soon became apparent: the generation of "hallucinations" (incorrect or contextually flawed information), reliance on stale data, difficulties integrating proprietary knowledge, and a lack of transparency and auditability.

Managing these models within existing governance frameworks also proved challenging, revealing the need for a more robust solution. The promise of LLMs must be tempered by their real-world limitations, creating a gap that calls for a more sophisticated approach to AI integration.

The solution lies in the combination of LLMs with retrieval augmented generation (RAG) and intelligent AI agents. By grounding AI outputs in relevant real-time data and leveraging intelligent agents to execute complex tasks, we move beyond hype-driven solutions and FOMO. RAG + agents together focus on practical, ROI-driven implementations that deliver measurable business value. This powerful approach is unlocking new levels of enterprise value and paving the way for a more reliable, impactful, and contextually aware AI-driven future.

The Power of Retrieval Augmented Generation

RAG addresses the inherent data limitations of standalone LLMs by grounding them in external, up-to-date knowledge bases. This grounding allows LLMs to access and process information beyond their initial training data, significantly enhancing their accuracy, relevance, and overall utility within enterprise environments. RAG effectively bridges the gap between the vast general knowledge encoded within LLMs and the specific but often proprietary data that drives enterprise operations.
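To make the grounding step concrete, here is a minimal sketch of retrieve-then-prompt. Everything in it is an illustrative assumption rather than a production design: the three-sentence `KNOWLEDGE_BASE`, the word-count `embed` function (a stand-in for a learned embedding model), and the prompt wording are all hypothetical.

```python
import math
from collections import Counter

# Hypothetical in-memory knowledge base; a real system would query a document store.
KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days of approval.",
    "Enterprise plans include 24/7 phone support.",
    "Passwords must be rotated every 90 days.",
]

def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    cleaned = text.lower().replace(".", "").replace("?", "")
    return Counter(cleaned.split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, k: int = 1) -> list:
    """Return the k knowledge-base entries most similar to the query."""
    q = embed(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]

def build_prompt(query: str) -> str:
    """Place the retrieved text in front of the question before calling an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

prompt = build_prompt("How long do refunds take?")
print(prompt)
```

The prompt that reaches the model now carries the refund policy sentence, so the answer can draw on current enterprise data rather than on whatever the model memorized during training.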

Several key trends are shaping the evolution and effectiveness of RAG systems to make real-world, production-grade, and business-critical scenarios a reality:

- Vector databases: The heart of semantic search — Specialized vector databases (there are many commercial products available on the market) enable efficient semantic search by capturing relationships between data points. This helps RAG quickly retrieve relevant information from massive datasets using conceptual similarity rather than just keywords.

- Hybrid search: Best of both worlds — Combining semantic search with traditional keyword search improves accuracy. Keyword search identifies exact matching terms, while semantic search refines results by understanding meaning, which reduces the chance that crucial information is overlooked.

- Context window expansion: Handling larger texts — LLMs are limited by context windows, which can hinder processing large documents. Techniques like summarization condense content for easier processing, while memory management helps retain key information across longer texts, ensuring coherent understanding.

- Evaluation metrics for RAG: Beyond LLM output quality — Evaluating RAG systems requires a more holistic approach than simply assessing the quality of the LLM's output. While the LLM's generated text is important, the accuracy, relevance, and efficiency of the retrieval process are equally crucial. Key metrics for evaluating RAG systems include:

  - Retrieval accuracy — How well does the system retrieve the most relevant documents for a given query?

  - Retrieval relevance — How closely does the retrieved information align with the user's information needs?

  - Retrieval efficiency — How quickly can the system retrieve the necessary information?
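As a rough illustration of the three retrieval metrics, the sketch below measures a stubbed retriever against a hand-labeled set of relevant documents. Mapping "accuracy" to recall@k and "relevance" to precision@k is an assumption made for this example, not a definition from the report, and `fake_retriever`, the document IDs, and the labels are all hypothetical.

```python
import time

def precision_at_k(retrieved: list, relevant: set, k: int) -> float:
    """Share of the top-k results that are labeled relevant."""
    top_k = retrieved[:k]
    if not top_k:
        return 0.0
    return sum(1 for doc_id in top_k if doc_id in relevant) / len(top_k)

def recall_at_k(retrieved: list, relevant: set, k: int) -> float:
    """Share of all relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)

def timed(fn, *args):
    """Run fn and return (result, elapsed seconds) as a simple efficiency measure."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start

def fake_retriever(query: str) -> list:
    """Hypothetical retriever returning ranked document IDs."""
    return ["doc3", "doc1", "doc7", "doc2"]

relevant_ids = {"doc1", "doc2", "doc5"}  # hand-labeled ground truth (made up)
results, seconds = timed(fake_retriever, "refund policy")

p = precision_at_k(results, relevant_ids, k=3)  # 1 of the top 3 is relevant
r = recall_at_k(results, relevant_ids, k=3)     # 1 of the 3 relevant docs was found
print(f"precision@3={p:.2f} recall@3={r:.2f} latency={seconds:.6f}s")
```

Tracking these numbers over a fixed query set makes it possible to tell whether a change to indexing or ranking helped retrieval, independently of how fluent the generated text looks.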

By focusing on these metrics, developers can optimize RAG systems to ensure they are not only generating high-quality text but also retrieving the right information in a timely manner.
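The hybrid search trend described earlier in this section can also be sketched in a few lines. The example blends a keyword-overlap score with a semantic score using a weight `alpha`. The semantic scores are made-up numbers standing in for what a vector database might return, and the `hybrid_rank` function, the documents, and the weighting scheme are assumptions for illustration only.

```python
def keyword_score(query: str, doc: str) -> float:
    """Fraction of query terms that appear verbatim in the document."""
    terms = set(query.lower().split())
    words = set(doc.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0

def hybrid_rank(query: str, docs: dict, semantic_scores: dict, alpha: float = 0.5) -> list:
    """Rank documents by alpha * semantic score + (1 - alpha) * keyword score."""
    scored = []
    for doc_id, text in docs.items():
        semantic = semantic_scores.get(doc_id, 0.0)
        score = alpha * semantic + (1 - alpha) * keyword_score(query, text)
        scored.append((doc_id, score))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

docs = {
    "a": "reset your password from the account settings page",
    "b": "steps to recover access when login credentials are forgotten",
    "c": "quarterly revenue report for the finance team",
}
# Made-up similarity scores, as if returned by a vector database.
semantic_scores = {"a": 0.70, "b": 0.85, "c": 0.05}

ranking = hybrid_rank("reset password", docs, semantic_scores)
print([doc_id for doc_id, _ in ranking])
```

With equal weighting, document `a` ranks first because it matches both keywords, while document `b` still places second on meaning alone. Setting `alpha=1.0` turns the ranking into pure semantic search, which puts `b` on top.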

Enterprise Use Cases: Real-World Applications

The versatility of RAG makes it applicable to a wide range of enterprise use cases. For example, RAG can:

- Access customer records and knowledge bases to provide personalized support experiences

- Help employees quickly find relevant information within vast internal document repositories

- Integrate with data analysis tools to provide context and insights from relevant documents

- Generate reports that are grounded in factual data and relevant research

- Streamline legal research by retrieving relevant case law, statutes, and legal documents

These examples illustrate the potential of RAG to transform enterprise workflows and drive significant business value.

The Role of AI Agents in Orchestrating Complexity

The combination of RAG and AI agents is a game-changer for enterprise AI, creating a partnership that makes more advanced automation practical and feasible. This synergy goes beyond the limitations of standalone language models, enabling systems that can reason, plan, and handle complex tasks. By connecting AI agents to constantly updated knowledge bases through RAG, these systems can access the data or events they need to make informed decisions, manage workflows, and deliver real business value. This collaboration helps enterprises build AI solutions that are not just smart, but also adaptable, transparent, and rooted in real-world data.

Agents as Intelligent Intermediaries

AI agents act as intermediaries that manage the retrieval process and break down complex tasks. They autonomously interact with external tools and APIs to gather data, analyze it, and execute tasks efficiently.

Autonomous Agents

Autonomous agents are evolving to plan and execute tasks independently, reducing the need for human intervention. These agents can process real-time data, make decisions, and complete processes on their own, thereby streamlining operations.

Agent Frameworks

Frameworks like LangChain and LlamaIndex are simplifying the development and deployment of agent-based systems. These tools offer pre-built capabilities to create, manage, and scale intelligent agents, making it easier for enterprises to integrate automation.

Tool Use and API Integration

Agents can leverage external tools and APIs — such as calculators, search engines, and CRM systems — to access real-world data and perform complex actions. This allows agents to handle a wide range of tasks, from data retrieval to interaction with business systems.
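A minimal sketch of tool use might look like the following. It assumes the model emits a structured tool call (a dictionary with `tool` and `input` keys), which the agent runtime dispatches to a registered function. The `TOOLS` registry, the toy `CRM` data, and the call format are hypothetical and not tied to any specific framework.

```python
import operator

# Supported arithmetic operators for the toy calculator tool.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def calculator(expression: str) -> str:
    """Evaluate a simple 'number operator number' expression such as '12 * 4'."""
    left, op, right = expression.split()
    return str(OPS[op](float(left), float(right)))

# Hypothetical CRM data; a real tool would call the CRM system's API.
CRM = {"C-1001": {"name": "Acme Corp", "tier": "gold"}}

def crm_lookup(customer_id: str) -> str:
    """Return a customer record as text, or a not-found message."""
    record = CRM.get(customer_id)
    return str(record) if record else "customer not found"

# Registry mapping tool names to callables the agent is allowed to use.
TOOLS = {"calculator": calculator, "crm_lookup": crm_lookup}

def run_tool_call(call: dict) -> str:
    """Dispatch a structured tool call of the form {'tool': name, 'input': text}."""
    tool = TOOLS.get(call["tool"])
    if tool is None:
        return f"unknown tool: {call['tool']}"
    return tool(call["input"])

print(run_tool_call({"tool": "calculator", "input": "12 * 4"}))
print(run_tool_call({"tool": "crm_lookup", "input": "C-1001"}))
print(run_tool_call({"tool": "send_email", "input": "hello"}))
```

Keeping an explicit registry means the agent can only invoke tools that have been deliberately exposed to it, which is one simple way to bound what an automated system is permitted to do.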

Memory and Planning

Advancements in agent memory and planning allow agents to tackle longer and more complex tasks. By retaining context and applying long-term strategies, agents can effectively manage multi-step processes and ensure continuous, goal-driven execution.
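The idea of retaining context across a multi-step process can be sketched with a small agent that stores each step's result in memory so later steps can build on it. The fixed three-step plan, the step names, and the sales figures are hypothetical. A real agent would typically ask an LLM to produce the plan.

```python
from dataclasses import dataclass, field

@dataclass
class PlanningAgent:
    memory: dict = field(default_factory=dict)  # results retained across steps
    log: list = field(default_factory=list)     # ordered record of executed steps

    def plan(self, goal: str) -> list:
        """Hypothetical fixed plan; a real agent would derive this from the goal."""
        return ["fetch_sales", "compute_total", "draft_summary"]

    def execute(self, step: str) -> None:
        """Run one step, reading earlier results from memory where needed."""
        if step == "fetch_sales":
            self.memory["sales"] = [1200, 950, 1430]  # made-up data
        elif step == "compute_total":
            self.memory["total"] = sum(self.memory["sales"])
        elif step == "draft_summary":
            self.memory["summary"] = f"Total sales: {self.memory['total']}"
        self.log.append(step)

    def run(self, goal: str) -> str:
        """Execute the whole plan in order and return the final summary."""
        for step in self.plan(goal):
            self.execute(step)
        return self.memory["summary"]

agent = PlanningAgent()
summary = agent.run("Summarize quarterly sales")
print(summary)    # Total sales: 3580
print(agent.log)
```

Each step depends on what the previous one left in memory, which is the essential property that lets an agent carry a goal through a longer process instead of treating every call in isolation.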

RAG and AI Agents in Synergy

The combination of RAG and AI agents is more than just a technical integration — it's a strategic alignment (I call it a wonder alliance) that amplifies the strengths of both components. Here's how they work together:

- Orchestration by AI agents — AI agents manage the RAG process, from query initiation to output, ensuring accurate information retrieval and contextually meaningful responses. This reduces "hallucinations" and improves output reliability.

- RAG as the knowledge base — RAG provides the up-to-date, context-specific data that AI agents use to make informed decisions and complete tasks accurately, enhancing performance and system transparency.

- Improved explainability and transparency — By grounding outputs in real-world data, RAG makes it easier to trace information sources, which increases transparency and trust — especially in industries like finance, healthcare, and legal services where compliance is critical.
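The three points above can be sketched together: an agent step retrieves supporting documents, declines to answer when nothing relevant is found, and returns source IDs alongside the answer so the output can be traced. The `DOCS` store, the keyword-overlap retrieval, and the `fake_llm` stand-in are all hypothetical simplifications.

```python
# Hypothetical policy documents keyed by a traceable source ID.
DOCS = {
    "policy-12": "Wire transfers above 10000 USD require two approvals.",
    "policy-31": "Expense reports are due by the fifth business day.",
}

def retrieve(query: str, k: int = 1) -> list:
    """Return up to k source IDs whose text shares at least one word with the query."""
    terms = set(query.lower().split())
    scored = []
    for doc_id, text in DOCS.items():
        overlap = len(terms & set(text.lower().rstrip(".").split()))
        if overlap > 0:
            scored.append((overlap, doc_id))
    return [doc_id for _, doc_id in sorted(scored, reverse=True)[:k]]

def fake_llm(question: str, context: str) -> str:
    """Stand-in for a model call; it simply echoes the grounded context."""
    return f"Based on the retrieved policy: {context}"

def answer(question: str) -> dict:
    """Agent step: retrieve first, answer only when grounded, and cite sources."""
    source_ids = retrieve(question)
    if not source_ids:
        return {"answer": "No supporting document found.", "sources": []}
    context = " ".join(DOCS[doc_id] for doc_id in source_ids)
    return {"answer": fake_llm(question, context), "sources": source_ids}

grounded = answer("How many approvals do wire transfers need?")
ungrounded = answer("cafeteria menu today")
print(grounded)
print(ungrounded)
```

Returning the `sources` list with every answer is what makes the audit trail possible: a reviewer in a regulated setting can check the cited document instead of trusting the generated text.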

Advanced Concepts in RAG + Agents

The combined power of RAG and AI agents opens the door to advanced capabilities that are reshaping the future of enterprise AI. Here are some key concepts driving this evolution:

- Multi-agent systems — Multiple AI agents can collaborate to tackle complex tasks by specializing in different areas, like data retrieval, decision making, or execution. For example, in supply chain management, agents manage logistics, inventory, and demand forecasting independently but coordinate for optimal efficiency.

- Feedback loops and continuous improvement — RAG + agent systems use feedback loops to continuously learn and improve. By monitoring accuracy and relevance, these systems refine retrieval strategies and decision making, ensuring alignment with business goals and evolving user needs.

- Autonomy and complex task execution — AI agents are becoming more autonomous, enabling independent decision making and task execution. This capability automates processes like financial reporting and customer support, with RAG ensuring accurate information retrieval for efficient, high-quality results.
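The supply chain example from the multi-agent bullet can be sketched as three specialized functions and a coordinator that passes each agent's output to the next. The forecasting rule (mean of the last three periods), the safety stock, and the truck capacity are invented for this example, and real agents would be far richer than plain functions.

```python
def demand_agent(history: list) -> float:
    """Forecast next-period demand as the mean of the last three periods."""
    recent = history[-3:]
    return sum(recent) / len(recent)

def inventory_agent(on_hand: int, forecast: float, safety_stock: int) -> int:
    """Units to reorder so stock covers the forecast plus a safety buffer."""
    return max(0, round(forecast + safety_stock - on_hand))

def logistics_agent(units: int, truck_capacity: int) -> int:
    """Number of trucks needed, rounding up to whole trucks."""
    return -(-units // truck_capacity)

def coordinator(history: list, on_hand: int,
                safety_stock: int = 20, truck_capacity: int = 50) -> dict:
    """Run each specialist in turn, passing results downstream."""
    forecast = demand_agent(history)
    reorder = inventory_agent(on_hand, forecast, safety_stock)
    trucks = logistics_agent(reorder, truck_capacity)
    return {"forecast": forecast, "reorder_units": reorder, "trucks": trucks}

plan = coordinator(history=[100, 120, 110, 130], on_hand=60)
print(plan)  # {'forecast': 120.0, 'reorder_units': 80, 'trucks': 2}
```

Each agent owns one decision and knows nothing about the others, while the coordinator handles the handoffs. That separation is what lets the specialists be improved or replaced independently.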

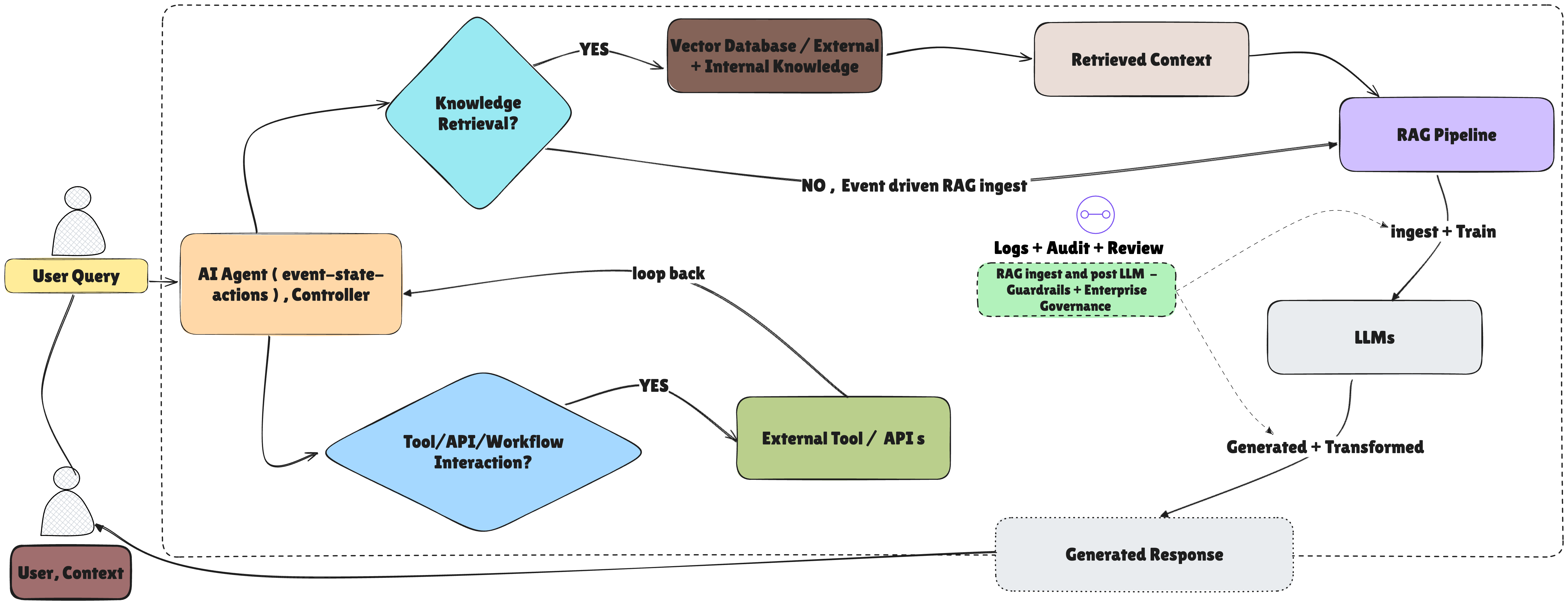

Figure 1: RAG + agent architecture diagram

Addressing Challenges and Future Directions of RAG + Agents

Implementing RAG + agents presents several key hurdles. Data quality is paramount: data curation should be meticulous, because flawed data leads to ineffective outcomes. Security is critical because these systems handle sensitive information, which requires robust protection and vigilant monitoring to maintain user trust. Scalability means maintaining consistent speed and accuracy as data volumes grow rapidly, which demands a flexible architecture and efficient resource management.

Essentially, the system must not only be technically sound but also reliably perform its intended function as the volume of inputs and requests increases, preserving both its intelligent output and operational speed.

Table 1 details the emerging trends in RAG and agent AI, covering technological advancements like efficient systems and multi-modality, as well as crucial aspects like AI governance, personalization, and human-AI collaboration.

| Category | Details |
|---|---|
| Efficient RAG systems | Optimization of RAG for faster retrieval, better indexing, and higher accuracy to improve performance and adaptability to growing data needs |
| AI lifecycle governance | Comprehensive frameworks are needed for the governance, compliance, and traceability of AI systems within enterprises |
| Legacy system integration | Integrating RAG + agents with legacy systems allows businesses to leverage existing infrastructure while adopting new AI capabilities, though this integration may not always be seamless |
| Multi-modality | Combining data types like text, images, audio, and video to enable richer, more informed decision making across various industries |
| Personalization | AI systems will tailor interactions and recommendations based on user preferences, increasing user engagement and satisfaction |
| Human-AI collaboration | Creating much-needed interactions between humans and AI agents, allowing AI to assist with tasks while maintaining human oversight and decision making |
| Embodiment | AI agents will interact with the physical world, leading to applications like robotics and autonomous systems that perform tasks in real-world environments |
| Explainability and transparency | Increased transparency in AI decision-making processes to ensure trust, accountability, and understanding of how AI arrives at conclusions |

Ethical Imperatives in RAG + Agents

Ethical considerations are crucial as RAG + agents become more prevalent. Fairness and accountability demand unbiased, transparent AI decisions, requiring thorough testing. Privacy and security must be prioritized to protect personal data. Maintaining human control is essential, especially in critical sectors.

Conclusion: The Pragmatic Future of Enterprise AI

The convergence of RAG and AI agents is poised to redefine enterprise AI, offering unprecedented opportunities for value creation through efficient, informed, and personalized solutions. By bridging the gap between raw AI potential and the nuanced needs of modern enterprises, RAG + agents leverage real-time data retrieval and intelligent automation to unlock tangible business outcomes. This shift emphasizes practical, ROI-driven implementations that prioritize measurable value like improved efficiency, cost reduction, enhanced customer satisfaction, and innovation.

Crucially, it heralds an era of human-AI collaboration, where seamless interaction empowers human expertise by automating data-heavy and repetitive processes, allowing staff to focus on higher-order tasks. Looking forward, the future of enterprise AI hinges on continuous innovation, evolving toward scalable, transparent, and ethical systems. While the next generation promises advancements like multimodality, autonomous decision making, and personalized interactions, it is essential to take a balanced approach that acknowledges practical limitations and prioritizes governance, security, and ethical considerations.

Success lies not in chasing hype, but in thoughtfully integrating AI to deliver lasting value. RAG + agents are at the forefront of this pragmatic evolution, guiding businesses toward a more intelligent, efficient, and collaborative future where AI adapts to organizational needs and fuels the next wave of innovation.

This is an excerpt from DZone's 2025 Trend Report, Generative AI: The Democratization of Intelligent Systems
