Table Store Time Series Data Storage Architecture

Explore table store time series data storage architecture.


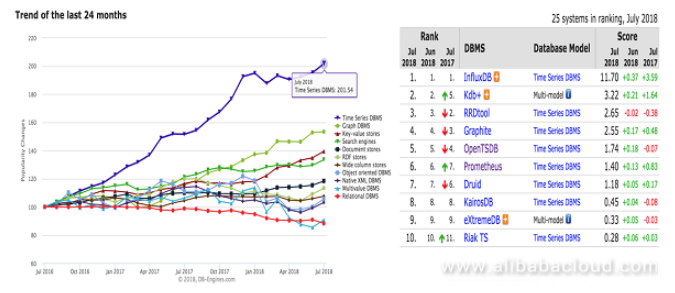

With the rapid development of the Internet of Things (IoT) in recent years, there has been an explosion in time series data. According to the DB-Engines growth trends by database type over the past two years, time series databases have grown immensely. The major open source time series databases are implemented differently, and none of them is perfect. However, their advantages can be combined to implement a more complete time series database.

Alibaba Cloud Table Store is a distributed NoSQL database developed by Alibaba Cloud. Table Store uses a multi-model design that includes the same Wide Column model as Bigtable and a time series model for message data. In terms of storage model, data size, and write and query capabilities, it can meet the needs of time series data scenarios. However, because Table Store is a general-purpose database, a time series storage layer built on it has to make full use of the capabilities of the underlying database. The schema design and the integration with computing engines need special design, as OpenTSDB does with its RowKey design for HBase and its UID encoding.
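To make the RowKey and UID idea concrete, here is a minimal sketch of an OpenTSDB-style row key, assuming the commonly described layout of metric UID, hour-aligned base timestamp, and sorted tag key/value UID pairs. The `UidTable` class and `row_key` function are hypothetical in-memory stand-ins written for illustration; they are not OpenTSDB or Table Store APIs, and the sketch ignores details such as salting.

```python
import struct


class UidTable:
    """Hypothetical in-memory UID table: maps a string to a fixed-width id."""

    def __init__(self, width=3):
        self.width = width
        self._ids = {}

    def get_or_create(self, name):
        # Assign ids in order of first appearance, starting at 1.
        if name not in self._ids:
            self._ids[name] = len(self._ids) + 1
        return self._ids[name].to_bytes(self.width, "big")


def row_key(metric, tags, timestamp, metrics, tagks, tagvs):
    """Build metric_uid + hour-aligned base time + sorted (tagk_uid + tagv_uid) pairs."""
    base_time = timestamp - timestamp % 3600  # one row per series per hour
    pairs = sorted(
        tagks.get_or_create(k) + tagvs.get_or_create(v) for k, v in tags.items()
    )
    return metrics.get_or_create(metric) + struct.pack(">I", base_time) + b"".join(pairs)
```

Because the key starts with the metric and the base time, all points of one metric in one time range sit next to each other, which is what makes range scans efficient on a sorted wide-column store.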

This article focuses on data model definitions and core processing flows for time series data, and the architecture for building time series data storage based on Table Store. We will first talk about time series data and then discuss how we can process this data for our business applications with Table Store.

What Is Time Series Data?

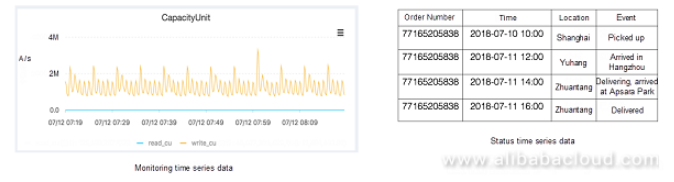

Time series data falls mainly into two types: monitoring data and status data. Current open source time series databases target monitoring data and make specific optimizations for the characteristics of data in that scenario. Judged by the characteristics of time series data, the other type is status data. The two types correspond to different scenarios: monitoring data comes from monitoring scenarios, and status data comes from other scenarios such as tracing and abnormal status recording. The most common example of status data is the package trace.

The reason why both types of data are classified into time series is that these types are completely consistent in data model definition, data collection, data storage, and computing, and the same database and the same technical architecture can be abstracted.

Time Series Data Model

Before defining the time series data model, we first make an abstract representation of the time series data.

- Individual or group (WHO): Describes the subject that produces the data, which can be a person, a monitoring metric, or an object. An individual generally has multi-dimensional attributes and can be located by a unique ID, for example a person ID for a person or a device ID for a device. Individuals can also be located through multi-dimensional attributes, for example, using cluster, machine ID, and process name to locate a process.

- Time (WHEN): Time is the most important feature of time series data and is a key attribute that distinguishes it from other data.

- Location (WHERE): A location is usually given as a two-dimensional coordinate of latitude and longitude. In scientific computing fields such as meteorology, it is given as a three-dimensional coordinate of latitude, longitude, and altitude.

- Status (WHAT): Used to describe the status of a specific individual at a certain moment. Monitoring data usually describes the status numerically, while trace data expresses the status as an event, and the expression differs from scenario to scenario.

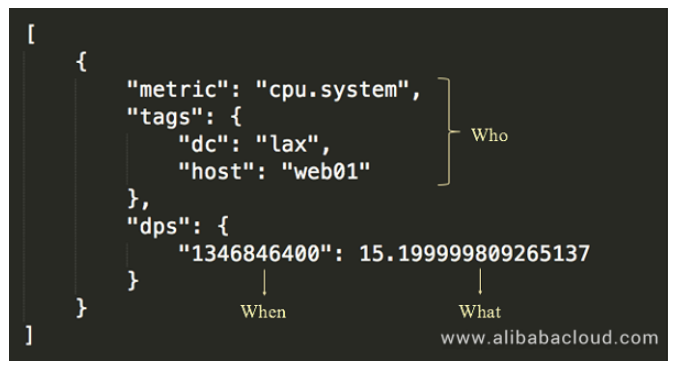

The above is an abstract representation of the time series data. Each open source time series database has its own definition of the time series data model, defining the time series data for monitoring. The data model of OpenTSDB is used as an example:

The monitoring time series data model definition includes:

- Metric: Used for describing the monitoring metric.

- Tags: Used for locating the monitored object, which is described by one or more tags.

- Timestamp: The time point when the monitoring value is collected.

- Value: The collected monitoring value, which is usually numeric.

The monitoring time series data is the most typical type of time series data, and has specific characteristics. The characteristics of the monitoring time series data determine that such time series databases have specific storage and computing methods. Compared with the status time series data, it has specific optimizations in computing and storage. For example, the aggregation computing has several specific numerical aggregate functions, and there will be specially optimized compression algorithms on the storage. In the data model, the monitoring time series data usually does not need to express the location, while the overall model is in line with our unified abstract representation of the time series.

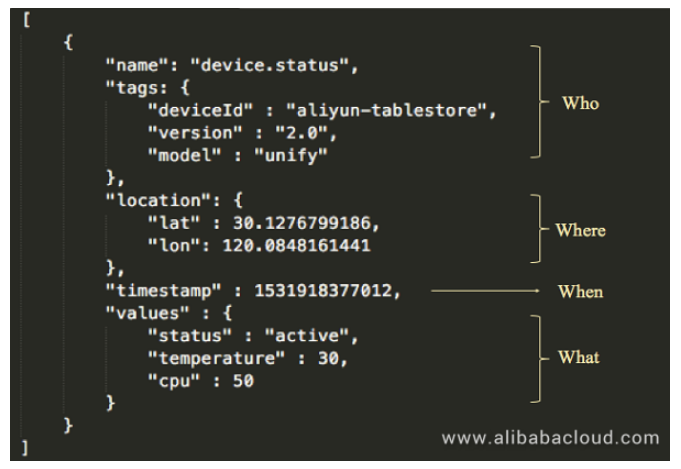

Based on the monitoring time series data model, we can define a complete time series data model that follows the abstract representation described above:

The definition includes:

- Name: Defines the type of the data.

- Tags: Describes the metadata of the individual.

- Location: The location of the data.

- Timestamp: The time stamp when the data is generated.

- Values: The value or status corresponding to the data. Multiple values or statuses can be provided, which are not necessarily numeric.

This is a more complete time series data model. It differs from OpenTSDB's model for monitoring time series data in two main ways: first, the metadata has one more dimension, location; second, it can express more values.
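The complete model can be sketched as a pair of small data classes. This is only an illustration of the five fields listed above; the class names `SeriesKey` and `Point` and the choice of epoch seconds for the timestamp are assumptions, not part of Table Store.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple, Union

Value = Union[int, float, str, bool]


@dataclass(frozen=True)
class SeriesKey:
    """Metadata that identifies one time series: name + tags + optional location."""

    name: str
    tags: Tuple[Tuple[str, str], ...]  # sorted (key, value) pairs, so order never matters
    location: Optional[Tuple[float, float]] = None  # (latitude, longitude)

    @staticmethod
    def of(name, tags, location=None):
        return SeriesKey(name, tuple(sorted(tags.items())), location)


@dataclass
class Point:
    """One point on a time series: a timestamp plus one or more values."""

    key: SeriesKey
    timestamp: int  # epoch seconds (assumed unit)
    values: Dict[str, Value]  # values need not be numeric


# A monitoring point and a status (trace) point expressed in the same model.
cpu = Point(SeriesKey.of("cpu", {"cluster": "c1", "host": "web-1"}), 1700000000, {"usage": 0.42})
parcel = Point(
    SeriesKey.of("parcel_trace", {"parcel_id": "P123"}, (30.27, 120.15)),
    1700000060,
    {"status": "in_transit"},
)
```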

Time Series Data Query, Computing, and Analysis

Time series data has its own query and computing methods, which roughly include:

Time Series Retrieval

According to the data model definition, name + tags + location can be used to locate an individual, which has a time series, and the points on the time series are timestamps and values. For the query of time series data, first the time series needs to be located, which is a process of retrieval based on a combination of one or more values of the metadata. You can also drill down based on the association of metadata.
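Retrieval by a combination of metadata values is typically backed by an inverted index. The following minimal sketch keeps the index in memory; the `TagIndex` class and its method names are hypothetical and only illustrate the lookup, not how any particular database implements it.

```python
from collections import defaultdict


class TagIndex:
    """Inverted index from (tag_key, tag_value) to the ids of matching time series."""

    def __init__(self):
        self._postings = defaultdict(set)

    def add(self, series_id, tags):
        for kv in tags.items():
            self._postings[kv].add(series_id)

    def find(self, **conditions):
        """Return the ids of series that match every tag_key=tag_value condition."""
        sets = [self._postings.get(kv, set()) for kv in conditions.items()]
        return set.intersection(*sets) if sets else set()


index = TagIndex()
index.add("s1", {"cluster": "c1", "host": "web-1"})
index.add("s2", {"cluster": "c1", "host": "web-2"})
index.add("s3", {"cluster": "c2", "host": "web-1"})
```

Here `index.find(cluster="c1")` locates two series, and adding `host="web-2"` drills down to one of them.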

Time Range Query

After the time series is located through retrieval, the time series is queried. Queries on a single time point are rare; queries usually cover all points in a continuous time range. Interpolation is usually applied to fill in missing points in this range.
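As an illustration, a range query with interpolation might look like the sketch below. It assumes the points of one series are held as a sorted list of `(timestamp, value)` pairs and uses linear interpolation, which is only one of several possible fill strategies; the function name `range_query` is hypothetical.

```python
from bisect import bisect_left


def range_query(points, start, end, step):
    """Return (t, value) for t = start, start + step, ... up to end.

    Missing points between two stored points are linearly interpolated.
    Times outside the stored data yield None instead of a guess.
    """
    timestamps = [t for t, _ in points]
    result = []
    for t in range(start, end + 1, step):
        i = bisect_left(timestamps, t)
        if i < len(timestamps) and timestamps[i] == t:
            result.append((t, points[i][1]))  # exact hit
        elif 0 < i < len(timestamps):
            (t0, v0), (t1, v1) = points[i - 1], points[i]
            result.append((t, v0 + (v1 - v0) * (t - t0) / (t1 - t0)))
        else:
            result.append((t, None))  # before the first or after the last point
    return result
```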

Aggregation

A query can be for a single time series or multiple time series. For range queries for multiple time series, the results are usually aggregated. This aggregation is for values at the same time point on different time series, commonly referred to as "post-aggregation."

The opposite of "post-aggregation" is "pre-aggregation", which is the process of aggregating multiple time series into one time series before the data is stored. Pre-aggregation computes the data and then stores it, while post-aggregation queries the stored data and then computes it.
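A minimal sketch of aggregation across series, assuming each series is a list of `(timestamp, value)` pairs. The function name `post_aggregate` is hypothetical. The same computation would count as pre-aggregation if it ran in the stream before the data was written, and as post-aggregation when it runs over the results of a range query.

```python
from collections import defaultdict


def post_aggregate(series_list, agg=sum):
    """Combine values that share a timestamp across several series into one series."""
    buckets = defaultdict(list)
    for series in series_list:
        for timestamp, value in series:
            buckets[timestamp].append(value)
    return [(timestamp, agg(values)) for timestamp, values in sorted(buckets.items())]
```

For example, summing the request counts of two hosts yields a single cluster-level series, with one output point per distinct timestamp.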

Downsample

The computational logic of downsample is similar to that of aggregation. The difference is that downsample works on a single time series instead of multiple time series: it aggregates the data points within each time window of one series. One purpose of downsample is to make data points over a large time range practical to present; another is to reduce storage costs.
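A short sketch of downsample over fixed windows, assuming integer timestamps and a list of `(timestamp, value)` pairs for a single series. The names `downsample` and `mean` are illustrative.

```python
def mean(values):
    return sum(values) / len(values)


def downsample(points, interval, agg=mean):
    """Aggregate the points of one series into fixed windows of `interval` seconds."""
    buckets = {}
    for timestamp, value in points:
        window_start = timestamp - timestamp % interval  # align to the window boundary
        buckets.setdefault(window_start, []).append(value)
    return [(start, agg(values)) for start, values in sorted(buckets.items())]
```

Reducing 10-second points to 1-minute points this way cuts the number of stored points by up to a factor of six, which is where the storage saving comes from.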

Analysis

Analysis is to extract more value from the time series data. There is a special research field called "time series analysis".

Time Series Data Processing Procedure

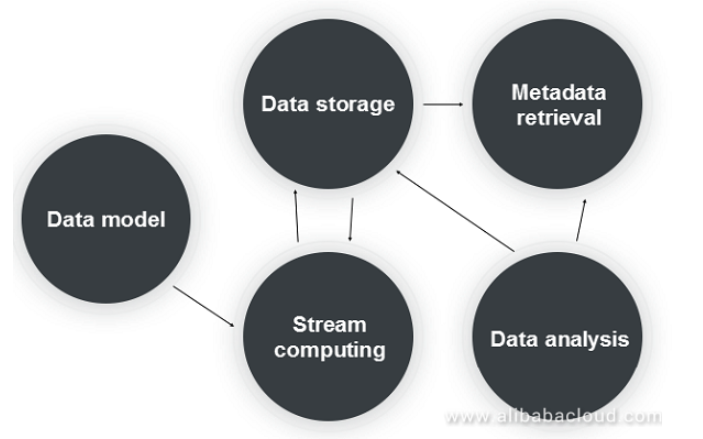

The core procedure of time series data processing is as shown above, including:

- Data model: For the standard definition of time series data, the collected time series data must conform to the definition of the model, including all the characteristic attributes of the time series data.

- Stream computing: Pre-aggregation, downsample, and post-aggregation for the time series data.

- Data storage: The storage system provides high-throughput, massive, and low-cost storage, supporting separation of cold/hot data, and efficient range query.

- Metadata retrieval: Provides the storage and retrieval of time series metadata in the order of 10 million to 100 million, and supports different retrieval methods (multidimensional filtering and location query).

- Data analysis: Provides time series analysis and computing capabilities for time series data.

Let us look at the products that can be selected for each of these core processes.

Data Storage

Time series data is typical non-relational data. It is characterized by high concurrency, high throughput, large data volume, and a write-heavy, read-light access pattern. The query mode is usually range query. A NoSQL database is well suited to these characteristics. Several popular open source time series databases use a NoSQL database as the data storage layer, such as OpenTSDB based on HBase, and KairosDB based on Cassandra. Therefore, for the product selection of "data storage", an open source distributed NoSQL database such as HBase or Cassandra, or a cloud service such as Alibaba Cloud's Table Store can be selected.

Stream Computing

For stream computing, open source products such as JStorm, Spark Streaming, and Flink can be used, as can Alibaba's Blink and the cloud product StreamCompute.

Metadata Search

Metadata for the time series will also be large in magnitude, so a distributed database is considered first. In addition, because the query mode needs to support retrieval, the database needs to support inverted indexes and spatial indexes. Open source Elasticsearch or Solr can be used.

Data Analysis

Data analysis requires a powerful distributed computing engine. Options include open source Spark, the cloud product MaxCompute, and serverless SQL engines such as Presto or the cloud product Data Lake Analytics.

Open Source Time Series Databases

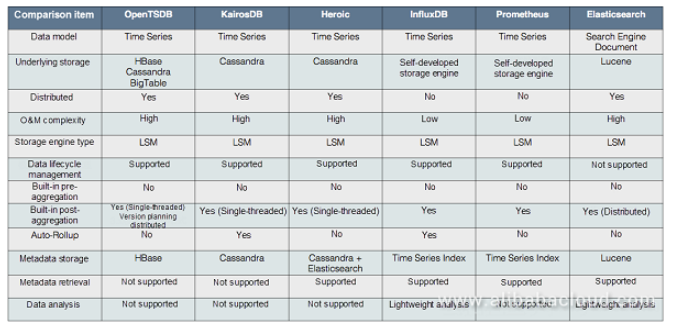

Based on the database development trends on DB-Engines, we see that time series databases have developed rapidly over the past two years, and a number of excellent open source time series databases have emerged. Each implementation of major time series databases also has its own merits. Here is a comprehensive comparison from some dimensions:

- Data Storage: All the databases use distributed NoSQL (LSM engine) storage: open source distributed databases such as HBase and Cassandra, cloud products such as Bigtable, or self-developed storage engines.

- Aggregation: Pre-aggregation can only rely on external stream computing engines, such as Storm or Spark Streaming. Post-aggregation at query time is an interactive process, so it generally does not rely on a stream computing engine; different time series databases implement it either as a simple single-threaded method or as a concurrent computing method. Automatic downsample is also a post-aggregation process, but it is a stream process rather than an interactive one. This computing is well suited to a stream computing engine, but it is not implemented that way.

- Metadata storage and retrieval: The classic OpenTSDB does not have dedicated metadata storage and does not support the retrieval of metadata. The metadata is retrieved and queried by scanning the row keys of the data table. KairosDB uses a table for metadata storage in Cassandra, but retrieval efficiency is very low because the table needs to be scanned. Heroic was developed based on KairosDB. It uses Elasticsearch for metadata storage and indexing and supports better metadata retrieval. InfluxDB and Prometheus implement indexing independently, but indexing is not easy because it has to handle time series metadata on the order of 10 million to 100 million entries. An earlier version of InfluxDB implemented memory-based metadata indexing, which has a number of restrictions: the size of the memory limits the number of time series, and building the in-memory index requires scanning all time series metadata, which causes a long failover time for the node.

- Data analysis: Most TSDBs have no built-in analysis capabilities beyond the query and analysis capabilities of post-aggregation. Elasticsearch is the exception, and this is an important advantage that helps it keep a foothold in the time series field.

Table Store Time Series Data Storage

As a distributed NoSQL database developed by Alibaba Cloud, Table Store uses the same Wide Column model as Bigtable in terms of data model. The product is well-suited for time series data scenarios in terms of storage model, data size, and write and query capabilities. We have also supported monitoring time series products such as CloudMonitor, status time series products such as AliHealth's drug tracing, and core services such as postal package trace. There is also a complete computing ecosystem to support the computing and analysis of time series data. In future planning, we have specific optimizations for time series scenarios in terms of metadata retrieval, time series data storage, computing and analysis, and cost reduction.

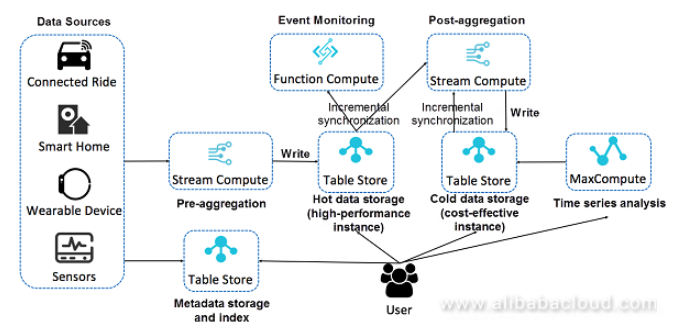

The above is a complete architecture of time series data storage, computing, and analysis based on Table Store. This is a serverless architecture that implements all the features needed to provide a complete time series scenario by combining cloud products. Each module has a distributed architecture, providing powerful storage and computing capabilities, and resources can be dynamically expanded. Each component can also be replaced with other similar cloud products. The architecture is flexible and has great advantages over open source time series databases. The core advantages of this architecture are analyzed below:

Separation of Storage and Computing

Separation of storage and computing is a leading technical architecture. Its core advantage is more flexible configuration of computing and storage resources, with more flexible cost, better load balancing, and better data management. In the cloud environment, users can only truly benefit from the separation of storage and computing if the products themselves are offered in that form.

Table Store implements separation of storage and computing in both technical architecture and product form, and can freely allocate storage and computing resources at a relatively low cost. This is especially important in the time series data scenario, where computing is relatively constant and storage grows linearly. The primary way to optimize costs is to allocate constant computing resources and infinitely scalable storage, without paying for extra computing.

Separation of Cold/Hot Data

A notable feature of time series data is that access is clearly split between hot and cold data: recently written data is accessed more frequently. Based on this feature, hot data is placed on storage media with higher IOPS, which greatly improves overall query efficiency. Table Store provides two types of instances, high-performance instances and cost-effective instances, corresponding to SSD and SATA storage media respectively. This lets users freely allocate tables with different specifications according to the precision of the data and the performance requirements of query and analysis. For example, for high-concurrency and low-latency queries, high-performance instances are allocated; for cold data storage and low-frequency queries, cost-effective instances are allocated. For interactive data analysis that requires high speed, high-performance instances can be allocated. For scenarios of time series data analysis and offline computing, cost-effective instances can be allocated.

For each table, the lifecycle of the data can be freely defined, for example, for a high-precision table, a relatively short lifecycle can be configured. For a low-precision table, a longer lifecycle can be configured.
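The combination of instance type, precision, and lifecycle can be pictured as a small routing policy. The sketch below is purely illustrative: the table names, precisions, and lifecycle values are assumptions chosen for the example, and `table_for` is a hypothetical helper rather than a Table Store API.

```python
from dataclasses import dataclass

DAY = 86400


@dataclass(frozen=True)
class TablePolicy:
    name: str
    instance_type: str  # "high-performance" (SSD) or "cost-effective" (SATA)
    precision_s: int    # spacing between stored points, in seconds
    ttl_s: int          # lifecycle of the data, in seconds


# Assumed layout: a short-lived high-precision table and a long-lived low-precision one.
POLICIES = [
    TablePolicy("metrics_10s", "high-performance", 10, 7 * DAY),
    TablePolicy("metrics_1h", "cost-effective", 3600, 365 * DAY),
]


def table_for(query_start, now, policies=POLICIES):
    """Pick the highest-precision table whose lifecycle still covers query_start."""
    age = now - query_start
    for policy in sorted(policies, key=lambda p: p.precision_s):
        if age <= policy.ttl_s:
            return policy
    return None  # the requested data has expired everywhere
```

A query over the last hour is served from the high-precision table on SSD, while a query reaching back a month falls through to the low-precision table on SATA.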

The bulk of the storage is for cold data. For this less frequently accessed data, we will further reduce the storage cost through erasure coding and highly optimized compression algorithms.

Closed Loop of Data Flow

Stream computing is the core computing scenario in time series data computing, which performs pre-aggregation and post-aggregation on the time series data. The common monitoring system architecture uses a front stream computing solution. Pre-aggregation and downsample of the data are all performed in the front stream computing. That is, the data has been processed before it is stored, and what is stored is only the result. A second downsample is no longer required, and only the query of post-aggregation may be required.

Table Store is deeply integrated with Blink and is now available as a Blink dimension table and result table. The source table has been developed and is ready for release. Table Store can therefore serve as both the source and the sink of Blink, so the entire data stream forms a closed loop, which allows more flexible computing configuration. Raw data undergoes cleansing and pre-aggregation after entering Blink and is then written to the hot data table. This data can automatically flow back into Blink for post-aggregation, and backtracking over historical data for a certain period of time is supported. The results of post-aggregation can be written to cold storage.

In addition to integrating with Blink, Table Store can also integrate with Function Compute for event programming, and can enable real-time abnormal status monitoring in time series scenarios. It can also read incremental data through the Stream APIs for custom analysis.

Big Data Analysis Engine

Table Store deeply integrates with distributed computing engines developed by Alibaba Cloud, such as MaxCompute (formerly ODPS). MaxCompute can directly read the data on the Table Store for analysis, eliminating the ETL process for data.

There are some optimizations in the entire analysis process, for example, optimization of queries through the index, and providing more operators for computing pushdown at the bottom.

Service Capabilities

In a word, Table Store's service capabilities are characterized by zero-cost integration, out-of-the-box, global deployment, multi-language SDK, and fully managed services.

Metadata Storage and Retrieval

Metadata is also a very important part in time series data. It is much smaller than the time series data in terms of volume, but it is much more complicated than the time series data in query complexity.

From the definition we provided above, metadata is mainly divided into Tags and Location. Tags are mainly used for multidimensional retrieval, and Location is mainly used for location retrieval. So for the underlying storage, Tags need an inverted index to provide efficient retrieval, and Location needs a spatial index. The number of time series in a service-level monitoring system or tracing system is on the order of 10 million to 100 million, or higher. Metadata therefore also needs a distributed retrieval system that provides high concurrency and low latency, and a preferred implementation in the industry is to use Elasticsearch for the storage and retrieval of metadata.
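To illustrate what a location index does, here is a minimal in-memory sketch that buckets series by fixed-size latitude/longitude cells and answers bounding-box queries. It is a deliberately simple stand-in for the spatial indexes of real search engines; the `GridIndex` class is hypothetical, and the sketch does not handle boxes that cross the 180° meridian.

```python
import math
from collections import defaultdict


class GridIndex:
    """Spatial index that groups series into fixed-size latitude/longitude cells."""

    def __init__(self, cell_deg=1.0):
        self.cell_deg = cell_deg
        self._cells = defaultdict(set)

    def _cell(self, lat, lon):
        return (math.floor(lat / self.cell_deg), math.floor(lon / self.cell_deg))

    def add(self, series_id, lat, lon):
        self._cells[self._cell(lat, lon)].add((series_id, lat, lon))

    def within(self, min_lat, min_lon, max_lat, max_lon):
        """Return the ids of series located inside the bounding box (inclusive)."""
        row0, col0 = self._cell(min_lat, min_lon)
        row1, col1 = self._cell(max_lat, max_lon)
        hits = set()
        for row in range(row0, row1 + 1):
            for col in range(col0, col1 + 1):
                # Only candidate cells are visited; exact coordinates are checked last.
                for series_id, lat, lon in self._cells.get((row, col), ()):
                    if min_lat <= lat <= max_lat and min_lon <= lon <= max_lon:
                        hits.add(series_id)
        return hits
```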

Summary

Alibaba Cloud Table Store is a general-purpose distributed NoSQL database that supports multiple data models. The data models currently available include Wide Column (Bigtable) and time series (message data model).

In the industry, time series data is a very important application field for similar database products such as HBase and Cassandra. Table Store continues to explore time series data storage. We are steadily improving the closed loop of stream computing data, data analysis optimization, and metadata retrieval, to provide a unified time series data storage platform.

Published at DZone with permission of Leona Zhang. See the original article here.
