TensorFlow + MQTT + Apache Kafka

Let's take a brief look at a use case with connected cars and real-time streaming analytics using deep learning.

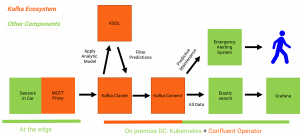

I built a scenario for a hybrid machine learning infrastructure leveraging Apache Kafka as a scalable central nervous system. The public cloud is used for training analytic models at extreme scale (e.g., using TensorFlow and TPUs on Google Cloud Platform (GCP) via Google ML Engine). The predictions (i.e., model inference) are executed on-premise at the edge in a local Kafka infrastructure (e.g., leveraging Kafka Streams or KSQL for streaming analytics).

This post focuses on the on-premise deployment. I created a GitHub project with a KSQL UDF for sensor analytics. It leverages the new API features of KSQL to build UDFs and UDAFs easily in Java for continuous stream processing on incoming events.

Use Case: Connected Cars: Real-Time Streaming Analytics Using Deep Learning

The goal is to continuously process millions of events from connected devices (car sensors in this example).

I built different analytic models for this. They are trained in the public cloud leveraging TensorFlow, H2O, and Google ML Engine. Model creation is not the focus of this example. The final model is already ready for production and can be deployed to make predictions in real time.

Model serving can be done via a model server or natively embedded into the stream processing application. See the trade-offs of RPC vs. Stream Processing for model deployment and a "TensorFlow + gRPC + Kafka Streams" example here.
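The two serving options can be sketched in plain Java. This is only an illustration under assumptions: `Scorer`, `EmbeddedScorer`, and `RemoteScorer` are hypothetical names, the linear model is a placeholder for a real trained model, and the remote call is represented by a function that you would back with an actual RPC client.

```java
import java.util.function.Function;

// Hypothetical abstraction: anything that turns a feature vector into a score.
interface Scorer {
    double score(double[] features);
}

// Embedded serving: the model lives in the same process as the stream processor.
final class EmbeddedScorer implements Scorer {
    private final double[] weights; // placeholder for a real, loaded model

    EmbeddedScorer(double[] weights) {
        this.weights = weights.clone();
    }

    @Override
    public double score(double[] features) {
        if (features.length != weights.length) {
            throw new IllegalArgumentException("feature count mismatch");
        }
        double sum = 0.0;
        for (int i = 0; i < weights.length; i++) {
            sum += weights[i] * features[i];
        }
        return sum;
    }
}

// Model-server serving: every score is a request to another process.
final class RemoteScorer implements Scorer {
    private final Function<double[], Double> rpcCall; // assumed wrapper around an RPC client

    RemoteScorer(Function<double[], Double> rpcCall) {
        this.rpcCall = rpcCall;
    }

    @Override
    public double score(double[] features) {
        return rpcCall.apply(features);
    }
}
```

With a shared interface like this, the stream processing code does not need to know which option is in use. The embedded variant avoids a network hop per event, while the remote variant keeps the model's lifecycle separate from the application.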

Demo: Model Inference at the Edge with MQTT, Kafka and KSQL

The GitHub project generates car sensor data and forwards it via Confluent MQTT Proxy to a Kafka cluster for KSQL processing and real-time analytics.

This project focuses on the ingestion of data into Kafka via MQTT and the processing of that data via KSQL.

A great benefit of Confluent MQTT Proxy is its simplicity for realizing IoT scenarios without the need for an MQTT broker. You can forward messages directly from the MQTT devices to Kafka via the MQTT Proxy. This reduces effort and cost significantly. It is a perfect solution if you "just" want to communicate between Kafka and MQTT devices.
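To make the forwarding idea concrete, here is a small Java sketch of how MQTT topics could be mapped to Kafka topics. As far as I know, Confluent's documentation describes a `topic.regex.list` setting made of `<kafka topic>:<regex>` pairs. The class below only imitates that idea and is not the proxy's implementation. `TopicRegexMapper` is a hypothetical name, and both the full-match semantics and the first-match-wins rule are assumptions.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical helper that imitates a "<kafka topic>:<regex>" mapping list.
final class TopicRegexMapper {
    // LinkedHashMap keeps the rules in the order they were written.
    private final Map<String, Pattern> rules = new LinkedHashMap<>();

    TopicRegexMapper(String topicRegexList) {
        for (String pair : topicRegexList.split(",")) {
            String[] kv = pair.trim().split(":", 2);
            if (kv.length != 2) {
                throw new IllegalArgumentException("expected <kafka topic>:<regex> but got: " + pair);
            }
            rules.put(kv[0].trim(), Pattern.compile(kv[1].trim()));
        }
    }

    // Returns the Kafka topic of the first rule whose regex matches the
    // whole MQTT topic, or null if no rule matches.
    String kafkaTopicFor(String mqttTopic) {
        for (Map.Entry<String, Pattern> rule : rules.entrySet()) {
            if (rule.getValue().matcher(mqttTopic).matches()) {
                return rule.getKey();
            }
        }
        return null;
    }
}
```

For example, a mapper built from `"temperature:.*temperature, brightness:.*brightness"` would route a message published to the MQTT topic `car/1/temperature` to the Kafka topic `temperature`.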

If you want to see the other part of the story (integration with sink applications like Elasticsearch / Grafana), please take a look at the GitHub project "KSQL for streaming IoT data." That project implements the integration with Elasticsearch and Grafana via Kafka Connect and the Elastic connector.

KSQL UDF: Source Code

It is pretty easy to develop UDFs. Just implement the function in one Java method within a UDF class:

@Udf(description = "apply analytic model to sensor input")
public String anomaly(String sensorinput) { /* YOUR LOGIC */ }

Here is the full source code for the Anomaly Detection KSQL UDF.
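For orientation, this is a self-contained sketch of what the surrounding UDF class might look like. It is not the project's actual code. The two annotations are declared locally as stand-ins so that the example compiles on its own, whereas a real UDF would import `io.confluent.ksql.function.udf.UdfDescription` and `io.confluent.ksql.function.udf.Udf`. The class name `AnomalySketch`, the `#`-separated input format, and the mean-of-squares "model" are all assumptions made for illustration.

```java
// Stand-in annotations (assumption) so the sketch compiles without KSQL on the classpath.
@interface UdfDescription { String name(); String description(); }
@interface Udf { String description(); }

@UdfDescription(name = "anomaly", description = "anomaly detection on sensor input")
final class AnomalySketch {

    // Assumed input: numeric sensor readings separated by '#', e.g. "1#2#3".
    // The score is a placeholder (mean of squared values), not a trained model.
    @Udf(description = "apply analytic model to sensor input")
    public String anomaly(String sensorinput) {
        if (sensorinput == null || sensorinput.isEmpty()) {
            return null; // nothing to score
        }
        String[] readings = sensorinput.split("#");
        double sumOfSquares = 0.0;
        for (String reading : readings) {
            double value = Double.parseDouble(reading.trim());
            sumOfSquares += value * value;
        }
        return String.valueOf(sumOfSquares / readings.length);
    }
}
```

In a real deployment, the placeholder arithmetic would be replaced by a call into the embedded model, and the packaged class would be made available to the KSQL server as described in the project.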

How to Run the Demo With Apache Kafka and MQTT Proxy?

All steps to execute the demo are described in the GitHub project.

You just need to install Confluent Platform and then follow these steps to deploy the UDF, create MQTT events and process them via KSQL leveraging the analytic model.

I use Mosquitto to generate MQTT messages. Of course, you can use any other MQTT client, too. That is the great benefit of an open and standardized protocol.
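If you would rather generate test payloads from Java and publish them with an MQTT client of your choice, a generator could look roughly like this. It is only a sketch: `CarSensorPayloads` is a hypothetical name, the `#`-separated layout and the Gaussian values are assumptions rather than the demo's actual data format, and the publishing step is deliberately left out.

```java
import java.util.Locale;
import java.util.Random;

// Hypothetical generator for synthetic sensor payloads.
final class CarSensorPayloads {

    private CarSensorPayloads() { }

    // Builds one payload with `fields` random readings separated by '#'.
    static String next(Random random, int fields) {
        StringBuilder payload = new StringBuilder();
        for (int i = 0; i < fields; i++) {
            if (i > 0) {
                payload.append('#');
            }
            // Locale.ROOT guarantees a '.' decimal separator on every machine.
            payload.append(String.format(Locale.ROOT, "%.3f", random.nextGaussian()));
        }
        return payload.toString();
    }
}
```

Each string returned by `CarSensorPayloads.next(new Random(), 10)` can then be handed to whichever MQTT client you use as the message body.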

Hybrid Cloud Architecture for Apache Kafka and Machine Learning

If you want to learn more about the concepts behind a scalable, vendor-agnostic Machine Learning infrastructure, take a look at my presentation on Slideshare or watch the recording of the corresponding Confluent webinar "Unleashing Apache Kafka and TensorFlow in the Cloud."

Please share any feedback! Do you like it, or not? Any other thoughts?

Published at DZone with permission of Kai Wähner, DZone MVB. See the original article here.
